-

摘要:

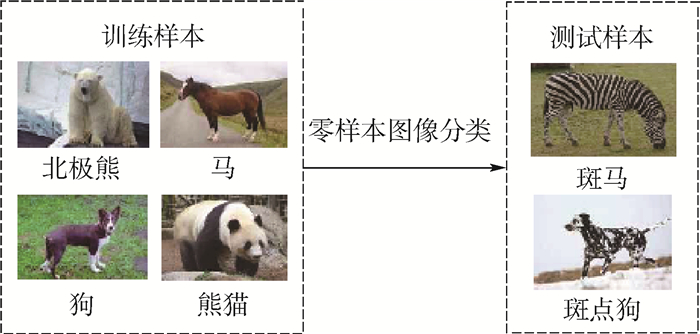

在图像分类任务中,零样本图像分类问题已成为一个研究热点。为了解决零样本图像分类问题,采用一种基于生成对抗网络(GAN)的方法,通过生成未知类的图像特征使得零样本分类任务转换为传统的图像分类任务。同时对生成对抗网络中的判别网络做出改进,使其判别过程更加准确,从而进一步提高生成图像特征的质量。实验结果表明:所提方法在AWA、CUB和SUN数据集上的分类准确率分别提高了0.4%、0.4%和0.5%。因此,所提方法通过改进生成对抗网络,能够生成质量更好的图像特征,从而有效解决零样本图像分类问题。

-

关键词:

- 深度学习 /

- 图像分类 /

- 零样本学习 /

- 生成对抗网络(GAN) /

- 图像特征生成

Abstract:The problem of zero-shot image classification has become a research focus in the field of image classification. In this paper, a method based on generative adversarial network (GAN) is used to solve the problem of zero-shot image classification. By generating image features of unseen classes, the zero-shot classification task is transformed into a conventional image classification task. At the same time, this paper makes modifications to the discriminant network in the generative adversarial network to make the discriminating process more accurate. The experimental results show that the performance of the proposed method has been increased by 0.4%, 0.4% and 0.5% on the datasets of AWA, CUB and SUN, respectively. Therefore, the proposed method can generate the better features by improving the generative adversarial networks, which results in solving the problem of zero-shot image calssification effectively.
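The idea of converting zero-shot classification into conventional classification can be illustrated with a minimal sketch. It is not the paper's implementation: `toy_generator` is a hypothetical stand-in for a trained conditional generator (a real one would be a neural network trained adversarially), the class names and attribute vectors are made up for illustration, and a nearest-centroid rule stands in for whatever classifier is trained on the generated features.

```python
import random


def toy_generator(attribute, noise):
    """Hypothetical stand-in for a trained conditional generator G(z, a).

    It simply perturbs the class attribute vector with scaled noise;
    a real generator would be learned adversarially.
    """
    return [a + 0.1 * z for a, z in zip(attribute, noise)]


def synthesize_features(class_attributes, per_class, rng):
    """Generate labelled features for each unseen class from its attributes."""
    features, labels = [], []
    for label, attribute in class_attributes.items():
        for _ in range(per_class):
            noise = [rng.gauss(0.0, 1.0) for _ in attribute]
            features.append(toy_generator(attribute, noise))
            labels.append(label)
    return features, labels


def fit_centroids(features, labels):
    """Conventional supervised step: compute one mean feature per class."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest in squared distance."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: sq_dist(centroids[y]))


rng = random.Random(0)
# Made-up attribute vectors for two unseen classes (illustrative only).
unseen_attributes = {"zebra": [1.0, 0.0, 1.0], "whale": [0.0, 1.0, 0.0]}
features, labels = synthesize_features(unseen_attributes, per_class=50, rng=rng)
centroids = fit_centroids(features, labels)
prediction = predict(centroids, [0.9, 0.1, 0.8])
```

Once features exist for the unseen classes, any standard classifier can be trained on them. The nearest-centroid rule is chosen here only to keep the sketch dependency-free.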

Table 1. Division of three datasets

| Dataset | Number of classes | Seen classes | Unseen classes |
| --- | --- | --- | --- |
| AWA | 50 | 40 | 10 |
| CUB | 200 | 150 | 50 |
| SUN | 717 | 645 | 72 |

Table 2. Comparison of classification accuracy of different methods on three datasets

