
摘要:

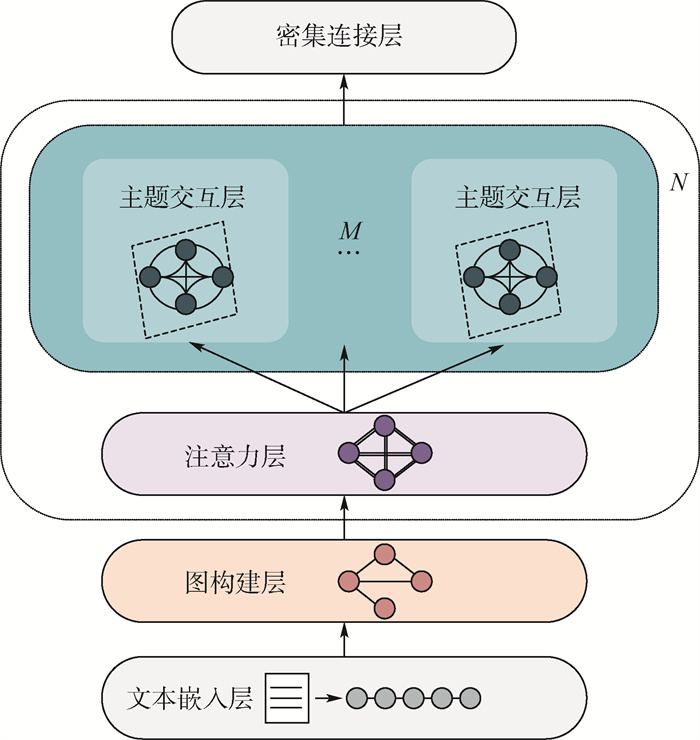

关键词抽取对文本处理影响较大,其识别的准确度及流畅程度是任务的关键。为有效缓解短文本关键词提取过程中词划分不准确、关键词与文本主题不匹配、多语言混合等难题,提出了一种基于图到序列学习模型的自适应短文本关键词生成模型ADGCN。模型采用图神经网络与注意力机制相结合的方式作为对文本信息特征提取的编码框架,针对词的位置特征和语境特征编码,解决了短文本结构不规律和词之间存在关联复杂信息的问题。同时采用了一种线性解码方案,生成了可解释的关键词。在解决问题的过程中,从某社交平台收集并公布了一个标签数据集,其包括社交平台发文文本和话题标签。实验中,从用户需求角度出发对模型结果的相关性、信息量、连贯性进行评估和分析,所提模型不仅可以生成符合短文本主题的关键词,还可以有效缓解数据扰动对模型的影响。所提模型在公开数据集KP20k上仍表现良好,具有较好的可移植性

Abstract:Keyword extraction has a great impact on text processing, and the accuracy and fluency of keyword recognition are the keys to the task. In order to effectively solve the problems such as inaccurate word division, mismatch between keywords and text topics, and multi-language mixing in the process of keyword extraction from short text, we propose an adaptive short text keyword generation model based on graph convolutional neural network (ADGCN). First, the model uses graph neural network as the coding framework of text information feature extraction to solve the problem of irregular short text structure and the existence of complex information between words. Then, according to the location features and context features of words, the self attention mechanism is combined to capture rich context dependent information. Finally, a linear decoding scheme is used to generate interpretable keywords. We collect and publish a tag dataset TH from social media platform, including text and topic tags. We evaluate and analyze the relevance, information and coherence of the model results from the perspective of user needs. The model can not only generate keywords that meet the topic of short text, but also effectively alleviate the impact of data disturbance on the model. It is proved that the model performs well on the public dataset KP20k and has good portability.
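The encoder described in the abstract can be illustrated with a minimal sketch. The abstract does not say how the word graph is built or which propagation rule is used, so the code below assumes a sliding-window co-occurrence graph over pre-segmented tokens, the normalized graph-convolution rule of Kipf and Welling, ReLU(D^-1/2 (A + I) D^-1/2 H W), and then plain scaled dot-product self-attention over the node features. The function names (`build_cooccurrence_graph`, `gcn_layer`, `self_attention`), the window size, the toy node features and the fixed weights are all hypothetical illustrations, not the ADGCN implementation.

```python
import math
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def build_cooccurrence_graph(tokens: List[str], window: int = 2) -> Tuple[List[str], Matrix]:
    """Hypothetical graph construction: link distinct words that co-occur within `window` positions."""
    vocab = sorted(set(tokens))
    index: Dict[str, int] = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    adj = [[0.0] * n for _ in range(n)]
    for i, word in enumerate(tokens):
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            a, b = index[word], index[tokens[j]]
            if a != b:  # self-loops are added later by the GCN layer
                adj[a][b] = adj[b][a] = 1.0
    return vocab, adj


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python product of an (m x k) and a (k x n) matrix."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    return [[max(0.0, v) for v in row] for row in matmul(matmul(norm, h), w)]


def self_attention(h: Matrix) -> Matrix:
    """Scaled dot-product self-attention with Q = K = V = h (learned projections omitted)."""
    d = len(h[0])
    out = []
    for q in h:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in h]
        top = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        attn = [e / total for e in exps]
        out.append([sum(wt * v[c] for wt, v in zip(attn, h)) for c in range(d)])
    return out


# Usage on a toy short text (tokens assumed to be already segmented).
tokens = "graph neural network for short text keyword generation with graph attention".split()
vocab, adj = build_cooccurrence_graph(tokens, window=2)
# Toy positional/contextual node features: [relative first position, relative frequency].
features = [[tokens.index(w) / len(tokens), tokens.count(w) / len(tokens)] for w in vocab]
w_toy = [[1.0, 0.5], [0.5, 1.0]]  # fixed toy values standing in for learned parameters
encoded = self_attention(gcn_layer(adj, features, w_toy))
print(len(vocab), len(encoded), len(encoded[0]))  # → 10 10 2
```

Symmetric normalization keeps high-degree words from dominating their neighbours' representations; in a trained model the weight matrix and the attention projections would be learned rather than fixed.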


Keywords:

- keyword extraction

- keyword generation

- graph neural network

- attention mechanism

- topic model


Table 1. Number of TH samples and tags in different topics

| Topic | Texts | Tags |
| --- | --- | --- |
| Life | 14,044 | 10 |
| Education | 11,181 | 7 |
| Health | 4,868 | 5 |

Table 2. KP20k dataset description

| KP20k split | Samples |
| --- | --- |
| Training set | 530,809 |
| Validation set | 20,000 |
| Test set | 20,000 |

Table 3. Comparison of the three evaluation measures for the baseline models and the proposed model on the life topic

| Model | Relevance | Informativeness | Coherence | Result (mean) |
| --- | --- | --- | --- | --- |
| Tf-idf | 6.75 | 5.70 | 8.03 | 6.83 |
| TextRank | 6.31 | 4.75 | 8.22 | 6.43 |
| Maui | 5.27 | 4.09 | 7.82 | 5.73 |
| RNN | 5.38 | 3.46 | 7.93 | 5.59 |
| CopyRNN | 6.52 | 5.21 | 8.04 | 6.59 |
| CovRNN | 6.56 | 5.24 | 8.09 | 6.63 |
| ADGCN | 8.13 | 6.21 | 7.53 | 7.29 |

Table 4. Comparison of the three evaluation measures for the baseline models and the proposed model on the education topic

| Model | Relevance | Informativeness | Coherence | Result (mean) |
| --- | --- | --- | --- | --- |
| Tf-idf | 5.01 | 4.01 | 7.17 | 5.40 |
| TextRank | 4.93 | 4.47 | 7.34 | 5.58 |
| Maui | 4.31 | 4.39 | 5.35 | 4.68 |
| RNN | 4.05 | 4.60 | 5.67 | 4.77 |
| CopyRNN | 5.31 | 4.95 | 6.26 | 5.51 |
| CovRNN | 5.27 | 5.02 | 6.24 | 5.51 |
| ADGCN | 7.91 | 6.33 | 6.14 | 6.79 |

Table 5. Comparison of the three evaluation measures for the baseline models and the proposed model on the health topic

| Model | Relevance | Informativeness | Coherence | Result (mean) |
| --- | --- | --- | --- | --- |
| Tf-idf | 4.65 | 4.51 | 6.95 | 5.37 |
| TextRank | 4.74 | 4.53 | 7.04 | 5.44 |
| Maui | 3.43 | 4.57 | 4.94 | 4.31 |
| RNN | 2.51 | 5.08 | 5.26 | 4.28 |
| CopyRNN | 4.87 | 5.39 | 5.31 | 5.19 |
| CovRNN | 4.85 | 5.47 | 5.43 | 5.25 |
| ADGCN | 6.97 | 6.36 | 5.09 | 6.14 |
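In Tables 3 to 5 the overall result for each model agrees with the unweighted arithmetic mean of its relevance, informativeness and coherence scores, up to two-decimal rounding. The short check below recomputes that mean from the values copied out of the three tables. The dictionary layout, the helper names and the 0.005 tolerance (half a unit in the second decimal place) are choices made for this sketch; it is an arithmetic check on the published numbers, not the authors' evaluation code.

```python
from typing import Dict, List, Tuple

# (relevance, informativeness, coherence, reported result) copied from Tables 3-5.
SCORES: Dict[str, Dict[str, Tuple[float, float, float, float]]] = {
    "life": {
        "Tf-idf": (6.75, 5.70, 8.03, 6.83), "TextRank": (6.31, 4.75, 8.22, 6.43),
        "Maui": (5.27, 4.09, 7.82, 5.73), "RNN": (5.38, 3.46, 7.93, 5.59),
        "CopyRNN": (6.52, 5.21, 8.04, 6.59), "CovRNN": (6.56, 5.24, 8.09, 6.63),
        "ADGCN": (8.13, 6.21, 7.53, 7.29),
    },
    "education": {
        "Tf-idf": (5.01, 4.01, 7.17, 5.40), "TextRank": (4.93, 4.47, 7.34, 5.58),
        "Maui": (4.31, 4.39, 5.35, 4.68), "RNN": (4.05, 4.60, 5.67, 4.77),
        "CopyRNN": (5.31, 4.95, 6.26, 5.51), "CovRNN": (5.27, 5.02, 6.24, 5.51),
        "ADGCN": (7.91, 6.33, 6.14, 6.79),
    },
    "health": {
        "Tf-idf": (4.65, 4.51, 6.95, 5.37), "TextRank": (4.74, 4.53, 7.04, 5.44),
        "Maui": (3.43, 4.57, 4.94, 4.31), "RNN": (2.51, 5.08, 5.26, 4.28),
        "CopyRNN": (4.87, 5.39, 5.31, 5.19), "CovRNN": (4.85, 5.47, 5.43, 5.25),
        "ADGCN": (6.97, 6.36, 5.09, 6.14),
    },
}


def mean_score(relevance: float, informativeness: float, coherence: float) -> float:
    """Unweighted arithmetic mean of the three human-evaluation measures."""
    return (relevance + informativeness + coherence) / 3


def check_results(scores, tol: float = 0.005) -> List[Tuple[str, str, float, float]]:
    """Return (topic, model, computed, reported) for rows whose mean deviates beyond `tol`."""
    mismatches = []
    for topic, models in scores.items():
        for model, (rel, inf, coh, reported) in models.items():
            computed = mean_score(rel, inf, coh)
            if abs(computed - reported) > tol:
                mismatches.append((topic, model, round(computed, 2), reported))
    return mismatches


print(check_results(SCORES))  # → []
```

Because the three measures are weighted equally, a model that trails on coherence (as ADGCN does on all three topics) can still lead overall when its relevance and informativeness margins are large enough.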

Table 6. Comparison of precision (P), recall (R) and F1 for the baseline models and the proposed model on the KP20k dataset

| Model | P | R | F1 |
| --- | --- | --- | --- |
| Tf-idf | 0.413 | 0.052 | 0.093 |
| TextRank | 0.309 | 0.054 | 0.092 |
| Maui | 0.564 | 0.125 | 0.205 |
| RNN | 0.581 | 0.126 | 0.208 |
| CopyRNN | 0.652 | 0.213 | 0.321 |
| CovRNN | 0.683 | 0.220 | 0.333 |
| ADGCN | 0.735 | 0.327 | 0.453 |
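F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes it from the P and R columns of Table 6 and prints the gap to the reported value. The reported F1 values agree with this formula to within 0.001; small gaps of that size are plausibly due to P and R themselves being rounded to three decimals, but the table does not say how the averages were taken, so treat that explanation as an assumption. The names `TABLE6` and `f1_score` are introduced only for this illustration.

```python
from typing import Dict, Tuple

# (precision, recall, reported F1) copied from Table 6.
TABLE6: Dict[str, Tuple[float, float, float]] = {
    "Tf-idf": (0.413, 0.052, 0.093),
    "TextRank": (0.309, 0.054, 0.092),
    "Maui": (0.564, 0.125, 0.205),
    "RNN": (0.581, 0.126, 0.208),
    "CopyRNN": (0.652, 0.213, 0.321),
    "CovRNN": (0.683, 0.220, 0.333),
    "ADGCN": (0.735, 0.327, 0.453),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for model, (p, r, reported) in TABLE6.items():
    computed = f1_score(p, r)
    print(f"{model:8s} computed={computed:.3f} reported={reported:.3f} gap={computed - reported:+.4f}")
```

The harmonic mean is pulled toward the smaller of P and R, which is why the baselines with high precision but very low recall (Tf-idf, TextRank) end up with F1 below 0.1.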

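Table 7. Ablation study of the ADGCN model

| Model | P | R | F1 |
| --- | --- | --- | --- |
| ADGCN (full model) | 0.735 | 0.327 | 0.453 |
| w/o graph construction layer | 0.603 | 0.329 | 0.426 |
| w/o attention layer | 0.667 | 0.299 | 0.413 |
| w/o topic interaction layer | 0.661 | 0.293 | 0.406 |
| w/o attention and topic interaction layers | 0.565 | 0.301 | 0.393 |
| w/o dense connection layer | 0.682 | 0.326 | 0.441 |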
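One way to read the ablation is to compute, for each variant, the change in P, R and F1 relative to the full model and rank the variants by how much F1 they lose. The sketch below does exactly that with the numbers from Table 7; the helper names `deltas` and `rank_by_f1_drop` are hypothetical, and the ranking is simple arithmetic on the table rather than an analysis reported by the authors.

```python
from typing import Dict, List, Tuple

Scores = Tuple[float, float, float]  # (precision, recall, F1)

# Values copied from Table 7.
FULL: Scores = (0.735, 0.327, 0.453)
ABLATIONS: Dict[str, Scores] = {
    "w/o graph construction layer": (0.603, 0.329, 0.426),
    "w/o attention layer": (0.667, 0.299, 0.413),
    "w/o topic interaction layer": (0.661, 0.293, 0.406),
    "w/o attention and topic interaction layers": (0.565, 0.301, 0.393),
    "w/o dense connection layer": (0.682, 0.326, 0.441),
}


def deltas(full: Scores, variant: Scores) -> Scores:
    """Change in (P, R, F1) when a component is removed; negative values are drops."""
    return tuple(v - f for f, v in zip(full, variant))


def rank_by_f1_drop(full: Scores, ablations: Dict[str, Scores]) -> List[Tuple[str, Scores]]:
    """Ablated variants sorted from the largest to the smallest F1 drop."""
    rows = [(name, deltas(full, scores)) for name, scores in ablations.items()]
    return sorted(rows, key=lambda row: row[1][2])


for name, (dp, dr, df1) in rank_by_f1_drop(FULL, ABLATIONS):
    print(f"{name:45s} dP={dp:+.3f} dR={dr:+.3f} dF1={df1:+.3f}")
```

On these numbers, removing the attention and topic interaction layers together costs the most F1 (0.060) and removing the dense connection layer costs the least (0.012). Removing the graph construction layer lowers precision sharply (0.735 to 0.603) while recall stays essentially unchanged (0.327 to 0.329).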

