-

摘要:

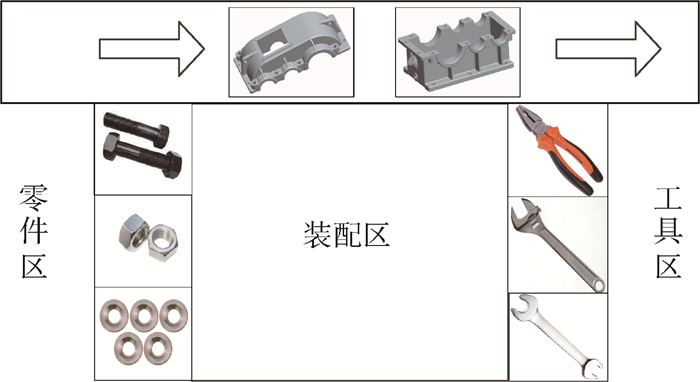

为实现安全高效的人机协作(HRC),需要机器人及时对人的动作做出预测,从而积极主动地辅助人工作。为解决在HRC装配场景中机器人对人的动作终点预测问题,提出了一种基于长短时记忆(LSTM)网络的动作终点预测方法。在训练阶段,用人的动作序列与对应的动作终点组成的样本训练LSTM网络,构建动作序列与动作终点之间的映射。在应用阶段,根据人的动作的初始部分对动作终点提前做出预测。通过在装配场景中,对人抓取工具或零件的动作终点进行预测,验证了所提方法的有效性。在观测到50%的动作片段时,预测准确率达到80%以上。

-

关键词:

- 人机协作(HRC) /

- 终点预测 /

- 伸及动作 /

- 长短时记忆(LSTM) /

- 意图识别

Abstract:To realize a safe and effective human-robot collaboration (HRC), it is necessary for the robot to predict human motions in a timely manner, so as to assist human more actively in the cooperative work. In order to solve the problem of human motion prediction in HRC assembly scenario, a motion end point prediction method based on long short-term memory (LSTM) network is proposed. In the training phase, the LSTM network is trained with samples of human motion sequences and corresponding motion end points, and the mapping between motion sequences and motion end points is constructed. In the application phase, the motion end point is predicted in advance based on the initial part of the human motion sequence. The effectiveness of the proposed method is verified by predicting the end points of motion of a human grasping tool or part in an assembly scenario. When 50% of the motion fragments are observed, the accuracy rate of prediction is above 80%.
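The application phase described above predicts from only the initial part of a motion. A minimal sketch of that truncation step follows. The helper names `observed_prefix` and `make_sample`, the integer label encoding, and the 30-value frame layout are hypothetical and for illustration only; the abstract does not specify how sequences are cut or encoded.

```python
from typing import List, Sequence, Tuple

Frame = Sequence[float]  # one time step of motion features (layout is an assumption)


def observed_prefix(sequence: Sequence[Frame], ratio: float) -> List[Frame]:
    """Return the initial part of a motion sequence, e.g. ratio=0.5 for 50%."""
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    # Keep at least one frame so a predictor always receives some input.
    n_keep = max(1, int(len(sequence) * ratio))
    return list(sequence[:n_keep])


def make_sample(sequence: Sequence[Frame], end_point: int,
                ratio: float) -> Tuple[List[Frame], int]:
    """Pair a partially observed motion with the index of its end point."""
    return observed_prefix(sequence, ratio), end_point


# Hypothetical motion: 20 frames with 30 features each, ending at end point 4.
frames = [[float(t)] * 30 for t in range(20)]
partial, label = make_sample(frames, end_point=4, ratio=0.5)
print(len(partial), label)
```

A trained sequence model would receive `partial` as input and be scored on whether it outputs `label`.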

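Table 1. Parameters of the LSTM network

| Parameter | Value |
| --- | --- |
| Number of input layer nodes | 30 |
| Number of output layer nodes | 9 |
| Number of hidden layers | 2 |
| Hidden layer unit dimension | 32 |
| Initial learning rate | 0.0001 |
| Regularization coefficient | 0.0015 |
| Training batch size | 100 |
| Number of iterations | 5000 |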
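To give a sense of the model size implied by the parameters in Table 1, the sketch below counts trainable weights. It assumes a standard LSTM cell (four gates, one bias vector per gate, no peephole connections) followed by a single dense output layer. The source does not state these architectural details, and some frameworks use two bias vectors per gate, which would change the total.

```python
def lstm_layer_params(input_dim: int, hidden_dim: int) -> int:
    """Weights in one standard LSTM layer (assumed: 4 gates, one bias each)."""
    per_gate = hidden_dim * (input_dim + hidden_dim) + hidden_dim
    return 4 * per_gate


def network_params(input_dim: int, hidden_dim: int,
                   num_layers: int, output_dim: int) -> int:
    """Stacked LSTM layers plus an assumed dense output layer."""
    total = 0
    layer_input = input_dim
    for _ in range(num_layers):
        total += lstm_layer_params(layer_input, hidden_dim)
        layer_input = hidden_dim  # later layers read the previous hidden state
    total += hidden_dim * output_dim + output_dim  # output weights and biases
    return total


# Values taken from Table 1.
total = network_params(input_dim=30, hidden_dim=32, num_layers=2, output_dim=9)
print(total)  # 8064 + 8320 + 297 = 16681
```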

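Table 2. Prediction accuracy (%) of different models

| Observed proportion of motion/% | Random | GMM | RNN | LSTM-1 | LSTM-2 |
| --- | --- | --- | --- | --- | --- |
| 30 | 11.1 | 56.5 | 54.2 | 24.4 | 67.7 |
| 40 | 11.1 | 67.7 | 61.1 | 27.8 | 76.4 |
| 50 | 11.1 | 73.6 | 66.3 | 32.8 | 80.3 |
| 60 | 11.1 | 78.8 | 74.2 | 41.1 | 87.5 |
| 70 | 11.1 | 83.0 | 83.1 | 54.1 | 89.2 |
| 80 | 11.1 | 87.7 | 89.3 | 65.4 | 93.9 |
| 90 | 11.1 | 92.7 | 95.4 | 89.1 | 97.2 |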
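The figures in Table 2 can be checked with a few lines of arithmetic. The sketch below stores the table as plain lists and finds, for each model, the smallest observed proportion at which accuracy reaches 80%. The reading of the Random column as a uniform guess among 9 candidate end points is an inference from the 9 output nodes in Table 1, not something stated in the source; the helper name `earliest_ratio` is hypothetical.

```python
from typing import Optional

OBSERVED = [30, 40, 50, 60, 70, 80, 90]  # observed proportion of motion, %
ACCURACY = {  # accuracy in %, copied from Table 2
    "Random": [11.1, 11.1, 11.1, 11.1, 11.1, 11.1, 11.1],
    "GMM":    [56.5, 67.7, 73.6, 78.8, 83.0, 87.7, 92.7],
    "RNN":    [54.2, 61.1, 66.3, 74.2, 83.1, 89.3, 95.4],
    "LSTM-1": [24.4, 27.8, 32.8, 41.1, 54.1, 65.4, 89.1],
    "LSTM-2": [67.7, 76.4, 80.3, 87.5, 89.2, 93.9, 97.2],
}


def earliest_ratio(model: str, threshold: float) -> Optional[int]:
    """Smallest observed proportion at which the model reaches the threshold."""
    for ratio, acc in zip(OBSERVED, ACCURACY[model]):
        if acc >= threshold:
            return ratio
    return None


# Assumed interpretation: uniform guessing among 9 end points.
chance = round(100 / 9, 1)
print("chance level:", chance)

for name in ACCURACY:
    print(name, earliest_ratio(name, 80.0))

# Model with the highest accuracy at each observed proportion.
best = [max(ACCURACY, key=lambda m: ACCURACY[m][i]) for i in range(len(OBSERVED))]
print(best)
```

Under these numbers, LSTM-2 is the only model that reaches 80% with half of the motion observed, which matches the claim in the abstract.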

