-

摘要:

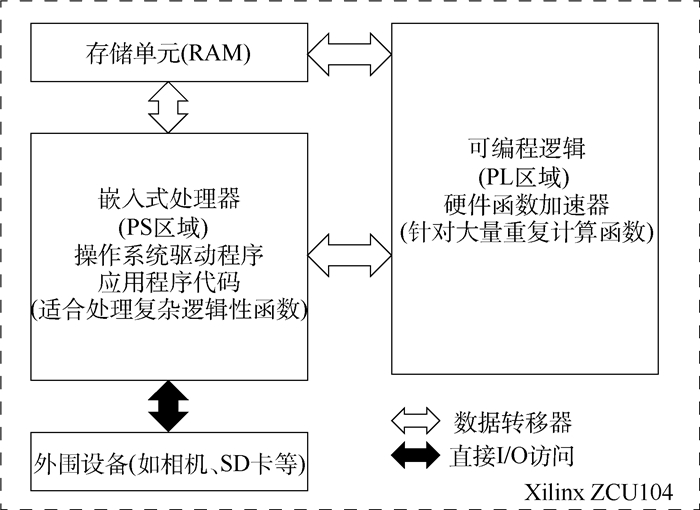

现有无人机(UAV)影像三维重建方法在功耗、时效等方面无法满足移动终端对低功耗、高时效的需求。为此,在有限资源FPGA平台下,结合指令优化策略和软硬件协同优化方法,提出一种基于FPGA高吞吐量硬件优化架构的无人机航拍影像快速低功耗高精度三维重建方法。首先,构建多尺度深度图融合算法架构,增强传统FPGA相位相关算法对不可信区域的鲁棒性,如低纹理、河流等区域。其次,结合高并行指令优化策略,提出高性能软硬件协同优化方案,实现多尺度深度图融合算法架构在有限资源FPGA平台的高效运行。最后,将现有CPU方法、GPU方法与FPGA方法进行综合实验比较,实验结果表明:FPGA方法在重建时间消耗上与GPU方法接近,比CPU方法快近20倍,但功耗仅为GPU方法的2.23%。

Abstract:The existing 3D reconstruction methods based on Unmanned Aerial Vehicle (UAV) images cannot meet the mobile terminal's demand for low power consumption and high time efficiency. To tackle this issue, we propose a fast, low-power and high-precision 3D reconstruction method based on resource-constrained FPGA platforms, which combines instruction optimization strategy and hardware-software co-design method. First, we construct a multi-scale depth map fusion algorithm architecture to enhance the robustness of traditional FPGA phase correlation algorithms to untrustworthy areas, such as low-texture area and rivers. Secondly, based on the high parallel instruction optimization hardware acceleration function strategy, a high-performance hardware-software co-design scheme is proposed to realize the efficient operation of the multi-scale deep map fusion algorithm architecture on the FPGA platform with limited resources. Finally, we comprehensively compare the state-of-the-art CPU and GPU methods with our method. The experimental results show that our method is close to the GPU method in reconstruction time consumption, nearly 20 times faster than the CPU method, but the power consumption is only 2.23% of the GPU method.
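For readers unfamiliar with phase correlation, the following is a minimal software sketch of the standard technique, not the paper's FPGA design. It assumes square power-of-two windows and a pure circular shift between the two windows, and it recovers only the integer shift. The function names (`fft`, `fft2`, `phase_correlate`) and the `eps` guard are illustrative choices. The 2D transform is split into a row pass and a column pass, mirroring the row FFT and column FFT stage names used in Table 1.

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unscaled); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def fft2(img, inverse=False):
    """2D transform as a row pass followed by a column pass."""
    rows = [fft(r, inverse) for r in img]
    cols = [fft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]  # transpose back to row-major


def phase_correlate(a, b, eps=1e-12):
    """Return integer (dy, dx) such that b is a circularly shifted by (dy, dx)."""
    fa, fb = fft2(a), fft2(b)
    # Normalized cross-power spectrum: keep only the phase difference.
    cps = []
    for row_a, row_b in zip(fa, fb):
        row = []
        for u, v in zip(row_a, row_b):
            c = v * u.conjugate()
            row.append(c / (abs(c) + eps))
        cps.append(row)
    corr = fft2(cps, inverse=True)  # scaling is irrelevant for the peak search
    h, w = len(a), len(a[0])
    _, dy, dx = max((corr[y][x].real, y, x) for y in range(h) for x in range(w))
    # Peaks past the half-window point correspond to negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return dy, dx


if __name__ == "__main__":
    # Synthetic 8x8 window and a copy shifted by 2 rows down, 3 columns left.
    a = [[(7 * y + 2 * x + x * y) % 11 for x in range(8)] for y in range(8)]
    b = [[a[(y - 2) % 8][(x + 3) % 8] for x in range(8)] for y in range(8)]
    print(phase_correlate(a, b))  # prints (2, -3)
```

Sub-pixel refinement around the correlation peak, windowing, and the multi-scale fusion described in the abstract are deliberately left out of this sketch.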

Key words:

- low-power

- FPGA

- 3D reconstruction

- phase correlation

- hardware-software co-design

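Table 1. Comparison of different hardware-software co-design solutions

| Hardware resource | Scheme 1: row FFT | Scheme 1: column FFT + CPS + column IFFT | Scheme 1: row IFFT | Scheme 1: total/% | Scheme 2: sub-image block extraction | Scheme 2: row FFT | Scheme 2: column FFT + CPS + column IFFT | Scheme 2: row IFFT | Scheme 2: disparity estimation | Scheme 2: total/% |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| BRAM_18K (count) | 12 | 18 | 6 | 34 | 0 | 12 | 18 | 6 | 0 | 49 |
| FF (count) | 14849 | 23559 | 7439 | 10 | 1766 | 14718 | 23559 | 7240 | 2431 | 11 |
| LUT (count) | 16811 | 27088 | 8366 | 23 | 2932 | 16452 | 27088 | 8125 | 4745 | 27 |

Time/s: 8.1 for Scheme 1, 6.4 for Scheme 2.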

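Table 2. Comparison of different instruction optimization strategies

| Hardware resource | Scheme 1: 128×128 window | Scheme 1: total/% | Scheme 2: 128×128 window | Scheme 2: 64×64 window | Scheme 2: 32×32 window | Scheme 2: total/% | Scheme 3: 128×128 window | Scheme 3: 64×64 window | Scheme 3: 32×32 window | Scheme 3: total/% |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| BRAM/Mb | 207.5 | 66.51 | 20 | 20 | 11 | 70.19 | 101 | 38 | 11 | 67.31 |
| FF (count) | 66047 | 14.33 | 38858 | 34804 | 31608 | 39.03 | 25955 | 22477 | 19319 | 20.41 |
| LUT (count) | 47847 | 20.77 | 27963 | 24889 | 23630 | 57.73 | 21104 | 18371 | 17098 | 31.38 |
| Time/s | 77.95 | | 19.05 | 12.85 | 14.67 | 47.77 | 6.75 | 7.02 | 8.25 | 23.1 |

Values in the Time/s row are in seconds, including those in the total columns.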

Table 3. Quantitative evaluation of 3D reconstruction results based on CPU, GPU and FPGA methods

| Method | Mean error | Root mean square error |
| --- | --- | --- |
| CPU | 1.5110 | 0.0149 |
| GPU | 1.5020 | 0.0123 |
| FPGA | 1.5195 | 0.0131 |

