Three-dimensional human pose estimation based on multi-source image weakly-supervised learning


摘要:

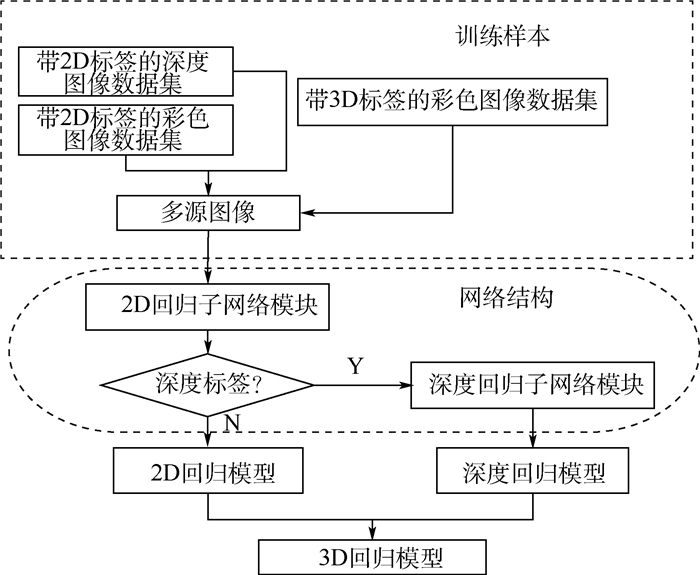

3D人体姿态估计是计算机视觉领域一大研究热点,针对深度图像缺乏深度标签,以及因姿态单一造成的模型泛化能力不高的问题,创新性地提出了基于多源图像弱监督学习的3D人体姿态估计方法。首先,利用多源图像融合训练的方法,提高模型的泛化能力;然后,提出弱监督学习方法解决标签不足的问题;最后,为了提高姿态估计的效果,改进了残差模块的设计。实验结果表明:改善的网络结构在训练时间下降约28%的情况下,准确率提高0.2%,并且所提方法不管是在深度图像还是彩色图像上,均达到了较好的估计结果。

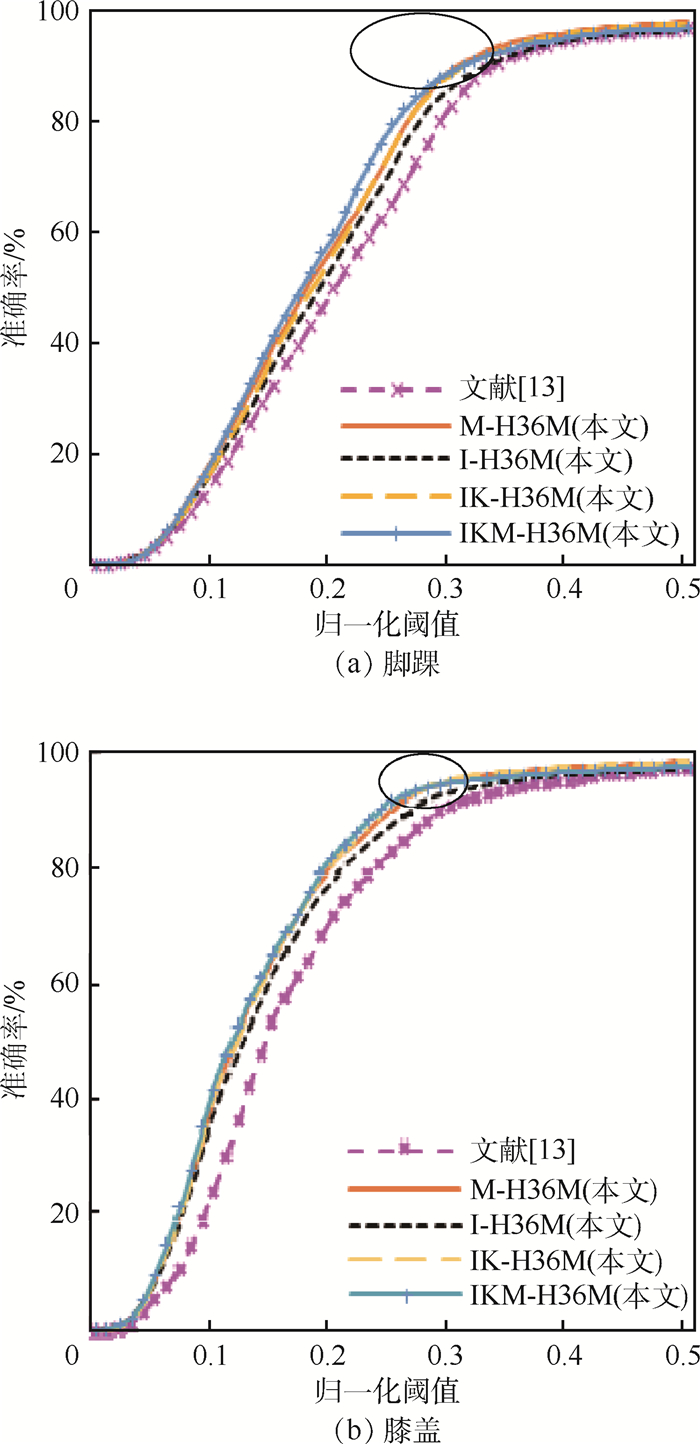

Abstract: Three-dimensional (3D) human pose estimation is an active research topic in computer vision. To address the lack of depth labels for depth images and the poor generalization of models caused by limited pose variety, this paper proposes a 3D human pose estimation method based on multi-source image weakly-supervised learning. First, training on fused multi-source images improves the generalization ability of the model. Second, a weakly-supervised learning approach addresses the shortage of labels. Third, the design of the residual module is improved to obtain better pose estimation results. Experimental results show that, compared with the original network, the improved network raises accuracy by 0.2% while reducing training time by about 28%, and that the proposed method achieves good estimation results on both depth images and color images.

Key words: human pose estimation; hourglass networks; weakly-supervised; multi-source image; depth image


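In an aero-engine, a high-speed flexible rotor is a rotor system whose operating speed lies above the critical speed of a bending mode. Such rotor systems usually adopt a multi-support scheme, but manufacturing and assembly limitations often leave the supports misaligned. Compared with ground equipment, the rotor of an aviation gas turbine engine runs at high speed and transmits axial force in addition to large torque during operation. Consequently, under support-misalignment excitation, alternating stresses arise inside the shaft of a multi-support high-speed flexible rotor system, which readily leads to fatigue failure of the rotor.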

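Therefore, to reduce the influence of support misalignment, a multi-support high-speed flexible rotor can adopt a loose-support design, in which a suitable clearance is built into the support structure. However, for a rotor system whose supports have clearance, the constraint provided by the support changes abruptly as the rotor amplitude varies, so the support stiffness of the rotor system has a step-like discontinuous character. Because the main bearings of an aero-engine work in an extremely harsh environment, the discontinuous support stiffness caused by loose supports may produce complex nonlinear responses and impair the stability of the rotor system's dynamic characteristics. This is especially true for high-speed flexible rotors: their mass and stiffness distributions are highly non-uniform, their bending critical speeds are low, and they operate supercritically, which makes the adverse effect of loose supports on the dynamic characteristics more pronounced [1]. When the structural parameters of the rotor-support system, such as the position of the loose support, the looseness clearance, and the unbalance, are designed properly, the nonlinear vibration response of the rotor system is suppressed. To keep good dynamic characteristics when a multi-support high-speed flexible rotor uses a loose-support design, its nonlinear vibration response characteristics therefore need to be studied.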

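Wang [2] and Hussien [3] et al. used the Runge-Kutta method to solve numerically for the vibration response and stability of a Jeffcott rotor under the combined action of nonlinear oil-film force and nonlinear supports. Comparing the simulation results with the response of a linear rotor system, they found that when the support stiffness changes, the rotor can undergo quasi-periodic motion within a certain speed range. Goldman and Muszynska [4-6] considered the stiffness, damping, and tangential friction of the support structure; their results show that a rotor excited by a loose support exhibits synchronous and subsynchronous vibration components together with higher harmonics, and that chaotic motion may occur under certain operating conditions. Karpenko et al. [7-8] considered only the support clearance and the discontinuous support stiffness; their numerical results, obtained with a multi-point method, show that chaotic motion can arise when the rotor collides frequently with a support that has clearance. Kim and Noah [9] and Ehrich [10-11] considered both the squeeze film and the support clearance, and showed by numerical simulation and by experiment, respectively, that the clearance of the loose support is the cause of the complex nonlinear vibration response of a rotor system with support looseness. Kim and Noah [12] used the harmonic balance method to study the bifurcation characteristics and stability of a rotor system with clearance supports and found that the rotor system can produce quasi-periodic motion through a Hopf bifurcation. Wiercigroch et al. [13-14] used a Jeffcott rotor to study the routes to chaos of a rotor system with loose supports and found that such a system can reach chaos through tangent bifurcation and period-doubling bifurcation.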

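In summary, existing studies of the nonlinear vibration response of rotor systems excited by loose supports mostly use a simple Jeffcott rotor as the computational model and do not jointly consider discontinuous support stiffness, tangential friction, and the squeeze film, so they cannot directly guide the loose-support design of multi-support high-speed flexible rotors. Drawing on the above work and on the mechanical characteristics of loose supports, this paper derives the mechanism that generates discontinuous support stiffness and analyzes the influence of loose supports on the dynamic characteristics of a flexible rotor system. Taking into account both the step-like discontinuous support stiffness and the oil-film force, a dynamic model of a multi-support high-speed flexible rotor system with loose supports is established, and the nonlinear vibration response characteristics of the rotor are obtained by simulation. Based on the influence of the support structural parameters on the dynamic characteristics, the support positions and support stiffness are then optimized to weaken the effect of discontinuous support stiffness. The looseness clearance is designed according to how the response amplitude varies with speed, so that the operating speed range stays away from the chaotic response region with a certain safety margin. This ensures robust dynamic characteristics when a high-speed flexible rotor adopts a loose-support design and provides a theoretical method for the dynamic design of multi-support high-speed flexible rotor systems.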

1. Mechanical characteristics and dynamic model of loose-support excitation

1.1 Mechanical characteristics of loose-support excitation

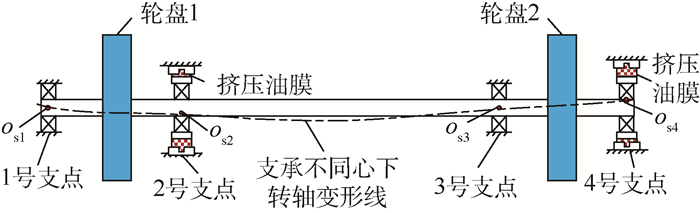

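As shown in Fig. 1 (where osi denotes the position of the rotor axis at each support), a typical loose-support design in engineering enlarges the clearance between the rotor and the support by adjusting the clearance of the squeeze film damper (SFD), thereby removing the additional constraint that support misalignment imposes on the rotor during assembly [1].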

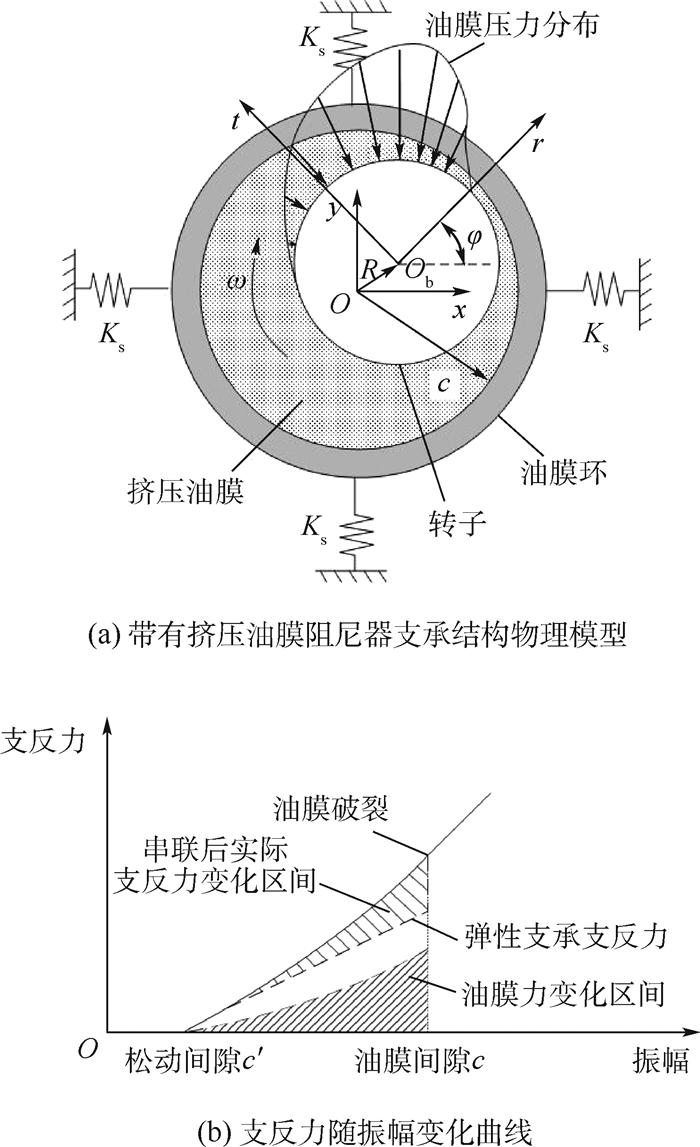

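Fig. 2(a) shows the physical model of a support structure with a squeeze film damper. During rotor motion, the support stiffness is mainly the series combination of the oil-film stiffness produced by the film pressure and the stiffness Ks of the rigid support. Because the film pressure varies with position, the radial (r) and tangential (t) components of the squeeze-film force differ from point to point, so the horizontal and vertical components also change with the angle φ between the rotor vibration displacement and the horizontal direction.

For a squeeze film damper with a large film clearance, the support provides no constraint while the rotor vibrates with small amplitude and the film is not squeezed. As the rotor amplitude grows and the rotor squeezes the film against the inner surface of the film ring, an oil-film force arises and gives the rotor oil-film stiffness. The support's own stiffness and the film stiffness are then in series, and the film stiffness dominates the support. Within the region where the film is squeezed, resistance is high near the end with small clearance, so the oil pressure rises more, while resistance is low near the end with large clearance, so the pressure rises less, giving the pressure distribution shown in Fig. 2(a). In addition, the film force depends not only on amplitude but also on the velocity and precession angle, so for a given amplitude the randomness of the rotor orbit gives the film force an interval distribution, shown by the shaded area in Fig. 2(b). When the amplitude grows further and the rotor collides with the film ring, the film ruptures and the film stiffness vanishes, and the support's own stiffness becomes dominant.

The support reaction force versus amplitude curve drawn on this basis is shown in Fig. 2(b). A loose support causes an abrupt change in the rotor support stiffness, so the derivative of the reaction force with respect to amplitude is discontinuous at the film rupture point, which is a discontinuous mechanical characteristic. Moreover, when the rotor collides frequently with the support with clearance, the randomness of the rotor orbit gives the support stiffness an interval distribution and makes the rotor system non-deterministic.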
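The three regimes described above (no constraint, film in series with the support, direct contact) can be sketched as a piecewise-linear reaction force. This is only an illustration under stated assumptions: the function name `support_reaction`, the constant film stiffness `k_film`, and the two radii `c_film` and `c_contact` are hypothetical, and the velocity dependence and interval distribution of the real film force are ignored. It is not the paper's equation (2).

```python
def support_reaction(r, c_film, c_contact, k_film, k_s):
    """Illustrative support reaction force versus radial amplitude r.

    Assumptions (not taken from the source):
      r < c_film              -> film not squeezed, no constraint
      c_film <= r < c_contact -> film stiffness in series with support stiffness
      r >= c_contact          -> film ruptured, support stiffness acts directly
    The force is continuous, but its slope jumps at both thresholds.
    """
    if r < 0:
        raise ValueError("amplitude r must be non-negative")
    if r < c_film:
        return 0.0
    # Series combination of film stiffness and rigid-support stiffness.
    k_series = k_film * k_s / (k_film + k_s)
    if r < c_contact:
        return k_series * (r - c_film)
    # Beyond contact the slope switches from k_series to k_s.
    return k_series * (c_contact - c_film) + k_s * (r - c_contact)


def support_stiffness(r, c_film, c_contact, k_film, k_s):
    """Slope of the reaction curve: a step function of amplitude."""
    if r < c_film:
        return 0.0
    if r < c_contact:
        return k_film * k_s / (k_film + k_s)
    return k_s
```

Plotting `support_reaction` against `r` gives a broken line whose slope changes at the film rupture point, which is the discontinuous-stiffness feature the text attributes to Fig. 2(b).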

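When the support stiffness changes, the natural frequencies of the rotor system change with it, and the resonance points shift abruptly, which makes the vibration response complex. When the rotor dynamic characteristics are highly sensitive to support stiffness, support looseness may drive the rotor into chaos [1]. Therefore, when a high-speed flexible rotor system adopts a loose-support design, the support scheme and its parameters should be optimized to reduce the sensitivity of the rotor dynamic characteristics to discontinuous changes in support stiffness, keeping the operating speed range away from the chaotic response region with a certain margin and ensuring stable rotor dynamics.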

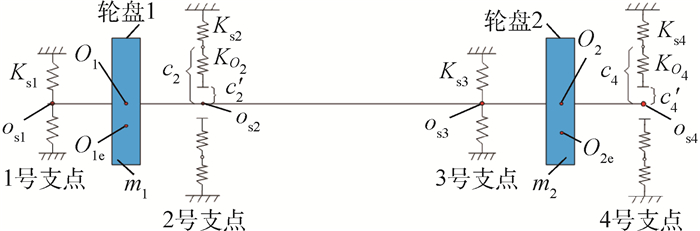

1.2 Dynamic model of a multi-support high-speed flexible rotor system under loose-support excitation

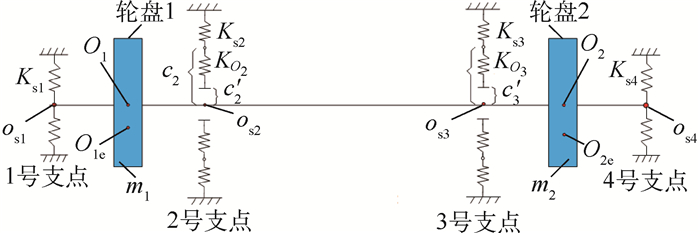

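Based on the characteristics of multi-support high-speed flexible rotors and the mechanical characteristics of loose supports, the mechanical model of a multi-support high-speed flexible rotor system shown in Fig. 3 is established. O1 and O2 are the geometric centers of the disks, O1e and O2e are their centers of mass, e1 and e2 are the distances from the center of mass to the geometric center, m1 and m2 are the disk masses, and Cd1 and Cd2 are the damping coefficients at the disks. Ksi and Csi are the support stiffness and damping at each support, c2 and c3 are the oil-film clearances, and c′2 and c′3 are the looseness clearances.

Neglecting gyroscopic moments and torsional vibration, the differential equations of motion for the lateral vibration of the rotor system are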

(1a)

(1b)

(1c)

(1d)

(1e)

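(1f)

where ω is the synchronous precession angular velocity of the rotor; msi is the support mass; ki is the rotor stiffness at disk i; xsi and ysi are the rotor vibration displacement components at the supports; xdi and ydi are the disk vibration displacement components; and Fsix and Fsiy are the loose-support reaction forces. Supports 2 and 3 use the loose-support design, with support stiffness Ks2 and Ks3. The support reaction force is discontinuous with respect to rotor amplitude and is given by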

(2)

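(3)

where fxi and fyi are the oil-film forces.

Using short-bearing oil-film theory, the oil-film force under the half-film condition is

(4)

where

(5)

and I1, I2, and I3 are the Sommerfeld coefficients [15-16].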
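Equations (4) and (5) are not recoverable in this copy, so the following is only a sketch of one commonly quoted short-bearing, half-film form, not necessarily the paper's exact expression. It assumes a film thickness c(1 + ε cos θ), a film extent of [θ1, θ1 + π], and the integrals I1 = ∫ sin θ cos θ/(1 + ε cos θ)³ dθ, I2 = ∫ cos² θ/(1 + ε cos θ)³ dθ, I3 = ∫ sin² θ/(1 + ε cos θ)³ dθ. Sign conventions and film limits vary between references; all function and parameter names are hypothetical.

```python
import math


def sommerfeld_integrals(eps, theta1=math.pi, n=4000):
    """Midpoint-rule estimates of I1, I2, I3 over [theta1, theta1 + pi].

    eps is the eccentricity ratio (0 <= eps < 1). The default theta1 = pi
    is an assumption that suits a circular centred orbit.
    """
    if not 0.0 <= eps < 1.0:
        raise ValueError("eccentricity ratio must satisfy 0 <= eps < 1")
    h = math.pi / n
    i1 = i2 = i3 = 0.0
    for j in range(n):
        th = theta1 + (j + 0.5) * h
        s, c = math.sin(th), math.cos(th)
        d = (1.0 + eps * c) ** 3
        i1 += s * c / d
        i2 += c * c / d
        i3 += s * s / d
    return i1 * h, i2 * h, i3 * h


def film_forces(eps, eps_dot, phi_dot, mu, radius, length, clearance,
                theta1=math.pi):
    """Radial and tangential film forces in the assumed short-bearing form.

    mu: oil viscosity (Pa*s); radius, length, clearance in metres;
    eps_dot: rate of change of eccentricity ratio; phi_dot: whirl speed.
    """
    i1, i2, i3 = sommerfeld_integrals(eps, theta1)
    scale = mu * radius * length ** 3 / clearance ** 2
    f_r = -scale * (eps * phi_dot * i1 + eps_dot * i2)
    f_t = -scale * (eps * phi_dot * i3 + eps_dot * i1)
    return f_r, f_t
```

For a circular centred orbit (`eps_dot = 0`) the numerical integrals can be compared with the closed forms I1 = 2ε/(1 − ε²)² and I3 = π/(2(1 − ε²)^{3/2}), which is a convenient sanity check before substituting the damper parameters listed in the SFD parameter table.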

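2. Dynamic characteristics of the rotor system under loose-support excitation

2.1 Nonlinear vibration response characteristics

Based on the mechanical and dynamic models of the multi-support high-speed flexible rotor system under loose-support excitation, and with reference to the power-turbine rotor structure of a turboshaft engine, the structural parameters are chosen as listed in Tables 1 and 2.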

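Table 1. Values of structural parameters

| Parameter | Value |
|---|---|
| m1e1/(g·mm) | 10 |
| m2e2/(g·mm) | 10 |
| c′2/mm | 7×10⁻⁴ |
| c′4/mm | 4×10⁻⁴ |
| (c2-c′2)/mm | 2×10⁻⁴ |
| (c4-c′4)/mm | 2×10⁻⁴ |
| k1/(N·m⁻¹) | 2×10⁵ |
| k2/(N·m⁻¹) | 1×10⁴ |

Table 2. Values of SFD parameters

| Parameter | Oil viscosity/(Pa·s) | Axial bearing length/mm | Bearing radius/mm |
|---|---|---|---|
| Value | 1×10⁻³ | 90 | 40 |

Varying the rotor speed, the simulated vibration response of the high-speed flexible rotor system under loose-support excitation is shown in Figs. 4 to 7.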

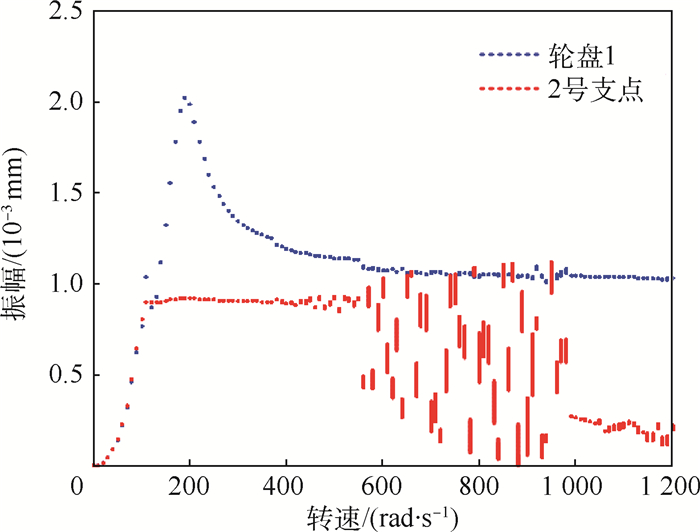

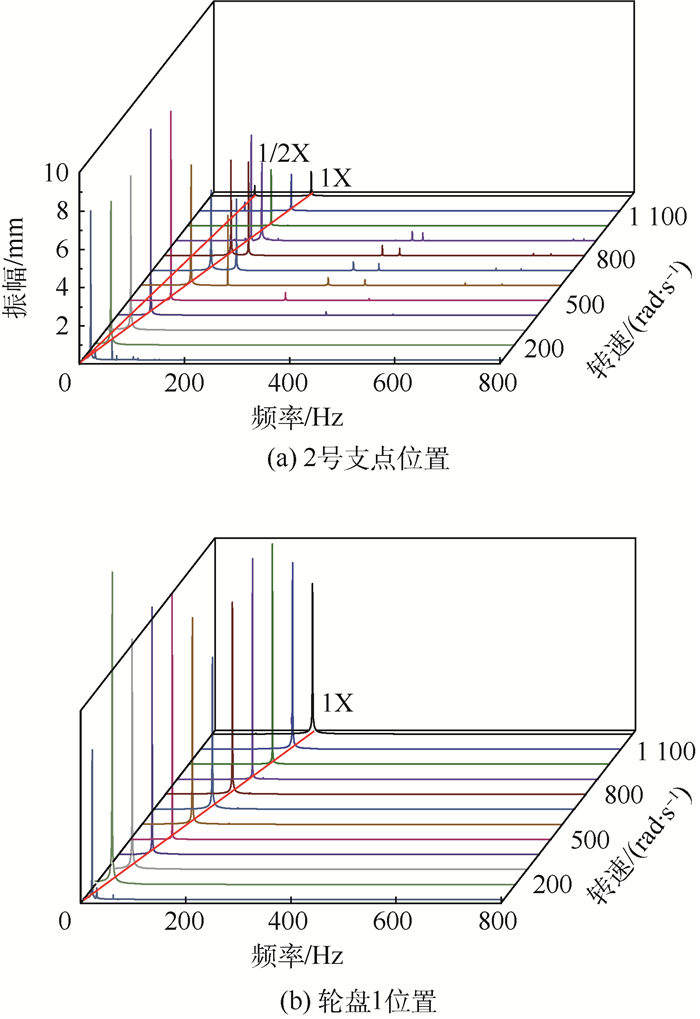

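Figs. 4 and 5 show that the vibration response of a flexible rotor under loose-support excitation has the following characteristics:

1) At support 2, which has clearance, the motion within the speed range 10 to 1200 rad/s changes with increasing speed in the sequence periodic, quasi-periodic, chaotic, quasi-periodic.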

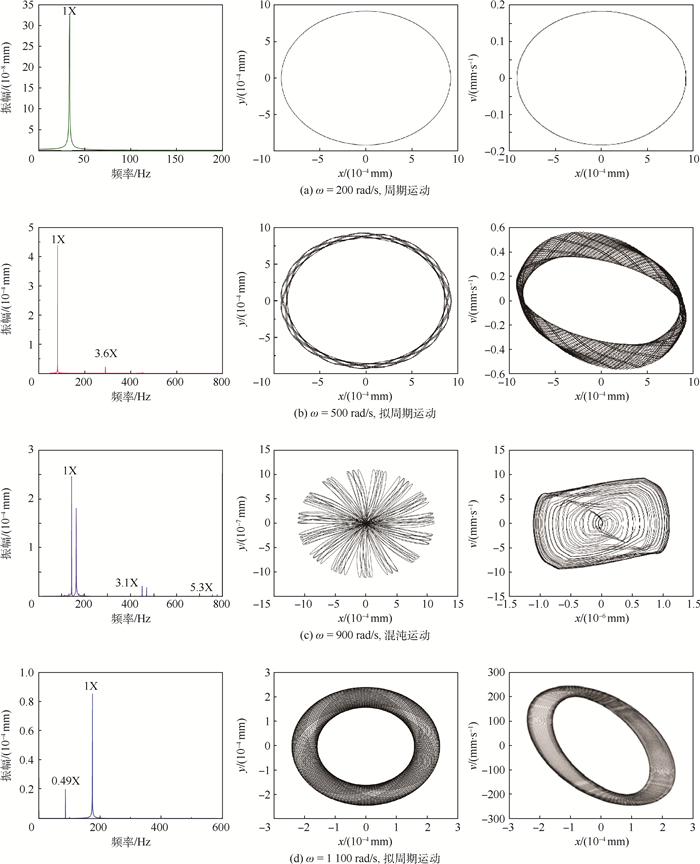

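As shown in Fig. 4, at low speed (ω < 100 rad/s) the rotor amplitude is very small and the loose support provides almost no constraint, so disk 1 and support 2 have the same amplitude. The rotor is then effectively supported at two points and behaves as a linear system; the frequency-domain response contains only the 1× component, and the rotor moves periodically.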

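As the speed increases further and approaches the critical speed, the unbalance force raises the rotor amplitude until the oil film between the rotor and the loose support is squeezed, and the stiffness and damping of the film then restrain the rotor vibration. The amplitudes of disk 1 and support 2 now differ, and under the nonlinear oil-film force the frequency-domain response is a superposition of 1× and 3× components, so the rotor undergoes quasi-periodic motion.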

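As the speed increases further and moves away from the resonance speed, the unbalance excitation force grows. When the amplitude falls to the level at which frequent collisions with the loose support occur, the whole system can be regarded as the coupling of two systems: ① an inherently nonlinear system, namely the loose-support rotor system without unbalance excitation; and ② a linear vibration system driven by the unbalance excitation force. The unbalance excitation force represents the degree of coupling between the two systems [16]. When the unbalance excitation force is very small, the vibration of the linear system is weak and its effect on the nonlinear system is also weak. The motion of the system can then be approximated as the superposition of two independent motions, that is, the whole system vibrates linearly about one of the equilibrium points. Depending on the initial conditions, the system moves about different equilibrium points, forming different basins in the phase plane. Because the unbalance excitation is small and the vibration weak, the vibration does not leave its basin and approach another equilibrium point.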

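According to Fig. 4 and the phase portrait in Fig. 6(c), when the speed rises to about 580 rad/s, the loose support makes the frequency-domain response a superposition of 1×, 3×, 5×, and many other frequency components, and the motion is chaotic. This is because the larger unbalance excitation force strengthens the interaction between the two systems until the vibration can cross the boundary of the initial basin, move into a new basin, and approach another equilibrium point. The amplitude may likewise grow beyond the boundary of the new basin and return toward the initial basin, where the system again moves about the initial equilibrium point. Under the influence of spontaneous randomness the system passes from one basin to another, and this random jumping between basins produces chaotic motion [17-18].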

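As the speed continues to increase, the unbalance excitation force grows and the vibration of the linear system becomes dominant; the amplitude continues to fall, and under the nonlinear oil-film force the rotor moves quasi-periodically.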

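When the unbalance excitation force is very large, the linear system is completely dominant and the nonlinear influence of the loose support is extremely weak. The whole system then moves as a linear system, and the motion becomes periodic [16-17].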

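2) Fig. 7 shows the vibration response of the disk under loose-support excitation; the motion is mainly quasi-periodic and periodic. When the motion at support 2 is chaotic, the corresponding disk response is dominated by the 1× component but contains other frequency components and is quasi-periodic. When the motion at support 2 is quasi-periodic or periodic, the corresponding disk response contains only the 1× component and is periodic.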
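The frequency-domain statements above (only 1×, or 1× plus 3×, or many components) amount to reading the amplitudes at multiples of the rotation frequency from a response signal. A minimal sketch, assuming the signal is sampled uniformly over a whole number of revolutions so that each order falls exactly on a DFT bin; the function name `harmonic_amplitudes` and the synthetic test signal are hypothetical, and a plain DFT from the standard library stands in for whatever spectral tool the authors used.

```python
import cmath
import math


def harmonic_amplitudes(samples, n_revs, max_order=5):
    """Amplitude of each rotation-order component (1x, 2x, ...) of a signal.

    samples: uniformly spaced displacement samples covering exactly
             n_revs shaft revolutions (assumption).
    Returns {order: amplitude}; order k maps to DFT bin k * n_revs.
    """
    n = len(samples)
    if max_order * n_revs >= n // 2:
        raise ValueError("not enough samples per revolution for max_order")
    amps = {}
    for order in range(1, max_order + 1):
        k = order * n_revs
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        amps[order] = 2.0 * abs(coeff) / n
    return amps


def synthetic_response(n_revs=8, per_rev=64, a1=1.0, a3=0.2):
    """Hypothetical signal with 1x and 3x content, for demonstration only."""
    n = n_revs * per_rev
    return [a1 * math.cos(2 * math.pi * i / per_rev)
            + a3 * math.cos(3 * 2 * math.pi * i / per_rev + 0.5)
            for i in range(n)]
```

Applied to `synthetic_response()`, the 1× and 3× entries recover the two input amplitudes and the even orders stay near zero. A broadband or many-peaked spectrum at the support, as the text reports near 580 rad/s, would show up here as significant energy at many orders and between them.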

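2.2 Influence of parameters

To study how the looseness clearance, the discontinuous support stiffness, the amount of unbalance, and the loose-support position affect the rotor response, the run-up of the rotor system is taken as an example and bifurcation diagrams of the response under loose-support excitation are analyzed for different parameters.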
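A bifurcation diagram of this kind is usually built by integrating the equations of motion at each speed, discarding the transient, and plotting the displacement sampled once per revolution. The sketch below shows that procedure on a deliberately reduced model: a single-degree-of-freedom rotor with a clearance support and no oil film, integrated with a classical fourth-order Runge-Kutta scheme. The model, all parameter values, and the names `support_force` and `strobe_samples` are assumptions for illustration, not the paper's multi-support model.

```python
import math


def support_force(x, gap, ks):
    """Restoring force of a support that only acts once |x| exceeds the gap."""
    if abs(x) <= gap:
        return 0.0
    return ks * (abs(x) - gap) * math.copysign(1.0, x)


def strobe_samples(omega, m=1.0, c=10.0, k=1.0e4, ks=5.0e4, gap=1.0e-4,
                   ecc=1.0e-5, n_rev=300, keep=50, steps_per_rev=200):
    """Displacement sampled once per revolution after the transient.

    Integrates m*x'' + c*x' + k*x + F_support(x) = m*ecc*omega^2*cos(omega*t)
    with RK4 and returns the last `keep` stroboscopic samples.
    """
    def accel(t, x, v):
        f_unb = m * ecc * omega ** 2 * math.cos(omega * t)
        return (f_unb - c * v - k * x - support_force(x, gap, ks)) / m

    h = 2.0 * math.pi / omega / steps_per_rev
    x = v = 0.0
    step = 0
    samples = []
    for rev in range(n_rev):
        for _ in range(steps_per_rev):
            t = step * h
            k1x, k1v = v, accel(t, x, v)
            k2x = v + 0.5 * h * k1v
            k2v = accel(t + 0.5 * h, x + 0.5 * h * k1x, v + 0.5 * h * k1v)
            k3x = v + 0.5 * h * k2v
            k3v = accel(t + 0.5 * h, x + 0.5 * h * k2x, v + 0.5 * h * k2v)
            k4x = v + h * k3v
            k4v = accel(t + h, x + h * k3x, v + h * k3v)
            x += h * (k1x + 2 * k2x + 2 * k3x + k4x) / 6.0
            v += h * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
            step += 1
        if rev >= n_rev - keep:
            samples.append(x)
    return samples


def bifurcation_points(speeds, **params):
    """(speed, sample) pairs ready to scatter-plot as a bifurcation diagram."""
    return [(w, s) for w in speeds for s in strobe_samples(w, **params)]
```

In such a diagram a periodic response collapses to a single point per speed, a quasi-periodic response fills a band, and a chaotic response scatters irregularly; sweeping `gap`, `ks`, or `ecc` mimics the parameter studies in the subsections that follow.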

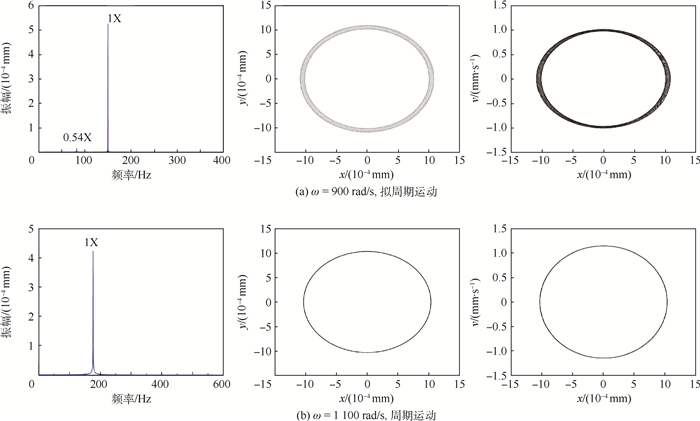

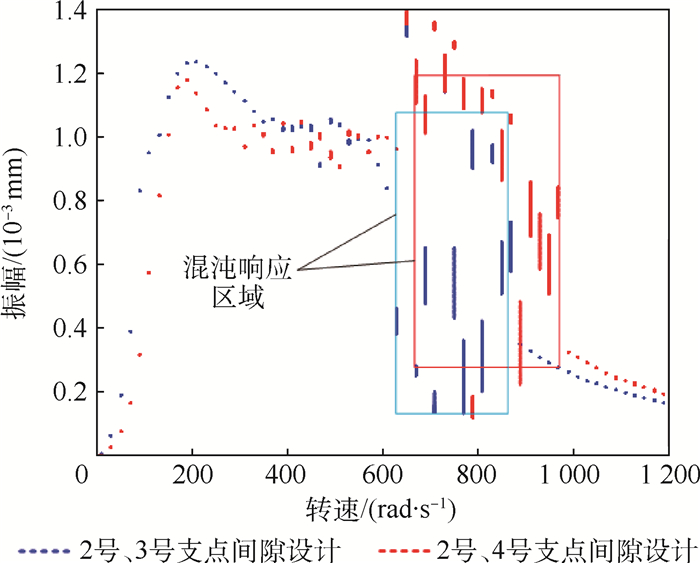

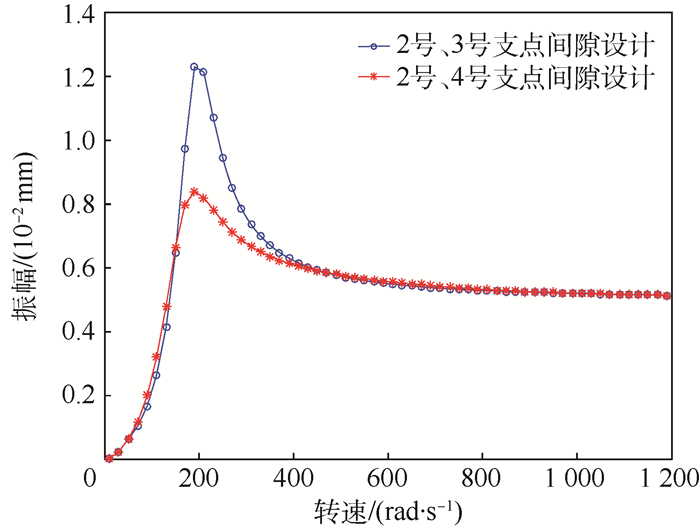

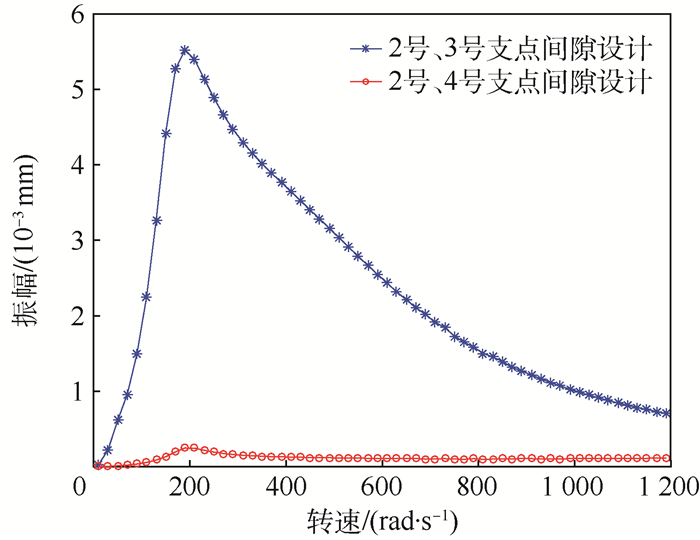

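2.2.1 Influence of loose-support position

The loose-support positions are changed so that the looseness clearances are placed at supports 2 and 4; the physical model is shown in Fig. 8. Bifurcation diagrams of rotor amplitude versus speed are obtained at supports 2 and 3 and at disk 2. According to Fig. 9, changing the loose-support position near disk 2 has little effect on the vibration response at support 2; the chaotic response region shifts only slightly. As shown in Fig. 10, changing the loose-support position has a larger effect on the vibration response at disk 2 and at its supports; when support 4 uses the loose-support design, the peak of disk 2 at resonance is slightly lower. Fig. 11 shows that, as the speed increases and the growing unbalance excitation force causes impacts between the rotor and the loose support, the response amplitude at the loose-support position is sensitive to speed changes. Hence, when the looseness clearances are placed at supports 2 and 3, the response amplitude at support 3 is sensitive to speed changes. Because support 3 is close to the rotor's center of mass, an amplitude that is sensitive to speed under discontinuous support stiffness is detrimental to stable rotor dynamics. The loose-support design is therefore preferably applied to supports 2 and 4.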

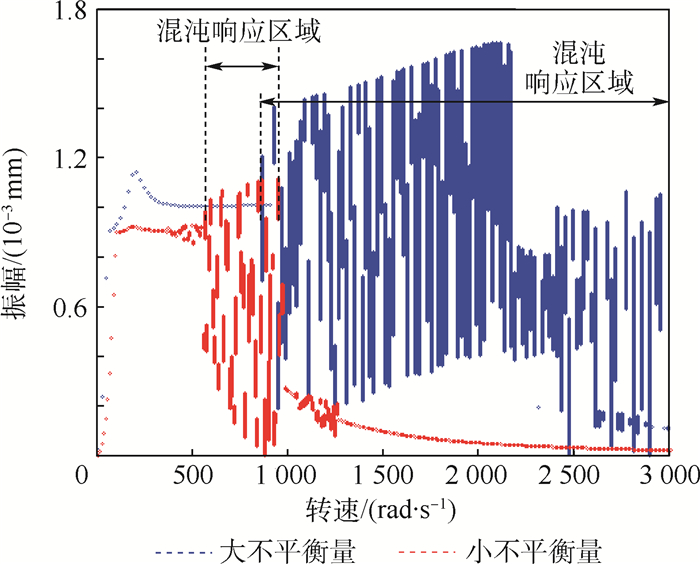

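2.2.2 Influence of unbalance

The unbalance of disks 1 and 2 is changed as listed in Table 3, giving the bifurcation diagrams of rotor amplitude versus speed at disk 1 and at support 2 shown in Fig. 12. When the rotor unbalance increases, the speed range of the chaotic response shifts toward higher speeds. The reason is that, with the clearance at the loose support unchanged, the rotor at support 2 collides frequently with the support and becomes chaotic only when its amplitude lies in the range 0.4×10⁻³ to 0.9×10⁻³ mm. A larger unbalance raises the amplitude over the whole speed range, so compared with the small-unbalance case the amplitude at support 2 falls into this range, and frequent collisions and chaotic motion set in, only at higher speeds. Because this amplitude range then corresponds to a wider speed range, the speed range of the chaotic response is also wider.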
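The argument above can be checked on the simplest possible model. For an undamped Jeffcott rotor running above its critical speed, the whirl amplitude is A(r) = e·r²/(r² − 1), where e is the mass eccentricity and r is the ratio of speed to critical speed; A falls monotonically toward e as r grows. The sketch below solves for the speed ratios at which A enters and leaves a given amplitude band. The undamped single-disk model, the function names, and the eccentricity values used in the note are assumptions for illustration only; the source specifies unbalance as m·e, not e.

```python
import math


def supercritical_amplitude(ecc, r):
    """Whirl amplitude of an undamped Jeffcott rotor at speed ratio r > 1."""
    if r <= 1.0:
        raise ValueError("speed ratio must be above the critical speed")
    return ecc * r * r / (r * r - 1.0)


def band_speed_ratios(ecc, amp_lo, amp_hi):
    """Speed ratios at which the amplitude enters and leaves [amp_lo, amp_hi].

    Requires ecc < amp_lo < amp_hi, because the supercritical amplitude
    never drops below the eccentricity.
    """
    if not ecc < amp_lo < amp_hi:
        raise ValueError("need ecc < amp_lo < amp_hi")
    r_enter = math.sqrt(amp_hi / (amp_hi - ecc))  # amplitude falls to amp_hi
    r_exit = math.sqrt(amp_lo / (amp_lo - ecc))   # amplitude falls to amp_lo
    return r_enter, r_exit
```

With the band 0.4 to 0.9 (in units of 10⁻³ mm) and hypothetical eccentricities of 0.1 and 0.3 in the same units, `band_speed_ratios` gives an interval that both starts at a higher speed ratio and spans a wider range for the larger eccentricity, which matches the trend the text describes for Fig. 12.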

Table 3. Unbalance values of the disks

| Unbalance | m1e1/(g·mm) | m2e2/(g·mm) |
|---|---|---|
| Small unbalance | 10 | 10 |
| Large unbalance | 50 | 50 |

2.2.3 Influence of discontinuous support stiffness

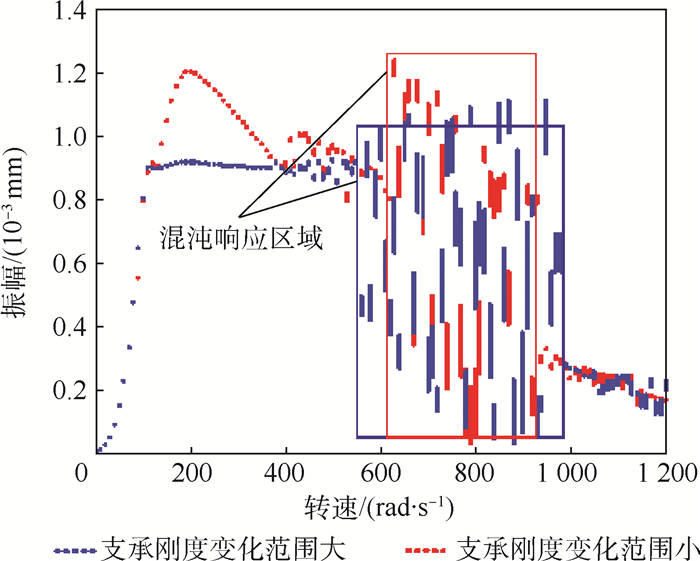

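To study the effect of discontinuous support stiffness, the range over which the support stiffness varies at the loose-support positions is changed as listed in Table 4, giving the bifurcation diagram of rotor amplitude versus speed at support 2 shown in Fig. 13. When the range of support stiffness variation at the loose-support position increases, the chaotic response region expands slightly.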

Table 4. Variation range of bearing stiffness

| Stiffness variation range | Ks2/(N·m⁻¹) | Ks3/(N·m⁻¹) |
|---|---|---|
| Small variation range | 0~1×10⁵ | 0~1×10⁵ |
| Large variation range | 0~1×10⁷ | 0~1×10⁷ |

2.2.4 Influence of looseness clearance

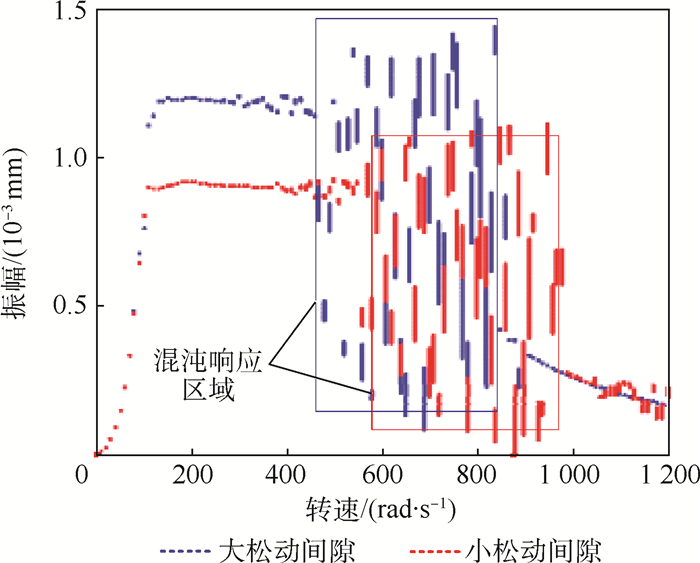

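The clearance at the loose-support positions is changed as listed in Table 5, giving the bifurcation diagram of rotor amplitude versus speed at support 2 shown in Fig. 14. When the clearance of the loose support is enlarged, the rotor amplitude at the loose-support position must increase before frequent collisions with the support can trigger chaotic motion, so the speed range of chaotic motion moves toward the resonance region and the amplitude of the chaotic motion increases.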

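Table 5. Parameter values of bearing clearance

| Looseness clearance | c′2/mm | c′4/mm | c2/mm | c4/mm |
|---|---|---|---|---|
| Small clearance | 1.4×10⁻³ | 8×10⁻⁴ | 2×10⁻⁴ | 2×10⁻⁴ |
| Large clearance | 7×10⁻³ | 4×10⁻³ | 2×10⁻³ | 2×10⁻³ |

3. Conclusions

The motion of a multi-support flexible rotor under loose-support excitation is nonlinear, and its nonlinear vibration takes mainly quasi-periodic and chaotic forms:

1) For a given unbalance, when the rotor collides frequently with the loose support, the motion of the rotor at the loose-support position is chaotic, and the corresponding motion at the disk is quasi-periodic.

2) Under the nonlinear oil-film force, the motion of the rotor at the loose-support position is quasi-periodic, and the corresponding motion at the disk is periodic.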


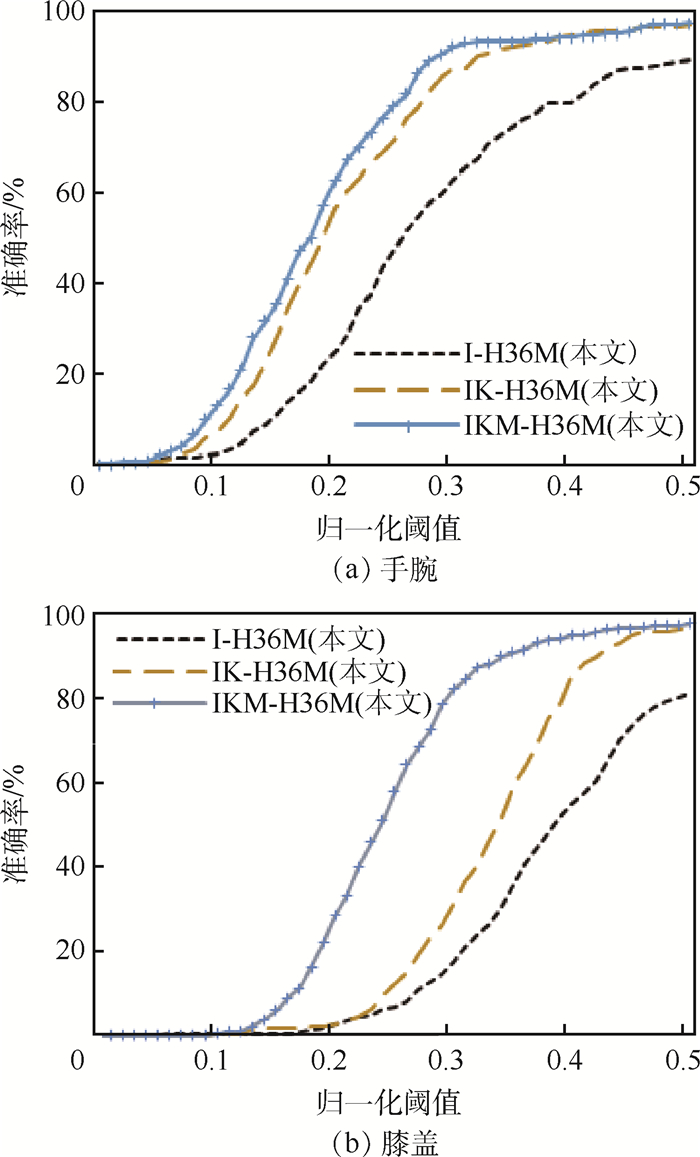

Table 1. Training images corresponding to different models

| Model | ITOP (depth) | K2HGD (depth) | MPII (color) | Human 3.6M (color) |
|---|---|---|---|---|
| Ref. [13] | | | √ | √ |
| M-H36M (this paper) | | | √ | √ |
| I-H36M (this paper) | √ | | | √ |
| IK-H36M (this paper) | √ | √ | | √ |
| IKM-H36M (this paper) | √ | √ | √ | √ |

Table 2. Comparison of accuracy rate, parameter quantity and training time among different models

| Model | Accuracy/% | Parameters/10⁶ | Training time/(s·batch⁻¹) |
|---|---|---|---|
| Proposed model (131~128) | 90.10 | 31.00 | 0.16 |
| Ref. [13] (131~256) | 92.26 | 116.60 | 0.29 |
| Proposed model (333~128) | 92.48 | 101.10 | 0.21 |
| Proposed model (333~256) | 92.92 | 390.00 | 0.41 |

Table 3. Comparison of accuracy rate, parameter quantity and training time with different numbers of hourglass networks

| Model | Accuracy/% | Parameters/10⁶ | Training time/(s·batch⁻¹) |
|---|---|---|---|
| Ref. [13] (2 stack) | 92.26 | 116.60 | 0.29 |
| Proposed model (2 stack) | 92.48 | 101.10 | 0.21 |
| Proposed model (4 stack) | 92.83 | 185.30 | 0.32 |

Table 4. Three-dimensional pose estimation results (accuracy/%) of different training models on Human 3.6M test images, based on the PDJ evaluation criterion

| Method | Ankle | Knee | Hip | Wrist | Elbow | Shoulder | Head | Average |
|---|---|---|---|---|---|---|---|---|
| Ref. [13] | 65.00 | 82.90 | 89.27 | 77.91 | 86.46 | 94.73 | 94.42 | 84.38 |
| M-H36M (this paper) | 75.07 | 90.33 | 94.69 | 80.92 | 86.24 | 96.38 | 94.69 | 88.33 |
| I-H36M (this paper) | 71.52 | 88.03 | 92.52 | 76.46 | 82.55 | 94.07 | 95.30 | 85.78 |
| IK-H36M (this paper) | 79.23 | 91.60 | 94.40 | 77.61 | 81.40 | 92.69 | 93.34 | 87.18 |
| IKM-H36M (this paper) | 74.98 | 91.06 | 94.33 | 78.65 | 85.21 | 95.77 | 95.16 | 87.88 |
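The accuracy figures in the last table are reported under the PDJ (percentage of detected joints) criterion. In its usual definition, a joint counts as detected when the distance between the predicted and ground-truth positions is within a chosen fraction of the torso diameter. The sketch below follows that definition; the function name `pdj`, the default `fraction=0.5`, and the way the torso diameter is supplied are assumptions, since this copy does not state the threshold used.

```python
import math


def pdj(pred, gt, torso_diameter, fraction=0.5):
    """Percentage of detected joints for one pose.

    pred, gt: equal-length sequences of (x, y, z) joint coordinates.
    A joint is detected when its prediction lies within
    fraction * torso_diameter of the ground truth (threshold assumed).
    """
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and equal in length")
    threshold = fraction * torso_diameter
    hits = sum(1 for p, g in zip(pred, gt) if math.dist(p, g) <= threshold)
    return 100.0 * hits / len(gt)


def mean_accuracy(per_joint):
    """Plain mean of per-joint accuracies."""
    return sum(per_joint) / len(per_joint)
```

The "Average" column of the last table is consistent with a plain mean of the seven per-joint values: for Ref. [13], `mean_accuracy([65.00, 82.90, 89.27, 77.91, 86.46, 94.73, 94.42])` rounds to the listed 84.38.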

[1] PARK S, HWANG J, KWAK N. 3D human pose estimation using convolutional neural networks with 2D pose information[C]//European Conference on Computer Vision. Berlin: Springer, 2016: 156-169.

[2] YANG W, OUYANG W, LI H, et al. End-to-end learning of deformable mixture of parts and deep convolutional neural networks for human pose estimation[C]//IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2016: 3073-3082.

[3] ZE W K, FU Z S, HUI C, et al. Human pose estimation from depth images via inference embedded multi-task learning[C]//Proceedings of the 2016 ACM on Multimedia Conference. New York: ACM, 2016: 1227-1236.

[4] SHEN W, DENG K, BAI X, et al. Exemplar-based human action pose correction[J]. IEEE Transactions on Cybernetics, 2014, 44(7): 1053-1066. doi: 10.1109/TCYB.2013.2279071

[5] GULER R A, KOKKINOS L, NEVEROVA N, et al. DensePose: Dense human pose estimation in the wild[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2018: 7297-7306.

[6] RHODIN H, SALZMANN M, FUA P. Unsupervised geometry-aware representation for 3D human pose estimation[C]//European Conference on Computer Vision. Berlin: Springer, 2018: 765-782. doi: 10.1007/978-3-030-01249-6_46

[7] OMRAN M, LASSNER C, PONS-MOLL G, et al. Neural body fitting: Unifying deep learning and model based human pose and shape estimation[C]//International Conference on 3D Vision. Piscataway, NJ: IEEE Press, 2018: 484-494.

[8] HAQUE A, PENG B, LUO Z, et al. Towards viewpoint invariant 3D human pose estimation[C]//European Conference on Computer Vision. Berlin: Springer, 2016: 160-177. doi: 10.1007/978-3-319-46448-0_10

[9] TOSHEV A, SZEGEDY C. DeepPose: Human pose estimation via deep neural networks[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2014: 1653-1660.

[10] CAO Z, SIMON T, WEI S E, et al. Realtime multi-person 2D pose estimation using part affinity fields[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2017: 7291-7299.

[11] WEI S E, RAMAKRISHNA V, KANADE T, et al. Convolutional pose machines[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2016: 4724-4732.

[12] NEWELL A, YANG K, DENG J, et al. Stacked hourglass networks for human pose estimation[C]//European Conference on Computer Vision. Berlin: Springer, 2016: 483-499. doi: 10.1007/978-3-319-46484-8_29

[13] YI Z X, XING H Q, XIAO S, et al. Towards 3D pose estimation in the wild: A weakly-supervised approach[C]//IEEE International Conference on Computer Vision. Piscataway, NJ: IEEE Press, 2017: 398-407.

[14] SAM J, MARK E. Clustered pose and nonlinear appearance models for human pose estimation[C]//Proceedings of the 21st British Machine Vision Conference, 2010: 12.1-12.11.

[15] ANDRILUKA M, PISHCHULIN L, GEHLER P, et al. 2D human pose estimation: New benchmark and state of the art analysis[C]//IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2014: 3686-3693.

[16] CTALIN I, DRAGOS P, VLAD O, et al. Human 3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(7): 1325-1339.

[17] HAN X F, LEUNG T, JIA Y Q, et al. MatchNet: Unifying feature and metric learning for patch-based matching[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2015: 3279-3286.

[18] XU G H, LI M, CHEN L T, et al. Human pose estimation method based on single depth image[J]. IET Computer Vision, 2018, 12(6): 919-924. doi: 10.1049/iet-cvi.2017.0536

[19] SI J L, ANTONI B. 3D human pose estimation from monocular images with deep convolutional neural network[C]//Asian Conference on Computer Vision. Berlin: Springer, 2014: 332-347. doi: 10.1007/978-3-319-16808-1_23

[20] GHEZELGHIEH M F, KASTURI R, SARKAR S. Learning camera viewpoint using CNN to improve 3D body pose estimation[C]//International Conference on 3D Vision. Piscataway, NJ: IEEE Press, 2016: 685-693.

[21] CHEN C H, RAMANAN D. 3D human pose estimation=2D pose estimation+matching[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2017: 5759-5767.

[22] POPA A I, ZANFIR M, SMINCHISESCU C. Deep multitask architecture for integrated 2D and 3D human sensing[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2017: 4714-4723.

[23] COLLOBERT R, KAVUKCUOGLU K, FARABET C. Torch7: A Matlab-like environment for machine learning[C]//Conference and Workshop on Neural Information Processing Systems, 2011: 1-6.

[24] NIKOS K, GEORGIOS P, KOSTAS D. Convolutional mesh regression for single-image human shape reconstruction[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2019: 4510-4519.

[25] CHENXU L, XIAO C, ALAN Y. OriNet: A fully convolutional network for 3D human pose estimation[C]//British Machine Vision Conference, 2018: 321-333.
