Intelligent algorithm of warship’s vital parts detection, trajectory prediction and pose estimation


摘要:

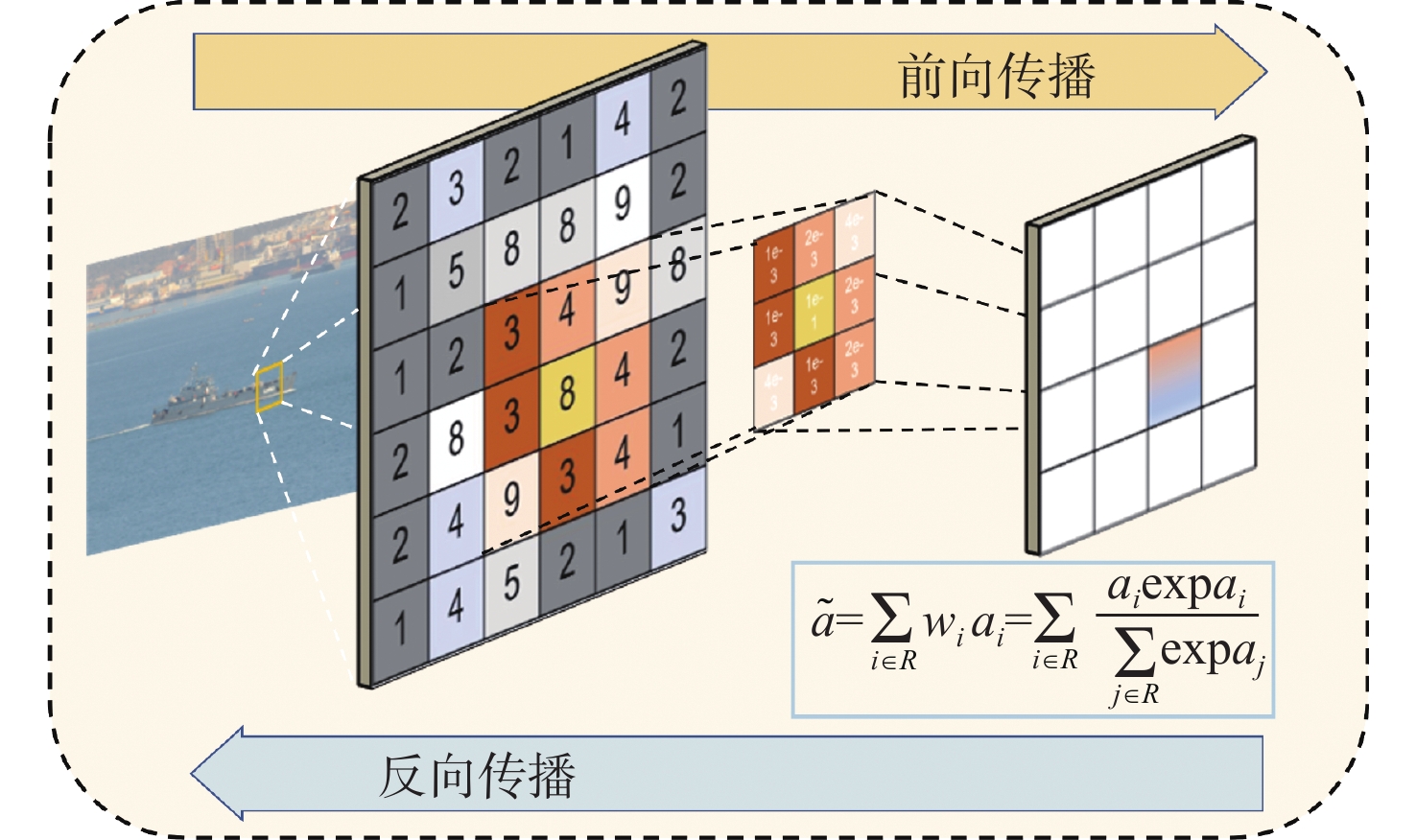

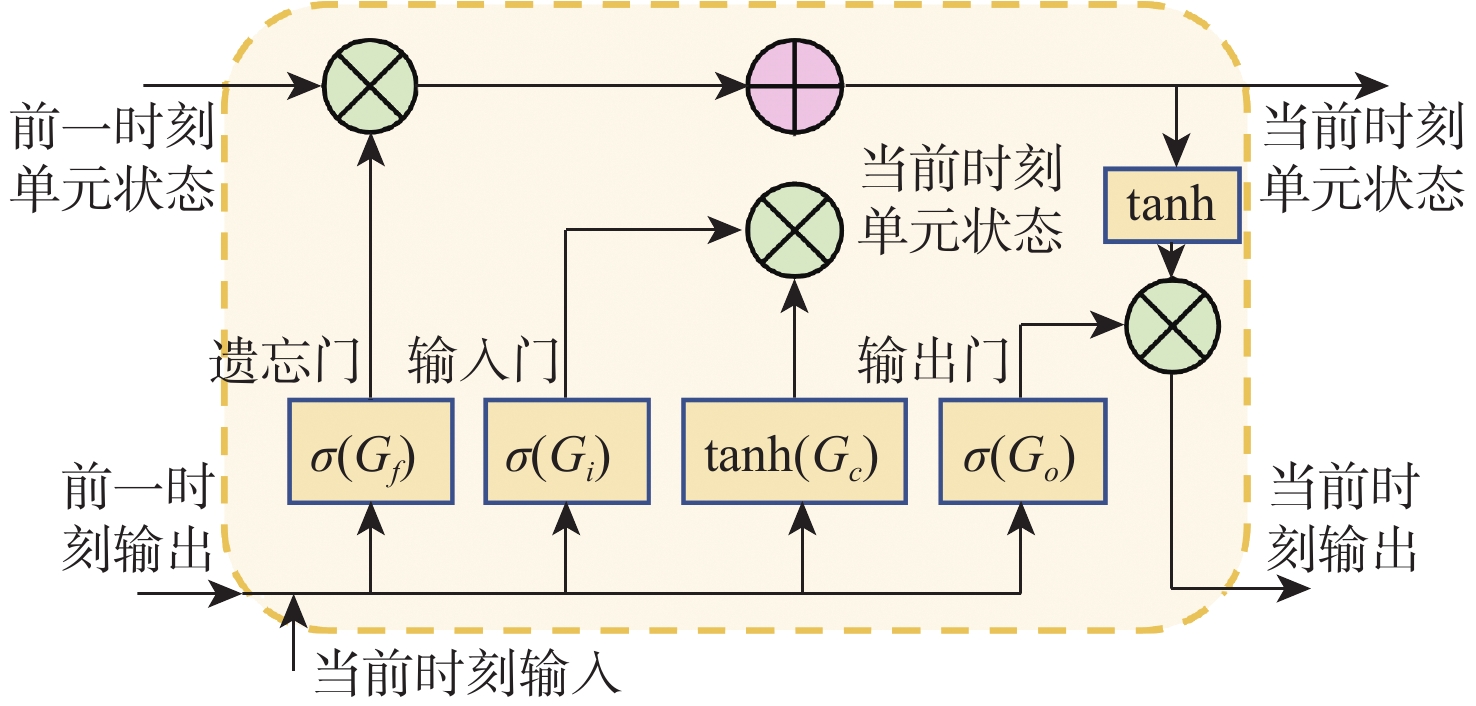

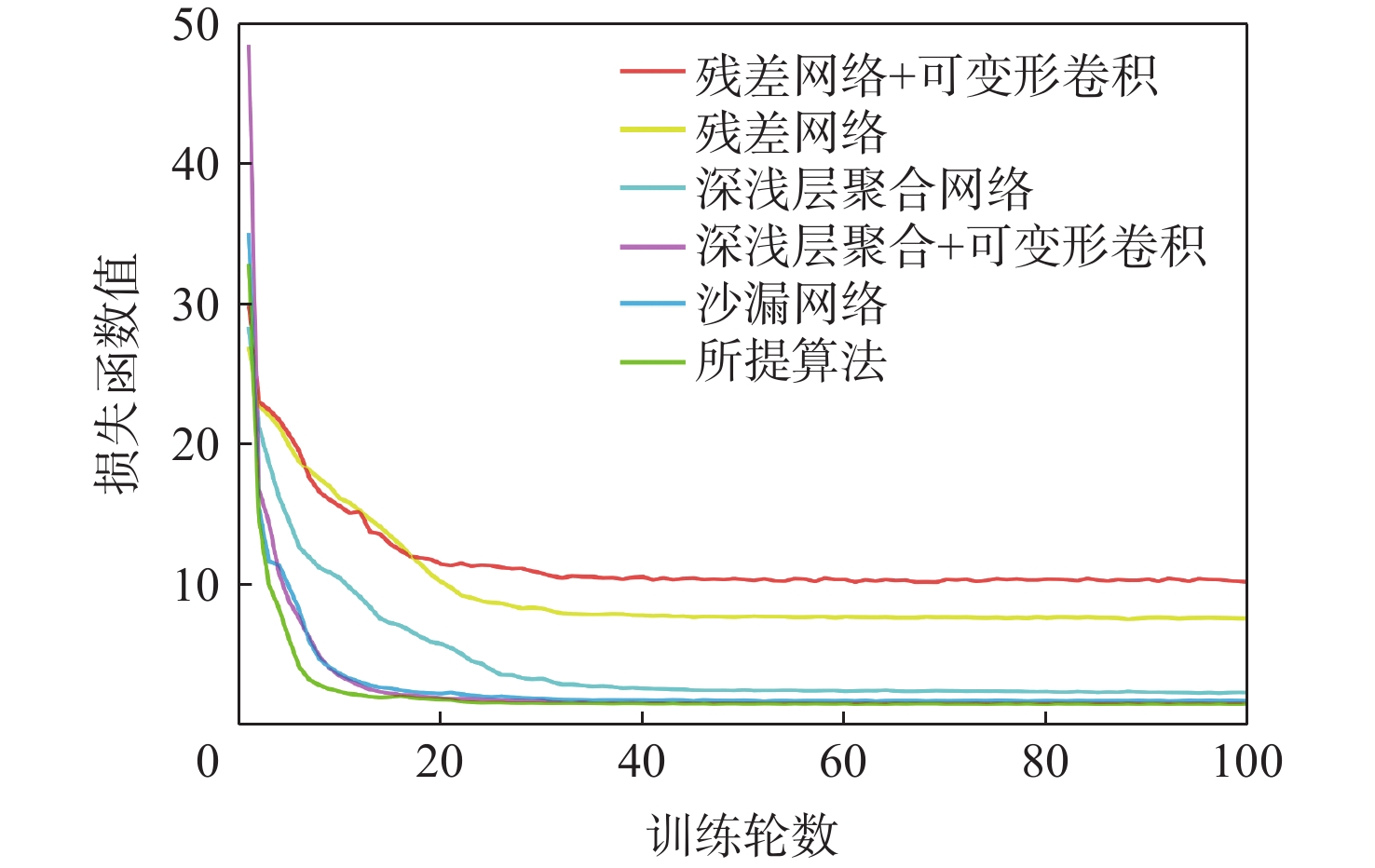

准确检测与打击舰船要害部位可有效提升反舰导弹毁伤效能。针对舰船要害部位检测精度低、导引误差解算精度不足等问题,提出基于深度学习的舰船要害关键点检测、轨迹预测与导引头位姿估计算法。融合深层语义信息与浅层定位信息,采用SoftPool池化保留细粒度特征,提升多角度多尺度舰船要害部位检测精度;将关键点检测结果与舰船空间结构建立映射,解算导引头三维位姿;引入长短期记忆网络挖掘要害打击点时空特征,实现多尺度舰船要害动态轨迹预测。实验结果表明:所提算法对舰船要害部位检测与轨迹预测精度高,导引头位姿估计结果较准确,满足自主突防视角反舰导弹对复杂海战场的态势感知需求。

Abstract:Accurate detection and attack of warship’s vital parts can effectively improve the damage efficiency of anti-ship missile. Aiming at the problems of low detection accuracy on vital parts and insufficient accuracy of guidance error, this paper proposes an algorithm of warship’s vital parts detection, trajectory prediction and pose estimation based on deep learning. The deep semantic information and shallow positioning information are integrated, and the SoftPool modules are used to preserve fine-grained features. The detection accuracy of multi-angle and multi-scale warship’s vital parts is improved. Combining the detection results with the warship’s space structure can establish the mapping relationship, which is used to calculate the three-dimensional position and posture of the seeker. The long short term memory network is introduced to mine the space-time characteristics of key-points to realize the dynamic trajectory prediction on multi-scale warship. Experimental results show that this algorithm has high accuracy in detection of warship’s vital parts and trajectory prediction. The posture estimation results of the seeker are precise. The situational awareness requirement in complex marine battlefield of autonomous self-piloted anti-ship missiles is satisfied in independent penetration perspective.

Key words:

- target detection

- key-points network

- SoftPool

- long short-term memory

- pose estimation

- anti-ship missile

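Table 1. Experimental environment

| Parameter | Configuration |
| --- | --- |
| CPU | AMD Ryzen 9 3900X |
| CPU memory | 32 GB |
| GPU | GeForce RTX 2080 Ti |
| GPU memory | 11 GB |
| IDE | PyCharm, gedit, vim |
| Operating system | Ubuntu 16.04 LTS |
| Language | Python |
| Acceleration environment | CUDA 10.0, cuDNN 7.6 |
| Deep learning framework | PyTorch 1.0 |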

Table 2. Test results of warship’s key-points

Table 3. Test results of different pooling methods

| Pooling method | mAP/% | Detection speed/FPS |
| --- | --- | --- |
| Max pooling | 84.4 | 29 |
| Stochastic pooling | 85.4 | 28 |
| Spatial pyramid pooling | 85.9 | 29 |
| SoftPool | 87.7 | 27 |
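To make the pooling comparison in Table 3 more concrete, the following is a minimal pure-Python sketch of the SoftPool operation as it is commonly defined: each activation in a pooling window is weighted by its softmax share, so large activations dominate while smaller ones still contribute. This is not the authors' implementation. The function names `softpool_region` and `softpool2d`, the 2×2 window, and the stride of 2 are assumptions made for illustration.

```python
import math


def softpool_region(values):
    """Softmax-weighted sum of the activations in one pooling window."""
    peak = max(values)  # subtracting the max keeps exp() numerically stable
    weights = [math.exp(v - peak) for v in values]
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, values)) / total


def softpool2d(feature_map, kernel=2, stride=2):
    """Apply SoftPool to a 2-D list of floats (no padding; illustrative only)."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - kernel + 1, stride):
        pooled_row = []
        for c in range(0, cols - kernel + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(kernel) for j in range(kernel)]
            pooled_row.append(softpool_region(window))
        pooled.append(pooled_row)
    return pooled


if __name__ == "__main__":
    fmap = [[1.0, 2.0, 0.0, 0.0],
            [3.0, 4.0, 0.0, 0.0]]
    print(softpool2d(fmap))
```

For any window, the result lies between the window's mean and its maximum, which is one way to see why SoftPool retains more fine-grained information than max pooling.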

Table 4. Test results of pose estimation

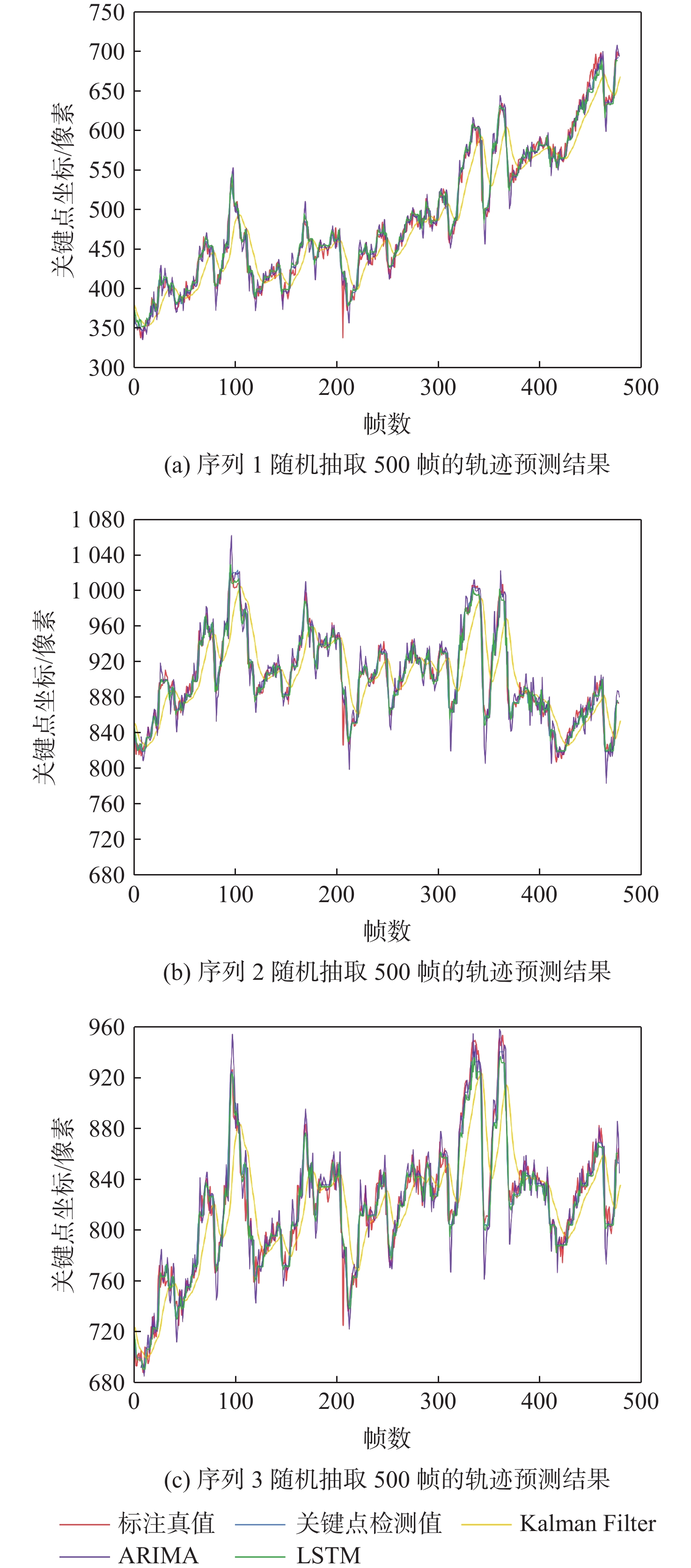

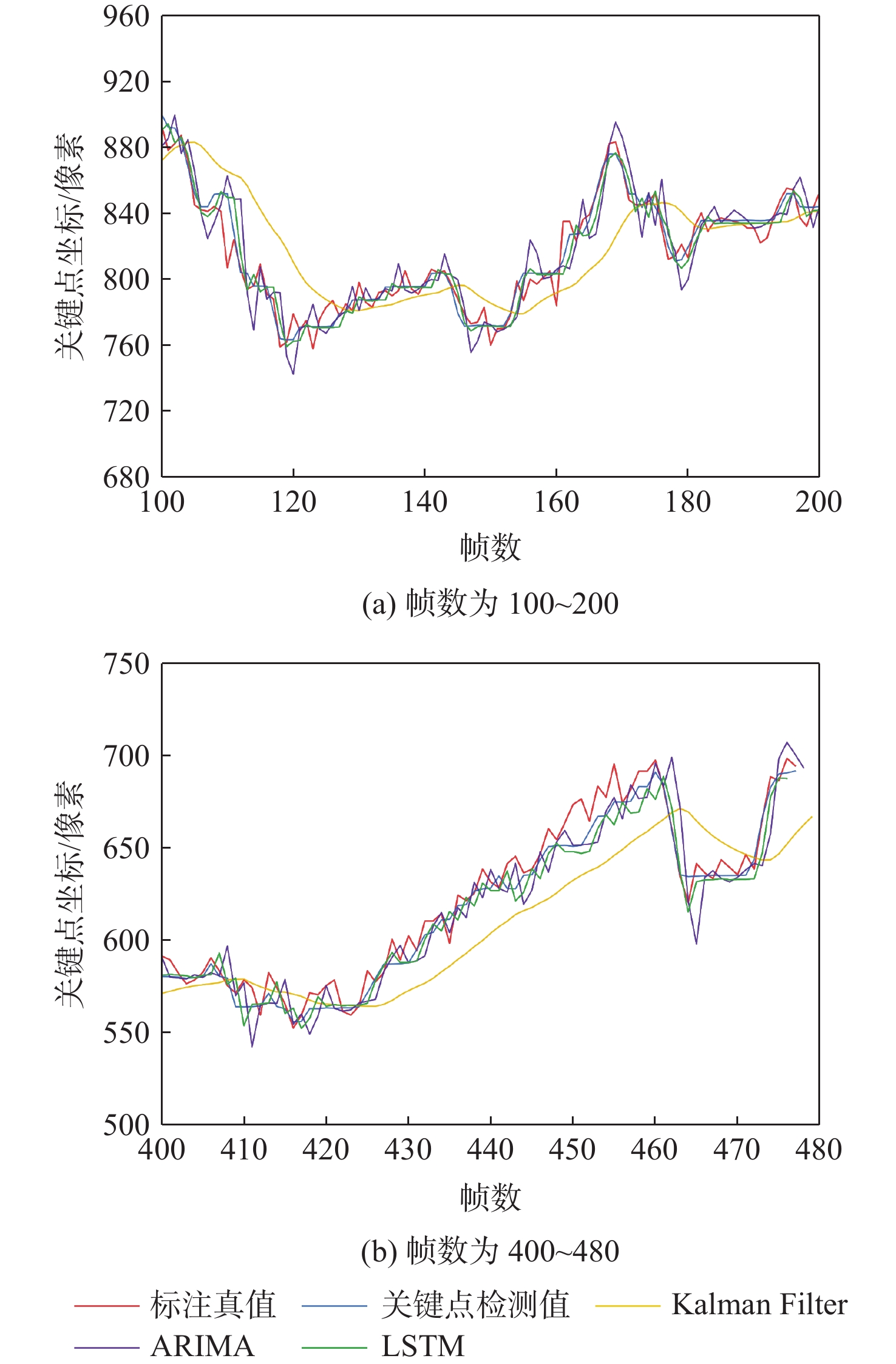
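The abstract describes solving the seeker pose by mapping detected key-points to the warship's spatial structure. The sketch below illustrates the geometric link such a mapping relies on, under a pinhole camera assumption: 3-D model key-points are projected into the image for a candidate pose, and the mean reprojection error against the detected key-points measures how well that pose fits. A pose solver would search for the pose that minimizes this error; this section does not state which solver the authors use. All names (`project`, `mean_reprojection_error`), the intrinsics `fx`, `fy`, `cx`, `cy`, and the yaw-only rotation are hypothetical and chosen only for illustration.

```python
import math


def rotation_about_y(yaw_rad):
    """Rotation matrix for a yaw angle about the camera's y axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def project(point, rotation, translation, fx, fy, cx, cy):
    """Project one 3-D model point into pixel coordinates (pinhole model)."""
    xc, yc, zc = (
        sum(rotation[i][k] * point[k] for k in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point is behind the camera")
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def mean_reprojection_error(model_points, detections, rotation, translation,
                            fx, fy, cx, cy):
    """Mean pixel distance between projected model points and detections."""
    if len(model_points) != len(detections) or not model_points:
        raise ValueError("need equally many model points and detections")
    errors = []
    for point, (u_det, v_det) in zip(model_points, detections):
        u, v = project(point, rotation, translation, fx, fy, cx, cy)
        errors.append(math.hypot(u - u_det, v - v_det))
    return sum(errors) / len(errors)
```

A candidate pose whose projected key-points coincide with the detections gives an error of zero, and larger errors indicate a worse fit.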

Table 5. Comparison of trajectory prediction algorithms

| Algorithm | ADE/pixel | FDE/pixel |
| --- | --- | --- |
| Key-point detection ground truth | 0.3032 | 1.4506 |
| Kalman filter | 1.1398 | 2.6712 |
| ARIMA | 0.9635 | 2.0456 |
| LSTM | 0.3263 | 1.6325 |
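The two metrics in Table 5 can be sketched as follows, assuming the standard definitions used in trajectory prediction work: the average displacement error (ADE) is the mean Euclidean distance between predicted and true positions over all predicted time steps, and the final displacement error (FDE) is that distance at the last step. The page itself does not spell out these definitions, so this is an illustrative reading; the function name `displacement_errors` is hypothetical.

```python
import math


def displacement_errors(predicted, actual):
    """Return (ADE, FDE) in pixels for two equal-length lists of (x, y) points."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    distances = [math.hypot(px - ax, py - ay)
                 for (px, py), (ax, ay) in zip(predicted, actual)]
    ade = sum(distances) / len(distances)  # mean error over all time steps
    fde = distances[-1]                    # error at the final time step
    return ade, fde


if __name__ == "__main__":
    predicted_track = [(0.0, 0.0), (3.0, 4.0)]
    true_track = [(0.0, 0.0), (0.0, 0.0)]
    print(displacement_errors(predicted_track, true_track))  # (2.5, 5.0)
```

Because FDE looks only at the last predicted point, it is typically larger than ADE when errors accumulate over the prediction horizon, which matches the pattern in every row of Table 5.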

