Abstract:

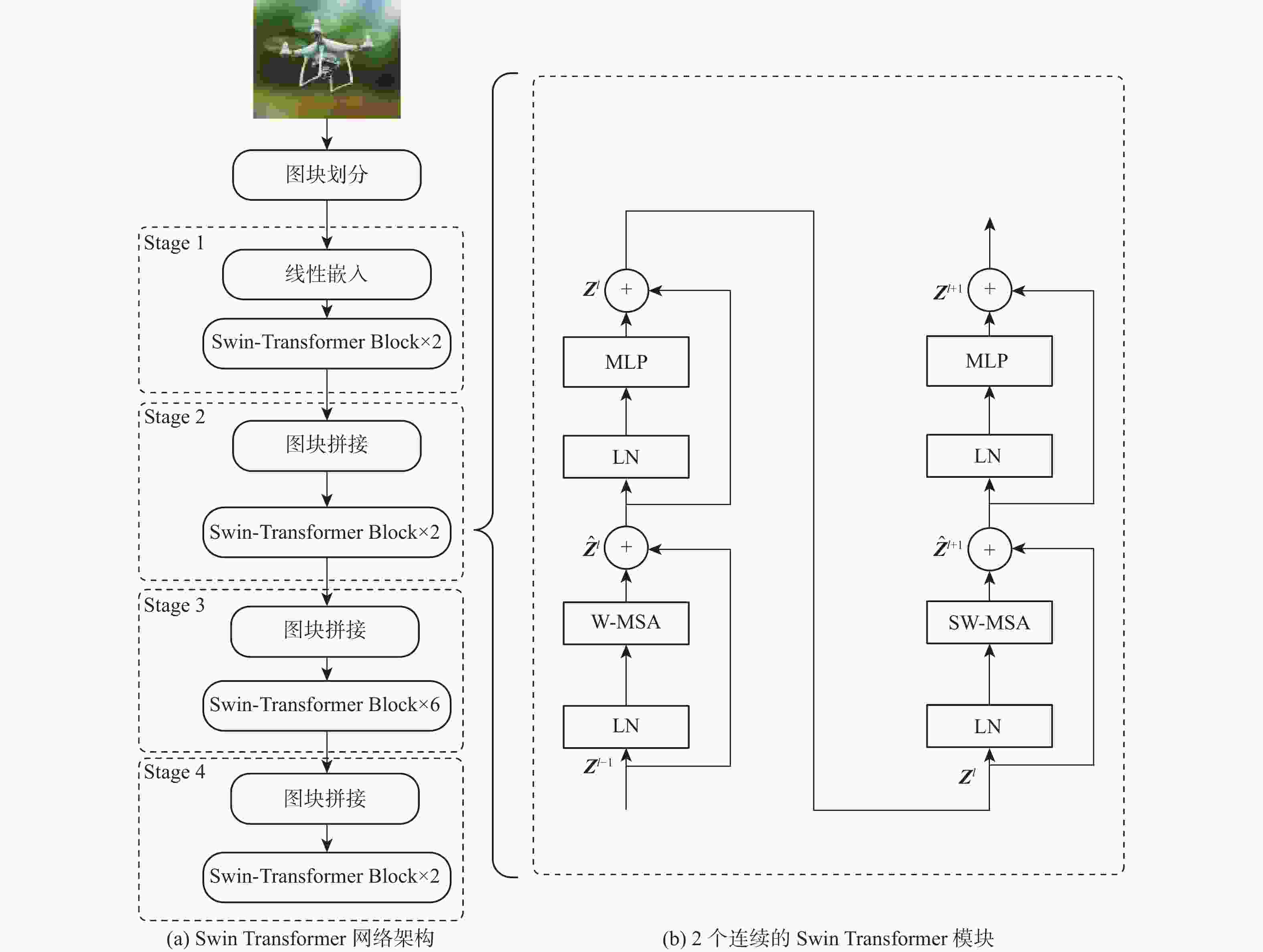

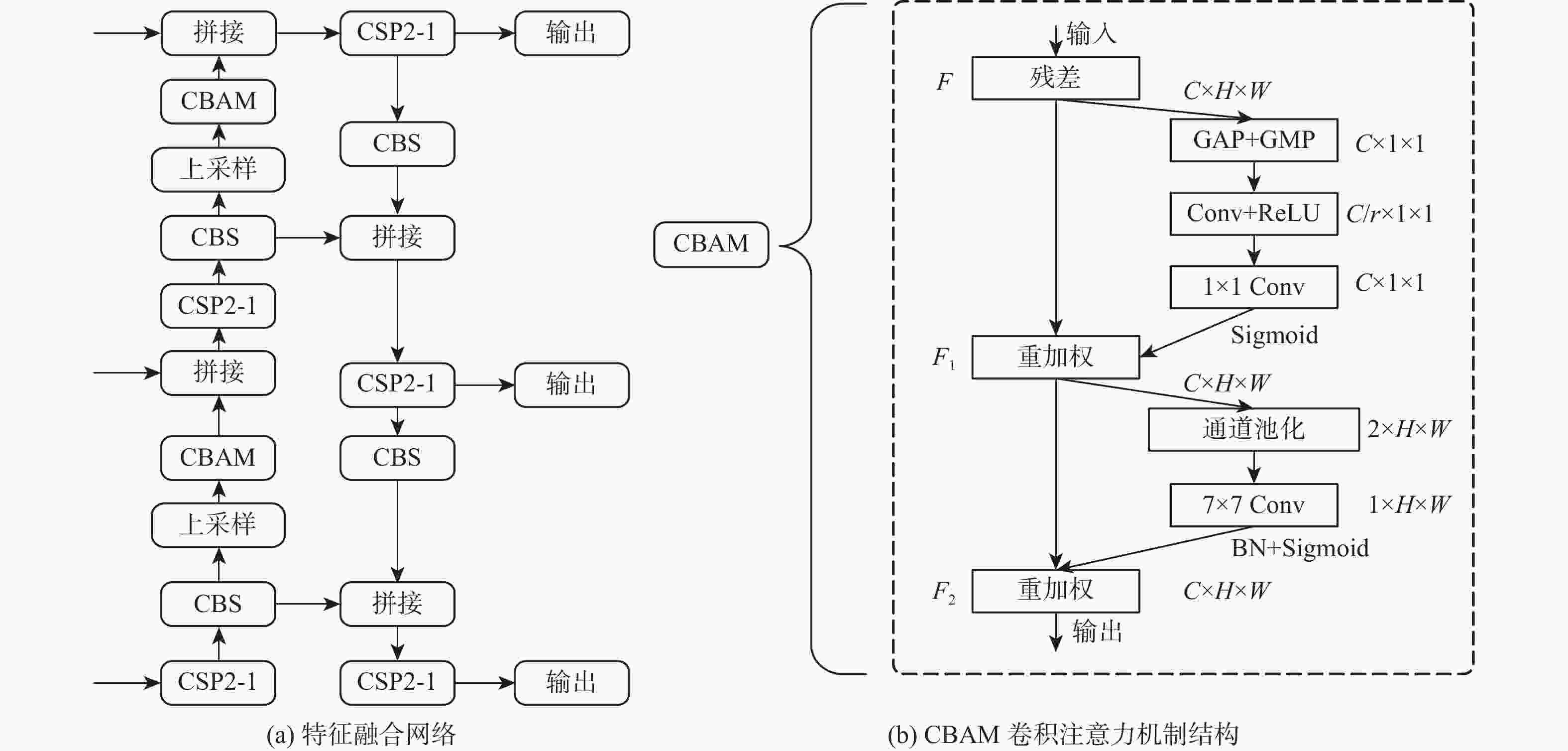

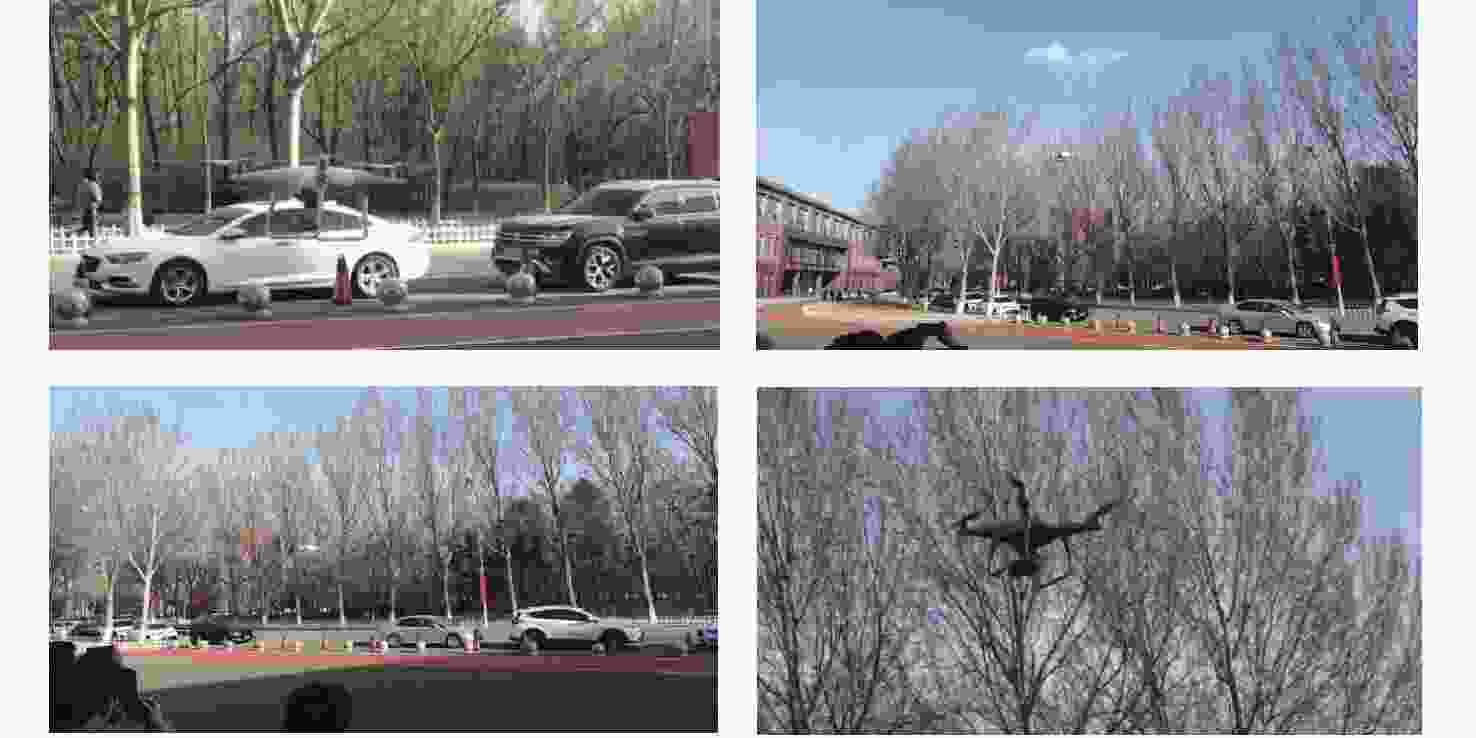

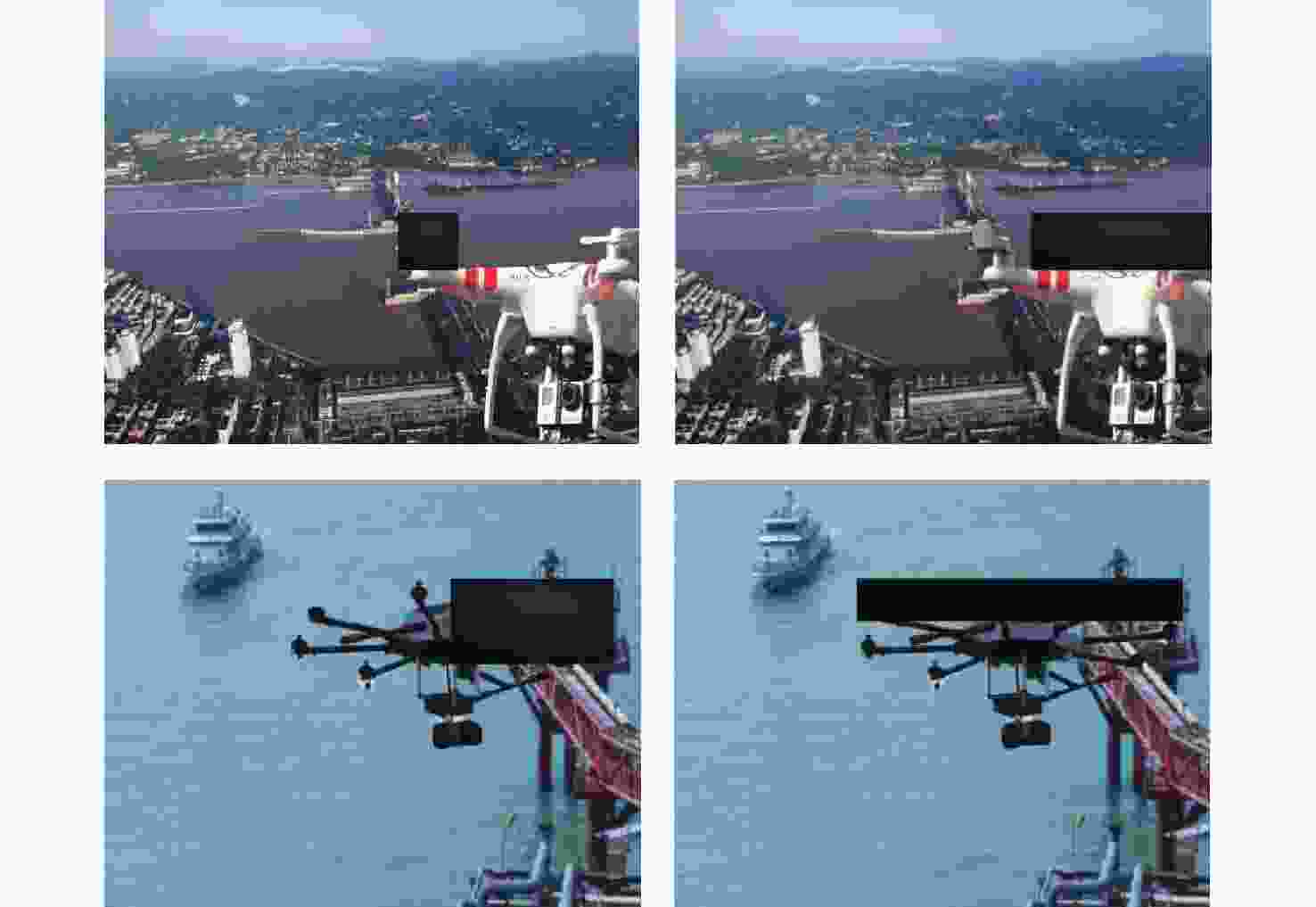

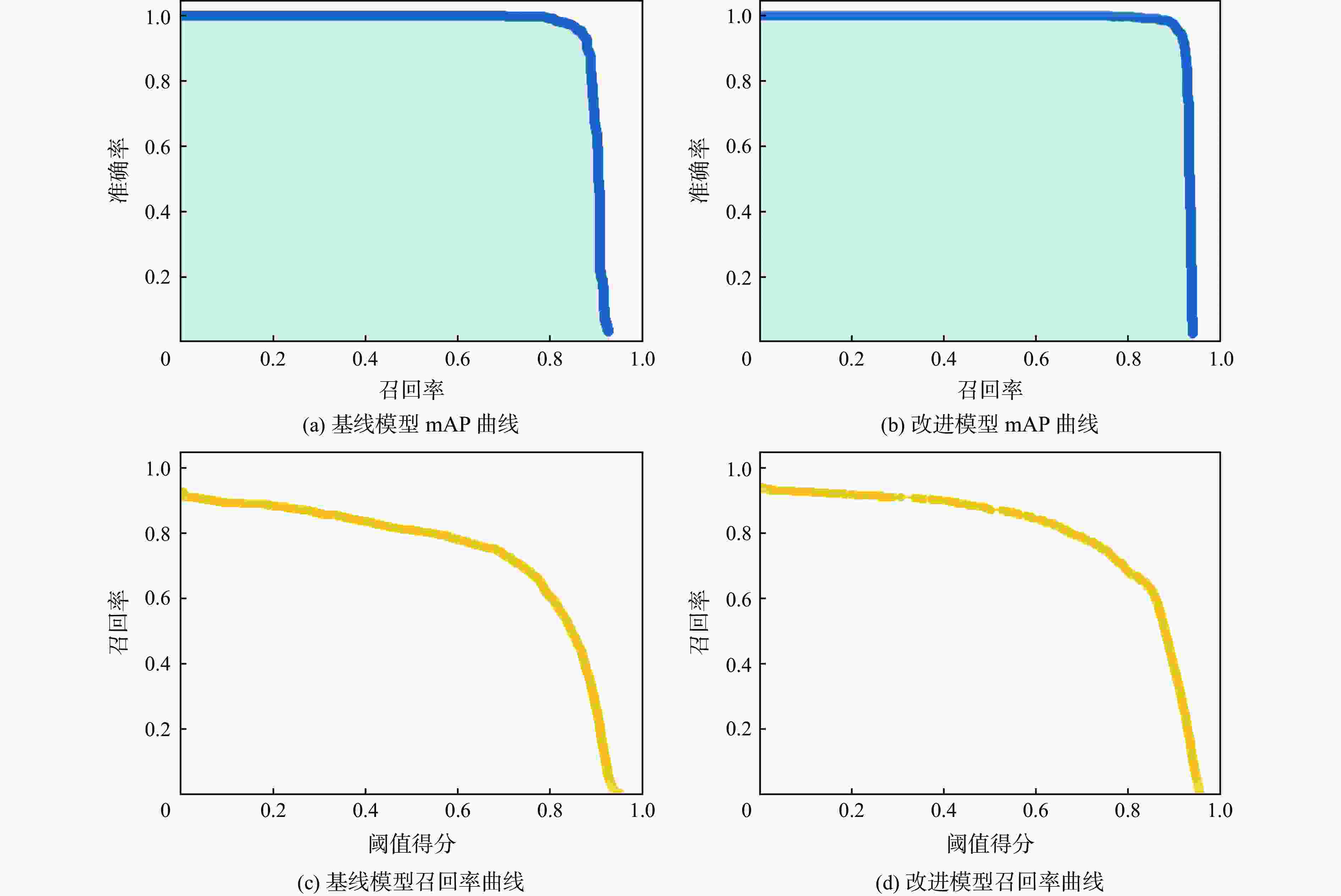

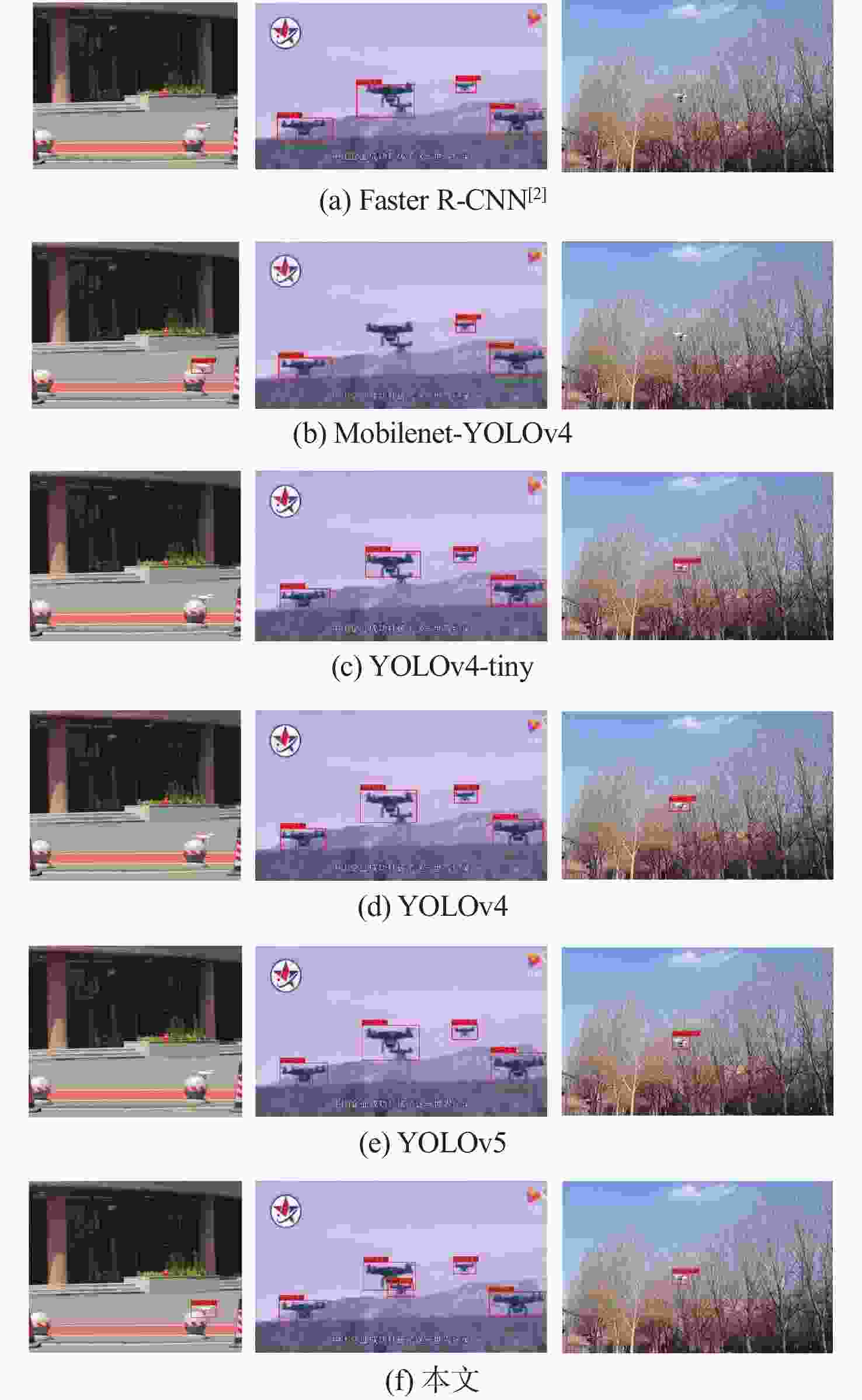

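To address the difficulty of identifying unmanned aerial vehicle (UAV) targets quickly and accurately in complex environments, where the background and the UAV have similar colors or targets are occluded and overlap, an improved UAV detection algorithm, STC-YOLOv5, is proposed. STC-YOLOv5 uses Swin Transformer as its backbone feature extraction network to improve robustness in complex environments. A convolutional block attention module (CBAM) is integrated into the feature fusion network of the YOLOv5 model to strengthen attention on the important features of UAV targets and suppress attention on unnecessary features. The loss function is optimized for the characteristics of UAVs: angle loss, distance loss, and shape loss are introduced into the complete intersection over union (CIoU) loss function to improve recognition accuracy for occluded UAV targets. Experiments on a self-built UAV flight dataset show that, when UAV targets are partially occluded, the improved STC-YOLOv5 algorithm achieves a mean average precision (mAP) of 92.98% and a recall of 87.09%, which are 2.88 and 6.03 percentage points higher than YOLOv5, respectively, so it can recognize UAVs quickly and accurately in complex environments.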

Keywords:

- unmanned aerial vehicle (UAV)
- object detection
- Swin Transformer
- convolutional block attention module (CBAM)
- loss function

Abstract: An improved unmanned aerial vehicle (UAV) detection algorithm, STC-YOLOv5, is proposed to address the difficulty of identifying UAV targets quickly and accurately under complex environmental conditions, such as a background similar in color to the UAV and occluded or overlapping targets. The backbone feature extraction network of STC-YOLOv5 employs Swin Transformer to make the network more robust in complex environments. The convolutional block attention module (CBAM) is integrated into the feature fusion network of the YOLOv5 model to increase attention on the key features of UAV targets and suppress attention on superfluous features. The loss function is optimized according to the characteristics of UAVs: angle loss, distance loss, and shape loss are introduced into the complete-IoU (CIoU) loss function, which improves recognition accuracy for occluded UAV targets. Experimental validation on an independently established UAV flight dataset shows that, for partially occluded UAV targets, the improved STC-YOLOv5 algorithm achieves a mean average precision (mAP) of 92.98% and a recall of 87.09%, which are 2.88 and 6.03 percentage points higher than those of YOLOv5, respectively. The results demonstrate that the algorithm can recognize UAVs quickly and accurately in challenging scenarios.
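
The abstract does not spell out how the angle, distance, and shape terms enter the loss. As a minimal sketch, the code below follows the SIoU formulation of reference [19], which is built from the same three terms. The function name `siou_style_loss`, the exponent `theta=4.0`, and the way the terms are combined with the IoU term are assumptions for illustration and may differ from the actual STC-YOLOv5 loss.

```python
import math


def siou_style_loss(pred, target, theta=4.0, eps=1e-9):
    """Illustrative SIoU-style loss for two (x1, y1, x2, y2) boxes.

    Assumes x2 > x1 and y2 > y1 for both boxes. This is a sketch of the
    formulation in reference [19], not the paper's exact loss.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Plain IoU of the two boxes.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Offset between the box centers and its length.
    dx = (tx1 + tx2 - px1 - px2) / 2.0
    dy = (ty1 + ty2 - py1 - py2) / 2.0
    sigma = math.hypot(dx, dy)

    # Angle term: sin(2 * alpha), where alpha is the angle between the
    # line joining the centers and the nearer coordinate axis.
    if sigma < eps:
        angle = 0.0
    else:
        sin_alpha = min(abs(dx), abs(dy)) / sigma
        angle = math.sin(2.0 * math.asin(sin_alpha))

    # Distance term, modulated by the angle term.
    gamma = 2.0 - angle
    rho_x = (dx / cw) ** 2
    rho_y = (dy / ch) ** 2
    distance = (1.0 - math.exp(-gamma * rho_x)) + (1.0 - math.exp(-gamma * rho_y))

    # Shape term: relative mismatch in width and height.
    omega_w = abs(pw - tw) / max(pw, tw)
    omega_h = abs(ph - th) / max(ph, th)
    shape = (1.0 - math.exp(-omega_w)) ** theta + (1.0 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + (distance + shape) / 2.0


if __name__ == "__main__":
    box = (0.0, 0.0, 2.0, 2.0)
    print(siou_style_loss(box, box))
    print(siou_style_loss(box, (1.0, 1.0, 3.0, 3.0)))
    print(siou_style_loss(box, (5.0, 5.0, 7.0, 7.0)))
```

In this sketch the loss stays informative even when the boxes do not overlap, because the distance term keeps growing as the centers move apart. That property is one plausible reason such terms help with partially occluded targets.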

Table 1. Ablation experiment results

| Swin Transformer | CBAM | Loss function | mAP/% | R/% | F1-score | P/% |
|---|---|---|---|---|---|---|
|  |  |  | 90.10 | 81.06 | 0.89 | 98.87 |
| √ |  |  | 88.85 | 81.64 | 0.90 | 99.34 |
|  | √ |  | 91.15 | 86.34 | 0.92 | 99.10 |
|  |  | √ | 89.47 | 84.46 | 0.91 | 99.59 |
| √ | √ | √ | 92.98 | 87.09 | 0.93 | 98.76 |
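
The F1-score column in Table 1 can be cross-checked against the precision (P) and recall (R) columns, assuming the standard definition F1 = 2PR / (P + R). The sketch below does this for the five rows; the helper name `f1_score` and the neutral row labels are illustrative choices.

```python
def f1_score(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    if p + r == 0:
        return 0.0
    return 2.0 * p * r / (p + r)


# (row label, P/%, R/%, reported F1-score), copied from Table 1.
TABLE_1 = [
    ("row 1 (YOLOv5 baseline)", 98.87, 81.06, 0.89),
    ("row 2 (one component)", 99.34, 81.64, 0.90),
    ("row 3 (one component)", 99.10, 86.34, 0.92),
    ("row 4 (one component)", 99.59, 84.46, 0.91),
    ("row 5 (all three)", 98.76, 87.09, 0.93),
]

if __name__ == "__main__":
    for label, p, r, reported in TABLE_1:
        computed = f1_score(p, r)
        print(f"{label:<26} computed={computed:.4f} reported={reported:.2f}")
```

Under that definition, each computed value rounds to the reported F1-score, so the three columns are mutually consistent.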

Table 2. Comparison of model complexity

| Swin Transformer | CBAM | Loss function | Parameters | T/s |
|---|---|---|---|---|
|  |  |  | 7.43×10⁶ | 2.04 |
| √ |  |  | 31.09×10⁶ | 2.06 |
|  | √ |  | 7.61×10⁶ | 2.02 |
|  |  | √ | 7.43×10⁶ | 2.01 |
| √ | √ | √ | 31.26×10⁶ | 2.02 |
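
To make the complexity figures in Table 2 easier to compare, the short sketch below computes the extra parameters and the size ratio of each variant relative to the 7.43×10⁶-parameter baseline. The variant labels assume that the single-component rows appear in column order (Swin Transformer, CBAM, loss function); the helper name `overhead` is hypothetical.

```python
# Parameter counts copied from Table 2; the row-to-component mapping of the
# single-component rows is an assumption based on column order.
BASELINE_PARAMS = 7.43e6
VARIANT_PARAMS = {
    "Swin Transformer only": 31.09e6,
    "CBAM only": 7.61e6,
    "Loss function only": 7.43e6,
    "All three": 31.26e6,
}


def overhead(params: float, baseline: float = BASELINE_PARAMS):
    """Return (extra parameters, ratio to baseline) for one variant."""
    return params - baseline, params / baseline


if __name__ == "__main__":
    for name, params in VARIANT_PARAMS.items():
        extra, ratio = overhead(params)
        print(f"{name:<22} +{extra / 1e6:6.2f}M  x{ratio:.2f}")
```

Read this way, nearly all of the added parameters come from the backbone replacement, while the T/s column in Table 2 stays close to 2 s for every variant.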

Table 3. Detection performance comparison of classical target detection algorithms

| Algorithm | R/% | mAP/% | F1-score | P/% | T/s |
|---|---|---|---|---|---|
| Faster R-CNN[2] | 69.78 | 83.35 | 0.81 | 95.85 | 6.68 |
| Mobilenet-YOLOv4 | 46.98 | 72.66 | 0.63 | 97.71 | 3.20 |
| YOLOv4-tiny | 74.59 | 79.71 | 0.83 | 94.76 | 3.09 |
| YOLOv4 | 67.58 | 80.94 | 0.79 | 95.72 | 3.78 |
| YOLOv5 | 81.06 | 90.10 | 0.89 | 98.87 | 2.04 |
| Ours | 87.09 | 92.98 | 0.93 | 98.76 | 2.02 |

Table 4. YOLOv8 detection performance comparison

| Algorithm | R/% | mAP/% | F1-score | P/% |
|---|---|---|---|---|
| YOLOv8 | 88.96 | 95.55 | 0.94 | 99.89 |
| YOLOv8+CBAM | 92.51 | 95.64 | 0.95 | 97.21 |

[1] ZHAO F, LOU W Z, FENG H Z, et al. Long-range aerial target detection technology of anti-UAV image guide[J]. Acta Armamentarii, 2023, 44(4): 1023-1033 (in Chinese).

[2] REN S Q, HE K M, GIRSHICK R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149. doi: 10.1109/TPAMI.2016.2577031

[3] LIU W, ANGUELOV D, ERHAN D, et al. SSD: single shot multibox detector[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2016: 21-37.

[4] YANG Y, WANG X S, ZHANG B. Radar detection of unmanned aerial vehicles based on time-frequency detection and polarization matching[J]. Journal of Electronics & Information Technology, 2021, 43(3): 509-515 (in Chinese). doi: 10.11999/JEIT200768

[5] YU X X, LIU Z, GENG Z Y, et al. A deep learning-based target recognition method for UAVs in no-fly zones[J]. Journal of Changchun University of Science and Technology (Natural Science Edition), 2018, 41(3): 95-101 (in Chinese).

[6] SHINDE C, LIMA R, DAS K. Multi-view geometry and deep learning based drone detection and localization[C]//Proceedings of the 5th Indian Control Conference. Piscataway: IEEE Press, 2019: 289-294.

[7] HU Y Y, WU X J, ZHENG G D, et al. Object detection of UAV for anti-UAV based on improved YOLOv3[C]//Proceedings of the Chinese Control Conference. Piscataway: IEEE Press, 2019: 8386-8390.

[8] CUI Y P, WANG Y H, HU J W. An improved dynamic small target detection method for YOLOv3[J]. Journal of Xi’an Electronic Science and Technology University, 2020, 47(3): 1-7 (in Chinese).

[9] LIU F, PU Z H, ZHANG S C. UAV multi-target tracking algorithm based on attention feature fusion[J]. Control and Decision, 2023, 38(2): 345-353 (in Chinese).

[10] YANG S D, CHEN H Y, XU J, et al. UAV visual tracking using deep convolutional features[J]. Control and Decision, 2023, 38(9): 2496-2504 (in Chinese).

[11] ZHANG R X, LI N, ZHANG X X, et al. Low-altitude UAV detection method based on optimized CenterNet[J]. Journal of Beijing University of Aeronautics and Astronautics, 2022, 48(11): 2335-2344 (in Chinese).

[12] XUE S, WANG Y B, LV Q Y, et al. Anti-obscurity target detection algorithm for anti-UAS based on YOLOX-drone[J]. Journal of Engineering Science, 2023, 45(9): 1539-1549 (in Chinese).

[13] BOCHKOVSKIY A, WANG C Y, LIAO H Y M. YOLOv4: optimal speed and accuracy of object detection[EB/OL]. (2020-04-23)[2023-10-08]. https://arxiv.org/abs/2004.10934?context=eess.

[14] HE K M, ZHANG X Y, REN S Q, et al. Spatial pyramid pooling in deep convolutional networks for visual recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1904-1916. doi: 10.1109/TPAMI.2015.2389824

[15] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 936-944.

[16] LIU Z, LIN Y T, CAO Y, et al. Swin Transformer: hierarchical vision Transformer using shifted windows[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2021: 9992-10002.

[17] VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need[C]//Proceedings of the 31st International Conference on Neural Information Processing Systems. New York: ACM, 2017: 6000-6010.

[18] WOO S, PARK J, LEE J Y, et al. CBAM: convolutional block attention module[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018: 3-19.

[19] GEVORGYAN Z. SIoU loss: more powerful learning for bounding box regression[EB/OL]. [2023-10-08]. https://arxiv.org/pdf/2205.12740.

[20] DU S J, ZHANG B F, ZHANG P, et al. An improved bounding box regression loss function based on CIOU loss for multi-scale object detection[C]//Proceedings of the IEEE 2nd International Conference on Pattern Recognition and Machine Learning. Piscataway: IEEE Press, 2021: 92-98.
