Online condition prediction of avionic devices based on sparse kernel incremental extreme learning machine


摘要:

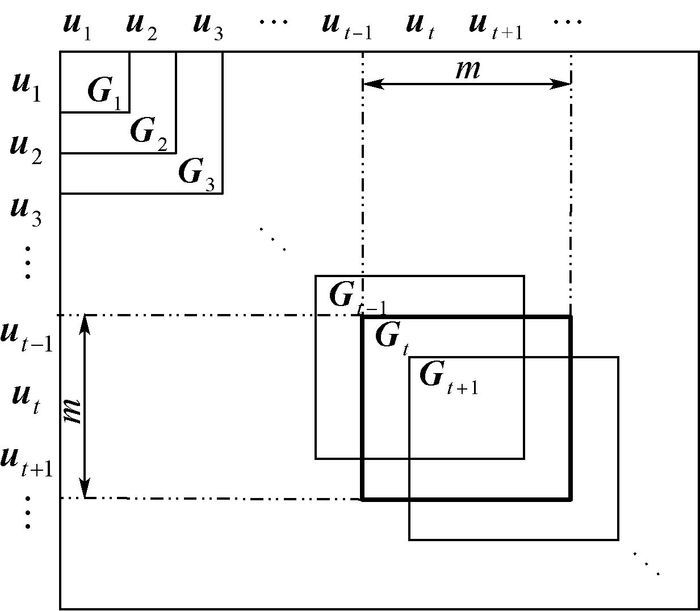

为实现对机载设备工作状态的在线状态预测,提出了一种稀疏核增量超限学习机(ELM)算法。针对核在线学习中核矩阵膨胀问题,基于瞬时信息测量提出了一个融合构造与修剪策略的两步稀疏化方法。通过在构造阶段最小化字典冗余,在修剪阶段最大化字典元素的瞬时条件自信息量,选择一个具有固定记忆规模的稀疏字典。针对基于核的增量超限学习机核权重更新问题,提出改进的减样学习算法,其可以实现字典中任一个核函数删除后剩余核函数Gram矩阵的逆矩阵的前向递推更新。通过对某型飞机发动机的状态预测,在预测数据长度等于20的条件下,本文提出的算法将预测的整体平均误差率下降到2.18%,相比于3种流形的核超限学习机在线算法,预测精度分别提升了0.72%、0.14%和0.13%。


Keywords:

- condition prediction

- kernel online learning

- sparsity measure

- extreme learning machine (ELM)

- active set

Abstract: To achieve online condition prediction for avionic devices, a sparse kernel incremental extreme learning machine (ELM) algorithm is presented. To address Gram matrix expansion in kernel online learning algorithms, a novel sparsification rule is presented that selects the dictionary by measuring the instantaneous learnable information contained in a data sample. The proposed sparsification method combines a constructive strategy and a pruning strategy in two stages. By minimizing the redundancy of the dictionary in the constructive phase and maximizing the instantaneous conditional self-information of the dictionary atoms in the pruning phase, a compact dictionary of predefined size is selected adaptively. For the kernel weight update of the kernel-based incremental ELM, an improved decremental learning algorithm is proposed that uses elementary matrix transformations and the block matrix inversion formula, which moderates the computational complexity of each iteration. In the proposed algorithm, once a sample is deleted from the previous dictionary, the inverse of the Gram matrix of the remaining samples can be updated directly. Experimental results on aero-engine condition prediction show that the proposed method reduces the overall average error rate to 2.18% when the prediction step is 20. Compared with three well-known kernel ELM online learning algorithms, the prediction accuracy is improved by 0.72%, 0.14% and 0.13%, respectively.
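The inverse updates mentioned in the abstract can be illustrated with the standard block matrix inversion identities. The following is a minimal sketch, not the authors' exact recursion: it assumes a regularized Gram matrix of the form A = K + I/γ and a Gaussian kernel on scalar inputs, and the names `add_sample`, `remove_sample`, `regularized_gram` and the toy data are hypothetical. It grows the inverse when a sample joins the dictionary, shrinks it when an arbitrary sample is deleted, and compares the result with a from-scratch inverse.

```python
import math


def invert(a):
    """Reference Gauss-Jordan inverse with partial pivoting (used only for checking)."""
    n = len(a)
    aug = [list(row) + [float(r == c) for c in range(n)] for r, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kernel(x, y, theta):
    """Gaussian kernel on scalars; this particular form is an assumption."""
    return math.exp(-((x - y) ** 2) / theta)


def add_sample(a_inv, k, v):
    """Inverse of [[A, k], [k^T, v]] from inv(A), for symmetric A (block inversion)."""
    n = len(a_inv)
    z = [sum(a_inv[r][c] * k[c] for c in range(n)) for r in range(n)]
    rho = v - sum(k[r] * z[r] for r in range(n))  # Schur complement of A
    grown = [[a_inv[r][c] + z[r] * z[c] / rho for c in range(n)] + [-z[r] / rho]
             for r in range(n)]
    grown.append([-zr / rho for zr in z] + [1.0 / rho])
    return grown


def remove_sample(a_inv, i):
    """Inverse of A with row and column i deleted, computed from inv(A) alone."""
    keep = [j for j in range(len(a_inv)) if j != i]
    d = a_inv[i][i]
    return [[a_inv[r][c] - a_inv[r][i] * a_inv[i][c] / d for c in keep] for r in keep]


def regularized_gram(xs, theta, gamma):
    """A = K + I/gamma for the samples xs."""
    return [[kernel(p, q, theta) + (1.0 / gamma if r == c else 0.0)
             for c, q in enumerate(xs)] for r, p in enumerate(xs)]


# Toy data and parameters (hypothetical values, not taken from the paper).
xs, theta, gamma = [0.0, 0.7, 1.5, 2.1, 3.0], 2.0, 2e3

a_inv = invert(regularized_gram(xs[:4], theta, gamma))   # dictionary of 4 samples
k_new = [kernel(x, xs[4], theta) for x in xs[:4]]
a_inv = add_sample(a_inv, k_new, kernel(xs[4], xs[4], theta) + 1.0 / gamma)
a_inv = remove_sample(a_inv, 0)                           # drop the oldest atom

direct = invert(regularized_gram(xs[1:], theta, gamma))  # recompute from scratch
max_gap = max(abs(u - w) for ur, wr in zip(a_inv, direct) for u, w in zip(ur, wr))
print(f"max deviation from direct inverse: {max_gap:.2e}")
```

Both updates cost O(m²) for a dictionary of m atoms, whereas recomputing the inverse costs O(m³); this is the kind of per-iteration saving the abstract refers to. `remove_sample` works for any index `i`, not only the oldest atom.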


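Table 1. Selected parameter settings in Experiment 1

| Algorithm | Regularization parameter γ | Kernel parameter θ | Other parameters |
| --- | --- | --- | --- |
| ReOS-ELM | 2×10³ | | L=80 |
| KB-IELM | 2×10³ | 2×10² | |
| SKIELM | 2×10³ | 2×10² | m=80 |

Note: L is the number of hidden-layer neurons in ReOS-ELM.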

Table 2. Prediction results for Mackey-Glass time series

| Algorithm | Training time/s | Training RMSE | Testing RMSE | Testing MPE | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| ReOS-ELM | 1.0625 | 0.0393 | 0.0368 | 0.0897 | 1.38 |
| KB-IELM | 38.9350 | 0.0126 | 0.0117 | 0.0276 | 0.98 |
| SKIELM | 0.5020 | 0.0153 | 0.0145 | 0.0312 | 1.14 |

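Table 3. Selected parameter settings in Experiment 2

| Item | FOKELM θ | FOKELM m | ALD-KOS-ELM θ | ALD-KOS-ELM σ | OKELM θ | OKELM m | SKIELM θ | SKIELM m |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Engine torque | 5×10⁴ | 30 | 5×10⁴ | 2×10⁻⁵ | 5×10⁴ | 30 | 5×10⁴ | 30 |
| Engine rotational speed | 1×10⁹ | 30 | 1×10⁹ | 2×10⁻⁸ | 1×10⁹ | 30 | 1×10⁹ | 30 |
| Exhaust gas temperature | 1×10⁷ | 30 | 1×10⁷ | 2×10⁻⁹ | 1×10⁷ | 30 | 1×10⁷ | 30 |
| Oil temperature | 2×10⁵ | 30 | 2×10⁵ | 2×10⁻⁹ | 2×10⁵ | 30 | 2×10⁵ | 30 |
| Oil pressure | 2×10⁴ | 30 | 2×10⁴ | 2×10⁻⁹ | 2×10⁴ | 30 | 2×10⁴ | 30 |
| Fuel instantaneous flux | 2×10⁵ | 30 | 2×10⁵ | 2×10⁻⁶ | 2×10⁵ | 30 | 2×10⁵ | 30 |

Note: σ is the threshold parameter of ALD-KOS-ELM; m is the time window width of the other three algorithms.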

Table 4. Condition prediction results for torque of aeroengine

| Algorithm | Training time/s | Training RMSE/(N·m) | Testing RMSE/(N·m) | Testing MPE/(N·m) | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| FOKELM | 0.0340 | 1.0167 | 0.9496 | 2.0444 | 10.87 |
| ALD-KOS-ELM | 0.0345 | 0.8392 | 0.9554 | 2.5814 | 9.84 |
| OKELM | 0.0344 | 0.7822 | 0.9629 | 2.6556 | 10.09 |
| SKIELM | 0.0380 | 0.7528 | 0.9292 | 2.5785 | 9.76 |

Table 5. Condition prediction results for rotational speed of aeroengine

| Algorithm | Training time/s | Training RMSE/(r·min⁻¹) | Testing RMSE/(r·min⁻¹) | Testing MPE/(r·min⁻¹) | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| FOKELM | 0.0366 | 98.9196 | 68.6210 | 157.50 | 0.22 |
| ALD-KOS-ELM | 0.0307 | 92.5160 | 77.0500 | 173.71 | 0.22 |
| OKELM | 0.0329 | 94.8461 | 66.9274 | 149.73 | 0.27 |
| SKIELM | 0.0359 | 88.5338 | 64.2821 | 149.02 | 0.19 |

Table 6. Condition prediction results for exhaust gas temperature of aeroengine

| Algorithm | Training time/s | Training RMSE/K | Testing RMSE/K | Testing MPE/K | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| FOKELM | 0.0349 | 5.2736 | 2.6321 | 4.8608 | 0.49 |
| ALD-KOS-ELM | 0.0652 | 3.6264 | 2.8406 | 5.6646 | 0.49 |
| OKELM | 0.0388 | 3.9290 | 3.1315 | 7.2749 | 0.55 |
| SKIELM | 0.0276 | 3.4817 | 2.4953 | 5.2869 | 0.47 |

Table 7. Condition prediction results for oil temperature of aeroengine

| Algorithm | Training time/s | Training RMSE/℃ | Testing RMSE/℃ | Testing MPE/℃ | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| FOKELM | 0.0236 | 0.1676 | 0.2038 | 0.3106 | 0.52 |
| ALD-KOS-ELM | 0.0721 | 0.0319 | 0.0863 | 0.1382 | 0.21 |
| OKELM | 0.0315 | 0.0253 | 0.0593 | 0.1003 | 0.14 |
| SKIELM | 0.0313 | 0.0260 | 0.0592 | 0.1000 | 0.14 |

Table 8. Condition prediction results for oil pressure of aeroengine

| Algorithm | Training time/s | Training RMSE/N | Testing RMSE/N | Testing MPE/N | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| FOKELM | 0.0275 | 0.0970 | 0.1088 | 0.1271 | 3.51 |
| ALD-KOS-ELM | 0.0637 | 0.0396 | 0.0337 | 0.0436 | 1.05 |
| OKELM | 0.0330 | 0.0361 | 0.0297 | 0.0386 | 0.92 |
| SKIELM | 0.0263 | 0.0343 | 0.0248 | 0.0332 | 0.75 |

Table 9. Condition prediction results for fuel instantaneous flux of aeroengine

| Algorithm | Training time/s | Training RMSE/(L·min⁻¹) | Testing RMSE/(L·min⁻¹) | Testing MPE/(L·min⁻¹) | Testing AER/% |
| --- | --- | --- | --- | --- | --- |
| FOKELM | 0.0285 | 2.6358 | 6.5232 | 22.7297 | 1.89 |
| ALD-KOS-ELM | 0.0196 | 3.1183 | 6.5642 | 18.4512 | 2.13 |
| OKELM | 0.0314 | 2.7072 | 6.5478 | 22.6886 | 1.94 |
| SKIELM | 0.0276 | 2.5672 | 6.1005 | 22.3279 | 1.82 |
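The summary figures in the abstract can be cross-checked against the testing AER columns of Tables 4 to 9. The sketch below assumes that the "whole average error rate" is the unweighted mean of the six per-parameter AER values; the page does not state the averaging rule, so this is an assumption. Under it, SKIELM averages about 2.19%, and its margins over FOKELM, ALD-KOS-ELM and OKELM are about 0.73, 0.14 and 0.13 percentage points, which agrees with the reported 2.18%, 0.72%, 0.14% and 0.13% to within rounding.

```python
# Testing AER/% per algorithm, in table order: torque, rotational speed,
# exhaust gas temperature, oil temperature, oil pressure, fuel flux.
aer = {
    "FOKELM":      [10.87, 0.22, 0.49, 0.52, 3.51, 1.89],
    "ALD-KOS-ELM": [9.84, 0.22, 0.49, 0.21, 1.05, 2.13],
    "OKELM":       [10.09, 0.27, 0.55, 0.14, 0.92, 1.94],
    "SKIELM":      [9.76, 0.19, 0.47, 0.14, 0.75, 1.82],
}

# Unweighted mean over the six engine parameters (assumed averaging rule).
mean_aer = {name: sum(values) / len(values) for name, values in aer.items()}

# Margin of SKIELM over each baseline, in percentage points.
gap = {name: mean_aer[name] - mean_aer["SKIELM"]
       for name in aer if name != "SKIELM"}

for name, value in mean_aer.items():
    print(f"{name:12s} mean AER = {value:.4f}%")
for name, value in gap.items():
    print(f"SKIELM margin over {name:12s} = {value:.4f} percentage points")
```

Because the margins are differences between error rates that are already percentages, they are best read as percentage points rather than as relative improvements.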

