Research and implementation of multi-size aerial image positioning method based on CNN

-

摘要:

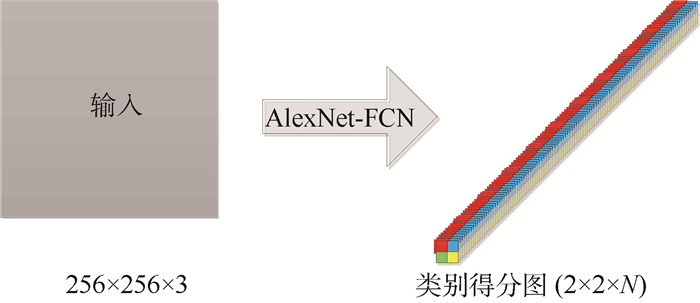

图像定位常用于无人机视觉导航,传统的无人机视觉导航广泛采用景象匹配导航方式,随着计算机技术的不断发展,深度学习技术为视觉导航的实现提供了新途径。以无人机的垂直侦查为背景,将飞行区域的航拍图像划分成大小相同的若干网格,每个网格代表一类区域,用网格图像制作数据集训练卷积神经网络(CNN)。基于AlexNet设计了一种融合显著性特征的全卷积网络模型,有效实现了一个基于CNN的多尺寸输入的滑动窗口分类器,并提出了一种邻域显著性参照定位策略来筛选分类结果,从而实现多尺寸航拍图像的定位。

Abstract:Image positioning is the key of UAV visual navigation. Scene matching navigation is widely used in traditional UAV visual navigation. With the continuous development of computer technology, deep learning technology provides a new way for the realization of visual navigation. In this context, this research mainly focuses on image localization based on convolution neural network. In this paper, based on the vertical reconnaissance of UAV, the aerial image of flight area is divided into several grids of the same size, each grid represents a class of regions, and the convolutional neural network (CNN) is trained by making data sets of grid images. This paper designs a fully convolutional network model based on AlexNet, which integrates saliency features. It effectively implements a sliding window classifier with CNN multi-size input, and proposes a neighborhood saliency reference positioning strategy to filter the classification results, so as to realize the positioning of multi-size aerial images.

-

表 1 查询图像集准确率

Table 1. Query image set accuracy

方法 输入 准确率/% 所用环境 平均运行时间/s AlexNet-FCN RGB 94.1 0.017 multi-channel

AlexNet-FCNRGB+显著特征

RGB+HOG

RGB+LBP95.4

94.6

94.2GPU: Titan X

Pytorch0.022

0.191

0.034SIFT

SURF

ORBRGB 63.3

73.6

61.9CPU: E5-2670 37.865

31.25

44.586表 2 航拍图像为512×512大小时top-k下的准确率

Table 2. Accuracy at top-k when aerial image size is 512×512

是否使用邻域显著性参照定位 区域所占完整网格比例/% 准确率/% k=1 k=2 k=3 k=4 否 100 67.3 39.5 26.6 19.9 ≥80 81.2 68.1 49.3 38.0 ≥60 83.9 74.1 56.0 43.6 ≥40 85.1 76.0 58.3 45.5 ≥20 85.6 77.1 59.8 46.7 ≥0 86.1 77.9 61.1 47.9 是 100 69.4 40.1 27.0 20.3 ≥80 83.1 69.0 49.9 38.2 ≥60 85.3 75.0 56.7 44.0 ≥40 86.3 77.0 59.0 45.8 ≥20 86.7 77.8 60.4 47.1 ≥0 87.1 78.5 61.6 48.3 表 3 航拍图像为768×768大小时top-k下的准确率

Table 3. Accuracy at top-k when aerial image size is 768×768

是否使用邻域显著性参照定位 区域所占完整网格比例/% 准确率/% k=1 k=2 k=3 k=4 否 100 65.7 62.1 59.6 55.4 ≥80 94.6 93.3 91.3 88.2 ≥60 95.7 94.9 93.3 90.5 ≥40 96.8 95.8 94.3 91.7 ≥20 97.2 96.2 94.8 92.3 ≥0 97.6 96.6 95.3 93.2 是 100 68.8 65.8 57.5 51.0 ≥80 95.7 94.2 89.2 84.3 ≥60 96.6 95.7 91.3 87.4 ≥40 97.6 96.5 92.4 88.8 ≥20 97.8 96.7 92.8 89.6 ≥0 98.1 97.1 93.5 90.0 表 4 航拍图像为512×512大小时top-k下的召回率

Table 4. Recall rate at top-k when aerial image size is 512×512

是否使用邻域显著性参照定位 区域所占完整网格比例/% 召回率/% k=1 k=2 k=3 k=4 否 100 67.3 79.1 79.8 79.8 ≥80 46.1 72.3 76.9 78.2 ≥60 29.7 51.3 57.1 58.9 ≥40 20.0 35.8 41.3 43.0 ≥20 14.8 26.8 31.6 33.1 ≥0 8.6 15.5 18.2 19.0 是 100 69.4 80.3 81.1 81.1 ≥80 47.2 73.4 77.9 78.9 ≥60 30.1 51.8 57.8 59.3 ≥40 20.3 36.2 41.8 43.3 ≥20 15.0 27.1 31.9 33.3 ≥0 8.7 15.6 18.3 19.1 表 5 航拍图像为768×768大小时top-k下的召回率

Table 5. Recall rate at top-k when aerial image size is 768×768

是否使用邻域显著性参照定位 区域所占完整网格比例/% 召回率/% k=1 k=2 k=3 k=4 否 100 21.5 40.5 58.1 72.3 ≥80 16.6 32.6 47.9 61.5 ≥60 12.6 25.0 36.9 47.7 ≥40 10.1 20.0 29.5 38.2 ≥20 8.1 16.0 23.7 30.8 ≥0 5.6 11.0 16.3 21.0 是 100 22.6 43.1 61.5 75.1 ≥80 16.8 32.9 48.5 62.3 ≥60 12.8 25.3 37.3 48.1 ≥40 10.2 20.1 29.7 38.5 ≥20 8.2 16.1 23.8 31.0 ≥0 5.6 11.1 16.4 21.3 -

[1] PREWITT J M S.Object enhancement and extraction[M]//LIPKIN B S, ROSENFELD A.Picture processing and psychopictorics.Salt Lake City: Academic Press, 1970: 75-149. [2] MARR D, HILDRETH E.Theory of edge detection[J].Proceedings of the Royal Society of London, 1980, 207(1167):187-217. http://d.old.wanfangdata.com.cn/OAPaper/oai_doaj-articles_849b7f238dda9761c6f2919ad1c04adf [3] CANNY J.A computational approach to edge detection[J].IEEE Transactions on Pattern Analysis and Machine Intelligence, 1986, 8(6):679-698. http://d.old.wanfangdata.com.cn/OAPaper/oai_doaj-articles_3d39d0b11988c5f90bf44b10d764f020 [4] HARRIS C, STEPHENS M.A combined corner and edge detector[C]//4th Alvey Vision Conference, 1988: 117-151. [5] SMITHSM, BRADYM.SUSAN-Anewapproachtolowlevel image processing[J].Intemational Joumal of Computer Vision, 1997, 23(1):45-78. doi: 10.1023/A%3A1007963824710 [6] MIKOLAJCZYK K, SCHMID C.An affine invariant interest point detector[C]//Proceedings of European Conference on Computer Vision.Berlin: Springer, 2002: 128-142. http://www.springerlink.com/content/d946wrklrq1fnpjh [7] LOWE D G.Distinctive image features from scale-invariant keypoints[J].International Journal of Computer Vision, 2004, 60(2):91-110. http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ025429678/ [8] BAY H, TUVTELLARS T, VAN GOOL L.SURF: Speeded up robust features[C]//Proceedings of the European Conference on Computer Vision.Berlin: Springer, 2006: 404-417. doi: 10.1007/11744023_32 [9] RUBLEE E, RABAUD V, KONOLIGE K, et al.ORB: An efficient alternative to SIFT or SURF[C]//IEEE International Conference on Computer Vision.Piscataway, NJ: IEEE Press, 2012: 2564-2571. http://www.researchgate.net/publication/221111151 [10] HAUSDORFF F.Grundzüge der mengenlehre[M].Von Veit:Grundzüge der mengenlehre, 1914:A34-A35. [11] HUTTENLOCHER D P, KLANDERMAN G A, RUCKLIDGE W J.Comparing images using the Hausdorff distance[J].IEEE Transactions on Pattern Analysis and Machine Intelligence, 1993, 15(9):850-863. http://cn.bing.com/academic/profile?id=99ceaba6c7dae17907a35f4c081e639e&encoded=0&v=paper_preview&mkt=zh-cn [12] DUBUISSON M P, JAIN A K.A modified Hausdorff distance for object matching[C]//Proceedings of the 12th International Conference on Pattern Recognition.Piscataway, NJ: IEEE Press, 1994: 566-568. http://www.researchgate.net/publication/3684010_A_modified_Hausdorff_distance_for_object_matching [13] ZHAO C, SHI W, DENG Y.A new Hausdorff distance for image matching[J].Pattern Recognition Letters, 2005, 26(5):581-586. doi: 10.1016-j.patrec.2004.09.022/ [14] BELONGIE S, MALIK J, PUZICHA J, et al.Shape context: A new descriptor for shape matching and object recognition[C]//Proceedings of the 13th International Conference on Neural Information Processing Systems.Cambridge: MIT Press, 2000: 831-837. http://www.researchgate.net/publication/2353535_Shape_Context_A_new_descriptor_for_shape_matching_and_object_recognition [15] BELONGIE S, MALIK J, PUZICHA J.Shape matching and object recognition using shape context[J].IEEE Transactions on Pattern Analysis and Machine Intelligence, 2002, 24(4):509-522. http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ0217363455/ [16] KRIZHEVSKY A, SUTSKEVER I, HINTON G E.ImageNet classification with deep convolutional neural networks[J].Communications of the ACM, 2012, 60(6):84-90. http://cn.bing.com/academic/profile?id=1dc5d01904d2c274eaec2181a93aa339&encoded=0&v=paper_preview&mkt=zh-cn [17] SERMANET P, EIGEN D, ZHANG X, et al.OverFeat: Integrated recognition, localization and detection using convolutional networks[EB/OL].(2013-12-21)[2019-02-10].https: //arxiv.org/abs/1312.6229. [18] HOU X, HAREL J, KOCH C.Image signature:Highlighting sparse salient regions[J].IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012, 34(1):194-201. http://d.old.wanfangdata.com.cn/NSTLQK/NSTL_QKJJ0224793953/ [19] OJALA T, PIETIKÄINEN M, MÄENPÄÄ T.Multiresolution gray-scale and rotation invariant texture classification with local binary patterns[J].IEEE Transactions on Pattern Analysis and Machine Intelligence, 2002, 24(7):971-987. http://cn.bing.com/academic/profile?id=bbab6ef5dd30d698066c963f5602e424&encoded=0&v=paper_preview&mkt=zh-cn -

下载:

下载: