Three-dimensional human pose estimation based on multi-source image weakly-supervised learning


摘要:

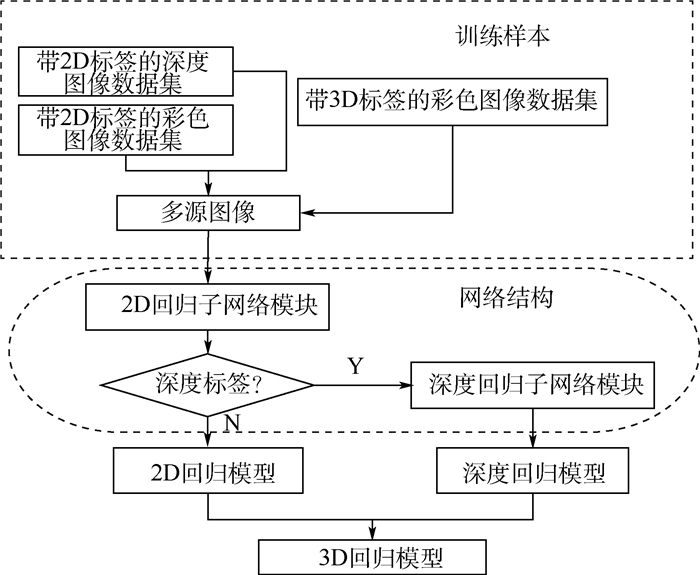

3D人体姿态估计是计算机视觉领域一大研究热点,针对深度图像缺乏深度标签,以及因姿态单一造成的模型泛化能力不高的问题,创新性地提出了基于多源图像弱监督学习的3D人体姿态估计方法。首先,利用多源图像融合训练的方法,提高模型的泛化能力;然后,提出弱监督学习方法解决标签不足的问题;最后,为了提高姿态估计的效果,改进了残差模块的设计。实验结果表明:改善的网络结构在训练时间下降约28%的情况下,准确率提高0.2%,并且所提方法不管是在深度图像还是彩色图像上,均达到了较好的估计结果。

Abstract:Three-dimensional human pose estimation is a hot research topic in the field of computer vision. Aimed at the lack of labels in depth images and the low generalization ability of models caused by single human pose, this paper innovatively proposes a method of 3D human pose estimation based on multi-source image weakly-supervised learning. This method mainly includes the following points. First, multi-source image fusion training method is used to improve the generalization ability of the model. Second, weakly-supervised learning approach is proposed to solve the problem of label insufficiency. Third, in order to improve the attitude estimation results, this paper improve the design of the residual module. The experimental results show that the regression accuracy from our improved network increases by 0.2%, and meanwhile the training time reduces by 28% compared with the original network. In a word, the proposed method obtains excellent estimation results with both depth images and color images.
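
The weakly-supervised idea can be illustrated with a minimal sketch. It assumes the method follows the geometric-constraint scheme of Ref. [13]: when an image has ground-truth depth, the predicted joint depths are regressed directly; when it has only 2D labels, the predicted 3D skeleton is instead penalized for inconsistent bone-length ratios within groups of bones that should scale together (for example, left and right forearms). The bone groups, the reference lengths, and the weight `geo_weight` are hypothetical inputs, and only the depth part of the loss is shown (2D heatmap supervision is omitted).

```python
import math
from typing import Dict, Optional, Sequence, Tuple

Joint3D = Tuple[float, float, float]
Bone = Tuple[int, int]  # (parent joint index, child joint index)


def bone_length(joints: Sequence[Joint3D], bone: Bone) -> float:
    """Euclidean length of one bone in the predicted 3D skeleton."""
    a, b = bone
    return math.dist(joints[a], joints[b])


def geometric_loss(
    joints: Sequence[Joint3D],
    groups: Sequence[Sequence[Bone]],
    ref_lengths: Dict[Bone, float],
) -> float:
    """Within each group, penalize the variance of (length / reference length).

    Bones in one group (e.g. left and right forearm) are assumed to keep a fixed
    length ratio, so their normalized lengths should be equal.
    """
    loss = 0.0
    for group in groups:
        ratios = [bone_length(joints, bone) / ref_lengths[bone] for bone in group]
        mean_ratio = sum(ratios) / len(ratios)
        loss += sum((r - mean_ratio) ** 2 for r in ratios) / len(ratios)
    return loss


def weakly_supervised_depth_loss(
    joints: Sequence[Joint3D],
    gt_depth: Optional[Sequence[float]],
    groups: Sequence[Sequence[Bone]],
    ref_lengths: Dict[Bone, float],
    geo_weight: float = 0.01,  # hypothetical weight, not taken from the paper
) -> float:
    """Depth regression when depth labels exist, geometric constraint otherwise."""
    pred_depth = [z for _, _, z in joints]
    if gt_depth is not None:
        # Fully supervised sample: mean squared error on joint depths.
        return sum((p - g) ** 2 for p, g in zip(pred_depth, gt_depth)) / len(gt_depth)
    # Weakly supervised sample: only 2D labels, so constrain the 3D skeleton shape.
    return geo_weight * geometric_loss(joints, groups, ref_lengths)
```

A symmetric skeleton gives zero geometric loss, while one forearm predicted twice as long as its partner is penalized even though no depth label is available.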


Key words:

- human pose estimation
- hourglass networks
- weakly-supervised learning
- multi-source image
- depth image


Table 1. Training images used by different models

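| Model | ITOP (depth) | K2HGD (depth) | MPII (color) | Human 3.6M (color) |
|---|---|---|---|---|
| Ref. [13] | | | √ | √ |
| M-H36M (ours) | | | √ | √ |
| I-H36M (ours) | √ | | | √ |
| IK-H36M (ours) | √ | √ | | √ |
| IKM-H36M (ours) | √ | √ | √ | √ |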
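
Table 1 can be read as a recipe for multi-source fusion training. The sketch below transcribes it into a mapping and interleaves samples from the selected sources so that every batch mixes depth and color images. The round-robin schedule and the helper names `mixed_stream` and `make_batch` are hypothetical; the actual sampling ratio between sources is not stated in this section.

```python
from itertools import cycle, islice
from typing import Dict, Iterator, List, Tuple

# Training sources per model, transcribed from Table 1.
TRAINING_SOURCES: Dict[str, List[str]] = {
    "Ref. [13]": ["MPII", "Human 3.6M"],
    "M-H36M": ["MPII", "Human 3.6M"],
    "I-H36M": ["ITOP", "Human 3.6M"],
    "IK-H36M": ["ITOP", "K2HGD", "Human 3.6M"],
    "IKM-H36M": ["ITOP", "K2HGD", "MPII", "Human 3.6M"],
}


def mixed_stream(datasets: Dict[str, List[str]], sources: List[str]) -> Iterator[Tuple[str, str]]:
    """Hypothetical round-robin over sources; shorter datasets are cycled.

    Every dataset in `sources` must be non-empty. Each item is (source, sample).
    """
    iters = {name: cycle(datasets[name]) for name in sources}
    for name in cycle(sources):
        yield name, next(iters[name])


def make_batch(stream: Iterator[Tuple[str, str]], batch_size: int) -> List[Tuple[str, str]]:
    """Take the next `batch_size` samples from the mixed stream."""
    return list(islice(stream, batch_size))
```

Because every source is visited in turn, a small depth dataset is repeated rather than drowned out by a large color dataset; a weighted schedule would be an equally valid design choice.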

Table 2. Comparison of accuracy, number of parameters, and training time among different models

| Model | Accuracy (%) | Parameters (×10⁶) | Training time (s/batch) |
|---|---|---|---|
| Ours (131~128) | 90.10 | 31.00 | 0.16 |
| Ref. [13] (131~256) | 92.26 | 116.60 | 0.29 |
| Ours (333~128) | 92.48 | 101.10 | 0.21 |
| Ours (333~256) | 92.92 | 390.00 | 0.41 |
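
The abstract's headline figures can be checked against Table 2 by comparing the Ref. [13] row (131~256) with the proposed 333~128 variant. The snippet only re-derives relative changes from the transcribed table values; it assumes that this pair of rows is the comparison the abstract refers to.

```python
from typing import Dict, Tuple

# Values transcribed from Table 2: (accuracy %, parameters x 10^6, seconds per batch).
TABLE2: Dict[str, Tuple[float, float, float]] = {
    "Ours (131~128)": (90.10, 31.00, 0.16),
    "Ref. [13] (131~256)": (92.26, 116.60, 0.29),
    "Ours (333~128)": (92.48, 101.10, 0.21),
    "Ours (333~256)": (92.92, 390.00, 0.41),
}


def compare(baseline: str, candidate: str) -> Dict[str, float]:
    """Accuracy gain in percentage points; parameter and time changes in percent."""
    acc_b, params_b, time_b = TABLE2[baseline]
    acc_c, params_c, time_c = TABLE2[candidate]
    return {
        "accuracy_gain_pp": acc_c - acc_b,
        "param_change_pct": 100 * (params_c - params_b) / params_b,
        "time_change_pct": 100 * (time_c - time_b) / time_b,
    }


result = compare("Ref. [13] (131~256)", "Ours (333~128)")
```

This gives an accuracy gain of about 0.22 percentage points, about 13.3% fewer parameters, and a per-batch training time reduction of about 27.6%, which the abstract rounds to 28%.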

Table 3. Comparison of accuracy, number of parameters, and training time for different numbers of stacked hourglass networks

| Model | Accuracy (%) | Parameters (×10⁶) | Training time (s/batch) |
|---|---|---|---|
| Ref. [13] (2 stacks) | 92.26 | 116.60 | 0.29 |
| Ours (2 stacks) | 92.48 | 101.10 | 0.21 |
| Ours (4 stacks) | 92.83 | 185.30 | 0.32 |
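
Table 3 varies the number of stacked hourglass modules. In stacked hourglass networks (Ref. [12]) every stack outputs its own joint heatmaps, and each output is compared with the same target heatmap (intermediate supervision), so adding stacks adds both parameters and loss terms. The sketch below shows that loss for a single joint using plain Python lists; the Gaussian target with `sigma=1.0` and equal weighting of the stacks are assumptions, not details confirmed for this paper.

```python
import math
from typing import List, Sequence

Heatmap = List[List[float]]


def gaussian_heatmap(height: int, width: int, cx: float, cy: float, sigma: float = 1.0) -> Heatmap:
    """Target heatmap: a 2D Gaussian with peak value 1.0 at the joint location (cx, cy)."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2)) for x in range(width)]
        for y in range(height)
    ]


def heatmap_mse(pred: Heatmap, target: Heatmap) -> float:
    """Mean squared error over all pixels of one heatmap."""
    total = sum(
        (p - t) ** 2
        for pred_row, target_row in zip(pred, target)
        for p, t in zip(pred_row, target_row)
    )
    return total / (len(target) * len(target[0]))


def intermediate_supervision_loss(stack_outputs: Sequence[Heatmap], target: Heatmap) -> float:
    """Sum of per-stack losses: every hourglass stack is supervised by the same target."""
    return sum(heatmap_mse(out, target) for out in stack_outputs)
```

In Table 3, moving from 2 to 4 stacks raises accuracy by 0.35 percentage points (92.48% to 92.83%) while training time per batch grows by about 52% (0.21 to 0.32 s).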

Table 4. 3D human pose estimation results of different trained models on Human 3.6M test images, evaluated with the PDJ criterion

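All values are PDJ accuracy (%).

| Method | Ankle | Knee | Hip | Wrist | Elbow | Shoulder | Head | Mean |
|---|---|---|---|---|---|---|---|---|
| Ref. [13] | 65.00 | 82.90 | 89.27 | 77.91 | 86.46 | 94.73 | 94.42 | 84.38 |
| M-H36M (ours) | 75.07 | 90.33 | 94.69 | 80.92 | 86.24 | 96.38 | 94.69 | 88.33 |
| I-H36M (ours) | 71.52 | 88.03 | 92.52 | 76.46 | 82.55 | 94.07 | 95.30 | 85.78 |
| IK-H36M (ours) | 79.23 | 91.60 | 94.40 | 77.61 | 81.40 | 92.69 | 93.34 | 87.18 |
| IKM-H36M (ours) | 74.98 | 91.06 | 94.33 | 78.65 | 85.21 | 95.77 | 95.16 | 87.88 |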
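
Table 4 uses the PDJ (percentage of detected joints) criterion introduced with DeepPose (Ref. [9]): a predicted joint counts as detected when its distance to the ground truth is within a fraction of the torso diameter. The fraction used in this paper is not given in this section, so `fraction=0.2` below is an assumed value. The second helper reproduces the Mean column, which is the unweighted average of the seven joint-group scores.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, ...]  # 2D or 3D joint coordinates


def pdj(
    preds: Sequence[Point],
    gts: Sequence[Point],
    torso_diameters: Sequence[float],
    fraction: float = 0.2,  # assumed threshold, not stated in this section
) -> float:
    """Percentage of samples whose predicted joint lies within fraction * torso diameter."""
    hits = sum(
        1
        for p, g, d in zip(preds, gts, torso_diameters)
        if math.dist(p, g) <= fraction * d
    )
    return 100.0 * hits / len(gts)


def mean_pdj(per_joint: Dict[str, float]) -> float:
    """Unweighted mean over joint groups, as in the Mean column of Table 4."""
    return sum(per_joint.values()) / len(per_joint)


# Ref. [13] row of Table 4.
REF13 = {"ankle": 65.00, "knee": 82.90, "hip": 89.27, "wrist": 77.91,
         "elbow": 86.46, "shoulder": 94.73, "head": 94.42}
```

Applying `mean_pdj` to any row of Table 4 reproduces its Mean entry to two decimals.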

[1] PARK S, HWANG J, KWAK N. 3D human pose estimation using convolutional neural networks with 2D pose information[C]//European Conference on Computer Vision. Berlin: Springer, 2016: 156-169.

[2] YANG W, OUYANG W, LI H, et al. End-to-end learning of deformable mixture of parts and deep convolutional neural networks for human pose estimation[C]//IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2016: 3073-3082.

[3] WANG K Z, ZHAI S F, CHENG H, et al. Human pose estimation from depth images via inference embedded multi-task learning[C]//Proceedings of the 2016 ACM on Multimedia Conference. New York: ACM, 2016: 1227-1236.

[4] SHEN W, DENG K, BAI X, et al. Exemplar-based human action pose correction[J]. IEEE Transactions on Cybernetics, 2014, 44(7): 1053-1066. doi: 10.1109/TCYB.2013.2279071

[5] GULER R A, NEVEROVA N, KOKKINOS I. DensePose: Dense human pose estimation in the wild[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2018: 7297-7306.

[6] RHODIN H, SALZMANN M, FUA P. Unsupervised geometry-aware representation for 3D human pose estimation[C]//European Conference on Computer Vision. Berlin: Springer, 2018: 765-782. doi: 10.1007/978-3-030-01249-6_46

[7] OMRAN M, LASSNER C, PONS-MOLL G, et al. Neural body fitting: Unifying deep learning and model based human pose and shape estimation[C]//International Conference on 3D Vision. Piscataway, NJ: IEEE Press, 2018: 484-494.

[8] HAQUE A, PENG B, LUO Z, et al. Towards viewpoint invariant 3D human pose estimation[C]//European Conference on Computer Vision. Berlin: Springer, 2016: 160-177. doi: 10.1007/978-3-319-46448-0_10

[9] TOSHEV A, SZEGEDY C. DeepPose: Human pose estimation via deep neural networks[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2014: 1653-1660.

[10] CAO Z, SIMON T, WEI S E, et al. Realtime multi-person 2D pose estimation using part affinity fields[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2017: 7291-7299.

[11] WEI S E, RAMAKRISHNA V, KANADE T, et al. Convolutional pose machines[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2016: 4724-4732.

[12] NEWELL A, YANG K, DENG J. Stacked hourglass networks for human pose estimation[C]//European Conference on Computer Vision. Berlin: Springer, 2016: 483-499. doi: 10.1007/978-3-319-46484-8_29

[13] ZHOU X Y, HUANG Q X, SUN X, et al. Towards 3D human pose estimation in the wild: A weakly-supervised approach[C]//IEEE International Conference on Computer Vision. Piscataway, NJ: IEEE Press, 2017: 398-407.

[14] JOHNSON S, EVERINGHAM M. Clustered pose and nonlinear appearance models for human pose estimation[C]//Proceedings of the 21st British Machine Vision Conference, 2010: 12.1-12.11.

[15] ANDRILUKA M, PISHCHULIN L, GEHLER P, et al. 2D human pose estimation: New benchmark and state of the art analysis[C]//IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2014: 3686-3693.

[16] IONESCU C, PAPAVA D, OLARU V, et al. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(7): 1325-1339.

[17] HAN X F, LEUNG T, JIA Y Q, et al. MatchNet: Unifying feature and metric learning for patch-based matching[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2015: 3279-3286.

[18] XU G H, LI M, CHEN L T, et al. Human pose estimation method based on single depth image[J]. IET Computer Vision, 2018, 12(6): 919-924. doi: 10.1049/iet-cvi.2017.0536

[19] LI S J, CHAN A B. 3D human pose estimation from monocular images with deep convolutional neural network[C]//Asian Conference on Computer Vision. Berlin: Springer, 2014: 332-347. doi: 10.1007/978-3-319-16808-1_23

[20] GHEZELGHIEH M F, KASTURI R, SARKAR S. Learning camera viewpoint using CNN to improve 3D body pose estimation[C]//International Conference on 3D Vision. Piscataway, NJ: IEEE Press, 2016: 685-693.

[21] CHEN C H, RAMANAN D. 3D human pose estimation = 2D pose estimation + matching[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2017: 5759-5767.

[22] POPA A I, ZANFIR M, SMINCHISESCU C. Deep multitask architecture for integrated 2D and 3D human sensing[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2017: 4714-4723.

[23] COLLOBERT R, KAVUKCUOGLU K, FARABET C. Torch7: A Matlab-like environment for machine learning[C]//Conference and Workshop on Neural Information Processing Systems, 2011: 1-6.

[24] KOLOTOUROS N, PAVLAKOS G, DANIILIDIS K. Convolutional mesh regression for single-image human shape reconstruction[C]//IEEE Conference on Computer Vision and Pattern Recognition. Piscataway, NJ: IEEE Press, 2019: 4510-4519.

[25] LUO C X, CHU X, YUILLE A. OriNet: A fully convolutional network for 3D human pose estimation[C]//British Machine Vision Conference, 2018: 321-333.
