-

摘要:

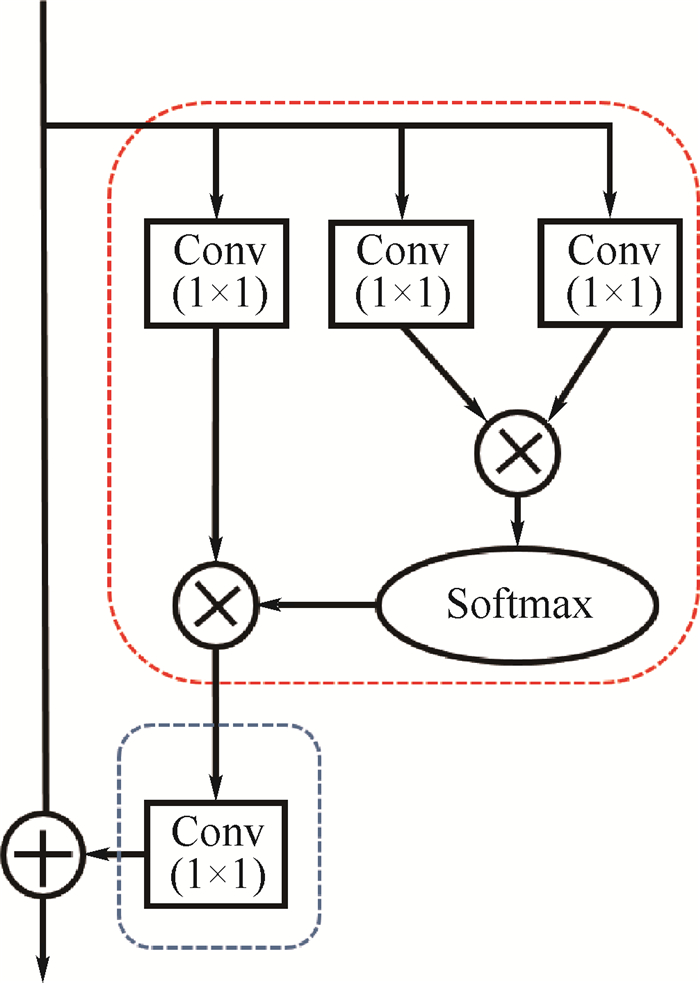

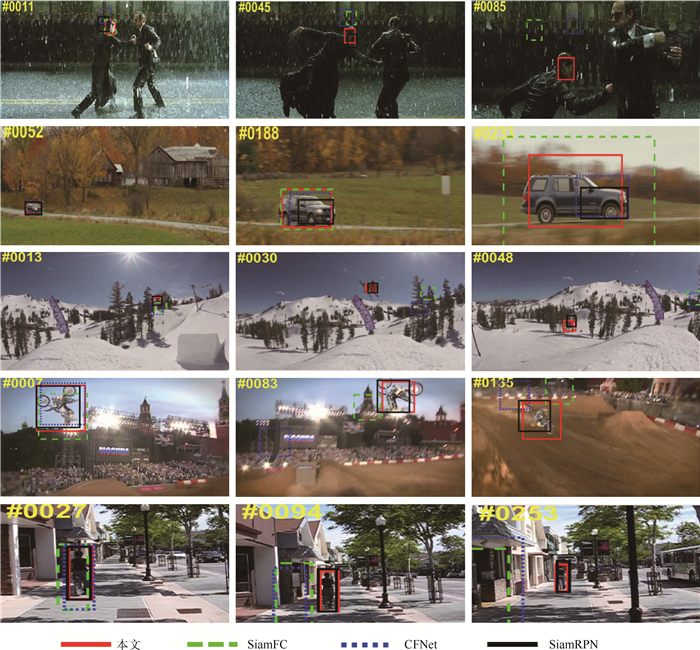

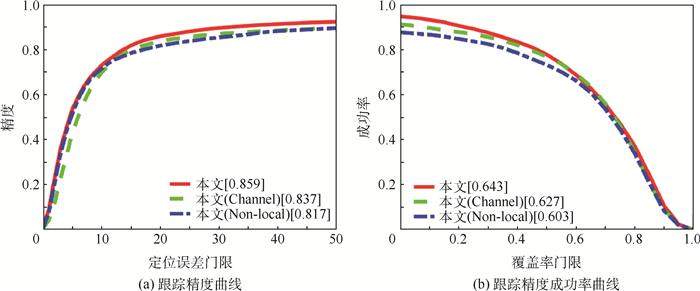

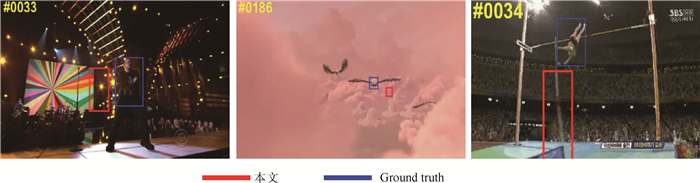

针对全卷积孪生网络(SiamFC)在相似物体干扰及目标发生大尺度外观变化时容易跟踪失败的问题,提出了一种基于级联注意力机制的孪生网络视觉跟踪算法。首先,在网络的最后一层加入非局部注意力模块,从空间维度得到关于目标区域的自注意特征图,并与最后一层特征进行相加运算。其次,考虑到不同通道特征对不同目标和各类场景的响应差异,引入通道注意力模块实现对特征通道的重要性选择。为了进一步提高跟踪的鲁棒性,将其与SiamFC算法进行加权融合,得到最终的响应图。最后,将提出的孪生网络模型在GOT10k和VID数据集上进行联合训练,进一步提升模型的表达力与判别力。实验结果表明:所提算法相比于SiamFC,在跟踪精度上提高了9.3%,在成功率上提高了5.4%。

Abstract: The fully convolutional Siamese network (SiamFC) tends to lose the target when it is distracted by similar objects or when the target undergoes large appearance changes. To address this problem, this paper proposes a Siamese-network visual tracking algorithm based on a cascaded attention mechanism. First, a non-local attention module is added to the last layer of the network. It produces a spatial self-attention feature map of the target region, which is then added to the last-layer features. Second, because different feature channels respond differently to different targets and scenes, a channel attention module is introduced to weight the feature channels by importance. To further improve tracking robustness, the proposed model is combined with SiamFC through weighted fusion to obtain the final response map. Finally, the proposed Siamese network is jointly trained on the GOT10k and VID datasets to further improve its representational and discriminative power. Experimental results show that, compared with SiamFC, the proposed algorithm improves tracking precision by 9.3% and success rate by 5.4%.
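The cascade described in the abstract can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the embedded-Gaussian form of the non-local block [18] and a squeeze-and-excitation style channel attention [19], applies both to the template and search features, and fuses the resulting cross-correlation map with the plain SiamFC map by a weighted sum. The channel sizes, the reduction ratio `R`, the fusion weight `lam`, the random weights, and all function names (`non_local`, `channel_attention`, `xcorr`, `cascaded_attention`, `fused_response`) are hypothetical; the paper's actual layer sizes and fusion weight are not given in this section.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)    # shift by the max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def non_local(x, w_theta, w_phi, w_g, w_z):
    """Embedded-Gaussian non-local block with residual addition.
    x: (C, H, W); w_theta, w_phi, w_g: (C, Ci); w_z: (Ci, C)."""
    c, h, w = x.shape
    flat = x.reshape(c, h * w).T                # (N, C): one row per spatial position
    theta, phi, g = flat @ w_theta, flat @ w_phi, flat @ w_g
    attn = softmax(theta @ phi.T, axis=1)       # (N, N) pairwise weights, each row sums to 1
    y = attn @ g                                # every position aggregates all positions
    out = y @ w_z + flat                        # project back to C channels, add input feature
    return out.T.reshape(c, h, w)

def channel_attention(x, w1, w2):
    """SE-style channel reweighting. x: (C, H, W); w1: (C//r, C); w2: (C, C//r)."""
    s = x.mean(axis=(1, 2))                     # squeeze: global average pooling -> (C,)
    e = 1.0 / (1.0 + np.exp(-(w2 @ np.maximum(w1 @ s, 0.0))))  # excite: weights in (0, 1)
    return x * e[:, None, None]

def xcorr(z, x):
    """SiamFC-style cross-correlation of template z (C, hz, wz) over search x (C, hx, wx)."""
    _, hz, wz = z.shape
    _, hx, wx = x.shape
    resp = np.empty((hx - hz + 1, wx - wz + 1))
    for u in range(resp.shape[0]):
        for v in range(resp.shape[1]):
            resp[u, v] = np.sum(z * x[:, u:u + hz, v:v + wz])
    return resp

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
C, CI, R = 8, 4, 2
W_THETA, W_PHI, W_G = (0.1 * rng.normal(size=(C, CI)) for _ in range(3))
W_Z = 0.1 * rng.normal(size=(CI, C))
W1 = 0.1 * rng.normal(size=(C // R, C))
W2 = 0.1 * rng.normal(size=(C, C // R))

def cascaded_attention(f):
    """Spatial (non-local) attention followed by channel attention."""
    return channel_attention(non_local(f, W_THETA, W_PHI, W_G, W_Z), W1, W2)

def fused_response(z, x, lam=0.5):
    """Weighted sum of the attention-branch and plain SiamFC response maps."""
    r_att = xcorr(cascaded_attention(z), cascaded_attention(x))
    r_base = xcorr(z, x)
    return lam * r_att + (1.0 - lam) * r_base

z = rng.normal(size=(C, 6, 6))      # template features
x = rng.normal(size=(C, 22, 22))    # search-region features
response = fused_response(z, x)
peak = np.unravel_index(np.argmax(response), response.shape)
print(response.shape, peak)
```

The 6×6 template and 22×22 search sizes follow the feature-map sizes of the original SiamFC, which give a 17×17 response map; the sketch uses only 8 channels so it runs quickly. Setting `lam` to 0 recovers the plain SiamFC response, and setting it to 1 keeps only the attention branch.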
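Precision and success-rate gains such as those quoted above are commonly computed with the OTB protocol [17]; the abstract does not name the benchmark, so the pure-Python sketch below assumes that protocol. Precision is taken as the fraction of frames whose predicted center lies within 20 pixels of the ground-truth center, and success as the area under the success plot, i.e. the mean fraction of frames whose IoU exceeds each threshold in 0, 0.05, ..., 1. Boxes are assumed to be `(x, y, w, h)` tuples, and the function names are hypothetical.

```python
def center(box):
    """Center of an (x, y, w, h) box."""
    x, y, w, h = box
    return x + w / 2.0, y + h / 2.0

def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def precision(pred, gt, threshold=20.0):
    """Fraction of frames whose center location error is at most `threshold` pixels."""
    hits = 0
    for p, g in zip(pred, gt):
        (px, py), (gx, gy) = center(p), center(g)
        if ((px - gx) ** 2 + (py - gy) ** 2) ** 0.5 <= threshold:
            hits += 1
    return hits / len(gt)

def success_auc(pred, gt, steps=21):
    """Mean success rate over IoU thresholds 0, 1/(steps-1), ..., 1."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)

# Toy sequence: one exact frame, one frame shifted by 5 pixels, one lost frame.
gt = [(0, 0, 10, 10)] * 3
pred = [(0, 0, 10, 10), (5, 0, 10, 10), (40, 40, 10, 10)]
print(precision(pred, gt), success_auc(pred, gt))
```

In this toy sequence the shifted frame still counts for precision (5-pixel error) but has an IoU of only 1/3, so `precision` gives 2/3 while `success_auc` gives 9/21 ≈ 0.43; the two metrics can therefore move by different amounts, as they do in the reported results.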

Keywords:

- visual tracking
- Siamese network
- non-local attention
- channel attention
- model integration

Table 1. Comparison of tracking speeds of deep learning algorithms

| Algorithm | Proposed | HCF | CFNet | DCFNet | SiamFC |
| --- | --- | --- | --- | --- | --- |
| Tracking speed/(frame·s⁻¹) | 58 | 10.2 | 78.4 | 65.9 | 83.7 |
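The speeds in Table 1 convert to per-frame latency as 1000 / fps milliseconds. At 58 frames/s the proposed tracker spends about 17.2 ms per frame, roughly 5.3 ms more than SiamFC at about 11.9 ms, while HCF needs about 98 ms per frame. The short sketch below reproduces this arithmetic from the table values; the dictionary and function names are illustrative only.

```python
# Tracking speeds from Table 1, in frames per second.
SPEEDS_FPS = {"Proposed": 58.0, "HCF": 10.2, "CFNet": 78.4, "DCFNet": 65.9, "SiamFC": 83.7}

def ms_per_frame(fps):
    """Average processing time per frame, in milliseconds."""
    return 1000.0 / fps

baseline = ms_per_frame(SPEEDS_FPS["SiamFC"])
for name, fps in SPEEDS_FPS.items():
    t = ms_per_frame(fps)
    # Latency of each tracker and its difference from SiamFC.
    print(f"{name:8s} {fps:5.1f} fps  {t:6.2f} ms/frame  {t - baseline:+6.2f} ms vs SiamFC")
```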

References:

[1] SMEULDERS A W M, CHU D M, CUCCHIARA R, et al. Visual tracking: An experimental survey[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(7): 1442-1468.

[2] BOLME D S, BEVERIDGE J R, DRAPER B A, et al. Visual object tracking using adaptive correlation filters[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2010: 2544-2550.

[3] HENRIQUES J F, CASEIRO R, MARTINS P, et al. Exploiting the circulant structure of tracking-by-detection with kernels[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2012: 702-715.

[4] HENRIQUES J F, CASEIRO R, MARTINS P, et al. High-speed tracking with kernelized correlation filters[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(3): 583-596.

[5] DANELLJAN M, KHAN F S, FELSBERG M, et al. Adaptive color attributes for real-time visual tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2014: 1090-1097.

[6] GIRSHICK R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2014: 580-587.

[7] LONG J, SHELHAMER E, DARRELL T. Fully convolutional networks for semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2015: 3431-3440.

[8] RAWAT W, WANG Z. Deep convolutional neural networks for image classification: A comprehensive review[J]. Neural Computation, 2017, 29(9): 2352-2449.

[9] NAM H, HAN B. Learning multi-domain convolutional neural networks for visual tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 4293-4302.
[10] DANELLJAN M, HAGER G, KHAN F S, et al. Convolutional features for correlation filter based visual tracking[C]//Proceedings of the IEEE International Conference on Computer Vision Workshops. Piscataway: IEEE Press, 2015: 58-66.

[11] DANELLJAN M, ROBINSON A, KHAN F S, et al. Beyond correlation filters: Learning continuous convolution operators for visual tracking[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2016: 472-488.

[12] BHAT G, JOHNANDER J, DANELLJAN M, et al. Unveiling the power of deep tracking[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018: 483-498.

[13] DANELLJAN M, BHAT G, KHAN F S, et al. ECO: Efficient convolution operators for tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 6931-6939.

[14] BERTINETTO L, VALMADRE J, HENRIQUES J F, et al. Fully convolutional siamese networks for object tracking[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2016: 850-865.

[15] LI B, YAN J Y, WU W, et al. High performance visual tracking with siamese region proposal network[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 8971-8980.

[16] GUO Q, FENG W, ZHOU C, et al. Learning dynamic siamese network for visual object tracking[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 1781-1789.

[17] WU Y, LIM J, YANG M H. Object tracking benchmark[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1834-1848.

[18] WANG X, GIRSHICK R, GUPTA A, et al. Non-local neural networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7794-7803.
[19] HU J, SHEN L, SUN G. Squeeze-and-excitation networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7132-7141.

[20] MA C, HUANG J B, YANG X K, et al. Hierarchical convolutional features for visual tracking[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2015: 3074-3082.

[21] BERTINETTO L, VALMADRE J, GOLODETZ S, et al. Staple: Complementary learners for real-time tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 1401-1409.

[22] LI Y, ZHU J. A scale adaptive kernel correlation filter tracker with feature integration[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2014: 254-265.

[23] MA C, YANG X, ZHANG C, et al. Long-term correlation tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2015: 5388-5396.

[24] VALMADRE J, BERTINETTO L, HENRIQUES J, et al. End-to-end representation learning for correlation filter based tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 5000-5008.

[25] WANG Q, GAO J, XING J L, et al. DCFNet: Discriminant correlation filters network for visual tracking[EB/OL]. (2017-04-13)[2019-11-20].

[26] ZHANG J, MA S, SCLAROFF S. MEEM: Robust tracking via multiple experts using entropy minimization[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2014: 188-203.
