Unmanned vehicle positioning and mapping method based on multi-constraint factor graph optimization


摘要:

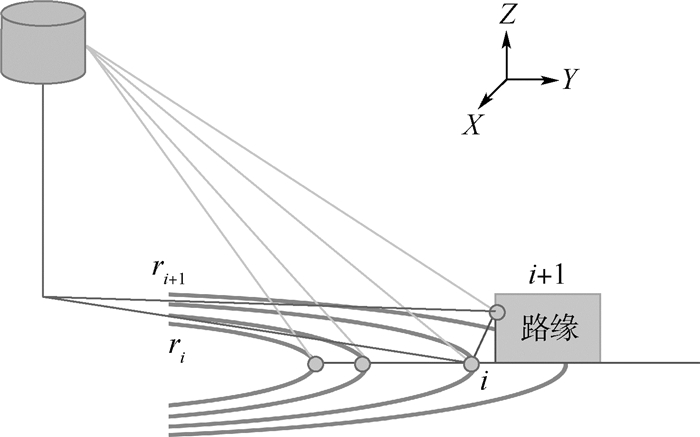

针对目前在特定场景下应用的低速无人车定位系统极度依赖全球导航卫星系统(GNSS),存在定位精度不高、漂移误差大、受环境影响严重等问题,提出一种低成本、高精度的无人车定位与建图方法。该方法基于三维激光定位与建图(SLAM)技术。首先,使用点云主成分分析(PCA)实现基于特征匹配的激光里程计;其次,将GNSS位置信息、点云分割聚类得到的地平面和点云聚类特征作为位姿约束分别加入图优化框架,消除激光里程计的累积误差;最后,得到最优位姿和大规模场景的点云地图,以实现无人车的自主定位导航。利用包含大型户外城市街道环境的KITTI数据集对所提出的SLAM算法进行了评估,结果表明:系统在3km运动距离情况下定位偏差可控制在1.5 m以下,在局部精度和全局一致性方面均优于其他里程计系统,为无人车的定位提供了新思路。

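Keywords:

- graph optimization
- three-dimensional laser Simultaneous Localization and Mapping (SLAM)
- point cloud segmentation
- Principal Component Analysis (PCA)
- unmanned vehicle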

Abstract: Positioning systems for low-speed unmanned vehicles operating in specific scenarios currently depend heavily on the Global Navigation Satellite System (GNSS) and therefore suffer from low positioning accuracy, large drift error, and strong sensitivity to the environment. To address these problems, a low-cost, high-precision positioning and mapping method for unmanned vehicles is proposed, based on three-dimensional laser Simultaneous Localization and Mapping (SLAM). First, Principal Component Analysis (PCA) of the point cloud is used to implement a laser odometry based on feature matching. Second, the GNSS position information, the ground plane obtained by point cloud segmentation and clustering, and the point cloud cluster features are each added to the graph optimization framework as pose constraints, which eliminates the accumulated error of the laser odometry. Finally, the optimal poses and a point cloud map of the large-scale scene are obtained to support autonomous positioning and navigation of the unmanned vehicle. The proposed SLAM algorithm is evaluated on the KITTI dataset, which contains large outdoor urban street environments. The results show that the positioning deviation stays below 1.5 m over a travel distance of 3 km, and that the system outperforms other odometry systems in both local accuracy and global consistency, offering a new approach to unmanned vehicle positioning.
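The constraint fusion described in the abstract can be illustrated with a toy problem. The sketch below is not the paper's implementation. It assumes a one-dimensional trajectory, Gaussian noise, and hypothetical names (`fuse_odometry_with_gnss`, `sigma_odom`, `sigma_gnss`), and it solves the resulting linear least-squares problem with NumPy rather than a factor graph library. Each relative odometry step and each sparse absolute GNSS fix contributes one weighted residual; the ground-plane and cluster constraints used in the paper are omitted.

```python
import numpy as np


def fuse_odometry_with_gnss(odom_steps, gnss_fixes, sigma_odom=0.1, sigma_gnss=1.0):
    """Estimate 1-D positions x_0..x_n by weighted linear least squares.

    odom_steps: n relative displacements (x_{k+1} - x_k) reported by odometry.
    gnss_fixes: dict mapping a pose index to an absolute position measurement.
    sigma_odom, sigma_gnss: assumed standard deviations (illustrative values).
    """
    n_poses = len(odom_steps) + 1
    rows, rhs = [], []

    # Odometry factors: residual (x_{k+1} - x_k - step) / sigma_odom.
    for k, step in enumerate(odom_steps):
        row = np.zeros(n_poses)
        row[k], row[k + 1] = -1.0, 1.0
        rows.append(row / sigma_odom)
        rhs.append(step / sigma_odom)

    # GNSS factors: residual (x_k - z) / sigma_gnss on the absolute position.
    for k, z in gnss_fixes.items():
        row = np.zeros(n_poses)
        row[k] = 1.0
        rows.append(row / sigma_gnss)
        rhs.append(z / sigma_gnss)

    A = np.vstack(rows)
    b = np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


# Toy data (assumed): the true step is 1.0 m, the odometry over-reports by 5 %.
odom_steps = [1.05] * 20
gnss_fixes = {0: 0.0, 10: 10.0, 20: 20.0}

dead_reckoning = np.concatenate(([0.0], np.cumsum(odom_steps)))
fused = fuse_odometry_with_gnss(odom_steps, gnss_fixes)

print("dead-reckoning end error:", abs(dead_reckoning[-1] - 20.0))
print("fused end error:", abs(fused[-1] - 20.0))
```

In this toy setup the fused end-point error is smaller than the dead-reckoning error, because the absolute fixes bound the drift that accumulates along the chain of relative constraints. Lowering `sigma_gnss` pulls the estimate closer to the GNSS fixes.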


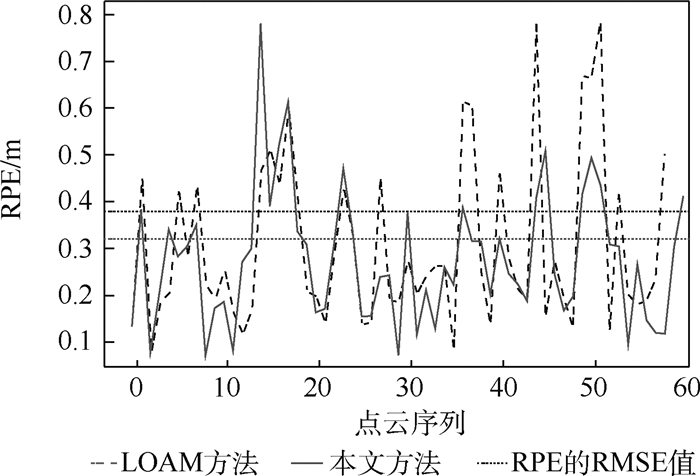

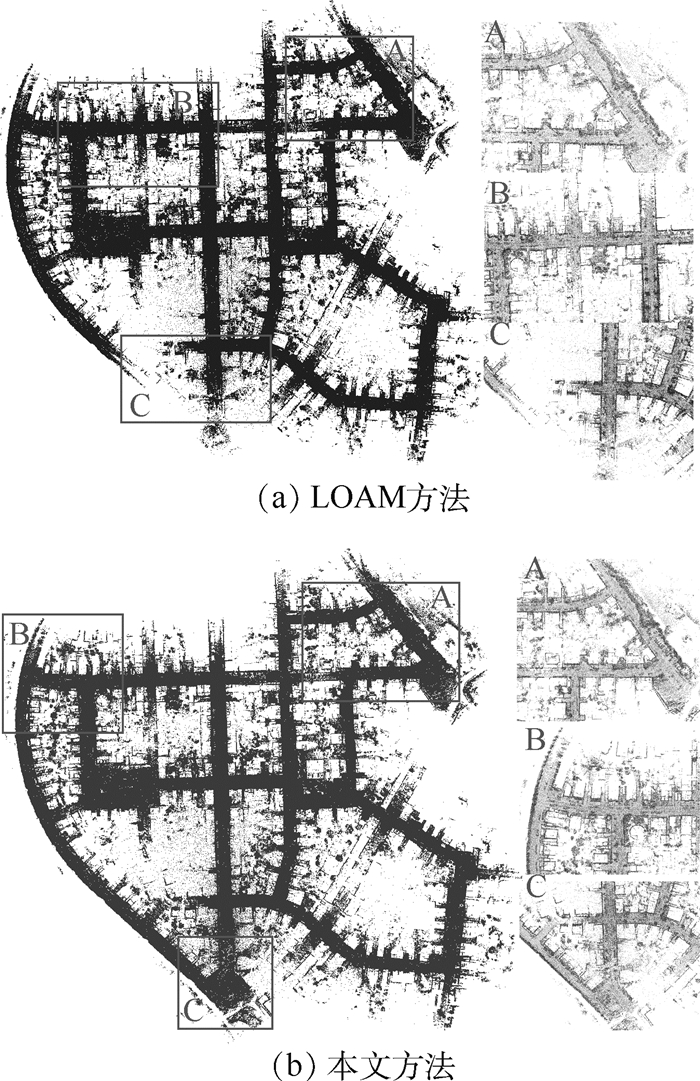

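Table 1. Comparison of Relative Pose Errors (RPE)

| Result | LOAM (short distance) | LOAM (long distance) | Proposed (short distance) | Proposed (long distance) |
| --- | --- | --- | --- | --- |
| RPE max (m) | 0.79 | 9.40 | 0.81 | 6.21 |
| RPE min (m) | 0.09 | 0.08 | 0.10 | 0.08 |
| RPE mean (m) | 0.32 | 1.98 | 0.30 | 0.81 |
| RPE median (m) | 0.29 | 1.31 | 0.23 | 0.73 |
| RPE RMSE (m) | 0.39 | 2.09 | 0.31 | 1.05 |
| Number of frames | 1704 | 4544 | 1704 | 4544 |
| Trajectory length (m) | 1392 | 3714 | 1392 | 3714 |

Table 2. Comparison of Absolute Trajectory Errors (ATE)

| Result | LOAM | Proposed |
| --- | --- | --- |
| ATE max | 39.85 | 6.44 |
| ATE min | 0.03 | 0.02 |
| ATE mean | 18.68 | 3.16 |
| ATE median | 15.23 | 2.72 |
| ATE RMSE | 22.17 | 3.44 |
| Number of frames | 4544 | 4544 |
| Trajectory length (m) | 3714 | 3714 |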
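The statistics reported in Tables 1 and 2 (maximum, minimum, mean, median, RMSE) can be computed from per-frame errors roughly as sketched below. This is a simplified, translation-only illustration with hypothetical function names, not the evaluation code used in the paper. It assumes the estimated and ground-truth trajectories are already time-synchronized and expressed in the same frame, and it ignores the rotational part that a full SE(3) relative pose error would include.

```python
import numpy as np


def summarize_errors(errors):
    """Return the summary statistics used in the tables for a list of errors."""
    e = np.asarray(errors, dtype=float)
    return {
        "max": float(e.max()),
        "min": float(e.min()),
        "mean": float(e.mean()),
        "median": float(np.median(e)),
        "rmse": float(np.sqrt(np.mean(e ** 2))),
    }


def absolute_translation_errors(est_xyz, gt_xyz):
    """Per-frame distance between estimated and ground-truth positions."""
    est = np.asarray(est_xyz, dtype=float)
    gt = np.asarray(gt_xyz, dtype=float)
    return np.linalg.norm(est - gt, axis=1)


def relative_translation_errors(est_xyz, gt_xyz, delta=1):
    """Error of the displacement over `delta` frames (translation only)."""
    est = np.asarray(est_xyz, dtype=float)
    gt = np.asarray(gt_xyz, dtype=float)
    d_est = est[delta:] - est[:-delta]
    d_gt = gt[delta:] - gt[:-delta]
    return np.linalg.norm(d_est - d_gt, axis=1)


# Tiny made-up trajectory: the last estimated position is off by (0, 3, 4).
gt = [[0, 0, 0], [1, 0, 0], [2, 0, 0]]
est = [[0, 0, 0], [1, 0, 0], [2, 3, 4]]

ate_stats = summarize_errors(absolute_translation_errors(est, gt))
rpe_stats = summarize_errors(relative_translation_errors(est, gt))
print("ATE:", ate_stats)
print("RPE:", rpe_stats)
```

The absolute error measures global consistency, since drift accumulates in it, while the relative error over a fixed frame gap reflects local accuracy. This is why the two tables can rank the same systems differently.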
