摘要:

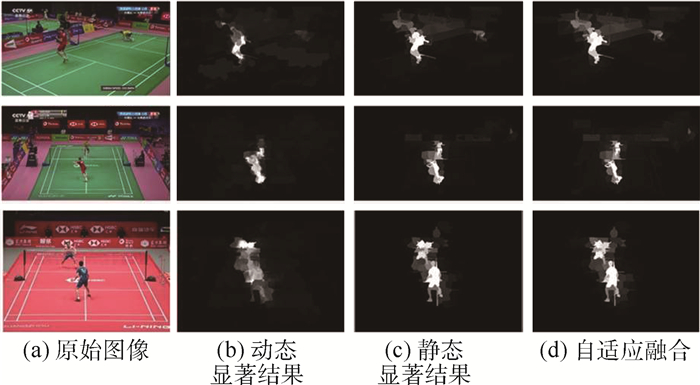

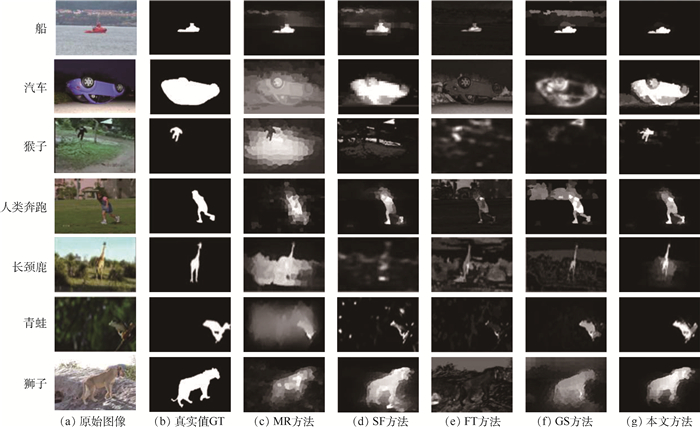

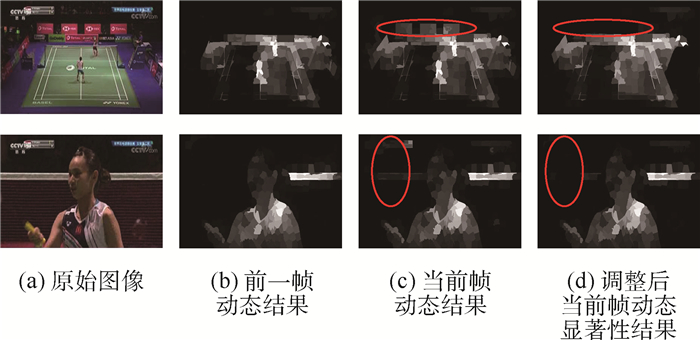

如何高效提取视频内容即视频摘要化,一直是计算机视觉领域研究的热点。简单通过图像颜色、纹理等特征进行检测已无法有效、完整地获取视频摘要。基于视觉注意力金字塔模型,提出了一种改进的可变比例及双对比度计算的中心-环绕视频摘要化方法。首先,以超像素方法对视频图像序列进行像素块划分以加速图像计算;然后,检测不同颜色背景下的图像对比度特征差异并进行融合;最后,结合光流运动信息,合并静态图像与动态图像显著性结果提取视频关键帧,在提取关键帧时,利用感知哈希函数进行相似性判断完成视频摘要化生成。在Segtrack V2、ViSal及OVP数据集上进行仿真实验,结果表明:所提方法可以有效提取图像感兴趣区域,得到以关键帧图像序列表示的视频摘要。

Abstract:How to extract video content efficiently, that is, video summarization, is a research hotspot in the field of computer vision. Video summary cannot be obtained effectively and completely by simply detecting the image color, texture and other features. Based on the visual attention pyramid model, this paper proposes an improved center-surround video summarization method with variable ratio and double contrast calculation. First, the video image sequence is divided into pixel blocks by superpixel method to speed up image calculation. Then, the contrast feature difference under different color backgrounds is detected and fused. Finally, combined with the optical flow motion information, the static and dynamic saliency results are merged to extract the key frames of the video. When extracting the key frames, the perceived Hash function is used to perform similarity judgment to complete the video summary generation. Simulation experiments are carried out on Segtrack V2, ViSal and OVP datasets. The experimental results show that the proposed method can be used to effectively extract the area of interest, and finally obtain the video summary expressed by the sequence of key frame images.
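The abstract does not say which perceptual hash is used, so the following is only a minimal sketch of the similarity check during key frame selection. It assumes a simple average hash (aHash) on grayscale frames and a Hamming-distance threshold. The function names, the 8×8 hash size, and the threshold of 5 bits are illustrative choices, not values from the paper.

```python
import numpy as np

def average_hash(gray, hash_size=8):
    """Average hash (assumed stand-in for the paper's perceptual hash).

    The frame is reduced to hash_size x hash_size block means, and each
    block is set to True if it is brighter than the overall mean.
    """
    h, w = gray.shape
    # Crop so the frame divides evenly into hash_size x hash_size blocks.
    h_crop, w_crop = h - h % hash_size, w - w % hash_size
    blocks = gray[:h_crop, :w_crop].astype(np.float64).reshape(
        hash_size, h_crop // hash_size, hash_size, w_crop // hash_size)
    small = blocks.mean(axis=(1, 3))
    return (small > small.mean()).ravel()

def hamming(a, b):
    """Number of differing bits between two boolean hash vectors."""
    return int(np.count_nonzero(a != b))

def select_key_frames(frames, max_distance=5):
    """Keep a candidate frame only if it differs from every kept frame.

    max_distance is a hypothetical threshold: hashes within this many
    bits of an already kept frame are treated as near-duplicates.
    """
    kept, hashes = [], []
    for idx, frame in enumerate(frames):
        h = average_hash(frame)
        if all(hamming(h, k) > max_distance for k in hashes):
            kept.append(idx)
            hashes.append(h)
    return kept

# Usage: a horizontal gradient, a brightened copy, and a vertical gradient.
a = np.tile(np.arange(64), (64, 1))
b = a + 1          # same structure, so the hash is unchanged
c = a.T            # different structure, so the hash differs in 32 bits
print(select_key_frames([a, b, c]))  # [0, 2]
```

In this sketch, the candidate frames would come from the saliency stage; the brightened copy is rejected because its hash matches the first frame exactly.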

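Table 1. Comparison of sports videos under various summarization algorithms

| Algorithm | Accuracy rate | Error rate | Miss rate | Precision | Recall | F-measure |
|---|---|---|---|---|---|---|
| OV | 0.58 | 0.08 | 0.42 | 0.88 | 0.58 | 0.7 |
| VSUMM | 0.42 | 0.08 | 0.58 | 0.83 | 0.42 | 0.56 |
| STIMO | 0.67 | 0.08 | 0.33 | 0.89 | 0.67 | 0.76 |
| SD | 0.33 | 0.25 | 0.67 | 0.57 | 0.33 | 0.42 |
| KBKS | 0.5 | 0.08 | 0.5 | 0.86 | 0.5 | 0.63 |
| Proposed | 0.92 | 0 | 0.08 | 0.92 | 0.92 | 0.92 |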
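The page does not state how the F-measure column is computed. As a sanity check, the sketch below assumes the standard harmonic mean of precision and recall, F = 2PR / (P + R), and recomputes it from the precision and recall values in Table 1. Under that assumption, every recomputed value agrees with the reported one to two decimal places.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (assumed definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F-measure) copied from Table 1.
table_1 = {
    "OV":       (0.88, 0.58, 0.70),
    "VSUMM":    (0.83, 0.42, 0.56),
    "STIMO":    (0.89, 0.67, 0.76),
    "SD":       (0.57, 0.33, 0.42),
    "KBKS":     (0.86, 0.50, 0.63),
    "Proposed": (0.92, 0.92, 0.92),
}

for name, (p, r, reported) in table_1.items():
    computed = f_measure(p, r)
    print(f"{name:9s} computed={computed:.2f} reported={reported:.2f}")
```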

