
摘要:

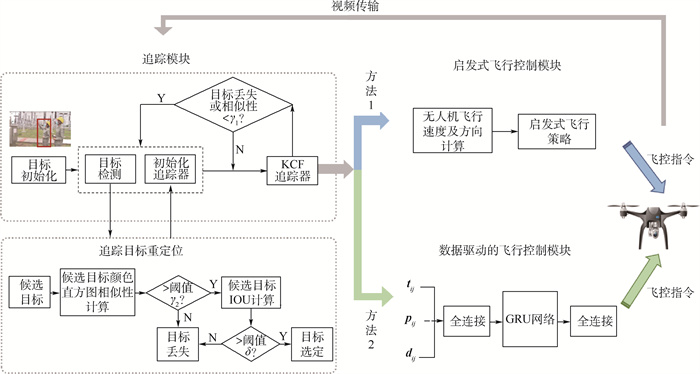

随着人工智能技术的发展,面向电力系统的运动目标追踪技术逐渐得到关注,现有方法虽有一定成效,但是大多基于固定摄像头的监控视频录制,不能灵活追踪运动目标,当运动目标离开摄像头视野时,存在运动目标丢失问题。为此,利用无人机设备,并基于深度学习和核相关滤波技术,提出了一个电力场景下基于无人机视觉的运动目标追踪方法(MTTS_UAV)。所提方法采用改进的目标追踪方法与目标检测方法相结合的方式来追踪运动目标隐患,并引入2种无人机飞行控制模块:启发式和数据驱动式,使得无人机的飞行速度和方向可以根据目标移动情况自适应地调节。在真实变电站的安全帽人员数据集上进行了大量实验,对所提方法的追踪效果进行评估,结果表明:所提方法在真实数据集上的平均像素误差(APE)和平均重叠率(AOR)分别可达到2.37和0.67,验证了方法的有效性。

Abstract:With the rapid development of artificial intelligence technology, the moving target tracking technology for the power system has gradually attracted researchers' attention. Although the existing methods have achieved great success, most of them are based on the fixed camera surveillance video recording, which cannot track the moving target flexibly. When the moving object leaves the camera's field of view, there is a problem of losing the moving object. In light of this, we propose a moving target tracking scheme based on UAV vision (MTTS_UAV) in electric scenario. In particular, to ensure the real-time feature, we combine the improved target tracking algorithm and target detection algorithm to track the hidden dangers. To ensure the accuracy, we introduce two UAV flight control modules: heuristic mode and data-driven mode, so that the UAV's flight speed and direction can be adjusted adaptively according to the target movement. Extensive experiments have been conducted on a real-world dataset of hardhat personnel in real substations, which demonstrate that the average pixel error (APE) and average overlap rate (AOR) are 2.37 and 0.67 respectively and verify the effectiveness of the proposed method.
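The abstract builds MTTS_UAV on kernelized correlation filtering (KCF). The following is a minimal sketch of the standard single-channel KCF step of Henriques et al., not the authors' improved tracker. Kernel ridge regression over all cyclic shifts of a training patch is solved element-wise in the Fourier domain. The peak of the filter response on a new patch then gives the target's displacement.

The sketch makes these assumptions:

- Patches are grayscale NumPy arrays.
- The cosine window, HOG features, scale estimation and online model updating are left out.
- The class name `MinimalKCF` and its default parameters are illustrative.

```python
import numpy as np


def gaussian_correlation(a, b, sigma):
    """Gaussian kernel between patch b and every cyclic shift of patch a."""
    # Cross-correlation for all shifts at once: cross[s] = sum_m a[m + s] * b[m]
    cross = np.real(np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))))
    # Squared Euclidean distance for each shift, normalized by the patch size
    dist = (np.sum(a * a) + np.sum(b * b) - 2.0 * cross) / a.size
    return np.exp(-np.maximum(dist, 0.0) / sigma ** 2)


def gaussian_labels(shape, sigma):
    """Gaussian regression target whose peak sits at index (0, 0)."""
    rows, cols = shape
    r = np.arange(rows) - rows // 2
    c = np.arange(cols) - cols // 2
    g = np.exp(-0.5 * (r[:, None] ** 2 + c[None, :] ** 2) / sigma ** 2)
    # Move the central peak to the origin so that zero shift means zero motion
    return np.roll(g, (-(rows // 2), -(cols // 2)), axis=(0, 1))


class MinimalKCF:
    def __init__(self, kernel_sigma=0.5, label_sigma=2.0, lam=1e-4):
        self.kernel_sigma = kernel_sigma
        self.label_sigma = label_sigma
        self.lam = lam  # ridge-regression regularization

    def train(self, patch):
        self.x = np.asarray(patch, dtype=float)
        k = gaussian_correlation(self.x, self.x, self.kernel_sigma)
        y = gaussian_labels(self.x.shape, self.label_sigma)
        # Dual coefficients of kernel ridge regression, solved element-wise in the Fourier domain
        self.alpha_f = np.fft.fft2(y) / (np.fft.fft2(k) + self.lam)

    def detect(self, patch):
        """Return the (row, col) displacement of the learned appearance in `patch`."""
        z = np.asarray(patch, dtype=float)
        k = gaussian_correlation(z, self.x, self.kernel_sigma)
        response = np.real(np.fft.ifft2(self.alpha_f * np.fft.fft2(k)))
        dy, dx = np.unravel_index(np.argmax(response), response.shape)
        rows, cols = response.shape
        # Peaks beyond half the patch size correspond to negative (wrapped-around) shifts
        if dy > rows // 2:
            dy -= rows
        if dx > cols // 2:
            dx -= cols
        return int(dy), int(dx)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patch = rng.random((32, 32))
    kcf = MinimalKCF()
    kcf.train(patch)
    print(kcf.detect(np.roll(patch, (3, -5), axis=(0, 1))))  # expected: (3, -5)
```

The sketch implicitly treats the training patch as periodic, so it recovers cyclic shifts exactly. On real video, the omitted cosine window is what suppresses the resulting boundary effects.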


Key words:

- target tracking
- target detection
- deep learning
- UAV vision
- electric scenario
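The abstract describes a heuristic flight-control mode that adapts the UAV's speed and direction to the target's motion, but it gives no control law. One plausible reading, sketched here purely as an assumption, is a proportional controller with saturation:

- The target's horizontal offset from the image centre drives lateral speed.
- Its vertical offset drives climb or descent.
- The gap between its bounding-box height and a desired height drives forward speed.

Every command is clamped to a speed limit. The function `heuristic_command`, the gains, the limit and the sign conventions are all hypothetical and are not the authors' design.

```python
from dataclasses import dataclass


@dataclass
class Command:
    forward: float   # m/s, positive = move towards the target
    lateral: float   # m/s, positive = move right
    vertical: float  # m/s, positive = climb


def clamp(value: float, limit: float) -> float:
    """Saturate a command to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def heuristic_command(box, frame_w, frame_h, desired_h_ratio=0.3,
                      k_lat=2.0, k_vert=2.0, k_fwd=3.0, max_speed=2.0):
    """Hypothetical proportional rule mapping a target box (x, y, w, h in pixels) to velocities."""
    x, y, w, h = box
    # Normalized offset of the box centre from the image centre, in [-0.5, 0.5]
    off_x = (x + w / 2) / frame_w - 0.5
    off_y = (y + h / 2) / frame_h - 0.5
    # A box smaller than desired means the target is far away, so move forward
    size_err = desired_h_ratio - h / frame_h
    return Command(
        forward=clamp(k_fwd * size_err, max_speed),
        lateral=clamp(k_lat * off_x, max_speed),
        vertical=clamp(-k_vert * off_y, max_speed),  # image y grows downwards
    )
```

A data-driven mode, as the abstract calls it, would replace this fixed rule with a model learned from the target's recent motion.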


Table 1. Statistical results of average pixel error (APE)

| w | Method 1 | Method 2 | Proposed method |
|---|---|---|---|
| 1 | 20.03 | 13.61 | 2.37 |
| 2 | 2.64 | 2.66 | 2.37 |
| 3 | 2.50 | 2.50 | 2.37 |
| 4 | 2.41 | 2.31 | 2.37 |
| 5 | 2.35 | 2.56 | 2.37 |
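This page does not define APE. The sketch below assumes the center-location-error convention of tracking benchmarks: APE is the mean Euclidean distance, in pixels, between the centers of the predicted and ground-truth boxes over all frames. Boxes are assumed to be `(x, y, w, h)` tuples in pixels, and the function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels


def center(box: Box) -> Tuple[float, float]:
    """Center point of an (x, y, w, h) box."""
    x, y, w, h = box
    return x + w / 2.0, y + h / 2.0


def average_pixel_error(pred: Sequence[Box], truth: Sequence[Box]) -> float:
    """Mean Euclidean distance between predicted and ground-truth box centers."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("need two non-empty sequences of equal length")
    total = 0.0
    for p, t in zip(pred, truth):
        (px, py), (tx, ty) = center(p), center(t)
        total += math.hypot(px - tx, py - ty)
    return total / len(pred)
```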

Table 2. Statistical results of average overlap rate (AOR)

| w | Method 1 | Method 2 | Proposed method |
|---|---|---|---|
| 1 | 0.39 | 0.50 | 0.67 |
| 2 | 0.65 | 0.65 | 0.67 |
| 3 | 0.66 | 0.64 | 0.67 |
| 4 | 0.67 | 0.67 | 0.67 |
| 5 | 0.68 | 0.64 | 0.67 |
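AOR is likewise undefined on this page. The sketch below assumes it is the usual benchmark overlap score: the intersection over union (IoU) of predicted and ground-truth boxes, averaged over all frames. Boxes are assumed to be `(x, y, w, h)` tuples in pixels, and the function names are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def average_overlap_rate(pred: Sequence[Box], truth: Sequence[Box]) -> float:
    """Mean per-frame IoU between predicted and ground-truth boxes."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(iou(p, t) for p, t in zip(pred, truth)) / len(pred)
```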

Table 3. Average number of restarts

| w | Method 2 | Proposed method |
|---|---|---|
| 1 | 55.00 | 36.70 |
| 2 | 48.70 | 36.70 |
| 3 | 50.10 | 36.70 |
| 4 | 48.20 | 36.70 |
| 5 | 46.20 | 36.70 |
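Table 3 counts restarts, which suggests that tracking is re-initialized from the detector whenever the target is lost. The sketch below shows one hypothetical way to combine the two, in line with the abstract's pairing of tracking and detection:

- Run the tracker on every frame.
- Treat a confidence below a threshold as a loss.
- Fall back to the detector.
- Count every re-initialization after the first as a restart.

The callables `detect` and `init_tracker`, the threshold and this restart definition are assumptions. The page does not say how the authors trigger or count restarts.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]
Tracker = Callable[[Any], Tuple[Box, float]]  # frame -> (box, confidence)


def track_with_redetection(
    frames: Sequence[Any],
    detect: Callable[[Any], Optional[Box]],
    init_tracker: Callable[[Any, Box], Tracker],
    min_confidence: float = 0.3,
) -> Tuple[List[Optional[Box]], int]:
    """Detect-then-track loop; returns per-frame boxes (None = lost) and the restart count."""
    tracker: Optional[Tracker] = None
    initialized = 0
    boxes: List[Optional[Box]] = []
    for frame in frames:
        box: Optional[Box] = None
        if tracker is not None:
            box, confidence = tracker(frame)
            if confidence < min_confidence:
                # Weak correlation response: treat the target as lost
                tracker, box = None, None
        if tracker is None:
            detection = detect(frame)
            if detection is not None:
                # (Re)initialize the tracker from the detector's box
                tracker = init_tracker(frame, detection)
                initialized += 1
                box = detection
        boxes.append(box)
    # The first initialization is not counted as a restart
    return boxes, max(0, initialized - 1)
```

Running the detector only after a loss, rather than on every frame, keeps the per-frame cost close to that of the correlation tracker alone.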

Table 4. Average number of consecutive tracking frames

| w | Method 2 | Proposed method |
|---|---|---|
| 1 | 38.20 | 41.00 |
| 2 | 35.50 | 41.00 |
| 3 | 33.90 | 41.00 |
| 4 | 35.30 | 41.00 |
| 5 | 36.90 | 41.00 |
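This sketch assumes that the average number of consecutive tracking frames is the mean length of the uninterrupted runs in which the target is tracked. That is our reading, not a definition given on this page. Under it, the statistic can be computed from a per-frame success flag; the function names are illustrative.

```python
from typing import Iterable, List


def tracking_runs(tracked: Iterable[bool]) -> List[int]:
    """Lengths of maximal runs of consecutive tracked frames."""
    runs: List[int] = []
    current = 0
    for ok in tracked:
        if ok:
            current += 1
        elif current:
            # A lost frame closes the current run
            runs.append(current)
            current = 0
    if current:
        runs.append(current)
    return runs


def average_consecutive_frames(tracked: Iterable[bool]) -> float:
    """Mean run length; 0.0 if the target is never tracked."""
    runs = tracking_runs(tracked)
    return sum(runs) / len(runs) if runs else 0.0
```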

