
摘要:

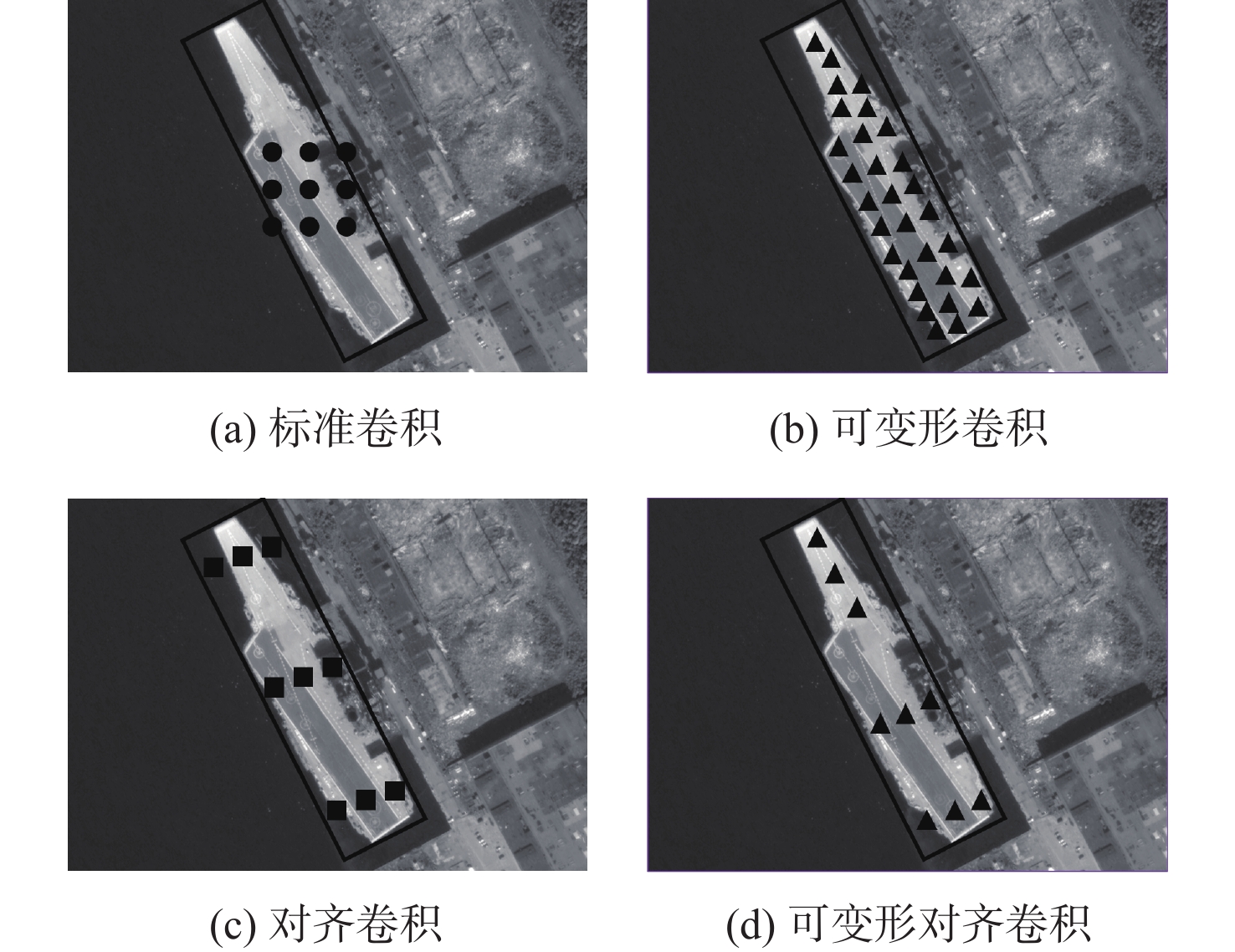

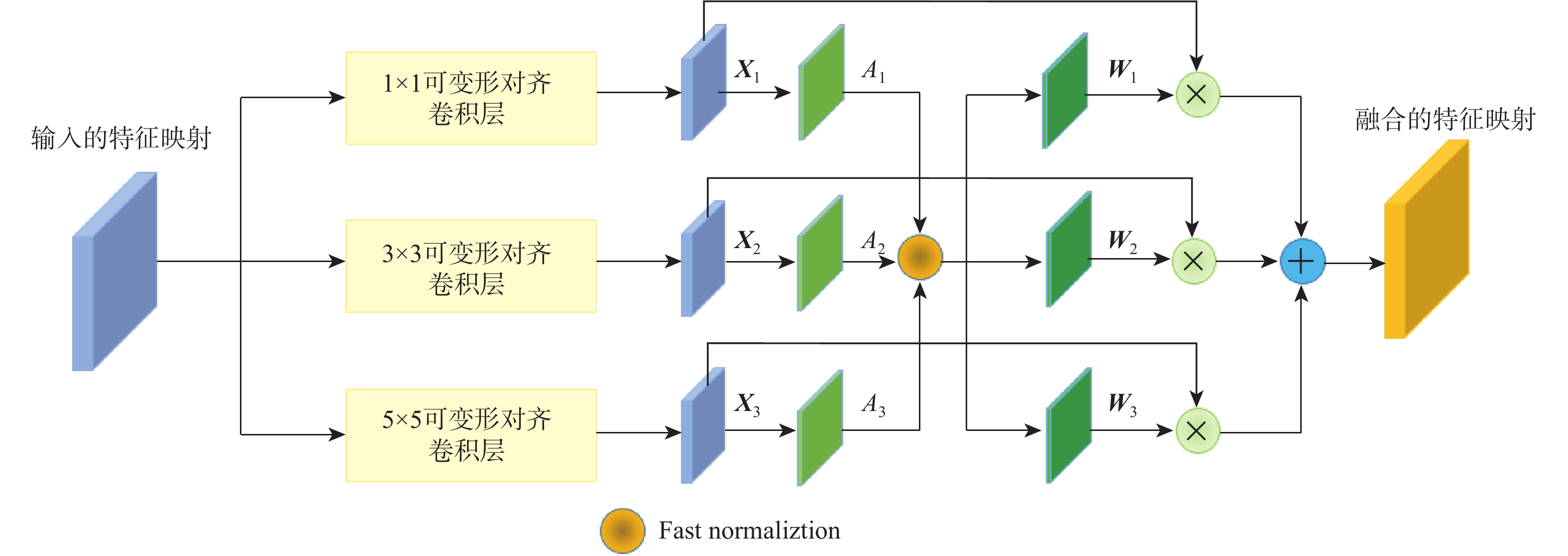

在遥感图像目标检测领域内,旋转物体的检测存在挑战,卷积神经网络在提取信息时会受制于固定的空间结构,采样点无法聚焦于目标;遥感图像尺度变化大,不同物体需要具有不同尺度感受野的特征映射,具有单一尺度感受野的特征映射无法包含所有有效信息。基于此,提出了可变形对齐卷积,根据候选边框调节采样点,并根据特征映射学习采样点的细微偏移,使采样点聚焦于目标,从而实现动态特征选择;同时提出了基于可变形对齐卷积的感受野自适应模块,对具有不同尺度感受野的特征映射进行融合,自适应地调整神经元的感受野。在公开数据集上的大量实验验证了所提算法可以提高遥感图像目标检测的精度。

Abstract: Oriented object detection remains challenging in remote sensing image target detection. First, a convolutional neural network extracts information on a fixed spatial structure, so its sampling locations cannot focus on the objects. Second, object scales vary greatly in remote sensing images: different objects require feature maps with receptive fields of different scales, and a feature map with a single-scale receptive field cannot contain all of the useful information. To address the first problem, deformable alignment convolution is proposed. It first adjusts the sampling locations according to the region of interest (the candidate bounding box) and then learns slight offsets from the feature map, so that the sampling locations focus on the objects and dynamic feature selection is achieved. To address the second problem, a receptive field adaptive module based on deformable alignment convolution is proposed; it fuses feature maps with receptive fields of different scales and adaptively adjusts the receptive fields of neurons. Extensive experiments on public datasets show that the proposed method improves the accuracy of remote sensing image target detection.
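The two-step sampling rule of deformable alignment convolution can be illustrated with a short, framework-free sketch. It is not the authors' implementation, and several details are assumptions: the box format `(cx, cy, w, h, theta)` in image pixels, a feature stride of 8, the alignment rule (a k x k grid scaled to the box and rotated by theta, in the manner of the alignment convolution of [11]), and all function names, which are hypothetical. In the network the slight offsets would be predicted from the feature map by a convolution; here they are simply passed in.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
# Hypothetical box format: (cx, cy, w, h, theta) in image pixels, theta in radians.
Box = Tuple[float, float, float, float, float]


def regular_grid(k: int) -> List[Point]:
    """Kernel offsets r of a standard k x k convolution, row by row: (-1, -1) ... (1, 1) for k = 3."""
    half = (k - 1) / 2
    return [(j - half, i - half) for i in range(k) for j in range(k)]


def aligned_points(box: Box, k: int = 3, stride: float = 8.0) -> List[Point]:
    """Alignment step (assumed rule): spread the k x k grid over the rotated box,
    then map the points from image pixels to feature-map coordinates."""
    cx, cy, w, h, theta = box
    c, s = math.cos(theta), math.sin(theta)
    points = []
    for rx, ry in regular_grid(k):
        dx, dy = rx * w / k, ry * h / k  # scale the grid to the box size
        x = cx + c * dx - s * dy         # rotate by theta, then shift to the box center
        y = cy + s * dx + c * dy
        points.append((x / stride, y / stride))
    return points


def deformable_aligned_points(box: Box, slight_offsets: Sequence[Point],
                              k: int = 3, stride: float = 8.0) -> List[Point]:
    """Deformable step: add slight per-point offsets (learned from the feature map
    in the real network; passed in here) to the box-aligned locations."""
    return [(x + ox, y + oy)
            for (x, y), (ox, oy) in zip(aligned_points(box, k, stride), slight_offsets)]


def bilinear(feature: List[List[float]], x: float, y: float) -> float:
    """Sample a single-channel map (list of rows) at fractional (x, y); zero outside the map."""
    x0, y0 = math.floor(x), math.floor(y)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < len(feature) and 0 <= xx < len(feature[0]):
                value += (1 - abs(x - xx)) * (1 - abs(y - yy)) * feature[yy][xx]
    return value


def deformable_align_conv(feature, box, weights, slight_offsets, k=3, stride=8.0) -> float:
    """One output value: kernel-weighted sum of the map sampled at the k*k deformable-aligned points."""
    points = deformable_aligned_points(box, slight_offsets, k, stride)
    return sum(wt * bilinear(feature, x, y) for wt, (x, y) in zip(weights, points))


# Usage: a 48 x 24 box centered at (64, 64), rotated by 30 degrees, on a 16 x 16 map of ones.
ones = [[1.0] * 16 for _ in range(16)]
box = (64.0, 64.0, 48.0, 24.0, math.radians(30))
out = deformable_align_conv(ones, box, [1 / 9] * 9, [(0.25, -0.25)] * 9)
print(round(out, 6))  # 1.0, because every sampling point falls inside the map
```

Setting all slight offsets to zero reduces the sketch to an alignment convolution, and keeping the learned offsets but replacing the box-aligned grid with the fixed grid around a pixel gives deformable convolution [7]; these are the variants compared in Table 1.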



Table 1. Comparison between deformable alignment convolution and other convolutions

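| Method | mAP/% |
| --- | --- |
| Standard convolution | 71.17 |
| Deformable convolution | 71.68 |
| Alignment convolution | 72.45 |
| Deformable alignment convolution | 73.18 |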

Table 2. Ablation studies of DFSNet

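| Method | RoI transformation module | Dynamic feature selection layer | Receptive field adaptive module | mAP/% |
| --- | --- | --- | --- | --- |
| Baseline | | | | 68.05 |
| DFSNet (different settings) | √ | | | 71.17 |
| | √ | √ | | 73.18 |
| | √ | √ | √ | 74.04 |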
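The receptive field adaptive module added in the last row of Table 2 is described in the abstract as fusing feature maps with receptive fields of different scales. The sketch below shows one common way to do such a fusion, a selective-kernel-style softmax over branches; this is an assumption, not the paper's published design. Plain dilated 3x3 convolutions stand in for the deformable alignment convolution branches, the maps are single-channel, and `score_params` and every function name are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Map = List[List[float]]


def dilated_conv3x3(fmap: Map, kernel: Map, dilation: int) -> Map:
    """3x3 convolution with zero padding; dilation d gives a (2d + 1) x (2d + 1) receptive field."""
    h, w = len(fmap), len(fmap[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    yy, xx = y + (i - 1) * dilation, x + (j - 1) * dilation
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * fmap[yy][xx]
            out[y][x] = acc
    return out


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def receptive_field_fusion(branches: Sequence[Map],
                           score_params: Sequence[Tuple[float, float]]) -> Tuple[Map, List[float]]:
    """Summarize the summed branches by global average pooling, map the summary to one
    logit per branch with hypothetical learned (a, b), and mix branches by softmax weights."""
    h, w = len(branches[0]), len(branches[0][0])
    summary = sum(br[y][x] for br in branches for y in range(h) for x in range(w)) / (h * w)
    attn = softmax([a * summary + b for a, b in score_params])
    fused = [[sum(p * br[y][x] for p, br in zip(attn, branches)) for x in range(w)]
             for y in range(h)]
    return fused, attn


# Usage: three branches with dilations 1, 2, 3 (receptive fields 3x3, 5x5, 7x7) on a 5x5 map.
image = [[1.0] * 5 for _ in range(5)]
box_filter = [[1 / 9] * 3 for _ in range(3)]
branches = [dilated_conv3x3(image, box_filter, d) for d in (1, 2, 3)]
fused, attn = receptive_field_fusion(branches, [(0.0, 0.0)] * 3)
print([round(a, 3) for a in attn])  # [0.333, 0.333, 0.333]
print(round(fused[2][2], 4))        # 0.7037, i.e. (1 + 1 + 1/9) / 3
```

Because the branch weights come from the input itself, a different image yields a different mix of receptive fields at the same neuron, which is the adaptivity the module aims for.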

Table 3. Comparison of DFSNet and other methods on DOTA

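| Detector type | Model | PL | BD | BR | GTF | SV | mAP/% |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Two-stage | FR-O[2] | 79.42 | 77.13 | 17.70 | 64.05 | 35.30 | 54.72 |
| | RoI Transformer[8] | 88.64 | 78.52 | 43.44 | 75.92 | 68.81 | 71.10 |
| | CAD-Net[20] | 87.80 | 82.40 | 49.40 | 73.50 | 71.10 | 72.84 |
| | SCRDet[21] | 89.98 | 80.65 | 52.09 | 68.36 | 68.36 | 71.89 |
| Single-stage | RetinaNet | 88.82 | 81.74 | 44.44 | 65.72 | 67.11 | 69.57 |
| | DRN[16] | 88.91 | 80.22 | 43.52 | 63.35 | 73.48 | 69.90 |
| | R3Det[22] | 89.54 | 81.99 | 48.46 | 62.52 | 70.48 | 70.60 |
| | DFSNet | 89.12 | 77.40 | 52.05 | 73.47 | 78.02 | 74.01 |

| Detector type | Model | LV | SH | TC | BC | ST | mAP/% |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Two-stage | FR-O[2] | 38.02 | 37.16 | 89.41 | 69.64 | 59.28 | 58.70 |
| | RoI Transformer[8] | 73.68 | 83.59 | 90.74 | 77.27 | 81.46 | 81.35 |
| | CAD-Net[20] | 63.50 | 76.60 | 90.90 | 79.20 | 73.30 | 76.70 |
| | SCRDet[21] | 60.32 | 72.41 | 90.85 | 87.94 | 86.86 | 79.68 |
| Single-stage | RetinaNet | 55.82 | 72.77 | 90.55 | 82.83 | 76.30 | 75.65 |
| | DRN[16] | 70.69 | 89.94 | 90.14 | 83.85 | 84.11 | 83.75 |
| | R3Det[22] | 74.29 | 77.54 | 90.80 | 81.39 | 83.54 | 81.51 |
| | DFSNet | 79.31 | 87.35 | 90.90 | 85.13 | 84.90 | 85.52 |

| Detector type | Model | SBF | RA | HA | SP | HC | mAP/% |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Two-stage | FR-O[2] | 50.30 | 52.91 | 47.89 | 47.40 | 46.30 | 48.96 |
| | RoI Transformer[8] | 58.39 | 53.54 | 62.83 | 58.93 | 47.67 | 56.27 |
| | CAD-Net[20] | 48.40 | 60.90 | 62.00 | 67.00 | 62.20 | 60.10 |
| | SCRDet[21] | 65.02 | 66.68 | 66.25 | 68.24 | 65.21 | 66.28 |
| Single-stage | RetinaNet | 54.19 | 63.64 | 63.71 | 69.73 | 53.37 | 60.93 |
| | DRN[16] | 50.12 | 58.41 | 67.62 | 68.60 | 52.50 | 59.45 |
| | R3Det[22] | 61.97 | 59.82 | 65.44 | 67.46 | 60.05 | 62.95 |
| | DFSNet | 60.90 | 63.83 | 67.31 | 67.56 | 53.35 | 62.59 |

Per-class entries are AP/%. Class abbreviations (DOTA categories): PL, plane; BD, baseball diamond; BR, bridge; GTF, ground track field; SV, small vehicle; LV, large vehicle; SH, ship; TC, tennis court; BC, basketball court; ST, storage tank; SBF, soccer-ball field; RA, roundabout; HA, harbor; SP, swimming pool; HC, helicopter.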
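A quick arithmetic check on Table 3 (our own computation from the published numbers, not a result reported by the paper): for DFSNet, each part's mAP/% entry equals the mean of that part's five per-class APs, and averaging all 15 per-class APs gives 74.04, the value in the last row of Table 2. Because every part has exactly five classes, the mean of the three part-level values equals the overall mAP.

```python
# Per-class AP (%) of DFSNet on DOTA, copied from Table 3 (three parts, five classes each).
DFSNET_AP = {
    "PL": 89.12, "BD": 77.40, "BR": 52.05, "GTF": 73.47, "SV": 78.02,
    "LV": 79.31, "SH": 87.35, "TC": 90.90, "BC": 85.13, "ST": 84.90,
    "SBF": 60.90, "RA": 63.83, "HA": 67.31, "SP": 67.56, "HC": 53.35,
}


def mean(values):
    values = list(values)
    return sum(values) / len(values)


classes = list(DFSNET_AP)  # dicts keep insertion order in Python 3.7+
parts = [classes[i:i + 5] for i in range(0, 15, 5)]
part_maps = [round(mean(DFSNET_AP[c] for c in part), 2) for part in parts]
overall_map = round(mean(DFSNET_AP.values()), 2)

print(part_maps)    # [74.01, 85.52, 62.59], the mAP/% column of each part
print(overall_map)  # 74.04, the full-model value in Table 2
```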

[1] WANG Y Q, MA L, TIAN Y. State-of-the-art of ship detection and recognition in optical remotely sensed imagery[J]. Acta Automatica Sinica, 2011, 37(9): 1029-1039 (in Chinese).

[2] XIA G S, BAI X, DING J, et al. DOTA: A large-scale dataset for object detection in aerial images[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 3974-3983.

[3] GIRSHICK R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2014: 580-587.

[4] GIRSHICK R. Fast R-CNN[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2015: 1440-1448.

[5] REN S Q, HE K M, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149. doi: 10.1109/TPAMI.2016.2577031

[6] HE K M, GKIOXARI G, DOLLÁR P, et al. Mask R-CNN[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 2961-2969.

[7] DAI J F, QI H Z, XIONG Y W, et al. Deformable convolutional networks[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 764-773.

[8] DING J, XUE N, LONG Y, et al. Learning RoI Transformer for oriented object detection in aerial images[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 2849-2858.

[9] LIU Z K, HU J G, WENG L B, et al. Rotated region based CNN for ship detection[C]//2017 IEEE International Conference on Image Processing (ICIP). Piscataway: IEEE Press, 2017: 900-904.

[10] MA J Q, SHAO W Y, YE H, et al. Arbitrary-oriented scene text detection via rotation proposals[J]. IEEE Transactions on Multimedia, 2018, 20(11): 3111-3122. doi: 10.1109/TMM.2018.2818020

[11] HAN J M, DING J, LI J, et al. Align deep features for oriented object detection[EB/OL]. (2021-07-12)[2021-07-12].

[12] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 2117-2125.

[13] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 2980-2988.

[14] HE K M, ZHANG X Y, REN S Q, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 770-778.

[15] LI Y H, CHEN Y T, WANG N Y, et al. Scale-aware trident networks for object detection[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 6054-6063.

[16] PAN X J, REN Y Q, SHENG K K, et al. Dynamic refinement network for oriented and densely packed object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 11207-11216.

[17] IOFFE S, SZEGEDY C. Batch normalization: Accelerating deep network training by reducing internal covariate shift[C]//International Conference on Machine Learning, 2015: 448-456.

[18] TAN M X, PANG R M, LE Q V. EfficientDet: Scalable and efficient object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 10781-10790.

[19] PASZKE A, GROSS S, MASSA F, et al. PyTorch: An imperative style, high-performance deep learning library[EB/OL]. (2019-12-03)[2021-06-01].

[20] ZHANG G J, LU S J, ZHANG W. CAD-Net: A context-aware detection network for objects in remote sensing imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(12): 10015-10024. doi: 10.1109/TGRS.2019.2930982

[21] YANG X, YANG J R, YAN J C, et al. SCRDet: Towards more robust detection for small, cluttered and rotated objects[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 8232-8241.

[22] YANG X, LIU Q Q, YAN J C, et al. R3Det: Refined single-stage detector with feature refinement for rotating object[EB/OL]. (2020-12-08)[202-06-01].
