
摘要:

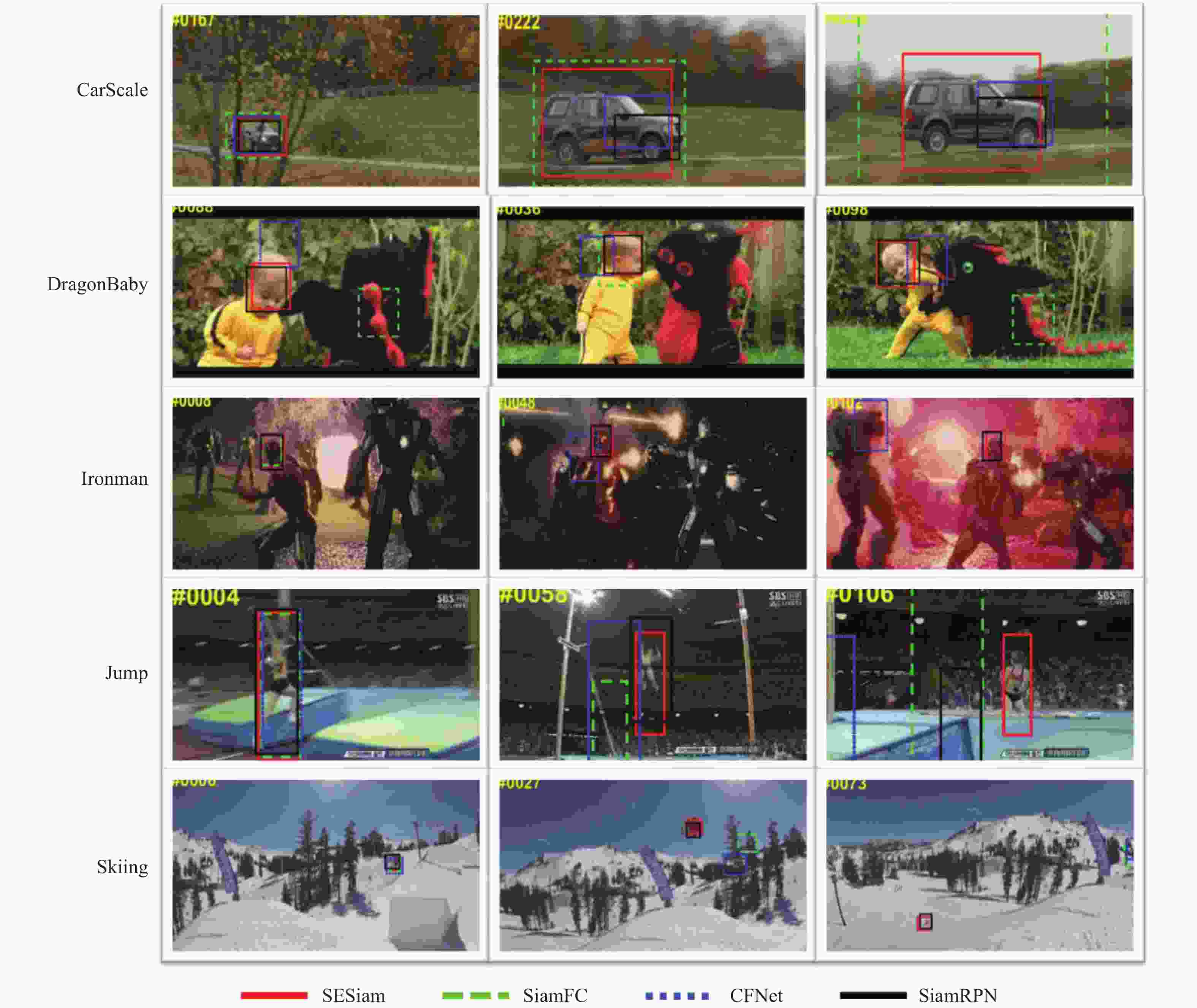

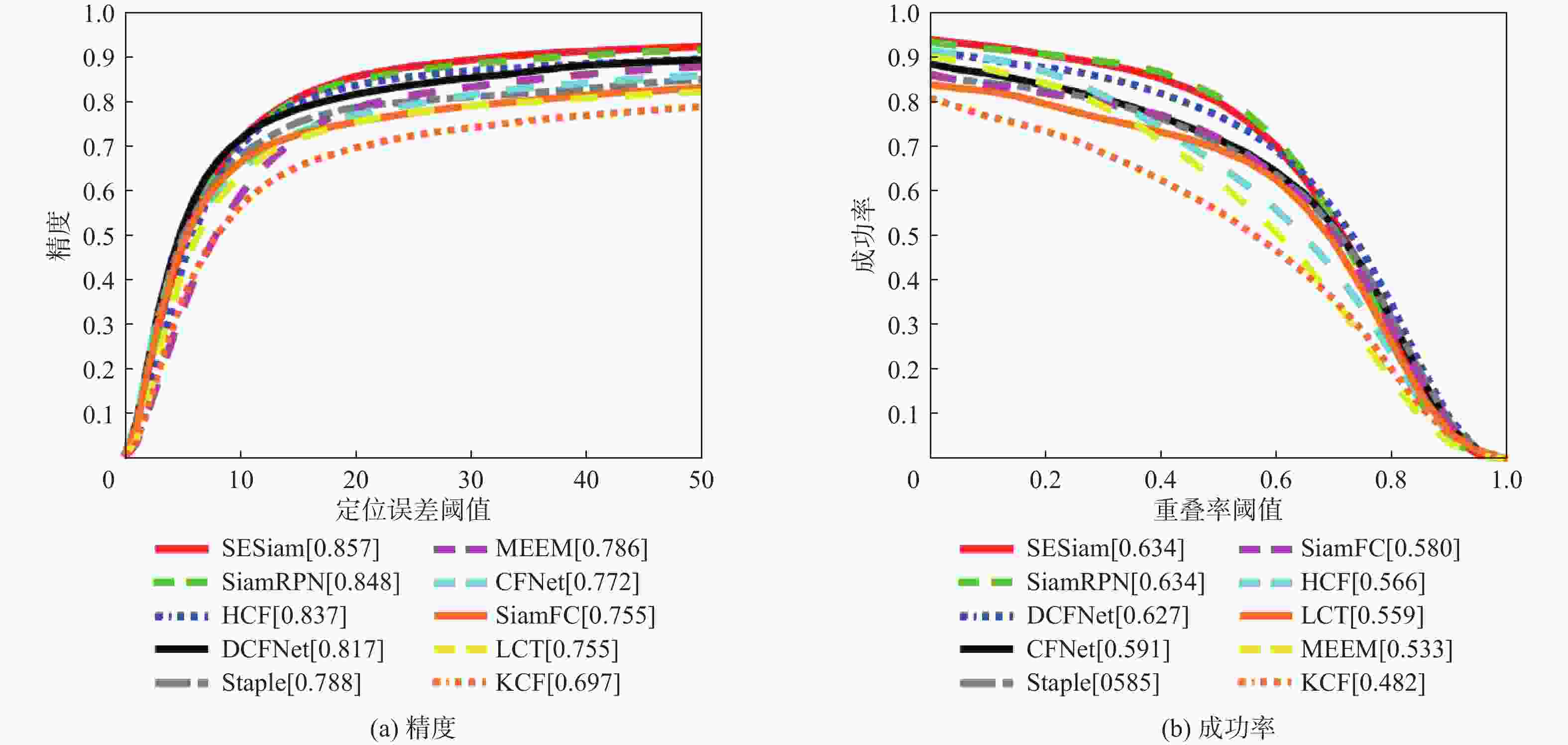

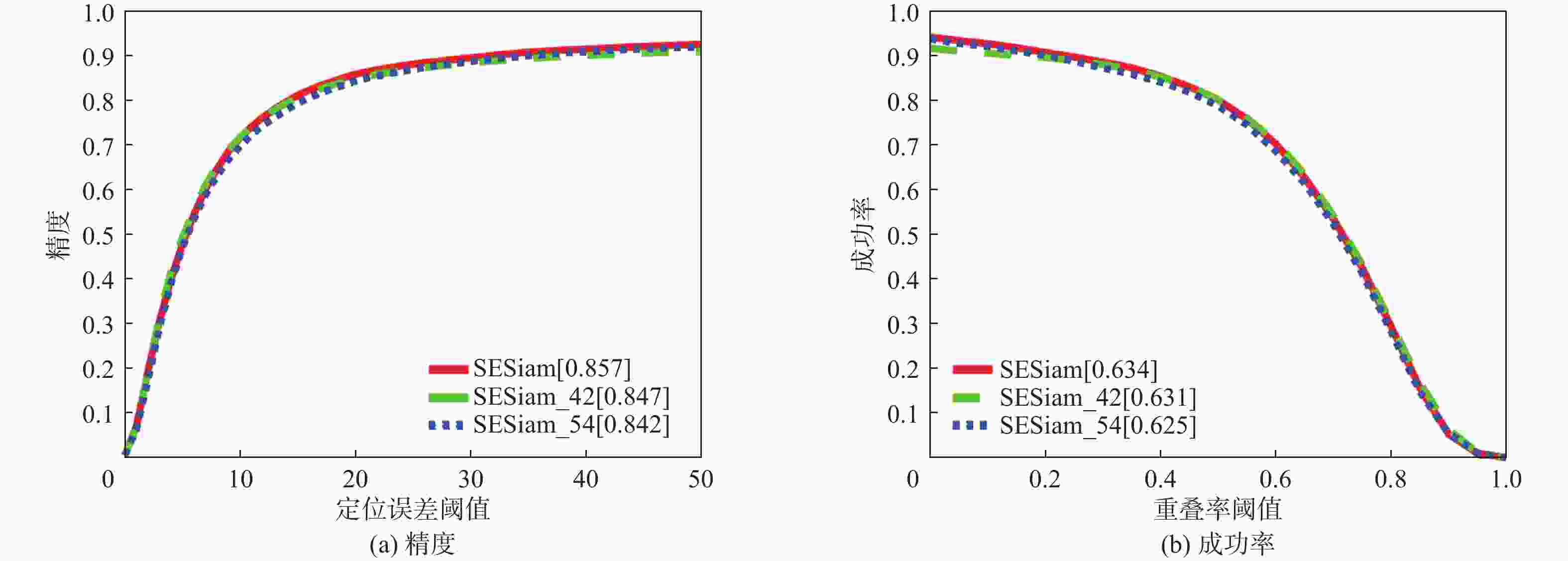

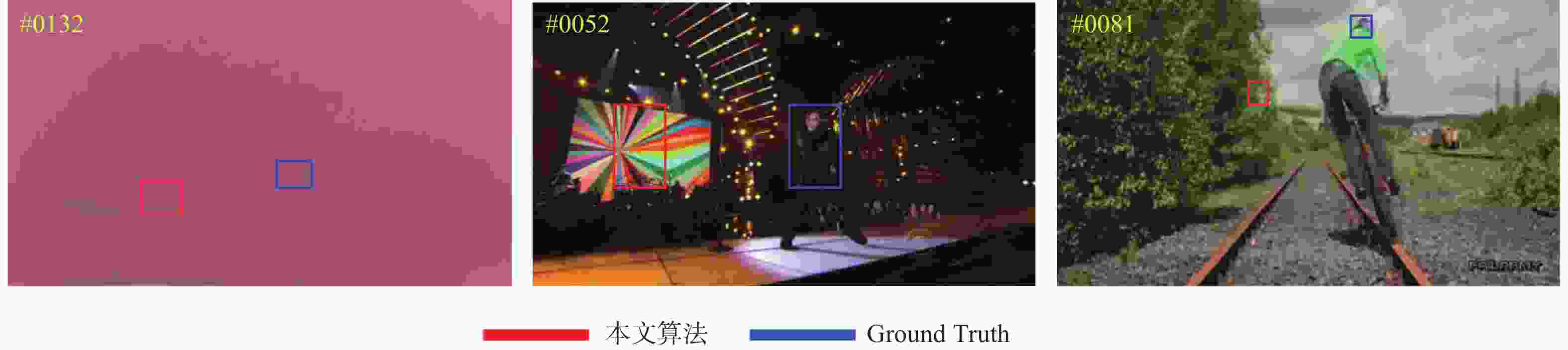

在不加深网络的前提下,为提高孪生网络的特征表达能力,提出基于高层语义嵌入的孪生网络跟踪算法。利用卷积和上采样运算设计了语义嵌入模块,有效融合了深层特征和浅层特征,达到了优化浅层特征的目的,且该模块可以针对任意网络进行灵活的设计与部署。在孪生网络框架下,对AlexNet骨干网络不同层之间添加2个语义嵌入模块。在离线训练阶段进行循环优化,使深层语义信息逐渐转移到较浅的特征层,在跟踪阶段,舍弃语义嵌入模块,仍采用原始的网络结构。实验结果表明:相比于SiamFC,所提算法在OTB2015数据集上精度提高了0.102,成功率提高了0.054。

Abstract: To improve the feature representation ability of the Siamese network without deepening it, a Siamese network tracking algorithm based on high-level semantic embedding was proposed. First, a semantic embedding module was designed using convolution and up-sampling operations. The module effectively fuses deep features with shallow features, thereby optimizing the shallow features, and it can be flexibly designed and deployed for any network. Then, within the Siamese network framework, two semantic embedding modules were added between different layers of the AlexNet backbone. Cyclic optimization was carried out in the offline training stage so that deep semantic information was gradually transferred to the shallower feature layers. In the tracking stage, the semantic embedding modules were discarded and the original network structure was used. Experimental results on the OTB2015 dataset show that, compared with SiamFC, the proposed algorithm improves precision by 0.102 and success rate by 0.054.
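The module's data flow can be illustrated with a small forward-pass sketch. It is a minimal, hypothetical example rather than the authors' implementation: it assumes nearest-neighbour up-sampling, a bias-free 1x1 convolution to match channel counts, and element-wise addition as the fusion operator, none of which the abstract specifies. The function names (`upsample_nearest`, `conv1x1`, `semantic_embed`, `backbone_features`) are illustrative. Feature maps are plain nested lists indexed as [channel][row][column], so only the standard library is needed. The sketch does not model the offline cyclic optimization through which the shallow layers learn the deep semantics; the `use_embedding` flag only mimics dropping the module at tracking time.

```python
from typing import List

# Assumed feature-map layout: [channels][height][width].
FeatureMap = List[List[List[float]]]


def upsample_nearest(x: FeatureMap, factor: int) -> FeatureMap:
    """Nearest-neighbour up-sampling: repeat each pixel `factor` times along both axes."""
    return [
        [[v for v in row for _ in range(factor)] for row in ch for _ in range(factor)]
        for ch in x
    ]


def conv1x1(x: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """Bias-free 1x1 convolution: out[o][r][c] = sum_i weights[o][i] * x[i][r][c]."""
    height, width = len(x[0]), len(x[0][0])
    return [
        [[sum(w_o[i] * x[i][r][c] for i in range(len(x))) for c in range(width)]
         for r in range(height)]
        for w_o in weights
    ]


def _shape(f: FeatureMap) -> tuple:
    """Return (channels, height, width) of a nested-list feature map."""
    return len(f), len(f[0]), len(f[0][0])


def semantic_embed(shallow: FeatureMap, deep: FeatureMap,
                   weights: List[List[float]], factor: int) -> FeatureMap:
    """Hypothetical semantic embedding: up-sample the deep map, project it to the
    shallow map's channel count, then fuse by element-wise addition (assumed)."""
    projected = conv1x1(upsample_nearest(deep, factor), weights)
    if _shape(projected) != _shape(shallow):
        raise ValueError(f"shape mismatch: {_shape(projected)} vs {_shape(shallow)}")
    return [
        [[s + p for s, p in zip(s_row, p_row)] for s_row, p_row in zip(s_ch, p_ch)]
        for s_ch, p_ch in zip(shallow, projected)
    ]


def backbone_features(shallow: FeatureMap, deep: FeatureMap,
                      weights: List[List[float]], factor: int,
                      use_embedding: bool) -> FeatureMap:
    """use_embedding=True mimics training (features pass through the module);
    use_embedding=False mimics tracking (module dropped, features unchanged).
    Weight updates during offline training are not modeled here."""
    if use_embedding:
        return semantic_embed(shallow, deep, weights, factor)
    return shallow


if __name__ == "__main__":
    shallow = [[[1.0, 2.0], [3.0, 4.0]]]  # 1 channel, 2x2
    deep = [[[0.5]], [[1.5]]]             # 2 channels, 1x1
    weights = [[1.0, 2.0]]                # projection from 2 channels to 1
    print(backbone_features(shallow, deep, weights, 2, use_embedding=True))
    print(backbone_features(shallow, deep, weights, 2, use_embedding=False))
```

With these inputs every up-sampled position projects to 1.0 × 0.5 + 2.0 × 1.5 = 3.5, so the training-mode call returns `[[[4.5, 5.5], [6.5, 7.5]]]`, while the tracking-mode call returns the shallow map unchanged. In a real network the fusion would run on framework tensors with learnable weights; nested lists are used here only to keep the example dependency-free.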


Keywords:

- computer vision
- visual tracking
- Siamese network
- semantic embedding
- feature fusion


Table 1. Comparison of tracking performance of Siamese-network-based algorithms

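| Algorithm | Precision | Success rate | Tracking speed/fps |
|---|---|---|---|
| SESiam | 0.857 | 0.634 | 86 |
| SiamRPN | 0.848 | 0.634 | 160 |
| GradNet | 0.861 | 0.639 | 80 |
| CFNet | 0.772 | 0.591 | 78.4 |
| SASiam | 0.849 | 0.641 | 50 |
| DCFNet | 0.817 | 0.627 | 65.9 |
| SiamFC | 0.755 | 0.580 | 83.7 |

Note: fps denotes frames per second.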
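The gains quoted in the abstract follow directly from Table 1. The short script below copies the table's precision and success-rate values as-is and recomputes the differences between any two trackers; it can also rank the trackers by either metric. The names `TABLE_1`, `METRICS`, `gains` and `rank` are illustrative helpers, not part of the paper. Speeds are left out because the table does not say whether all of them were measured on the same hardware.

```python
# Precision and success rate from Table 1, copied verbatim.
TABLE_1 = {
    "SESiam": (0.857, 0.634),
    "SiamRPN": (0.848, 0.634),
    "GradNet": (0.861, 0.639),
    "CFNet": (0.772, 0.591),
    "SASiam": (0.849, 0.641),
    "DCFNet": (0.817, 0.627),
    "SiamFC": (0.755, 0.580),
}
METRICS = ("precision", "success")


def gains(method: str, baseline: str) -> tuple:
    """Absolute (precision, success) gain of `method` over `baseline`, rounded to 3 decimals."""
    return tuple(round(m - b, 3) for m, b in zip(TABLE_1[method], TABLE_1[baseline]))


def rank(metric: str) -> list:
    """Tracker names sorted by one metric, best first; ties keep table order (sort is stable)."""
    idx = METRICS.index(metric)
    return sorted(TABLE_1, key=lambda name: TABLE_1[name][idx], reverse=True)


if __name__ == "__main__":
    print(gains("SESiam", "SiamFC"))
    for metric in METRICS:
        print(metric, rank(metric))
```

`gains("SESiam", "SiamFC")` returns `(0.102, 0.054)`, the figures quoted in the abstract. The rankings also show that SESiam is second in precision behind GradNet and ties with SiamRPN in success rate, behind SASiam and GradNet.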

