
摘要:

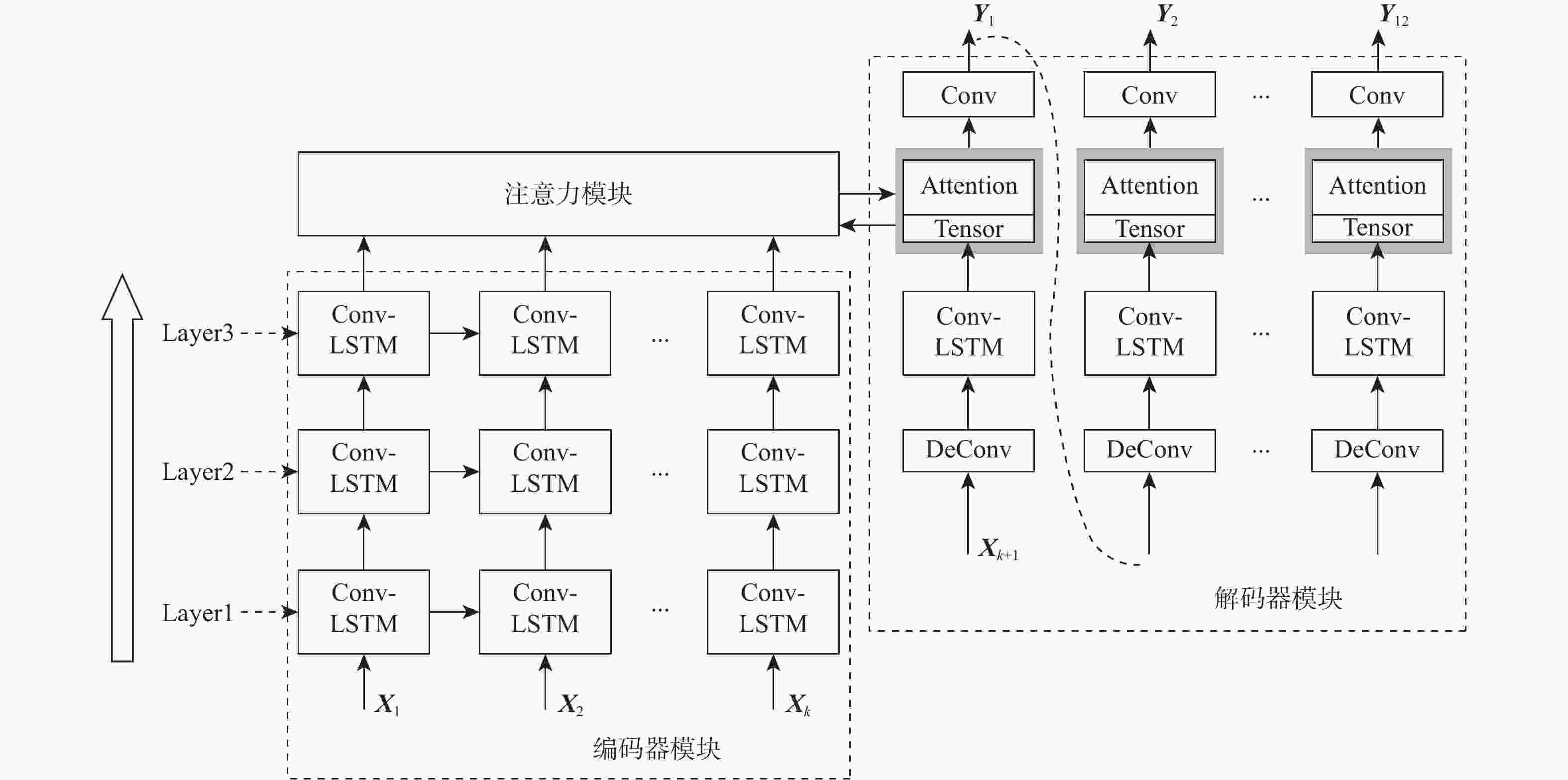

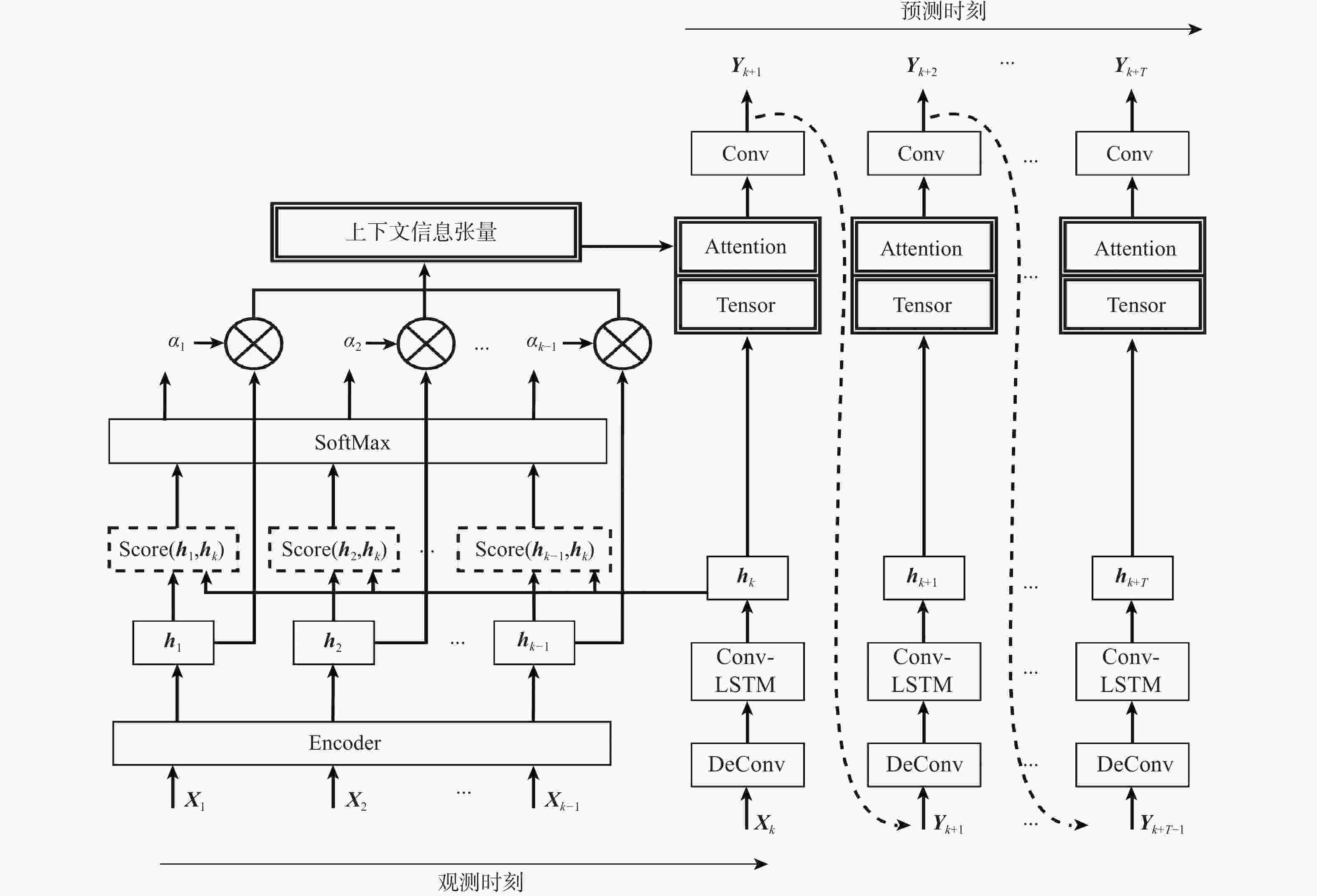

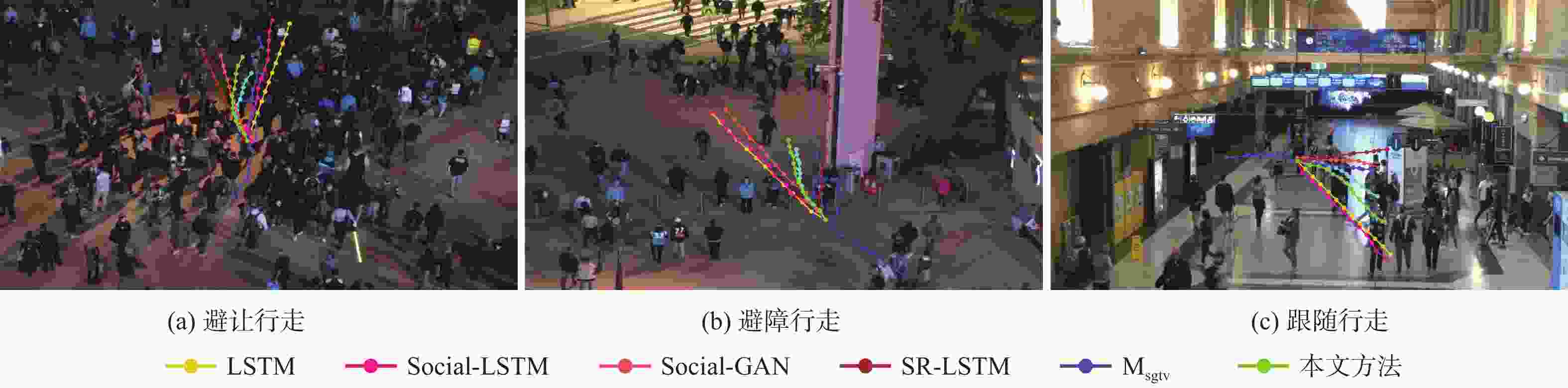

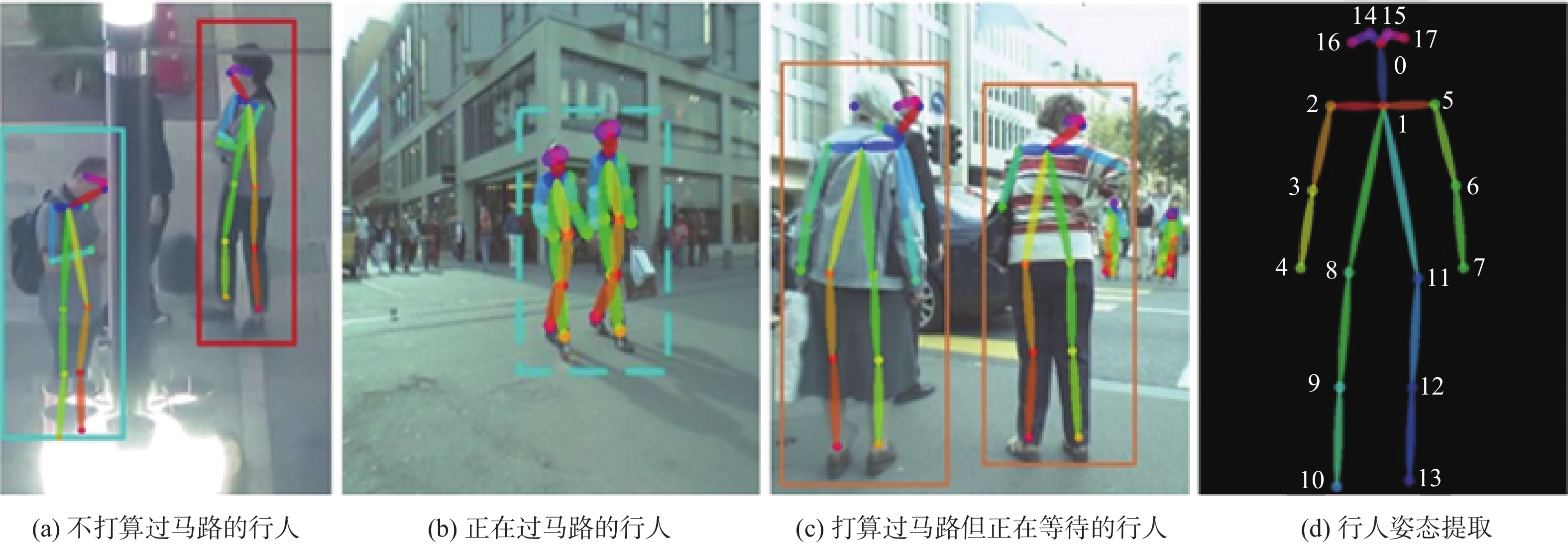

在自动驾驶领域,行人轨迹预测一直是研究热点之一,行人行为的不确定性给轨迹预测带来很大的挑战。目前大部分轨迹预测方法只专注于行人之间的信息交互,忽略了行人意图和场景中其他语义信息对行人轨迹的影响。为此,提出一种基于行人姿态的卷积编码器-解码器网络(PKCEDN)来预测目标行人轨迹的方法,所提方法包含基于卷积、长短时记忆(LSTM)网络的编码器-解码器模型和能够学习当前时刻与过去时刻轨迹相关性的注意力机制。所提方法在MOT16、MOT17和MOT20公开数据集上进行了相关测试,与Linear、LSTM、Social-LSTM、Social-生成对抗网络(GAN)、SR-LSTM和Msgtv等主流方法相比,在保证预测速度不降低的前提下,平均误差降低约36%。

Abstract:In the field of autonomous driving, pedestrian trajectory prediction has been one of the research hotspots, and the uncertainty of pedestrian behavior poses a great challenge to trajectory prediction. Most of the current trajectory prediction methods only focus on the information interaction between pedestrians, ignoring the influence of pedestrian intention and other semantic information in the scene on the pedestrian trajectory. In order to achieve this, this paper suggests a method for predicting target pedestrian trajectory using pose keypoints based convolutional encoder-decoder network (PKCEDN). The method includes an attention mechanism that can learn the relationship between the current moment and past moment trajectories, as well as an encoder-decoder model based on convolutional, long and short-term memory (LSTM) networks. The proposed method has been tested on the MOT16, MOT17, and MOT20 public datasets, and the average error is reduced by about 36% compared to mainstream methods such as Linear, LSTM, Social-LSTM, Social-GAN, SR-LSTM, and Msgtv, while ensuring no reduction in prediction speed.


Table 1. Network layer model parameters list

| Layer | Input | Output |
| --- | --- | --- |
| Layer1 | 16@11×11 | 32@11×11 |
| Layer2 | 32@11×11 | 64@11×11 |
| Layer3 | 64@11×11 | 128@11×11 |
| Deconv | 2@1×1 | 128@11×11 |
| Conv-LSTM (Decoder Module) | 128@11×11 | 128@11×11 |
| Conv | 128@11×11 | 2@1×1 |
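The 1×1 ↔ 11×11 transitions in Table 1 are what standard convolution size arithmetic gives for an 11×11 kernel. The sketch below is only illustrative: Table 1 lists channel counts and feature-map sizes but no kernel sizes, strides, or padding, so `FULL_KERNEL`, `SAME_KERNEL`, and `SAME_PAD` are assumptions chosen to reproduce the listed sizes.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a standard convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# Assumed hyperparameters; Table 1 does not state them.
FULL_KERNEL = 11               # maps 11x11 -> 1x1, or 1x1 -> 11x11
SAME_KERNEL, SAME_PAD = 3, 1   # keeps an 11x11 map at 11x11

deconv_size = deconv_out(1, FULL_KERNEL)                  # Deconv: 2@1x1 -> 128@11x11
same_size = conv_out(11, SAME_KERNEL, padding=SAME_PAD)   # Layer1-3: 11x11 -> 11x11
final_size = conv_out(11, FULL_KERNEL)                    # Conv: 128@11x11 -> 2@1x1

print(deconv_size, same_size, final_size)
```

Other kernel, stride, and padding combinations can yield the same sizes, so this only shows that the shapes in the table are mutually consistent.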

Table 2. Comparison of trajectory prediction results of proposed method and state-of-the-art methods on MOT16 dataset

All values are in pixels.

| Metric | Method | MOT16-02 | MOT16-04 | MOT16-09 | MOT16-10 | MOT16-13 | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ADE | LSTM | 30.12 | 32.15 | 30.52 | 30.96 | 31.73 | 31.096 |
| ADE | Social-LSTM | 29.13 | 30.86 | 31.25 | 29.85 | 29.95 | 30.208 |
| ADE | Social-GAN | 27.63 | 29.42 | 28.75 | 29.54 | 30.83 | 29.234 |
| ADE | SR-LSTM | 28.73 | 29.65 | 30.74 | 29.31 | 29.03 | 29.492 |
| ADE | Msgtv | 27.32 | 29.21 | 27.49 | 30.38 | 29.72 | 28.824 |
| ADE | Proposed method | 19.15 | 17.46 | 19.54 | 16.75 | 18.43 | 18.266 |
| FDE | LSTM | 44.64 | 43.85 | 41.64 | 42.43 | 40.45 | 42.602 |
| FDE | Social-LSTM | 43.53 | 41.85 | 40.64 | 39.56 | 40.75 | 41.266 |
| FDE | Social-GAN | 34.54 | 35.46 | 32.64 | 37.85 | 38.85 | 35.868 |
| FDE | SR-LSTM | 38.35 | 37.31 | 38.62 | 34.18 | 32.48 | 36.188 |
| FDE | Msgtv | 33.07 | 34.38 | 31.83 | 38.01 | 35.74 | 34.606 |
| FDE | Proposed method | 21.11 | 20.43 | 23.66 | 20.78 | 22.54 | 21.704 |
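ADE and FDE are assumed here to carry their usual meaning in trajectory prediction: ADE averages the Euclidean distance between predicted and ground-truth positions over all predicted time steps, while FDE is that distance at the final step only. A minimal sketch of both, using made-up pixel coordinates rather than data from the paper:

```python
import math


def displacement_errors(pred, truth):
    """Return (ADE, FDE) for two equal-length lists of (x, y) pixel positions."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Per-step Euclidean distance between prediction and ground truth.
    dists = [math.dist(p, t) for p, t in zip(pred, truth)]
    return sum(dists) / len(dists), dists[-1]


# Hypothetical 3-step trajectories, for illustration only.
truth = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
pred = [(0.0, 3.0), (10.0, 4.0), (20.0, 5.0)]

ade, fde = displacement_errors(pred, truth)
print(ade, fde)  # 4.0 5.0
```

Because the prediction drifts further from the ground truth at every step, FDE (5.0) exceeds ADE (4.0), the same pattern seen in every row of the table.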

Table 3. Comparison of trajectory prediction results of PKCEDN and state-of-the-art methods on MOT17 dataset

All values are in pixels.

| Metric | Method | MOT17-02 | MOT17-03 | MOT17-08 | MOT17-11 | MOT17-14 | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ADE | LSTM | 35.15 | 36.31 | 33.85 | 35.21 | 39.38 | 35.98 |
| ADE | Social-LSTM | 30.01 | 31.48 | 32.89 | 35.02 | 33.64 | 32.608 |
| ADE | Social-GAN | 28.79 | 30.13 | 28.62 | 30.93 | 30.48 | 29.79 |
| ADE | SR-LSTM | 29.13 | 30.53 | 29.24 | 30.15 | 29.61 | 29.732 |
| ADE | Msgtv | 26.53 | 30.13 | 28.75 | 29.32 | 27.54 | 28.454 |
| ADE | Proposed method | 20.04 | 17.86 | 18.42 | 15.84 | 18.05 | 18.042 |
| FDE | LSTM | 50.64 | 55.97 | 61.57 | 60.02 | 63.42 | 58.324 |
| FDE | Social-LSTM | 44.64 | 52.18 | 54.15 | 43.63 | 42.17 | 47.354 |
| FDE | Social-GAN | 35.64 | 39.24 | 38.02 | 37.89 | 35.93 | 37.344 |
| FDE | SR-LSTM | 36.73 | 40.13 | 37.85 | 36.47 | 34.64 | 37.164 |
| FDE | Msgtv | 34.53 | 37.64 | 35.18 | 37.56 | 33.17 | 35.616 |
| FDE | Proposed method | 24.75 | 23.84 | 25.03 | 22.41 | 25.75 | 24.356 |
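The roughly 36% error reduction quoted in the abstract can be cross-checked against the mean ADE column for MOT17. The sketch below assumes the reduction is measured against the strongest baseline; the text does not say which baseline or metric the figure refers to, so this is one plausible reading rather than the authors' stated procedure.

```python
# Mean ADE in pixels, copied from the MOT17 comparison (Table 3).
mean_ade = {
    "LSTM": 35.98,
    "Social-LSTM": 32.608,
    "Social-GAN": 29.79,
    "SR-LSTM": 29.732,
    "Msgtv": 28.454,
    "Proposed": 18.042,
}

proposed = mean_ade["Proposed"]
baselines = {name: v for name, v in mean_ade.items() if name != "Proposed"}

# The strongest baseline is the one with the lowest mean ADE.
best_name = min(baselines, key=baselines.get)

# Relative reduction of the proposed method with respect to that baseline.
reduction_pct = 100.0 * (baselines[best_name] - proposed) / baselines[best_name]
print(f"{best_name}: {reduction_pct:.1f}% lower mean ADE")
```

Under this reading the reduction relative to Msgtv comes out at about 36.6%, in line with the abstract's figure.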

Table 4. Comparison of trajectory prediction results of PKCEDN and state-of-the-art methods on MOT20 dataset

All values are in pixels.

| Metric | Method | MOT20-01 | MOT20-02 | MOT20-03 | MOT20-05 | Mean |
| --- | --- | --- | --- | --- | --- | --- |
| ADE | LSTM | 32.13 | 34.98 | 29.76 | 30.65 | 31.88 |
| ADE | Social-LSTM | 30.10 | 33.21 | 30.41 | 29.98 | 30.93 |
| ADE | Social-GAN | 27.02 | 26.98 | 26.81 | 24.06 | 26.22 |
| ADE | SR-LSTM | 28.31 | 33.01 | 28.73 | 29.53 | 29.895 |
| ADE | Msgtv | 28.64 | 27.16 | 26.14 | 24.98 | 26.730 |
| ADE | Proposed method | 18.64 | 17.85 | 20.13 | 14.43 | 17.763 |
| FDE | LSTM | 46.54 | 51.83 | 53.54 | 53.41 | 51.33 |
| FDE | Social-LSTM | 43.83 | 44.12 | 43.84 | 40.75 | 43.14 |
| FDE | Social-GAN | 32.79 | 30.81 | 33.56 | 31.84 | 32.25 |
| FDE | SR-LSTM | 36.64 | 40.37 | 39.21 | 34.78 | 37.750 |
| FDE | Msgtv | 33.69 | 32.42 | 34.15 | 31.19 | 32.863 |
| FDE | Proposed method | 24.74 | 21.63 | 24.31 | 19.46 | 22.535 |
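The mean columns appear to be plain arithmetic means over the four MOT20 sequences. A quick sketch that recomputes a few of them from values copied out of the table; the tolerance is an assumption meant to absorb rounding to three decimals:

```python
# (per-sequence values, reported mean) in pixels, copied from Table 4.
rows = {
    "SR-LSTM ADE": ([28.31, 33.01, 28.73, 29.53], 29.895),
    "Msgtv ADE": ([28.64, 27.16, 26.14, 24.98], 26.730),
    "Proposed ADE": ([18.64, 17.85, 20.13, 14.43], 17.763),
    "Proposed FDE": ([24.74, 21.63, 24.31, 19.46], 22.535),
}

TOLERANCE = 0.001  # reported means carry at most three decimals

mismatches = []
for name, (values, reported) in rows.items():
    recomputed = sum(values) / len(values)
    if abs(recomputed - reported) > TOLERANCE:
        mismatches.append((name, recomputed, reported))

print(mismatches)  # an empty list means every reported mean was reproduced
```

The same check is a cheap way to catch transcription errors when copying such tables, since a single wrong digit in a per-sequence entry shifts the recomputed mean.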

Table 5. Ablation study of proposed method

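| Algorithm | Metric | Value (MOT16) / pixels |
| --- | --- | --- |
| Tenl | MADE | 24.455 |
| Tenl | MFDE | 31.324 |
| Tenl + Tenp | MADE | 22.543 |
| Tenl + Tenp | MFDE | 30.041 |
| Tenh | MADE | 21.412 |
| Tenh | MFDE | 27.544 |
| Tenp + Tenh | MADE | 18.266 |
| Tenp + Tenh | MFDE | 21.704 |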

Table 6. Comparison with state-of-the-art methods on pre-processing speed and prediction speed

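| Method | Data pre-processing module | Prediction module speed / (times·s⁻¹) | Speed-up / % |
| --- | --- | --- | --- |
| LSTM | 0.03 | 0.04 | 76.29 |
| Social-LSTM | 2.13 | 3.21 | 1 |
| Social-GAN | 0.20 | 0.26 | 11.60 |
| SR-LSTM | 1.24 | 2.01 | 1.64 |
| Msgtv | 0.24 | 0.38 | 8.61 |
| Proposed method | 0.54 | 0.28 | 6.51 |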
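The speed-up column is numerically consistent with taking Social-LSTM as the reference and dividing its summed pre-processing and prediction figures by the corresponding sum for each method. The text does not state this formula, so the sketch below is an inferred reading; it also implies that the two figures behave like per-run costs (smaller is faster) rather than rates.

```python
# (pre-processing, prediction) figures copied from Table 6.
figures = {
    "LSTM": (0.03, 0.04),
    "Social-LSTM": (2.13, 3.21),
    "Social-GAN": (0.20, 0.26),
    "SR-LSTM": (1.24, 2.01),
    "Msgtv": (0.24, 0.38),
    "Proposed": (0.54, 0.28),
}

# Speed-up values as reported in the table.
reported = {
    "LSTM": 76.29,
    "Social-LSTM": 1.0,
    "Social-GAN": 11.60,
    "SR-LSTM": 1.64,
    "Msgtv": 8.61,
    "Proposed": 6.51,
}

reference_total = sum(figures["Social-LSTM"])  # assumed reference method

# Ratio of the reference total to each method's total.
speedup = {name: reference_total / (pre + pred) for name, (pre, pred) in figures.items()}

max_gap = max(abs(speedup[name] - reported[name]) for name in reported)
print(f"largest deviation from the reported column: {max_gap:.4f}")
```

Every recomputed ratio lands within 0.01 of the reported value, which supports the ratio interpretation over a percentage one.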

