
摘要:

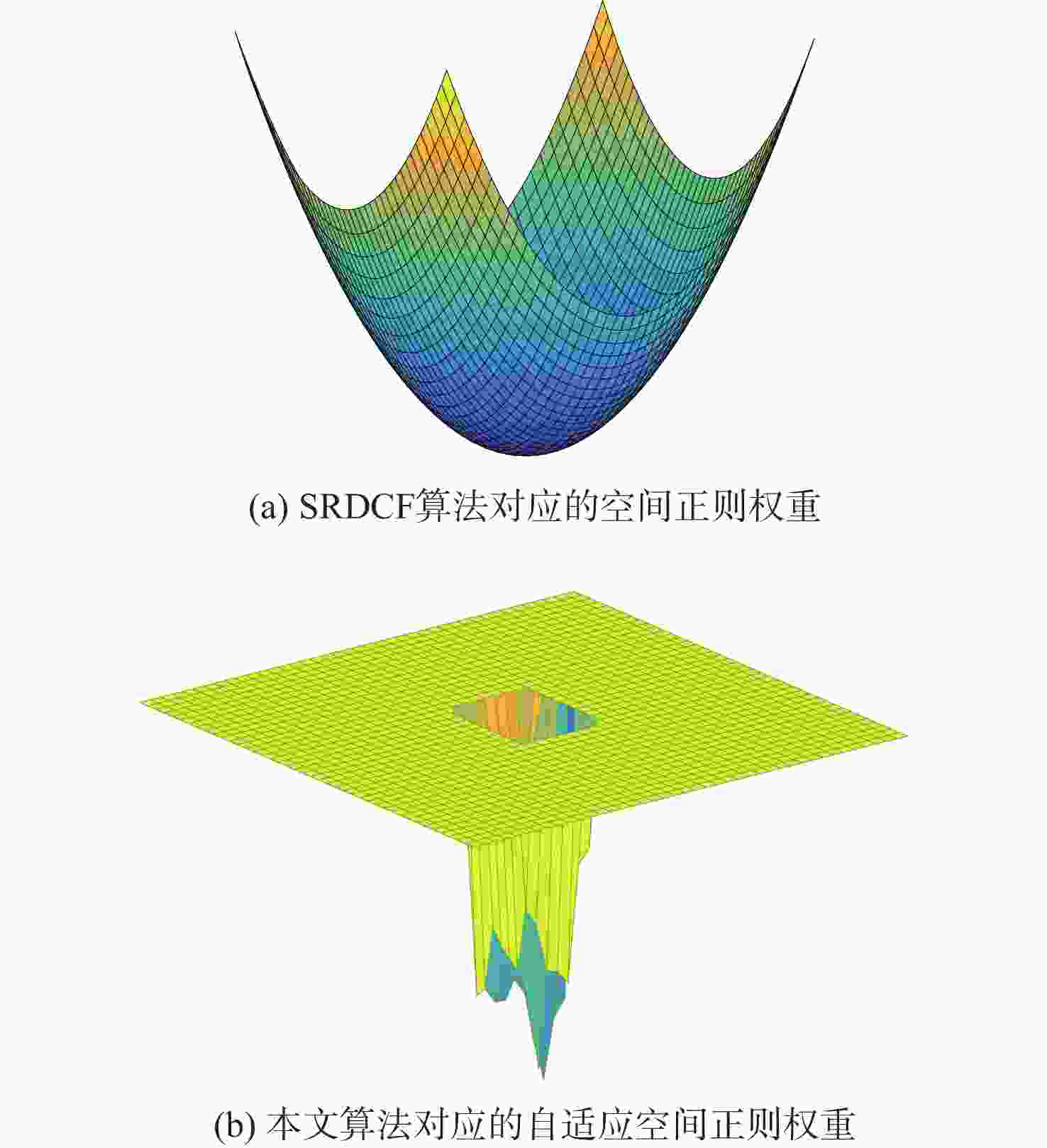

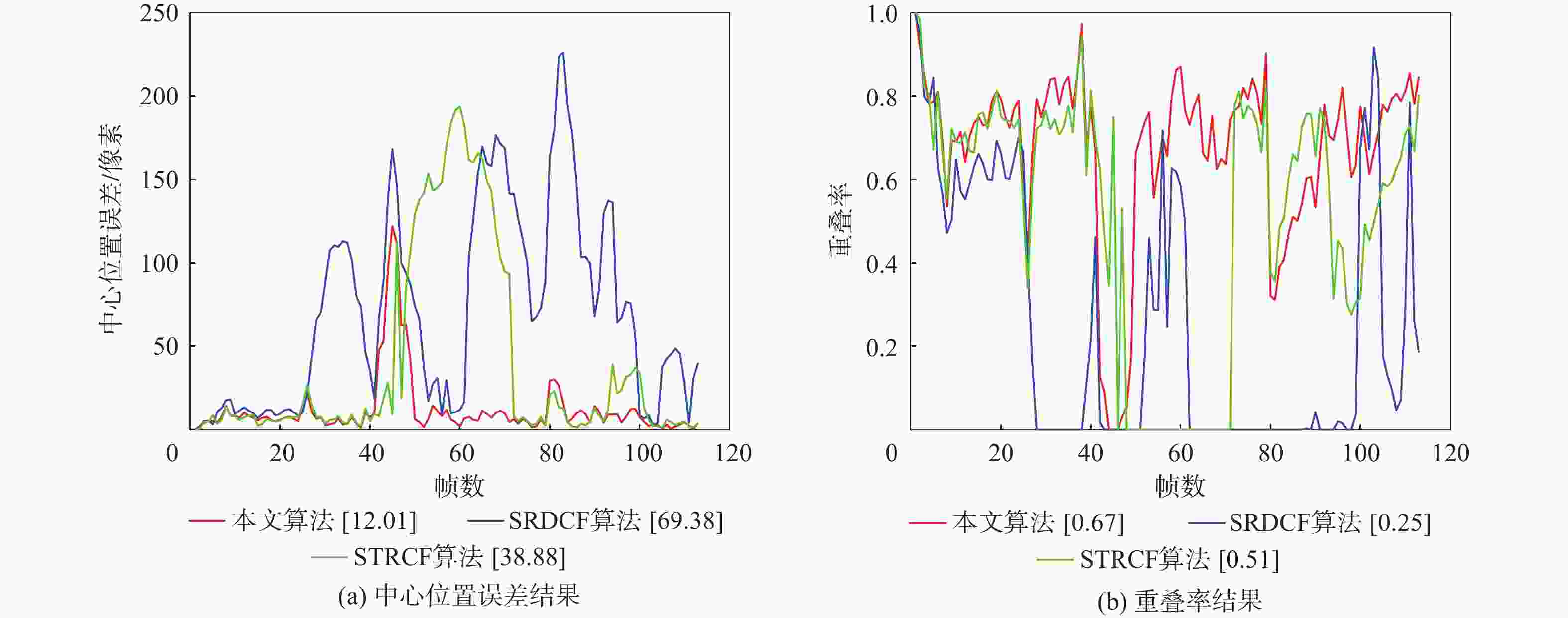

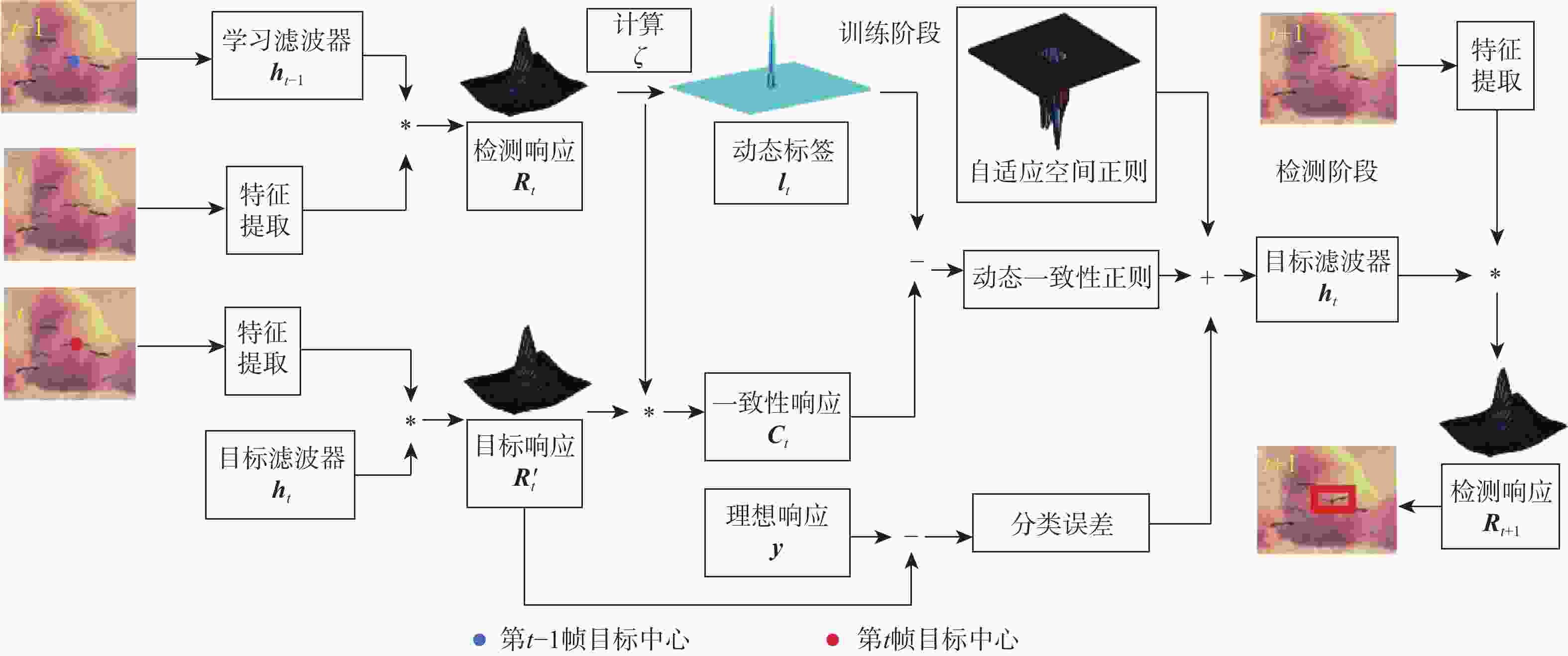

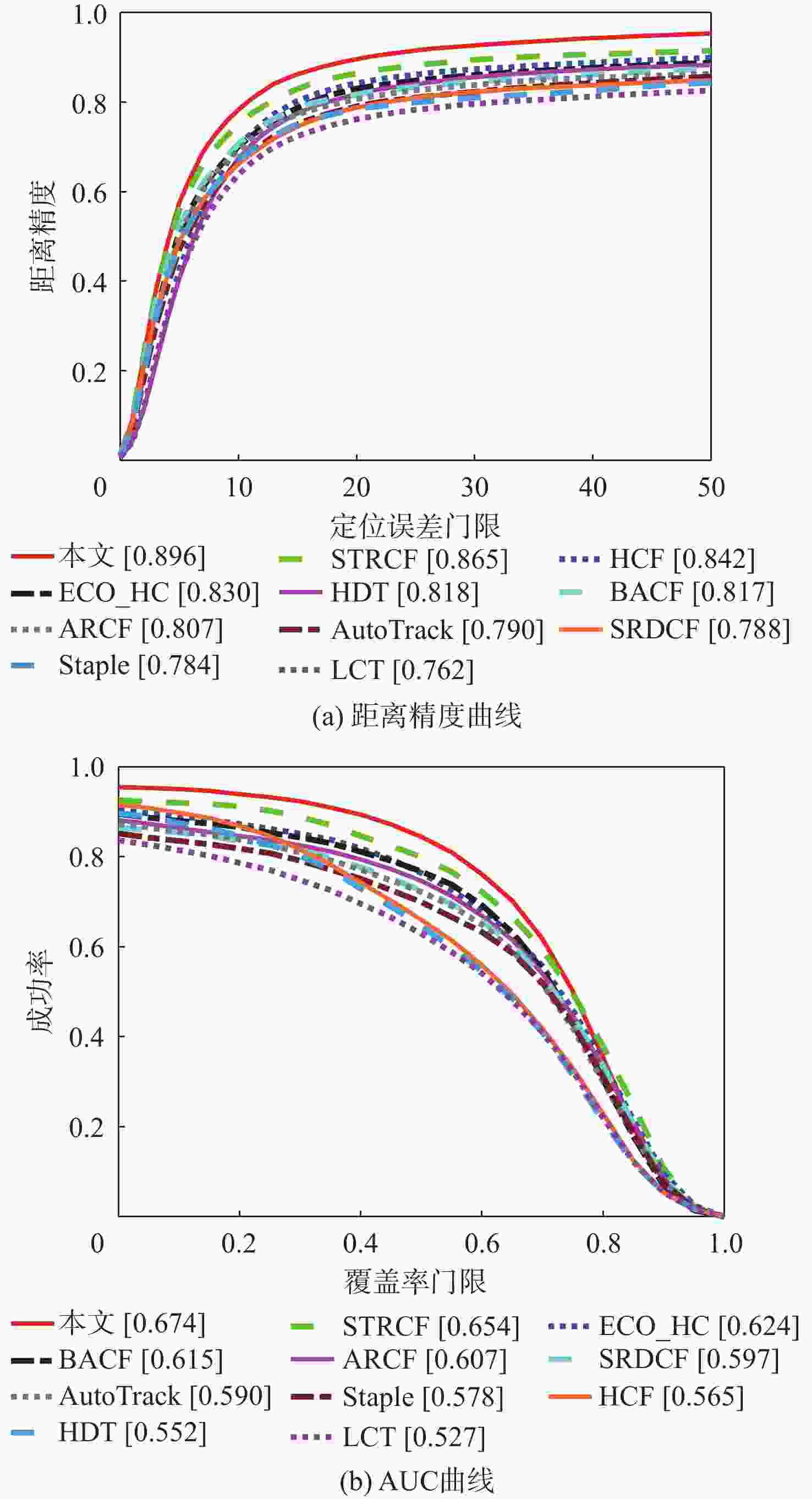

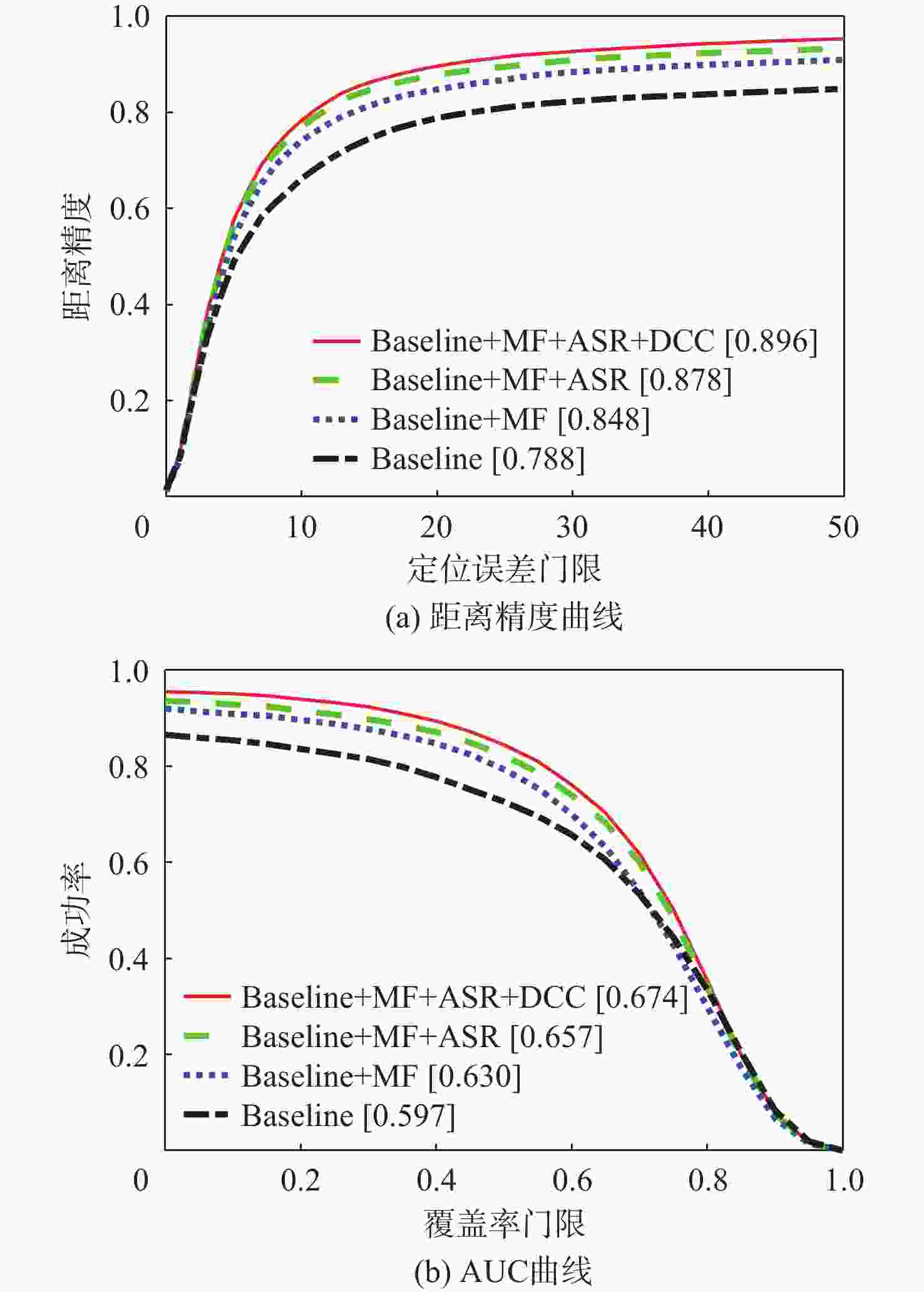

针对空间正则相关滤波(SRDCF)算法正则权重固定和模型退化等问题,提出了一种基于显著感知与一致性约束的目标跟踪算法。提取方向梯度直方图特征、浅层特征及中层特征进行融合,提升物体外观模型的表达能力;通过显著性检测算法获得初始帧的显著感知参考权重,建立正则权重在相邻2帧之间的关联;最小化实际一致性响应与理想一致性响应之间的差异,约束一致性水平防止滤波器模板退化;提出一种动态约束策略,进一步提高跟踪器在复杂场景下的适应性。在OTB2015、TempleColor128和UAV20L公开数据集上对所提算法进行测试,实验结果表明:相比于SRDCF算法,所提算法在OTB2015数据集上距离精度提高了0.108,AUC提高了0.077,速度为22.41帧/s,实时性较好。

Abstract: To address the fixed regularization weight and the model degradation of the spatially regularized discriminative correlation filter (SRDCF) algorithm, a correlation filter tracking algorithm based on saliency awareness and a consistency constraint is proposed. First, histogram of oriented gradients (HOG) features, shallow-layer features and middle-layer features are extracted and fused to improve the representation ability of the appearance model. Second, a saliency detection algorithm produces a saliency-aware reference weight in the initial frame, and the regularization weights of every two adjacent frames are then associated with each other. Third, to prevent degradation of the filter template, the difference between the actual consistency map and a predefined ideal consistency map is minimized, which constrains the consistency level. In addition, a dynamic constraint strategy is proposed to further improve the adaptability of the tracker in complex scenarios. The algorithm is tested on the public OTB2015, TempleColor128 and UAV20L benchmarks. Experimental results show that, compared with SRDCF, the proposed algorithm improves the distance precision by 0.108 and the success rate (AUC) by 0.077 on OTB2015, while running at 22.41 frames/s, which gives good real-time performance.
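The abstract does not state which saliency detector yields the reference weight or how that weight enters the spatial regularization. Purely as an illustration, the sketch below assumes the spectral residual detector of Hou and Zhang (2007) and an SRDCF-style quadratic penalty w(m, n) = μ + η(m/P)² + η(n/Q)² centred on the target. The helper names, the placeholder values of `mu`, `eta` and `alpha`, and the rule that lowers the penalty on salient pixels are all hypothetical choices, not the authors' formulation.

```python
import numpy as np


def box_mean(a: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k moving average with edge padding (numpy only, no SciPy)."""
    r = k // 2
    padded = np.pad(a, r, mode="edge")
    out = np.zeros(a.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out / (k * k)


def spectral_residual_saliency(gray: np.ndarray) -> np.ndarray:
    """Spectral residual saliency map (Hou and Zhang, 2007), scaled to [0, 1]."""
    spectrum = np.fft.fft2(gray.astype(float))
    log_amp = np.log(np.abs(spectrum) + 1e-8)  # log amplitude spectrum
    phase = np.angle(spectrum)
    residual = log_amp - box_mean(log_amp)      # subtract the locally averaged spectrum
    sal = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    sal = box_mean(sal)  # light smoothing; the original method uses a Gaussian filter
    sal -= sal.min()
    return sal / (sal.max() + 1e-12)


def quadratic_weight(shape, target_size, mu=0.1, eta=3.0):
    """SRDCF-style penalty mu + eta*(m/P)^2 + eta*(n/Q)^2 around the patch center.

    mu and eta are placeholder values, not taken from the paper.
    """
    h, w = shape
    P, Q = target_size  # assumed target height and width in pixels
    m = np.arange(h) - (h - 1) / 2
    n = np.arange(w) - (w - 1) / 2
    M, N = np.meshgrid(m, n, indexing="ij")
    return mu + eta * (M / P) ** 2 + eta * (N / Q) ** 2


def saliency_aware_weight(base_w, saliency, alpha=0.5):
    """HYPOTHETICAL rule: shrink the penalty on salient pixels (0 <= alpha < 1)."""
    return base_w * (1.0 - alpha * saliency)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patch = rng.random((64, 64))
    patch[24:40, 24:40] += 2.0  # synthetic bright "target" in the center
    sal = spectral_residual_saliency(patch)
    w = saliency_aware_weight(quadratic_weight(patch.shape, (16, 16)), sal)
    print(sal.shape, w.min(), w.max())
```

Because the saliency map lies in [0, 1] and `alpha` is below 1, the modulated weight stays strictly positive, so the penalty never vanishes entirely; it only relaxes where the detector marks the image as salient.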


Key words:

- machine vision
- target tracking
- correlation filtering
- saliency awareness
- consistency constraint


Table 1. Comparison of average tracking speed (frames/s) of different algorithms on three datasets

| Algorithm | OTB2015 | TempleColor128 | UAV20L |
|---|---|---|---|
| Ours | 22.41 | 20.84 | 19.49 |
| BACF | 35.43 | 36.75 | 27.13 |
| STRCF | 24.28 | 21.31 | 19.17 |
| SRDCF | 7.60 | 8.42 | 6.04 |
| Staple | 82.80 | 79.16 | 73.71 |
| ARCF | 17.80 | 16.49 | 16.08 |
| AutoTrack | 28.44 | 27.52 | 23.46 |
| ECO_HC | 59.39 | 58.64 | 63.21 |
| LCT | 22.78 | 25.26 | 29.54 |
| HCF | 1.97 | 1.92 | 6.70 |
| HDT | 3.69 | 3.57 | 2.88 |
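The speed figures in Table 1 are easiest to compare as ratios. The snippet below copies three rows from the table and computes the proposed tracker's speed relative to its SRDCF baseline and to STRCF; the dictionary layout and the `speedup` helper are illustrative only.

```python
# Average tracking speeds in frames/s, copied from Table 1.
speeds = {
    "Ours":  {"OTB2015": 22.41, "TempleColor128": 20.84, "UAV20L": 19.49},
    "SRDCF": {"OTB2015": 7.60,  "TempleColor128": 8.42,  "UAV20L": 6.04},
    "STRCF": {"OTB2015": 24.28, "TempleColor128": 21.31, "UAV20L": 19.17},
}


def speedup(tracker: str, baseline: str, dataset: str) -> float:
    """Ratio of two trackers' average speeds on one dataset."""
    return speeds[tracker][dataset] / speeds[baseline][dataset]


for ds in speeds["Ours"]:
    print(f"{ds}: {speedup('Ours', 'SRDCF', ds):.2f}x SRDCF, "
          f"{speedup('Ours', 'STRCF', ds):.2f}x STRCF")
```

With these values the proposed tracker runs roughly 2.5 to 3.2 times as fast as SRDCF and at about the same speed as STRCF (0.92 to 1.02 times).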

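Table 2. Distance precision of different algorithms on the OTB2015 dataset for 11 video attributes

| Algorithm | IV | SV | OC | DEF | MB | FM | IPR | OPR | OV | BC | LR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | **0.881** | **0.861** | **0.841** | **0.895** | **0.867** | **0.853** | 0.856 | **0.882** | **0.870** | **0.897** | 0.782 |
| BACF | 0.803 | 0.769 | 0.730 | 0.764 | 0.745 | 0.790 | 0.792 | 0.781 | 0.756 | 0.805 | 0.741 |
| STRCF | 0.837 | 0.840 | 0.810 | 0.841 | 0.826 | 0.802 | 0.811 | 0.850 | 0.766 | 0.872 | 0.737 |
| SRDCF | 0.781 | 0.743 | 0.727 | 0.730 | 0.767 | 0.769 | 0.742 | 0.740 | 0.603 | 0.775 | 0.663 |
| Staple | 0.778 | 0.724 | 0.724 | 0.747 | 0.700 | 0.710 | 0.768 | 0.738 | 0.668 | 0.749 | 0.610 |
| ARCF | 0.763 | 0.770 | 0.737 | 0.767 | 0.757 | 0.768 | 0.785 | 0.769 | 0.671 | 0.760 | 0.749 |
| AutoTrack | 0.783 | 0.742 | 0.735 | 0.735 | 0.735 | 0.746 | 0.777 | 0.766 | 0.696 | 0.755 | 0.773 |
| ECO_HC | 0.775 | 0.792 | 0.777 | 0.793 | 0.770 | 0.799 | 0.762 | 0.801 | 0.764 | 0.807 | 0.847 |
| LCT | 0.743 | 0.678 | 0.678 | 0.685 | 0.670 | 0.681 | 0.781 | 0.746 | 0.592 | 0.734 | 0.537 |
| HCF | 0.830 | 0.798 | 0.776 | 0.790 | 0.804 | 0.815 | **0.864** | 0.816 | 0.677 | 0.843 | 0.831 |
| HDT | 0.809 | 0.774 | 0.744 | 0.802 | 0.783 | 0.779 | 0.799 | 0.787 | 0.616 | 0.789 | **0.849** |

Note: Bold indicates the best value for each attribute. IV: illumination variation; SV: scale variation; OC: occlusion; DEF: deformation; MB: motion blur; FM: fast motion; IPR: in-plane rotation; OPR: out-of-plane rotation; OV: out-of-view; BC: background clutters; LR: low resolution.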
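Table 2 uses distance precision, the standard OTB metric: the fraction of frames whose center location error (the Euclidean distance between predicted and ground-truth box centers) is within a pixel threshold, conventionally 20 pixels. A minimal sketch, assuming boxes given as (x, y, w, h) rows; the function names are hypothetical and the official OTB toolkit remains the reference implementation. Per-attribute scores restrict the evaluation to the sequences annotated with that attribute.

```python
import numpy as np


def center_errors(pred_boxes, gt_boxes) -> np.ndarray:
    """Euclidean distance between box centers; boxes are (x, y, w, h) rows."""
    pred = np.asarray(pred_boxes, dtype=float)
    gt = np.asarray(gt_boxes, dtype=float)
    pred_c = pred[:, :2] + pred[:, 2:] / 2
    gt_c = gt[:, :2] + gt[:, 2:] / 2
    return np.linalg.norm(pred_c - gt_c, axis=1)


def distance_precision(pred_boxes, gt_boxes, threshold: float = 20.0) -> float:
    """Fraction of frames whose center location error is at most `threshold` px."""
    return float(np.mean(center_errors(pred_boxes, gt_boxes) <= threshold))


if __name__ == "__main__":
    gt = [[10, 10, 20, 20]] * 4
    # Predictions shifted horizontally by 0, 5, 15 and 30 pixels.
    pred = [[10, 10, 20, 20], [15, 10, 20, 20], [25, 10, 20, 20], [40, 10, 20, 20]]
    print(distance_precision(pred, gt))
```

In the demo three of the four frames fall within 20 pixels, so the precision is 0.75.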

Table 3. Success rate of different algorithms on the OTB2015 dataset for 11 video attributes

| Algorithm | IV | SV | OC | DEF | MB | FM | IPR | OPR | OV | BC | LR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | **0.678** | **0.639** | **0.647** | **0.664** | **0.673** | **0.651** | **0.624** | **0.652** | **0.638** | **0.682** | 0.550 |
| BACF | 0.622 | 0.572 | 0.565 | 0.571 | 0.575 | 0.599 | 0.582 | 0.578 | 0.548 | 0.605 | 0.532 |
| STRCF | 0.652 | 0.631 | 0.614 | 0.605 | 0.652 | 0.628 | 0.602 | 0.626 | 0.583 | 0.647 | 0.538 |
| SRDCF | 0.607 | 0.559 | 0.554 | 0.541 | 0.594 | 0.597 | 0.541 | 0.547 | 0.461 | 0.582 | 0.495 |
| Staple | 0.593 | 0.518 | 0.541 | 0.548 | 0.540 | 0.540 | 0.549 | 0.534 | 0.476 | 0.561 | 0.399 |
| ARCF | 0.600 | 0.561 | 0.560 | 0.583 | 0.605 | 0.591 | 0.562 | 0.559 | 0.500 | 0.588 | 0.512 |
| AutoTrack | 0.604 | 0.542 | 0.555 | 0.559 | 0.585 | 0.583 | 0.554 | 0.555 | 0.534 | 0.562 | 0.540 |
| ECO_HC | 0.603 | 0.592 | 0.587 | 0.587 | 0.604 | 0.618 | 0.553 | 0.587 | 0.560 | 0.603 | **0.589** |
| LCT | 0.517 | 0.428 | 0.476 | 0.481 | 0.516 | 0.507 | 0.529 | 0.505 | 0.446 | 0.528 | 0.299 |
| HCF | 0.550 | 0.485 | 0.533 | 0.530 | 0.585 | 0.570 | 0.566 | 0.540 | 0.474 | 0.585 | 0.439 |
| HDT | 0.529 | 0.477 | 0.522 | 0.540 | 0.577 | 0.555 | 0.536 | 0.528 | 0.453 | 0.544 | 0.463 |

Note: Bold indicates the best value for each attribute.
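Success scores of the kind reported in Table 3 and the AUC figure in the abstract come from the OTB success plot: for each overlap threshold swept from 0 to 1, the fraction of frames whose intersection-over-union (IoU) with the ground truth exceeds it, with the area under that curve (its mean over the thresholds) used as the ranking score. The sketch assumes (x, y, w, h) boxes in continuous coordinates and 21 evenly spaced thresholds as in the OTB protocol; the helper names are hypothetical.

```python
import numpy as np


def iou(pred_boxes, gt_boxes) -> np.ndarray:
    """Per-frame intersection-over-union of (x, y, w, h) boxes."""
    pred = np.asarray(pred_boxes, dtype=float)
    gt = np.asarray(gt_boxes, dtype=float)
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union


def success_auc(pred_boxes, gt_boxes, num_thresholds: int = 21):
    """Return (AUC, success curve); AUC is the mean success rate over thresholds."""
    overlaps = iou(pred_boxes, gt_boxes)
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    curve = np.array([np.mean(overlaps > t) for t in thresholds])
    return float(curve.mean()), curve


if __name__ == "__main__":
    gt = [[0, 0, 10, 10], [0, 0, 10, 10]]
    pred = [[0, 0, 10, 10], [5, 0, 10, 10]]  # IoU of 1.0 and 1/3
    auc, curve = success_auc(pred, gt)
    print(round(auc, 4), curve)
```

In the demo the curve is 1.0 for thresholds below 1/3, 0.5 up to 0.95, and 0 at 1.0, giving an AUC of 13.5/21.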

