Multidimensional degradation data generation method based on variational autoencoder


Abstract (translated from the Chinese):

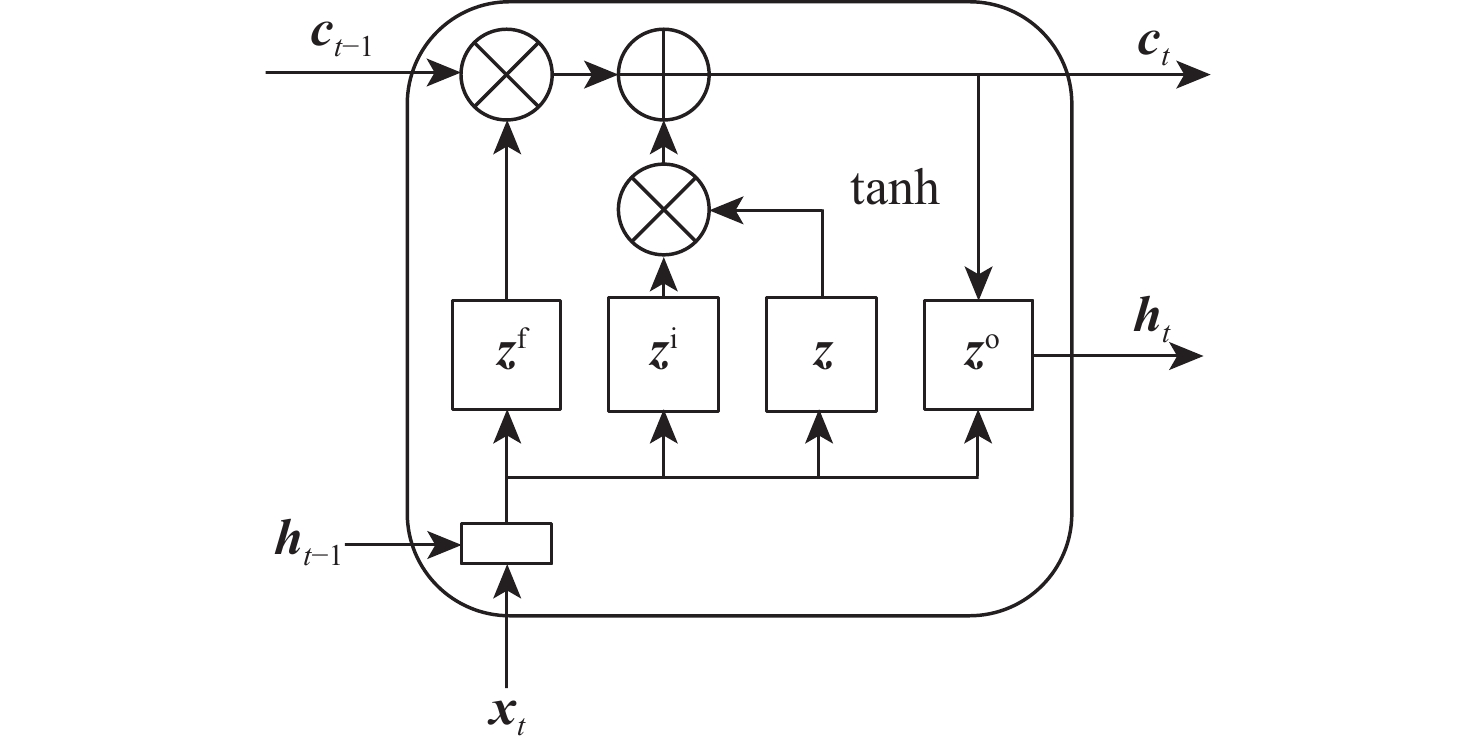

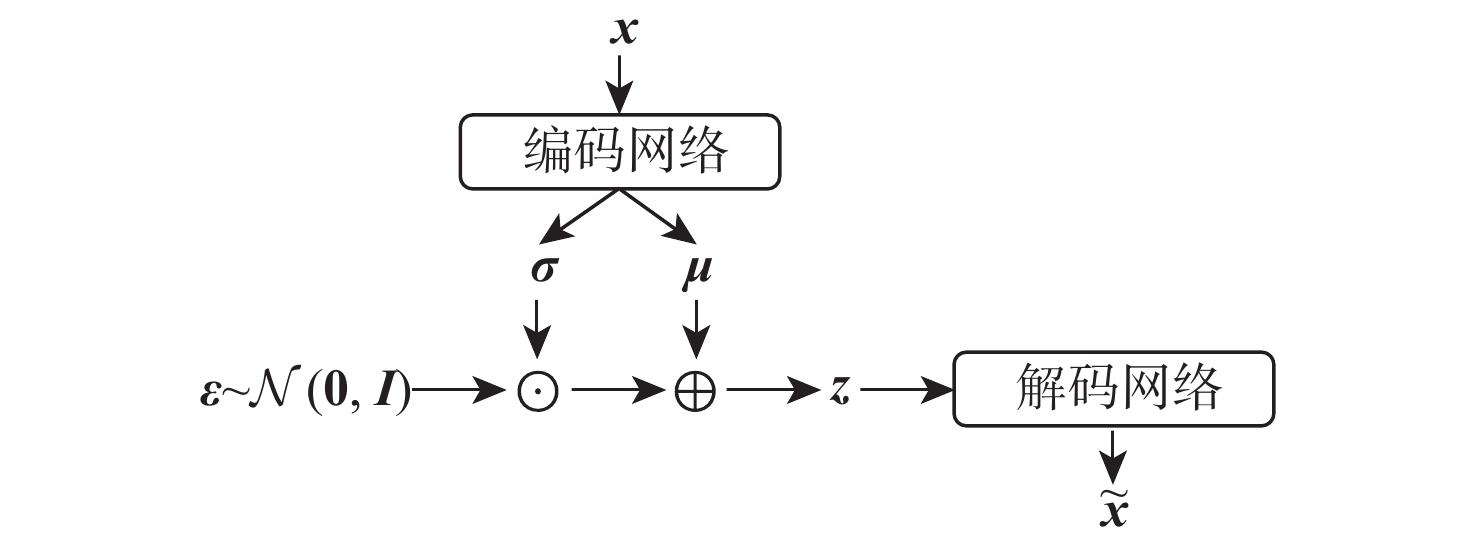

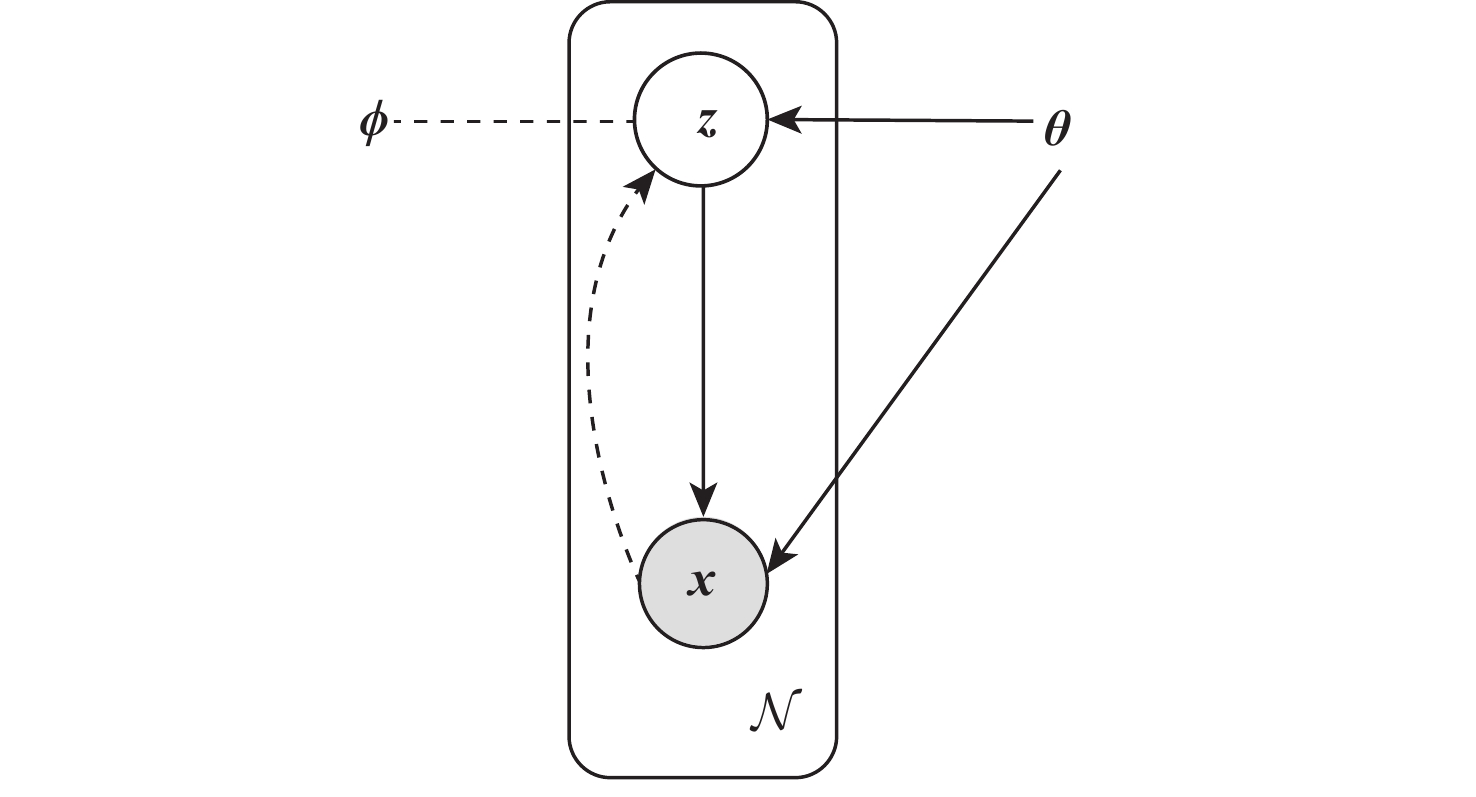

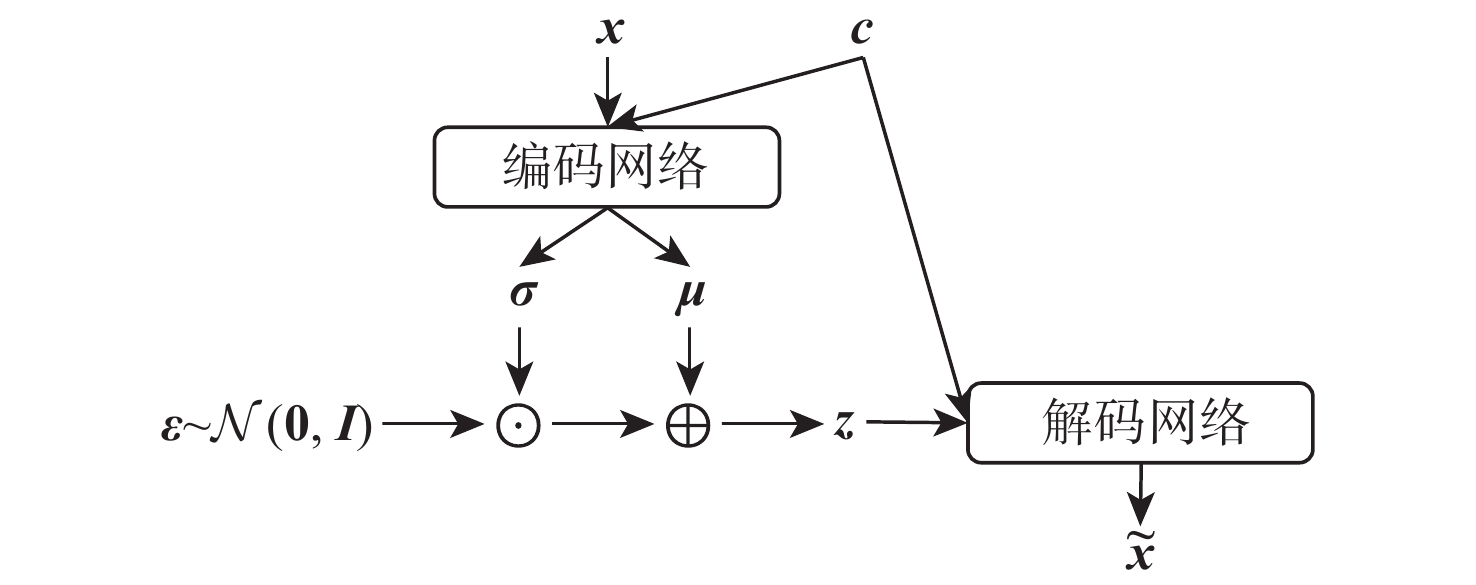

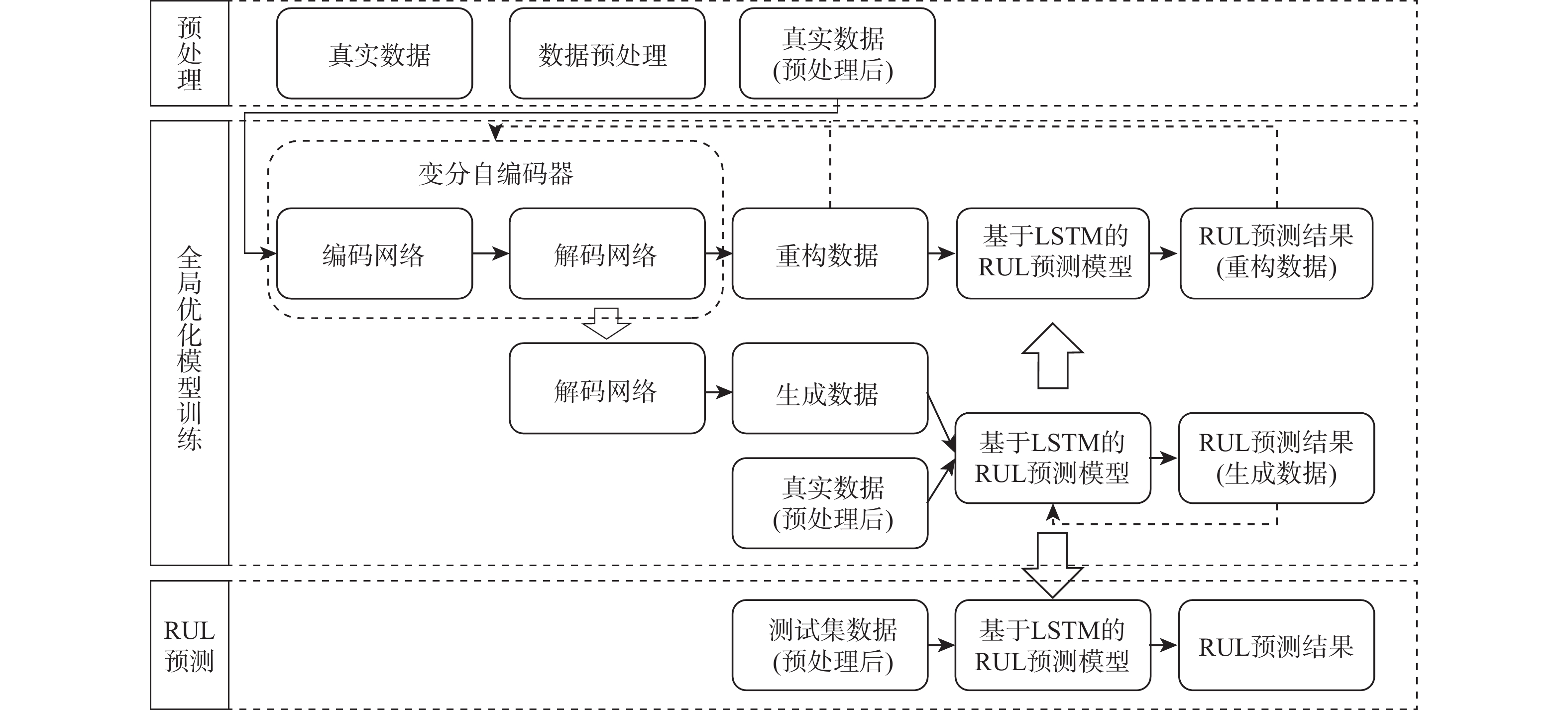

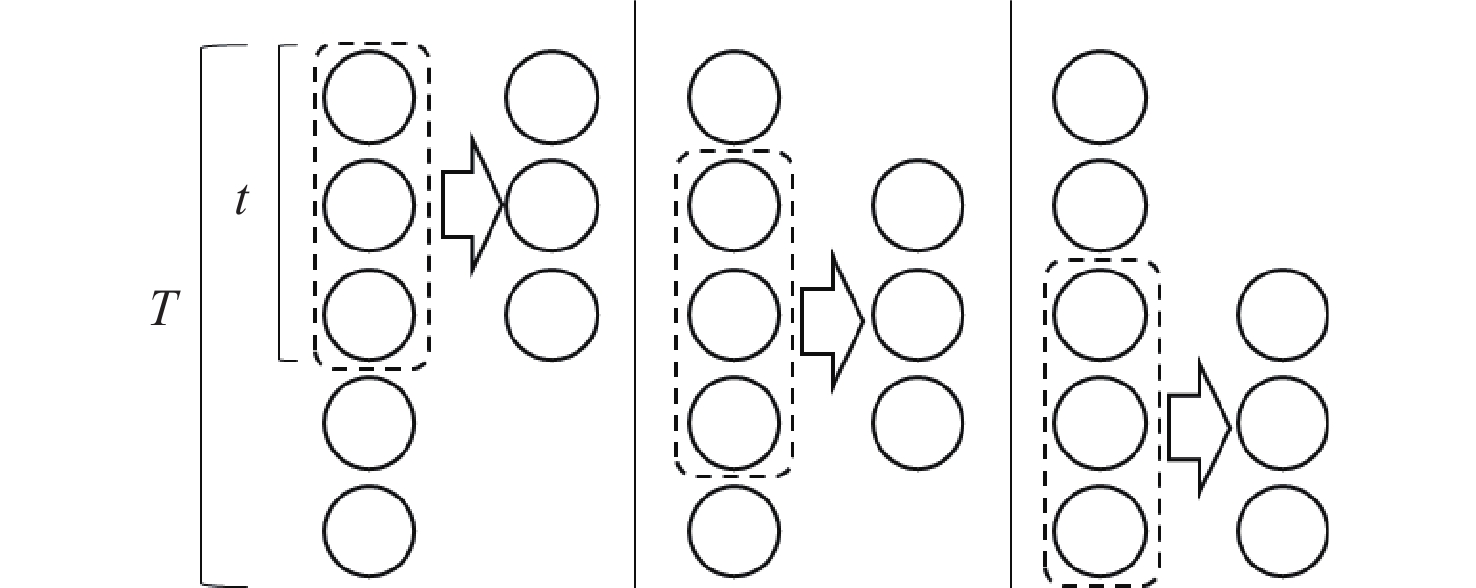

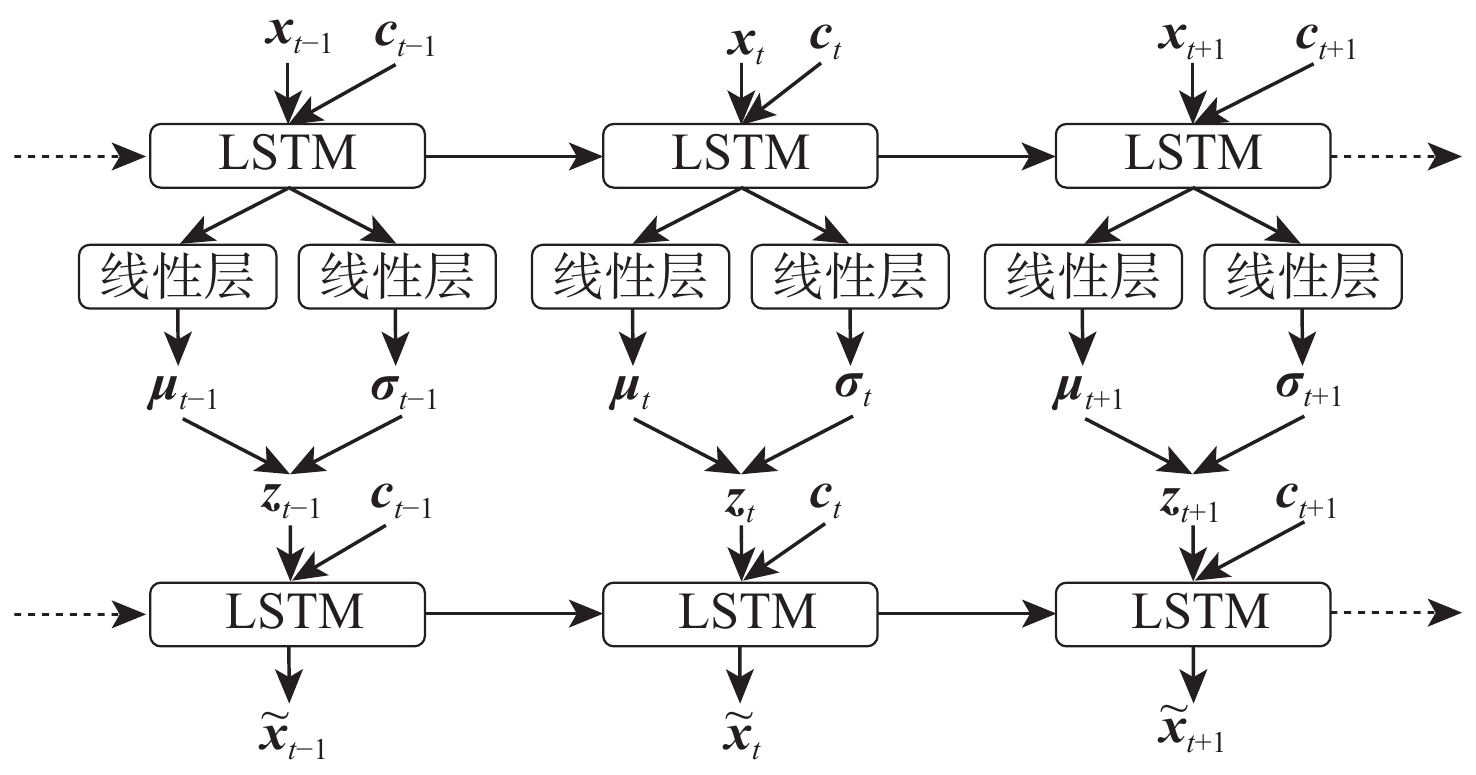

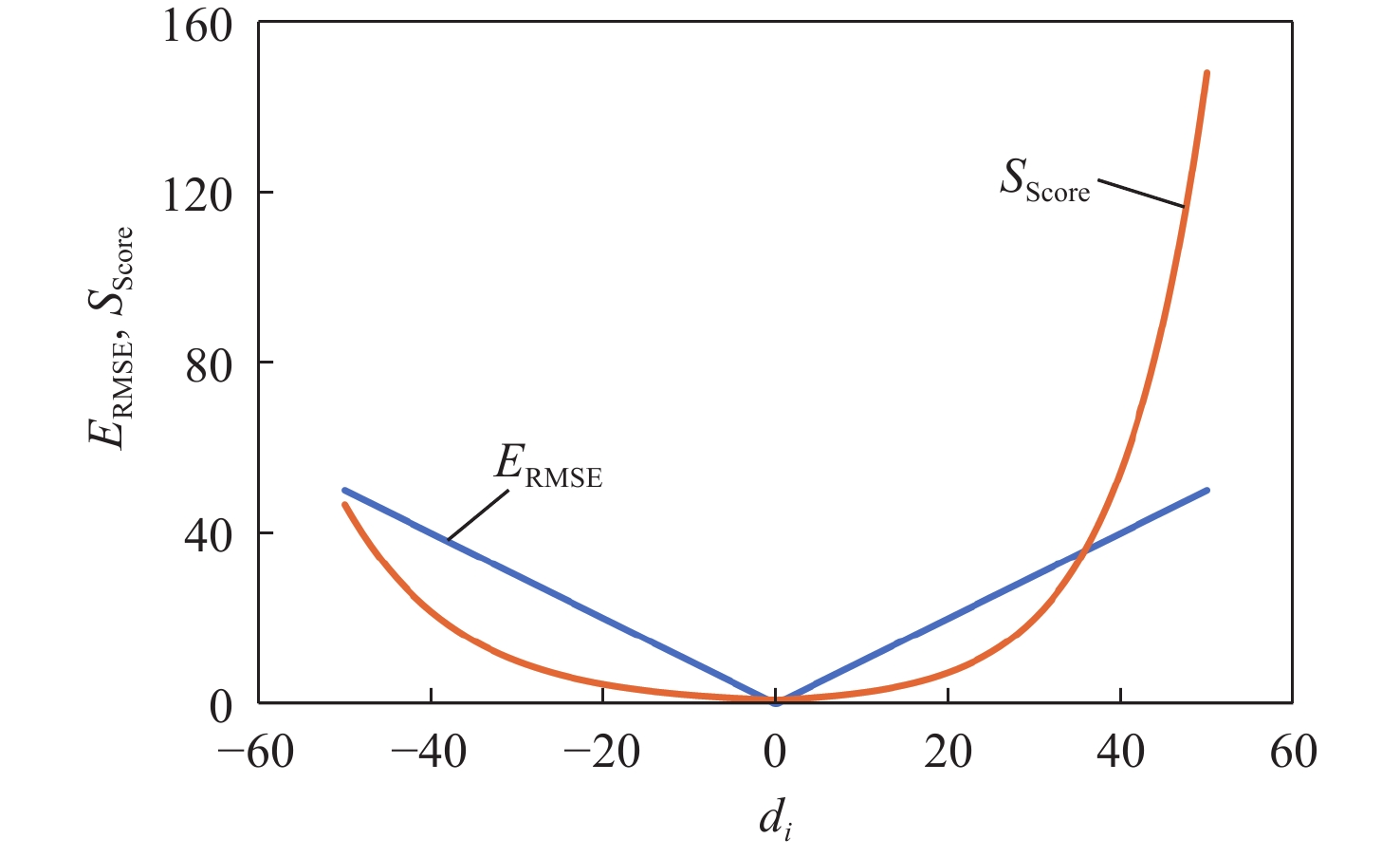

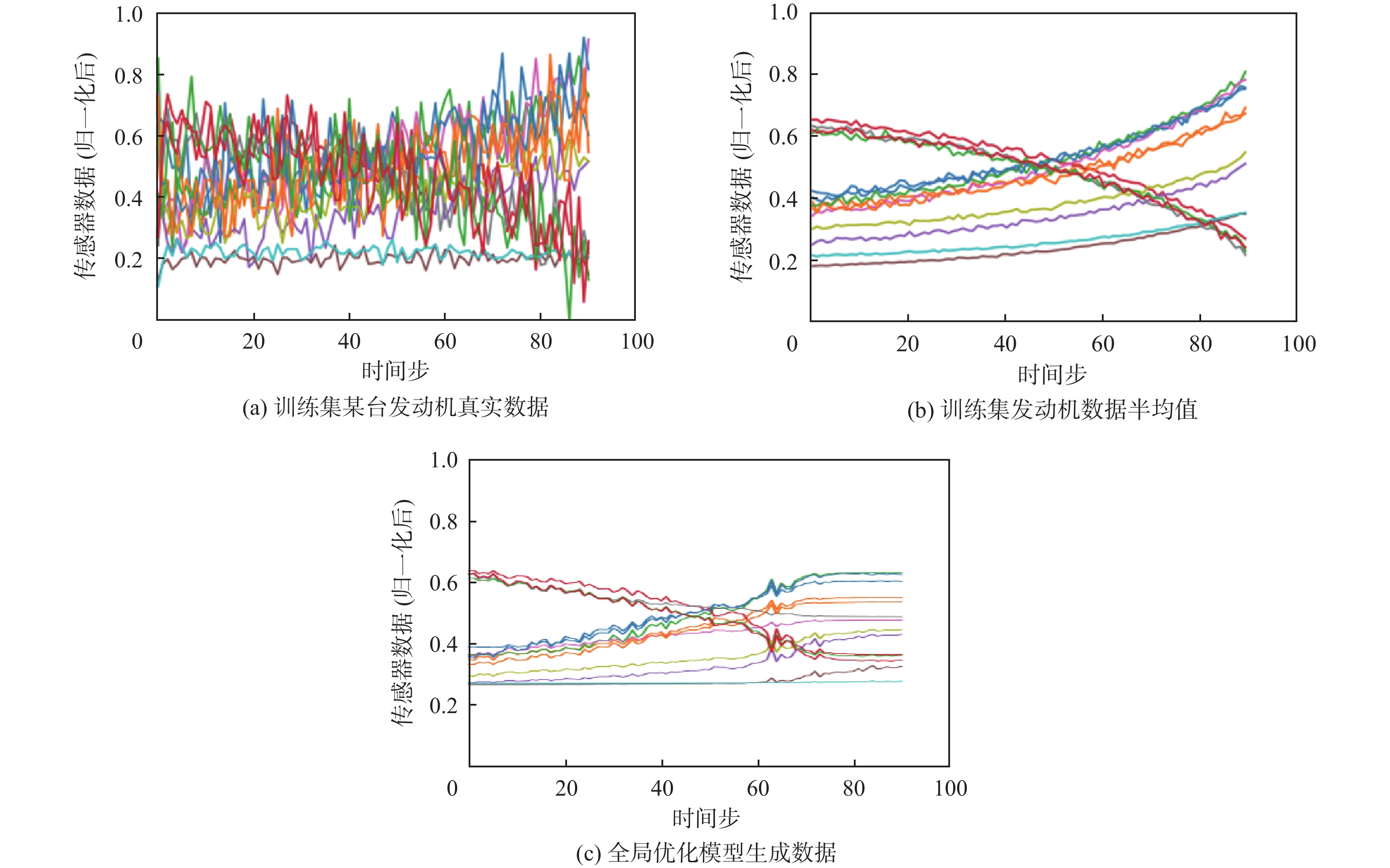

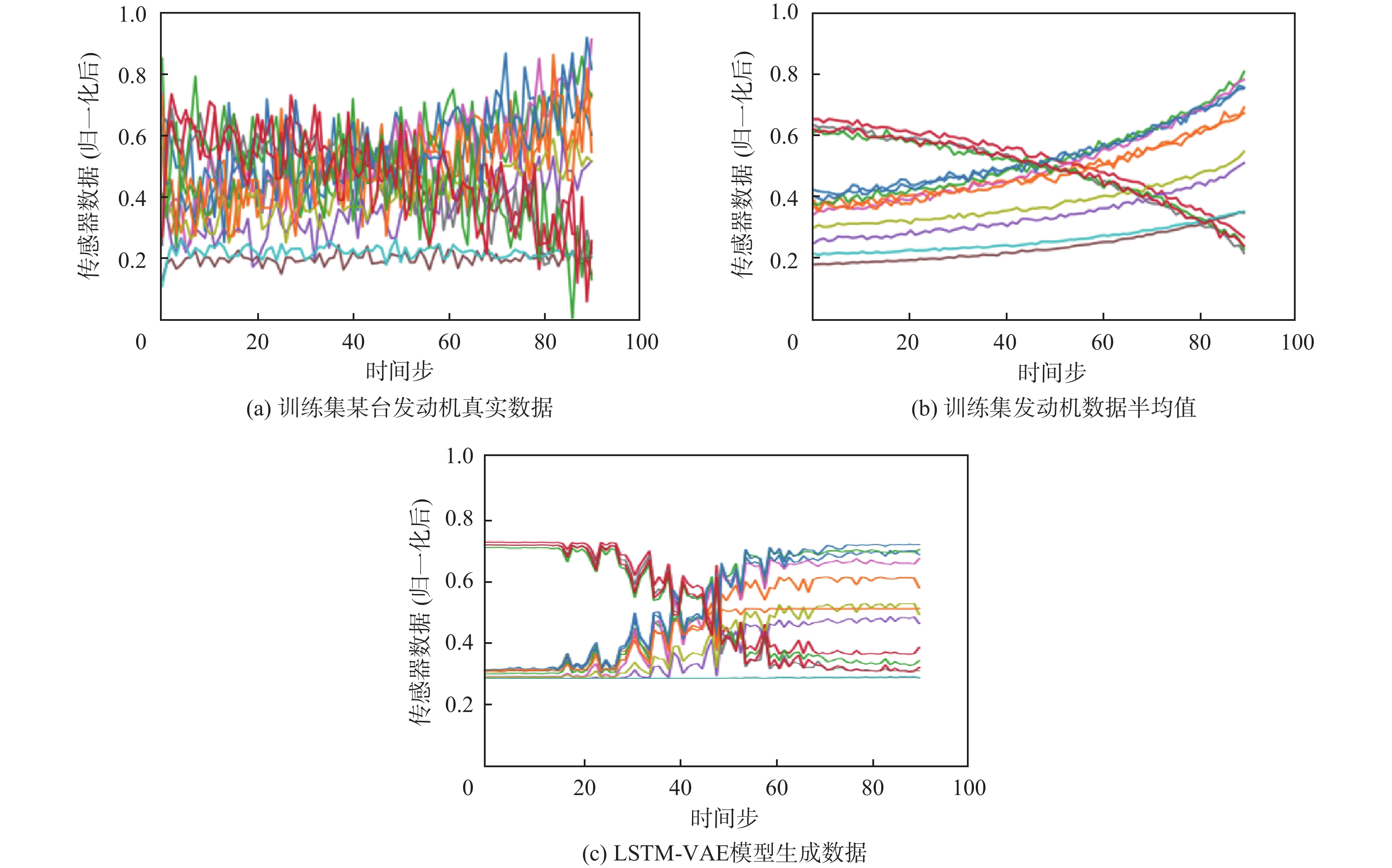

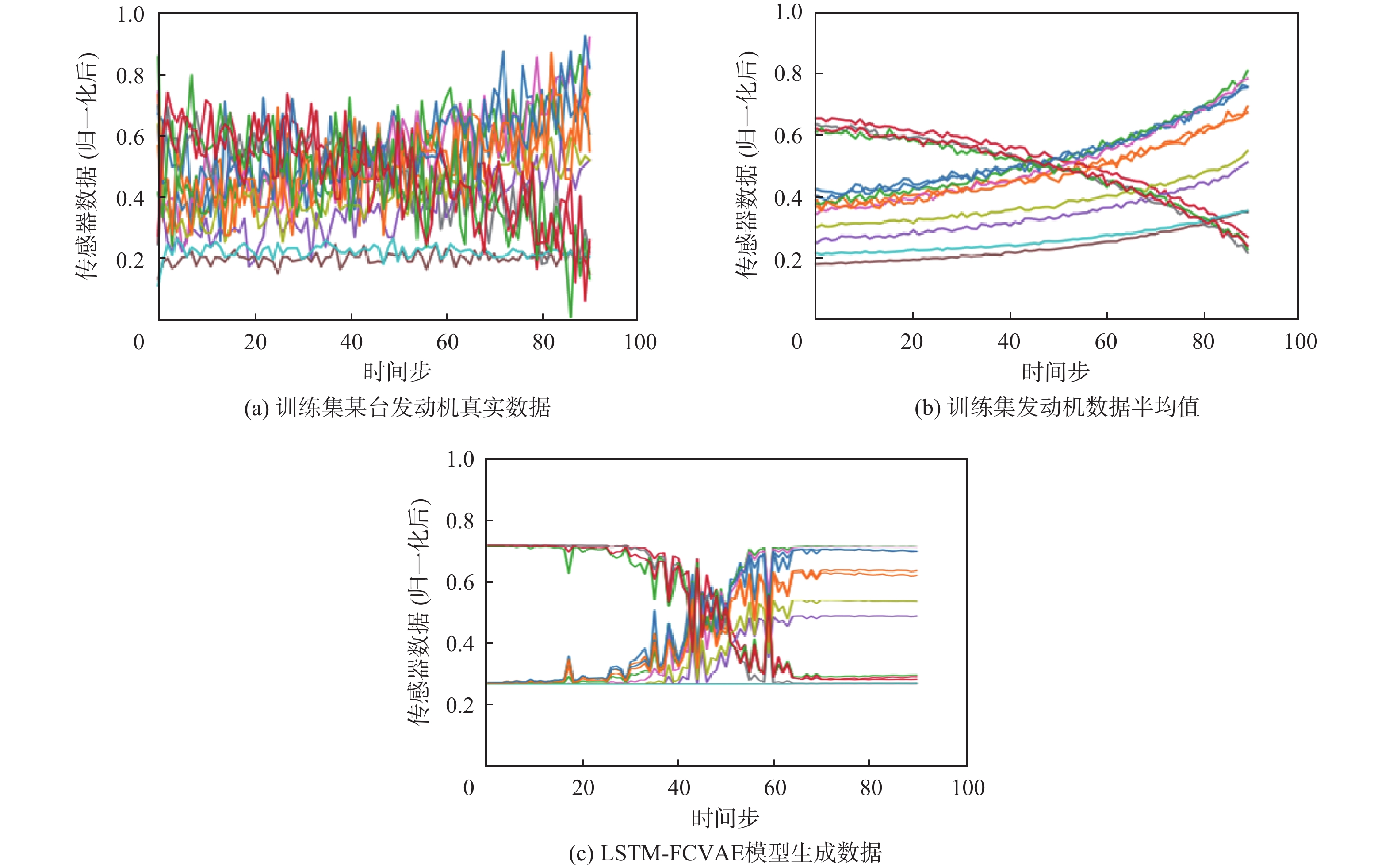

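Data-driven remaining useful life (RUL) prediction methods do not depend on complex physical models. They predict equipment RUL directly from historical operating data and current monitoring data, which is important for planning sensible maintenance strategies and reducing maintenance costs. However, these methods depend on large amounts of historical data. When data are scarce, especially multidimensional degradation data, the model struggles to predict well. To address this problem, a multidimensional degradation data generation method is proposed. The method builds a global optimization model in which a conditional variational autoencoder serves as the generation model, extracting features of the multidimensional degradation data and generating similar data to augment the training set of the RUL prediction model, and a long short-term memory network serves as the RUL prediction model. The method updates the parameters of the generation model through the RUL prediction model to improve the generation model, and in turn uses the updated generation model to improve the RUL prediction model when degradation data are insufficient. A case study on aero-engine degradation data compares RUL prediction models trained without and with generated data, and verifies the advantage of the proposed method in addressing insufficient training data for RUL prediction models.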

Abstract: Data-driven remaining useful life (RUL) prediction methods do not rely on complicated physical models. Instead, they predict the RUL of equipment from its historical operating data and current monitoring data, which is important for developing a reasonable maintenance strategy and lowering maintenance costs. However, data-driven RUL prediction relies on a large amount of historical data. When data are insufficient, especially multidimensional degradation data, it is difficult for the model to achieve good prediction results. To solve this problem, this paper proposes a multidimensional degradation data generation method. The method builds a global optimization model that uses a conditional variational autoencoder as the generation model and a long short-term memory network as the RUL prediction model. The generation model extracts features from the multidimensional degradation data and generates similar data to augment the training set of the RUL prediction model. The RUL prediction model is used to update the parameters of the generation model, and the updated generation model in turn improves the RUL prediction model when degradation data are insufficient. The method is validated on an aero-engine degradation dataset. Comparing the RUL prediction model trained with and without generated data demonstrates the effectiveness of the method for RUL prediction with insufficient data.


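Table 1. CMAPSS dataset

| Dataset | Fault modes | Operating conditions | Training set size | Test set size |
| --- | --- | --- | --- | --- |
| FD001 | 1 | 1 | 100 | 100 |
| FD002 | 1 | 6 | 260 | 259 |
| FD003 | 2 | 1 | 100 | 100 |
| FD004 | 2 | 6 | 248 | 249 |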

Table 2. Training and validation sets

| Engine ID range | Training set engine IDs | Validation set engine ID |
| --- | --- | --- |
| 1~10 | 1, 3, 7, 8 | 9 |
| 11~20 | 13, 14, 16, 18, 20 | 19 |
| 21~30 | 23, 25, 26 | 28 |
| 31~40 | 33, 34, 36, 37, 39 | 38 |
| 41~50 | 42, 43, 44, 46, 47, 50 | 45 |
| 51~60 | 51 | 54 |
| 61~70 | 60, 62, 63, 66 | 69 |
| 71~80 | 71, 73, 74, 76, 79 | 77 |
| 81~90 | 82, 83, 86, 88, 90 | 87 |
| 91~100 | 93, 95 | 100 |
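The split in Table 2 can be written down as plain data, which makes it easy to check how many engines end up in each subset. The sketch below only transcribes the IDs from the table. The helper `split_by_engine` and the `(engine_id, payload)` row layout are hypothetical and used for illustration only.

```python
# Engine IDs transcribed from Table 2.
TRAIN_IDS = [
    1, 3, 7, 8,
    13, 14, 16, 18, 20,
    23, 25, 26,
    33, 34, 36, 37, 39,
    42, 43, 44, 46, 47, 50,
    51,
    60, 62, 63, 66,
    71, 73, 74, 76, 79,
    82, 83, 86, 88, 90,
    93, 95,
]
VAL_IDS = [9, 19, 28, 38, 45, 54, 69, 77, 87, 100]


def split_by_engine(rows, train_ids=TRAIN_IDS, val_ids=VAL_IDS):
    """Split rows of the form (engine_id, payload) into training and validation lists.

    Rows whose engine ID appears in neither list are dropped.
    """
    train_set, val_set = set(train_ids), set(val_ids)
    train = [row for row in rows if row[0] in train_set]
    val = [row for row in rows if row[0] in val_set]
    return train, val


# The reduced training set uses 40 engines and the validation set 10.
print(len(TRAIN_IDS), len(VAL_IDS))  # 40 10
```

With this split, half of the 100 engines are left unused, which is consistent with the "partial dataset" setting reported in Table 7.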

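Table 3. Structure of RUL prediction model

| Layer | Hyperparameters |
| --- | --- |
| LSTM layer 1 | Input dimension 14, 128 units |
| LSTM layer 2 | Input dimension 128, 64 units |
| LSTM layer 3 | Input dimension 64, 32 units |
| Fully connected layer | Input dimension 32, 1 unit, activation function σ(*) |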
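To get a feel for the size of the network in Table 3, the trainable parameters can be counted from the layer dimensions alone. The sketch below assumes the textbook LSTM cell with four gates and a single bias vector per gate. This section does not state parameter counts, and frameworks that store two bias vectors per gate (PyTorch, for example) will report slightly larger numbers.

```python
def lstm_params(input_dim, hidden_dim):
    """Parameters of one LSTM layer: 4 gates, each with input weights,
    recurrent weights and one bias vector (assumed textbook formulation)."""
    return 4 * (input_dim * hidden_dim + hidden_dim * hidden_dim + hidden_dim)


def dense_params(input_dim, output_dim):
    """Parameters of a fully connected layer: weights plus bias."""
    return input_dim * output_dim + output_dim


# (input dimension, number of units) for the three LSTM layers in Table 3.
LSTM_LAYERS = [(14, 128), (128, 64), (64, 32)]

counts = [lstm_params(i, h) for i, h in LSTM_LAYERS]
counts.append(dense_params(32, 1))  # final fully connected layer
total = sum(counts)

print(counts)  # [73216, 49408, 12416, 33]
print(total)   # 135073
```

Under these assumptions the first LSTM layer accounts for more than half of the parameters.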

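Table 4. Structure of LSTM-VAE

| Network | Layer | Hyperparameters |
| --- | --- | --- |
| Encoder | LSTM layer 1 | Input dimension 14, 32 units, dropout 0.5 |
| Encoder | LSTM layer 2 | Input dimension 32, 16 units, dropout 0.5 |
| Encoder | Fully connected layer 1 | Input dimension 16, 2 units |
| Encoder | Fully connected layer 2 | Input dimension 16, 2 units |
| Decoder | LSTM layer 1 | Input dimension 2, 16 units, dropout 0.5 |
| Decoder | LSTM layer 2 | Input dimension 16, 32 units, dropout 0.5 |
| Decoder | LSTM layer 3 | Input dimension 32, 14 units, dropout 0.5 |
| Decoder | Activation function | σ(*) |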
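The two 2-unit fully connected layers at the end of the encoder in Table 4 fit the usual variational autoencoder pattern, in which one head outputs the mean and the other the log-variance of a 2-dimensional latent variable. That reading is an assumption, since this section does not say what each head represents. Under it, the sampling step and the KL regularizer can be sketched in plain Python as follows (function names are illustrative).

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), element-wise.

    Writing the sample this way keeps z a differentiable function of
    mu and log_var when used inside an autodiff framework.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, diag(exp(log_var))) and N(0, I)."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


# Example with a 2-dimensional latent, matching the 2-unit heads in Table 4.
mu, log_var = [1.0, 0.0], [0.0, 0.0]
z = reparameterize(mu, log_var, rng=random.Random(0))
print(len(z))                              # 2
print(kl_to_standard_normal(mu, log_var))  # 0.5
```

The KL term is zero only when the encoder outputs a standard normal, so it pulls the latent codes toward the prior that the decoder later samples from when generating new degradation data.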

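Table 5. Structure of LSTM-FCVAE

| Network | Layer | Hyperparameters |
| --- | --- | --- |
| Encoder | LSTM layer 1 | Input dimension 14, 32 units, dropout 0.5 |
| Encoder | LSTM layer 2 | Input dimension 32, 16 units, dropout 0.5 |
| Encoder | Fully connected layer 1 | Input dimension 16, 2 units |
| Encoder | Fully connected layer 2 | Input dimension 16, 2 units |
| Encoder | Fully connected layer 3 | Input dimension 16, 2 units |
| Decoder | LSTM layer 1 | Input dimension 2, 16 units, dropout 0.5 |
| Decoder | LSTM layer 2 | Input dimension 16, 32 units, dropout 0.5 |
| Decoder | LSTM layer 3 | Input dimension 32, 14 units, dropout 0.5 |
| Decoder | Activation function | σ(*) |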

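Table 6. Structure of global optimization model

| Network | Layer | Hyperparameters |
| --- | --- | --- |
| Encoder | LSTM layer 1 | Input dimension 14, 32 units, dropout 0.5 |
| Encoder | LSTM layer 2 | Input dimension 32, 16 units, dropout 0.5 |
| Encoder | Fully connected layer 1 | Input dimension 16, 2 units |
| Encoder | Fully connected layer 2 | Input dimension 16, 2 units |
| Decoder | LSTM layer 1 | Input dimension 2, 16 units, dropout 0.5 |
| Decoder | LSTM layer 2 | Input dimension 16, 32 units, dropout 0.5 |
| Decoder | LSTM layer 3 | Input dimension 32, 14 units, dropout 0.5 |
| Decoder | Activation function | σ(*) |
| RUL prediction model | | Same structure as the RUL prediction model in Table 3 |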
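The abstract states that the RUL prediction model is used to update the parameters of the generation model. One plausible way to express such a coupling is a single objective that adds the prediction error on generated data to the usual conditional VAE loss. The form below is an illustrative assumption, not the loss given by the authors. The symbols $\phi$, $\theta$, $\psi$ (encoder, decoder, and predictor parameters), the condition $c$, and the weights $\beta$ and $\lambda$ are all introduced here for illustration.

$$
\mathcal{L}(\phi,\theta,\psi) =
\underbrace{\mathbb{E}_{q_\phi(z \mid x, c)}\big[\lVert x - \hat{x}_\theta(z, c) \rVert^2\big]}_{\text{reconstruction}}
+ \beta \, \underbrace{D_{\mathrm{KL}}\big(q_\phi(z \mid x, c) \,\Vert\, \mathcal{N}(0, I)\big)}_{\text{latent regularization}}
+ \lambda \, \underbrace{\big(y - f_\psi(\hat{x}_\theta(z, c))\big)^2}_{\text{RUL prediction error}}
$$

Under this reading, gradients of the third term flow back through the decoder, so the generator is pushed toward samples that are useful to the predictor $f_\psi$ and not only toward samples that reconstruct $x$ well.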

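Table 7. Prediction results of different methods

| Training approach | RMSE | Score |
| --- | --- | --- |
| Trained on full dataset | 6.816 | 62.124 |
| Trained on partial dataset | 7.439 | 79.802 |
| LSTM-VAE generated data | 7.648 | 76.626 |
| LSTM-FCVAE generated data | 7.256 | 74.793 |
| Global optimization model | 7.159 | 71.215 |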
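Table 7 reports RMSE and a Score metric without defining them in this section. The sketch below assumes that Score is the asymmetric scoring function commonly used with the C-MAPSS data, which penalizes late predictions (predicted RUL above the true RUL) more heavily than early ones. The constants 13 and 10 are the conventional values and are part of that assumption.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between true and predicted RUL values."""
    squared = [(p - t) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def asymmetric_score(y_true, y_pred, a_early=13.0, a_late=10.0):
    """Sum of exponential penalties on d = predicted - true.

    Early predictions (d < 0) use the gentler constant a_early,
    late predictions (d >= 0) the harsher constant a_late.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        d = p - t
        if d < 0:
            total += math.exp(-d / a_early) - 1.0
        else:
            total += math.exp(d / a_late) - 1.0
    return total


# A 10-cycle late prediction costs more than a 10-cycle early one.
late = asymmetric_score([100], [110])
early = asymmetric_score([100], [90])
print(late > early)  # True
```

Because the score is a sum of exponentials, a few large late errors can dominate it, which is why RMSE and Score can rank methods differently.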

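Table 8. Training time of different methods

| Training approach | Model training time/s |
| --- | --- |
| LSTM-VAE generated data | 355.121 |
| LSTM-FCVAE generated data | 444.275 |
| Global optimization model | 424.306 |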
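The accuracy and cost figures in Tables 7 and 8 are easier to compare as relative changes. The sketch below uses only the numbers from the two tables. The helper `relative_change` and the choice of baselines (the partial-dataset model for accuracy, LSTM-VAE for training time) are illustrative.

```python
def relative_change(value, baseline):
    """Percentage change of value with respect to baseline."""
    return (value - baseline) / baseline * 100.0


# (RMSE, Score) from Table 7.
RESULTS = {
    "partial dataset": (7.439, 79.802),
    "LSTM-VAE": (7.648, 76.626),
    "LSTM-FCVAE": (7.256, 74.793),
    "global optimization": (7.159, 71.215),
}
# Training time in seconds from Table 8.
TIMES = {
    "LSTM-VAE": 355.121,
    "LSTM-FCVAE": 444.275,
    "global optimization": 424.306,
}

base_rmse, base_score = RESULTS["partial dataset"]
for name in TIMES:
    method_rmse, method_score = RESULTS[name]
    print(
        f"{name}: "
        f"RMSE {relative_change(method_rmse, base_rmse):+.2f}%, "
        f"Score {relative_change(method_score, base_score):+.2f}%, "
        f"time vs LSTM-VAE {relative_change(TIMES[name], TIMES['LSTM-VAE']):+.2f}%"
    )
```

For the global optimization model this gives roughly a 3.8% lower RMSE and a 10.8% lower Score than training on the partial dataset alone, at about 19.5% more training time than the LSTM-VAE.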
