Person re-identification based on random occlusion and multi-granularity feature fusion



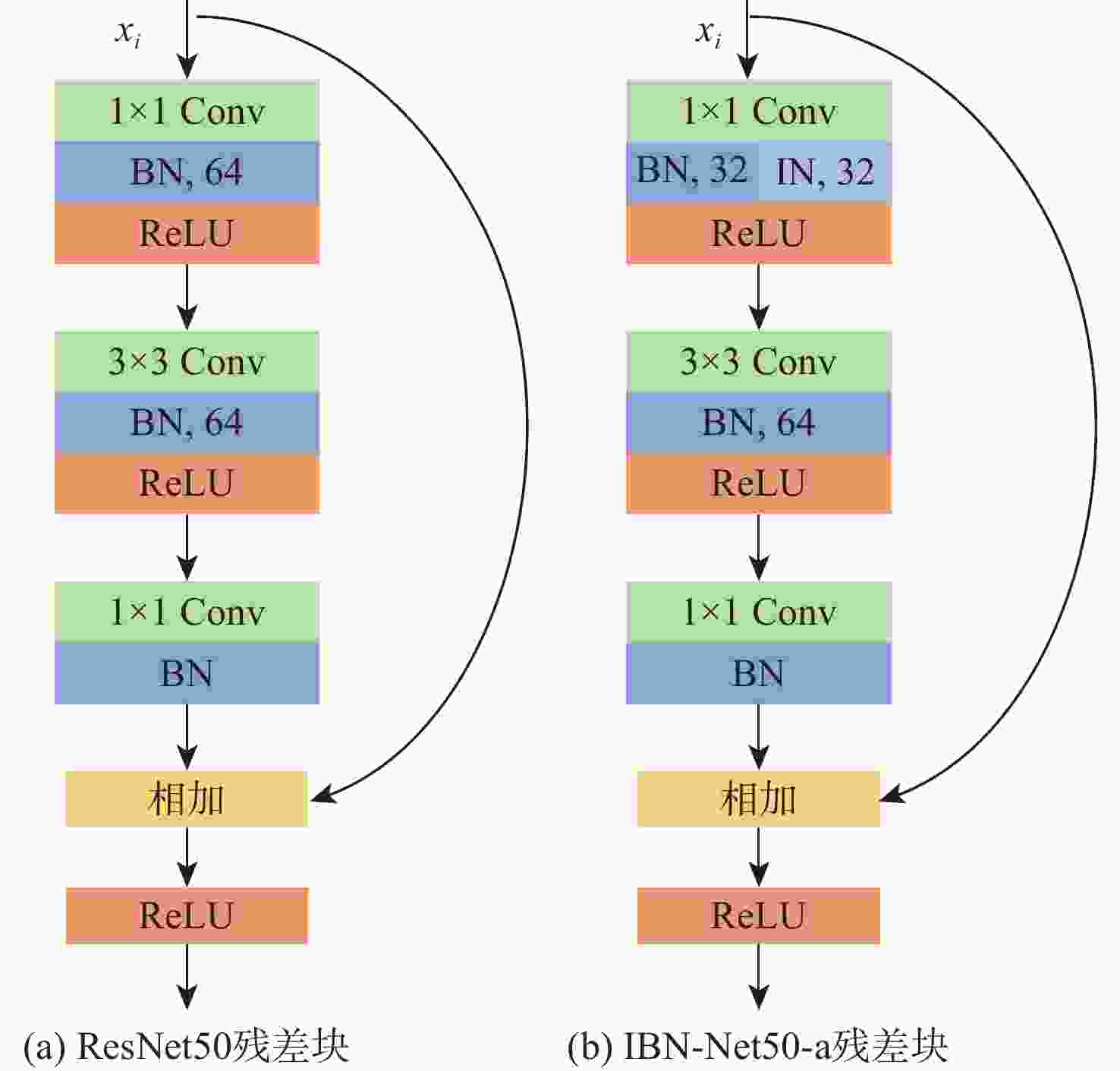

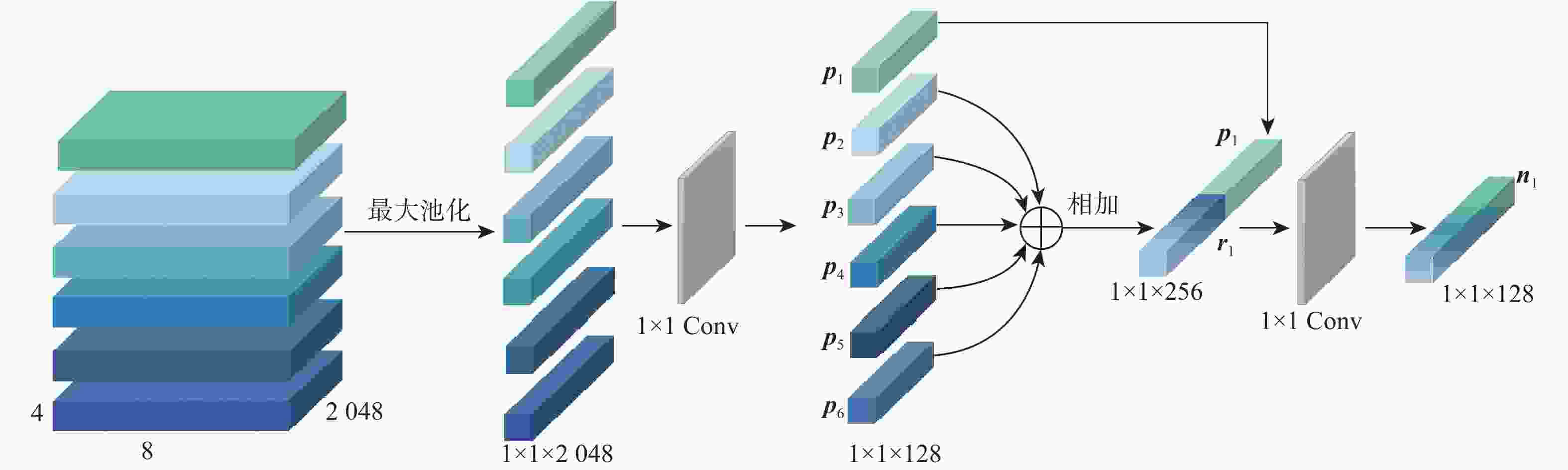

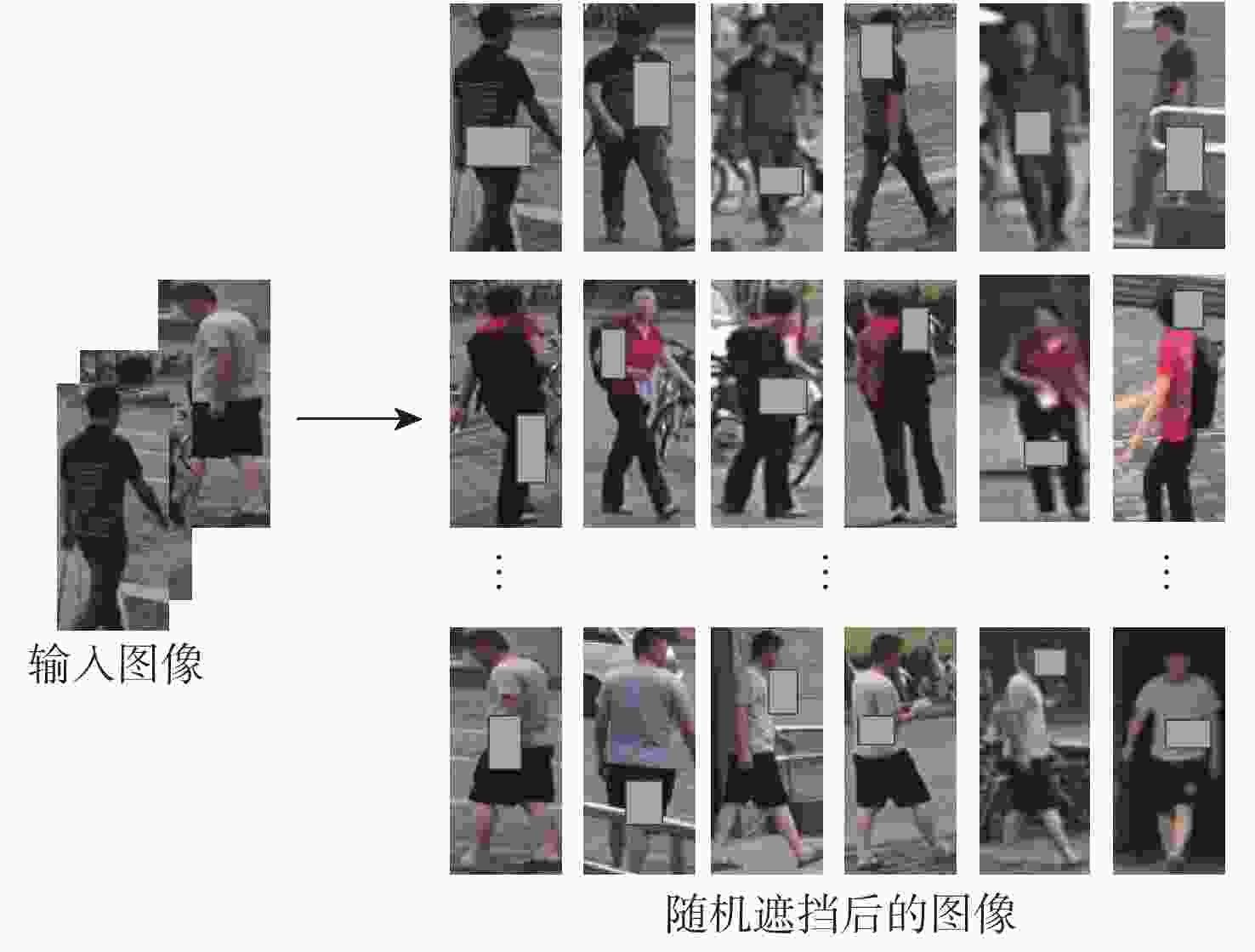

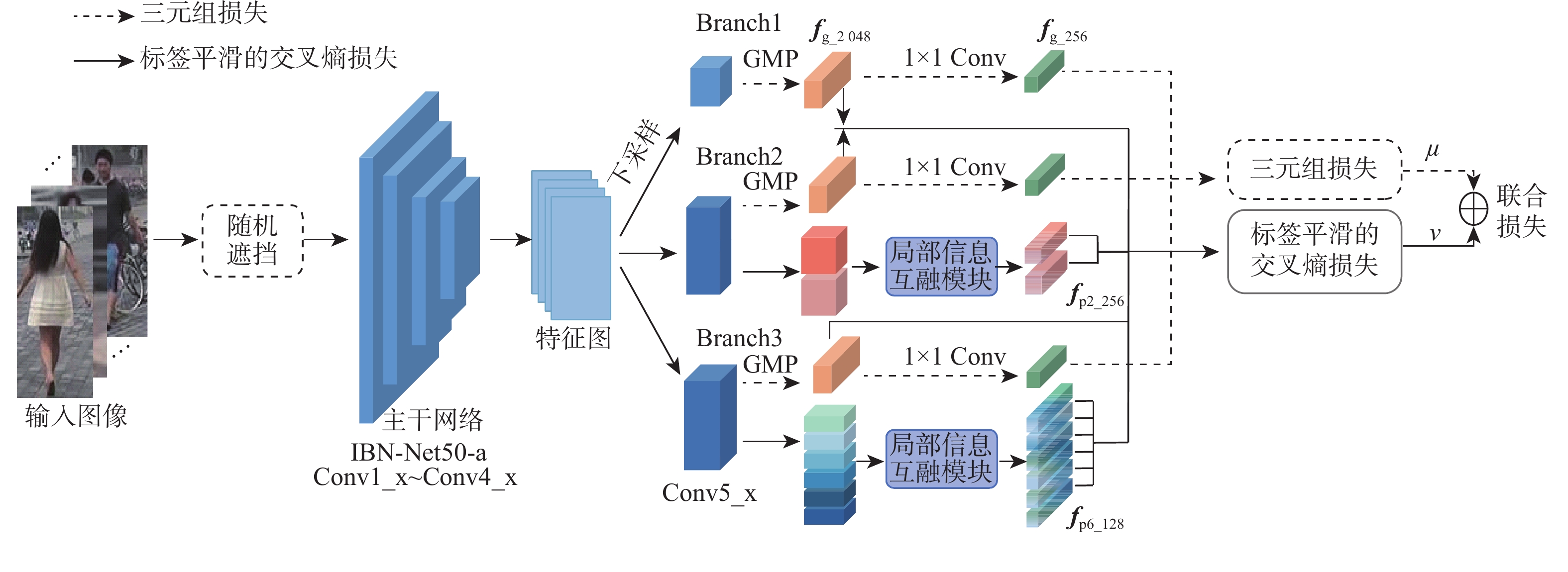


Abstract: To address occlusion and the limited hierarchy of discriminative pedestrian features in person re-identification, this paper proposes a network model that combines random occlusion with multi-granularity feature fusion, built on the IBN-Net50-a network. First, to improve robustness to occlusion, random occlusion is applied to the input images to simulate pedestrians being occluded in real scenes. Second, the network is divided into a global branch, a local coarse-grained mutual-fusion branch and a local fine-grained mutual-fusion branch, which extract global salient features while supplementing them with local multi-granularity deep features, thereby enriching the hierarchy of discriminative pedestrian features. Third, the correlation among the local multi-granularity features is further mined to fuse them more deeply. Finally, the network is trained jointly with a label-smoothing cross-entropy loss and a triplet loss. The proposed method is compared with state-of-the-art person re-identification methods on three standard public datasets and one occlusion dataset. Its Rank-1 accuracy reaches 95.2% on Market1501, 89.2% on DukeMTMC-reID and 80.1% on CUHK03 (labeled setting); on Occluded-Duke, its Rank-1 and mAP reach 60.6% and 51.6%, respectively. These results are better than those of the compared methods on most metrics, which confirms the effectiveness of the proposed method.
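The random occlusion step can be illustrated with a minimal sketch in the style of Random Erasing (Zhong et al.), which overwrites one rectangle at a random position with a constant colour or noise. This is an assumption: the abstract does not specify the occluder's shape, size range or fill, so the function `random_occlude` and its parameters `p`, `area_range`, `aspect_range` and `fill` are hypothetical. Images are plain H x W x C nested lists to keep the sketch dependency-free.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Image = List[List[List[float]]]  # H x W x C nested lists, values in [0, 1]


def random_occlude(
    img: Image,
    p: float = 0.5,
    area_range: Tuple[float, float] = (0.02, 0.4),
    aspect_range: Tuple[float, float] = (0.3, 3.3),
    fill: Optional[Sequence[float]] = None,
    rng: Optional[random.Random] = None,
    max_attempts: int = 100,
) -> Image:
    """Return a copy of `img` with one random rectangle overwritten (hypothetical occluder).

    With probability 1 - p the copy is returned unchanged.
    """
    rng = rng if rng is not None else random.Random()
    h, w, c = len(img), len(img[0]), len(img[0][0])
    out = [[list(px) for px in row] for row in img]  # deep copy; input is never modified
    if rng.random() >= p:
        return out
    for _ in range(max_attempts):
        # Sample the occluder's area (as a fraction of the image) and its aspect ratio.
        area = rng.uniform(*area_range) * h * w
        aspect = rng.uniform(*aspect_range)
        eh = int(round(math.sqrt(area * aspect)))
        ew = int(round(math.sqrt(area / aspect)))
        if 0 < eh < h and 0 < ew < w:
            top = rng.randint(0, h - eh)
            left = rng.randint(0, w - ew)
            for y in range(top, top + eh):
                for x in range(left, left + ew):
                    # Constant colour if given, otherwise per-pixel uniform noise.
                    out[y][x] = list(fill) if fill is not None else [rng.random() for _ in range(c)]
            return out
    return out  # no rectangle fitted within max_attempts; image left unoccluded
```

In a training pipeline such a transform would typically be applied to each sampled training image after resizing, so the network regularly sees partially hidden pedestrians, while evaluation images are left untouched.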


Keywords:

- person re-identification
- global features
- random occlusion
- local feature fusion
- joint loss



Table 1. Comparison of results of different methods on Market1501 and DukeMTMC-reID datasets

| Method | Rank-1, Market1501 (%) | Rank-1, DukeMTMC-reID (%) | mAP, Market1501 (%) | mAP, DukeMTMC-reID (%) |
| --- | --- | --- | --- | --- |
| PCB+RPP[12] | 93.8 | 83.3 | 81.6 | 69.2 |
| Mancs[14] | 93.1 | 84.9 | 82.3 | 71.8 |
| VPM[15] | 93.0 | 83.6 | 80.8 | 72.6 |
| SVDNet[9] | 82.3 | 76.7 | 62.1 | 56.8 |
| MHN-6+IDE[28] | 93.6 | 87.5 | 83.6 | 75.2 |
| SGGNN[29] | 92.3 | 81.1 | 82.8 | 68.2 |
| MGN[17] | 95.7 | 88.7 | 86.9 | 78.4 |
| HPM[16] | 94.2 | 86.6 | 82.7 | 74.3 |
| DG-Net[30] | 94.8 | 86.6 | 86.0 | 74.8 |
| CASN+IDE[31] | 92.0 | 84.5 | 78.0 | 67.0 |
| SNR[32] | 94.4 | 84.4 | 84.7 | 72.9 |
| Top-DB-Net[33] | 94.9 | 87.5 | 85.8 | 73.5 |
| Self-supervised person[34] | 94.7 | 89.0 | 86.7 | 78.2 |
| FPO+GBS[35] | 93.4 | - | 82.1 | - |
| DCNN[36] | 90.2 | 81.0 | 82.7 | 78.0 |
| PCB-U+RPP[37] | 93.8 | 84.5 | 81.6 | 71.5 |
| Proposed method | 95.2 | 89.2 | 87.3 | 79.2 |
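Tables 1 and 2 report Rank-1 accuracy and mean average precision (mAP). As a rough sketch of how these two numbers are usually obtained from a query-by-gallery distance matrix, the hypothetical helper `rank1_and_map` below ranks the gallery for each query, drops gallery entries that share both identity and camera with the query (the usual Market1501/DukeMTMC-reID convention), and averages the per-query results. Junk-image handling and the CUHK03-specific protocol are omitted, so this is not the official evaluation code.

```python
from typing import List, Sequence, Tuple


def rank1_and_map(
    dist: List[List[float]],
    q_ids: Sequence[int],
    g_ids: Sequence[int],
    q_cams: Sequence[int],
    g_cams: Sequence[int],
) -> Tuple[float, float]:
    """Return (Rank-1, mAP) as fractions in [0, 1] from a query x gallery distance matrix."""
    rank1_hits, aps = 0, []
    for i, row in enumerate(dist):
        # Sort gallery indices by increasing distance to query i.
        order = sorted(range(len(row)), key=lambda j: row[j])
        # Remove gallery images with the same identity AND the same camera as the query.
        order = [j for j in order if not (g_ids[j] == q_ids[i] and g_cams[j] == q_cams[i])]
        matches = [g_ids[j] == q_ids[i] for j in order]
        n_pos = sum(matches)
        if n_pos == 0:
            continue  # no valid true match in the gallery: query is skipped
        rank1_hits += int(matches[0])
        # Average precision = mean of precision@k over the ranks k of the true matches.
        hits, precision_sum = 0, 0.0
        for k, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / k
        aps.append(precision_sum / n_pos)
    if not aps:
        raise ValueError("no query has a valid true match")
    return rank1_hits / len(aps), sum(aps) / len(aps)
```

The returned fractions are multiplied by 100 to obtain percentages in the form used in the tables.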

Table 2. Comparison of results of different methods on CUHK03 dataset

| Method | Rank-1, CUHK03 Detected (%) | Rank-1, CUHK03 Labeled (%) | mAP, CUHK03 Detected (%) | mAP, CUHK03 Labeled (%) |
| --- | --- | --- | --- | --- |
| Mancs[14] | 65.5 | 69.0 | 60.5 | 63.9 |
| HA-CNN[13] | 41.7 | 44.4 | 38.6 | 41.0 |
| PCB+RPP[12] | 62.8 | - | 56.7 | - |
| MGN[17] | 66.8 | 68.0 | 66.0 | 67.4 |
| HPM[16] | 63.9 | - | 57.5 | - |
| CASN+IDE[31] | 57.4 | 58.9 | 50.7 | 52.2 |
| MHN-6+IDE[28] | 67.0 | 69.7 | 61.2 | 65.1 |
| Auto-ReID[38] | 73.3 | 77.9 | 69.3 | 73.0 |
| Top-DB-Net[33] | 77.3 | 79.4 | 73.2 | 75.4 |
| Self-supervised person[34] | 70.4 | 72.7 | 65.8 | 67.8 |
| FPO+GBS[35] | 68.2 | 71.7 | 62.0 | 66.7 |
| DCNN[36] | 60.5 | 67.8 | 64.8 | 72.7 |
| PCB-U+RPP[37] | 62.8 | - | 56.7 | - |
| Proposed method | 78.9 | 80.1 | 75.7 | 78.7 |

Table 3. Comparison of results of different methods on Occluded-Duke dataset


Table 4. Ablation experiments of different branches and modules

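| Branch | Rank-1, Market1501 (%) | Rank-1, DukeMTMC-reID (%) | mAP, Market1501 (%) | mAP, DukeMTMC-reID (%) |
| --- | --- | --- | --- | --- |
| Branch1 | 88.4 | 80.5 | 71.2 | 62.0 |
| Branch2 | 92.8 | 84.6 | 78.1 | 69.9 |
| Branch3 | 91.5 | 86.8 | 78.3 | 72.6 |
| Branch12 | 92.3 | 87.0 | 79.3 | 73.1 |
| Branch123 (Baseline) | 92.2 | 87.2 | 80.4 | 74.6 |
| Branch123 + random occlusion | 94.2 | 88.8 | 85.8 | 78.0 |
| Branch123 + random occlusion + local information mutual fusion | 95.2 | 89.2 | 87.3 | 79.2 |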
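Table 4 ablates the branch structure. As a rough illustration of how a global feature and multi-granularity local features can be pooled from one backbone feature map, the sketch below follows the horizontal-stripe pooling used in PCB [12] and MGN [17]. The stripe counts (2 for the coarse branch, 3 for the fine branch), the use of average pooling, and the helper names `stripe_pool` and `multi_granularity_descriptor` are assumptions; the plain concatenation at the end is a stand-in, not the paper's local mutual-fusion module.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W nested lists


def stripe_pool(fmap: FeatureMap, n_stripes: int) -> List[List[float]]:
    """Average-pool a C x H x W map over n horizontal stripes (top to bottom).

    Returns n vectors of length C, one per body stripe.
    """
    c, h = len(fmap), len(fmap[0])
    if not 1 <= n_stripes <= h:
        raise ValueError("need 1 <= n_stripes <= feature-map height")
    bounds = [k * h // n_stripes for k in range(n_stripes + 1)]  # row boundaries
    parts = []
    for s in range(n_stripes):
        top, bottom = bounds[s], bounds[s + 1]
        vec = []
        for ch in range(c):
            vals = [v for row in fmap[ch][top:bottom] for v in row]
            vec.append(sum(vals) / len(vals))
        parts.append(vec)
    return parts


def multi_granularity_descriptor(fmap: FeatureMap, coarse: int = 2, fine: int = 3) -> List[float]:
    """Concatenate a global vector with coarse- and fine-grained stripe vectors."""
    global_feat = stripe_pool(fmap, 1)[0]  # one stripe covering the whole map = global pooling
    descriptor = list(global_feat)
    for part in stripe_pool(fmap, coarse) + stripe_pool(fmap, fine):
        descriptor.extend(part)  # plain concatenation; stand-in for the paper's fusion module
    return descriptor
```

With 2 coarse and 3 fine stripes the descriptor holds 1 + 2 + 3 = 6 vectors of C values each, so each coarse stripe covers half of the body height and each fine stripe one third.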

Table 5. Performance comparison of different backbone networks

| Backbone | Rank-1, Market1501 (%) | Rank-1, DukeMTMC-reID (%) | mAP, Market1501 (%) | mAP, DukeMTMC-reID (%) |
| --- | --- | --- | --- | --- |
| ResNet50 | 93.2 | 87.1 | 84.9 | 77.4 |
| IBN-Net50-a | 95.2 | 89.2 | 87.3 | 79.2 |
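Table 5 compares ResNet50 with IBN-Net50-a [18]. In the IBN-Net paper, the "a" variant replaces the first normalization layer in the residual blocks of the shallower stages with an IBN layer that applies InstanceNorm [19] to half of the channels and BatchNorm to the remaining half. The sketch below shows only that normalization split, with training-mode statistics; the learnable affine parameters and BatchNorm running averages are omitted, and the helper `ibn_a` and its `in_ratio` parameter are hypothetical names.

```python
import math
from typing import List

Batch = List[List[List[float]]]  # N x C x (H*W): spatial dimensions flattened


def _standardize(values: List[float], eps: float) -> List[float]:
    """Subtract the mean and divide by sqrt(variance + eps) (biased variance)."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def ibn_a(x: Batch, in_ratio: float = 0.5, eps: float = 1e-5) -> Batch:
    """IBN layer sketch: InstanceNorm on the first channels, BatchNorm on the rest."""
    n, c = len(x), len(x[0])
    n_in = int(c * in_ratio)  # number of channels that receive InstanceNorm
    out: Batch = [[[] for _ in range(c)] for _ in range(n)]
    for ch in range(c):
        if ch < n_in:
            # InstanceNorm: statistics per sample and per channel, over spatial positions.
            for i in range(n):
                out[i][ch] = _standardize(x[i][ch], eps)
        else:
            # BatchNorm: statistics per channel, pooled over the whole batch.
            hw = len(x[0][ch])
            pooled = [v for i in range(n) for v in x[i][ch]]
            normed = _standardize(pooled, eps)
            for i in range(n):
                out[i][ch] = normed[i * hw:(i + 1) * hw]
    return out
```

Because each instance-normalized channel is standardized per image, rescaling one image's activations (for example by a factor of 10) leaves its IN channels essentially unchanged, which is the appearance invariance IBN-Net relies on, while the BN channels keep batch-level statistics.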

Table 6. Results comparison of different joint loss function coefficients

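| $ \nu $ | $ \mu $ | Rank-1, Market1501 (%) | Rank-1, DukeMTMC-reID (%) | mAP, Market1501 (%) | mAP, DukeMTMC-reID (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | 0.5 | 94.2 | 89.3 | 86.5 | 79.0 |
| 0.5 | 1 | 94.0 | 87.8 | 85.0 | 77.1 |
| 1 | 1 | 94.5 | 88.9 | 87.0 | 78.8 |
| 1 | 2 | 94.3 | 88.6 | 86.6 | 78.7 |
| 2 | 1 | 95.2 | 89.2 | 87.3 | 79.2 |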
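Table 6 varies the coefficients $ \nu $ and $ \mu $ of the joint loss. This section does not state the loss formula, so the sketch below assumes $ L = \nu L_{\mathrm{ls}} + \mu L_{\mathrm{tri}} $, with $ \nu $ weighting a label-smoothing cross-entropy (Szegedy et al. [23]) and $ \mu $ weighting a batch-hard triplet loss (Hermans et al. [22]). That mapping, the smoothing factor `eps = 0.1`, the margin `0.3` and the function names are all assumptions.

```python
import math
from typing import List, Sequence


def label_smoothing_ce(logits: List[List[float]], labels: Sequence[int], eps: float = 0.1) -> float:
    """Mean cross-entropy against smoothed targets: eps/K on every class plus (1 - eps) on the true one."""
    total = 0.0
    for row, y in zip(logits, labels):
        k = len(row)
        m = max(row)
        log_z = m + math.log(sum(math.exp(v - m) for v in row))  # numerically stable log-sum-exp
        log_p = [v - log_z for v in row]
        target = [eps / k + (1.0 - eps if j == y else 0.0) for j in range(k)]
        total -= sum(t * lp for t, lp in zip(target, log_p))
    return total / len(labels)


def batch_hard_triplet(feats: List[List[float]], labels: Sequence[int], margin: float = 0.3) -> float:
    """For each anchor, compare its farthest positive with its closest negative (Euclidean)."""
    def dist(a: List[float], b: List[float]) -> float:
        return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))

    losses = []
    for i, (fi, yi) in enumerate(zip(feats, labels)):
        pos = [dist(fi, fj) for j, (fj, yj) in enumerate(zip(feats, labels)) if yj == yi and j != i]
        neg = [dist(fi, fj) for fj, yj in zip(feats, labels) if yj != yi]
        if pos and neg:
            losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


def joint_loss(logits, feats, labels, nu: float = 2.0, mu: float = 1.0) -> float:
    # Assumed mapping: nu weights label-smoothing CE, mu weights the triplet loss.
    return nu * label_smoothing_ce(logits, labels) + mu * batch_hard_triplet(feats, labels)
```

Under the assumed mapping, the best setting in Table 6 ($ \nu $ = 2, $ \mu $ = 1) gives the classification term twice the weight of the metric-learning term.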

[1] LI J H, CHENG D Q, LIU R H, et al. Unsupervised person re-identification based on measurement axis[J]. IEEE Signal Processing Letters, 2021, 28: 379-383. doi: 10.1109/LSP.2021.3055116

[2] XIE P Y, XU X. Multi-scale joint learning for person re-identification[J]. Journal of Beijing University of Aeronautics and Astronautics, 2021, 47(3): 613-622 (in Chinese).

[3] LIAO S C, HU Y, ZHU X Y, et al. Person re-identification by local maximal occurrence representation and metric learning[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2015: 2197-2206.

[4] ZHAO R, OUYANG W L, WANG X G, et al. Person re-identification by salience matching[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2014: 2528-2535.

[5] ZHAO R, OUYANG W L, WANG X G, et al. Unsupervised salience learning for person re-identification[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2013: 3586-3593.

[6] GE Y X, LI Z W, ZHAO H Y, et al. FD-GAN: Pose-guided feature distilling GAN for robust person re-identification[EB/OL]. (2018-12-12)[2022-02-13]. https://arxiv.org/abs/1810.02936v2.

[7] FAN H H, ZHENG L A, YAN C G, et al. Unsupervised person re-identification: Clustering and fine-tuning[J]. ACM Transactions on Multimedia Computing Communications and Applications, 2018, 14(4): 1-18.

[8] ZHENG L, YANG Y, HAUPTMANN A G. Person re-identification: Past, present and future[EB/OL]. (2016-10-10)[2022-02-27]. https://arxiv.org/abs/1610.02984v1.

[9] SUN Y F, ZHENG L, DENG W J, et al. SVDNet for pedestrian retrieval[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 3820-3828.

[10] SU C, LI J N, ZHANG S L, et al. Pose-driven deep convolutional model for person re-identification[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 3980-3989.

[11] ZHAO H Y, TIAN M Q, SUN S Y, et al. Spindle Net: Person re-identification with human body region guided feature decomposition and fusion[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 907-915.

[12] SUN Y F, ZHENG L, YANG Y, et al. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline)[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018, 11208: 501-518.

[13] LI W, ZHU X T, GONG S G. Harmonious attention network for person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 2285-2294.

[14] WANG C, ZHANG Q, HUANG C, et al. Mancs: A multi-task attentional network with curriculum sampling for person re-identification[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018, 11208: 384-400.

[15] SUN Y F, XU Q, LI Y L, et al. Perceive where to focus: Learning visibility-aware part-level features for partial person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 393-402.

[16] FU Y, WEI Y C, ZHOU Y Q, et al. Horizontal pyramid matching for person re-identification[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2019, 33(1): 8295-8302. doi: 10.1609/aaai.v33i01.33018295

[17] WANG G S, YUAN Y F, CHEN X, et al. Learning discriminative features with multiple granularities for person re-identification[C]//Proceedings of the 26th ACM International Conference on Multimedia. New York: ACM, 2018: 274-282.

[18] PAN X G, LUO P, SHI J P, et al. Two at once: Enhancing learning and generalization capacities via IBN-Net[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018, 11208: 484-500.

[19] ULYANOV D, VEDALDI A, LEMPITSKY V. Instance normalization: The missing ingredient for fast stylization[EB/OL]. (2017-11-06)[2016-02-01]. https://arxiv.org/abs/1607.08022v3.

[20] CHONG Y W, PENG C W, ZHANG C, et al. Learning domain invariant and specific representation for cross-domain person re-identification[J]. Applied Intelligence, 2021, 51(8): 5219-5232. doi: 10.1007/s10489-020-02107-2

[21] PARK H, HAM B. Relation network for person re-identification[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(7): 11839-11847.

[22] HERMANS A, BEYER L, LEIBE B. In defense of the triplet loss for person re-identification[EB/OL]. (2017-11-21)[2022-02-01]. https://arxiv.org/abs/1703.07737v4.

[23] SZEGEDY C, VANHOUCKE V, IOFFE S, et al. Rethinking the inception architecture for computer vision[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 2818-2826.

[24] ZHENG L, SHEN L Y, TIAN L, et al. Scalable person re-identification: A benchmark[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2016: 1116-1124.

[25] RISTANI E, SOLERA F, ZOU R, et al. Performance measures and a data set for multi-target, multi-camera tracking[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2016: 17-35.

[26] LI W, ZHAO R, XIAO T, et al. DeepReID: Deep filter pairing neural network for person re-identification[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2014: 152-159.

[27] MIAO J X, WU Y, LIU P, et al. Pose-guided feature alignment for occluded person re-identification[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2020: 542-551.

[28] CHEN B H, DENG W H, HU J N, et al. Mixed high-order attention network for person re-identification[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2020: 371-381.

[29] SHEN Y T, LI H S, YI S A, et al. Person re-identification with deep similarity-guided graph neural network[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018, 11219: 508-526.

[30] ZHENG Z D, YANG X D, YU Z D, et al. Joint discriminative and generative learning for person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 2133-2142.

[31] ZHENG M, KARANAM S, WU Z Y, et al. Re-identification with consistent attentive siamese networks[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 5728-5737.

[32] JIN X, LAN C L, ZENG W J, et al. Style normalization and restitution for generalizable person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 3140-3149.

[33] QUISPE R, PEDRINI H. Top-DB-Net: Top DropBlock for activation enhancement in person re-identification[C]//Proceedings of the IEEE International Conference on Pattern Recognition. Piscataway: IEEE Press, 2021: 2980-2987.

[34] CHEN F, WANG N, TANG J, et al. A feature disentangling approach for person re-identification via self-supervised data augmentation[J]. Applied Soft Computing, 2021, 100: 106939. doi: 10.1016/j.asoc.2020.106939

[35] TANG Y Z, YANG X, WANG N N, et al. Person re-identification with feature pyramid optimization and gradual background suppression[J]. Neural Networks, 2020, 124: 223-232. doi: 10.1016/j.neunet.2020.01.012

[36] LI Y, JIANG X Y, HWANG J N. Effective person re-identification by self-attention model guided feature learning[J]. Knowledge-Based Systems, 2020, 187: 104832. doi: 10.1016/j.knosys.2019.07.003

[37] SUN Y F, ZHENG L, LI Y L, et al. Learning part-based convolutional features for person re-identification[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(3): 902-917. doi: 10.1109/TPAMI.2019.2938523

[38] QUAN R J, DONG X Y, WU Y, et al. Auto-ReID: Searching for a part-aware ConvNet for person re-identification[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2020: 3749-3758.

[39] HE L X, LIANG J, LI H Q, et al. Deep spatial feature reconstruction for partial person re-identification: Alignment-free approach[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7073-7082.

[40] WANG G A, YANG S, LIU H Y, et al. High-order information matters: Learning relation and topology for occluded person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 6448-6457.

[41] TAN H C, LIU X P, YIN B C, et al. MHSA-Net: Multi-head self-attention network for occluded person re-identification[J]. IEEE Transactions on Neural Networks and Learning Systems, 2022, 99: 1-15.

[42] CHEN X S, FU C M, ZHAO Y, et al. Salience-guided cascaded suppression network for person re-identification[C]//Proceedings of the Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 3297-3307.

[43] CHEN T L, DING S J, XIE J Y, et al. ABD-Net: Attentive but diverse person re-identification[C]//Proceedings of the International Conference on Computer Vision. Piscataway: IEEE Press, 2020: 8350-8360.

[44] TAY C P, ROY S, YAP K H, et al. AANet: Attribute attention network for person re-identifications[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 7127-7136.

[45] HE L X, SUN Z N, ZHU Y H, et al. Recognizing partial biometric patterns[EB/OL]. (2018-10-17)[2022-02-01]. https://arxiv.org/abs/1810.07399.

[46] CHANG X B, HOSPEDALES T M, XIANG T, et al. Multi-level factorisation net for person re-identification[C]//Proceedings of the Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 2109-2118.

[47] SARFRAZ M S, SCHUMANN A, EBERLE A, et al. A pose-sensitive embedding for person re-identification with expanded cross neighborhood re-ranking[C]//Proceedings of the Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 420-429.
