
摘要:

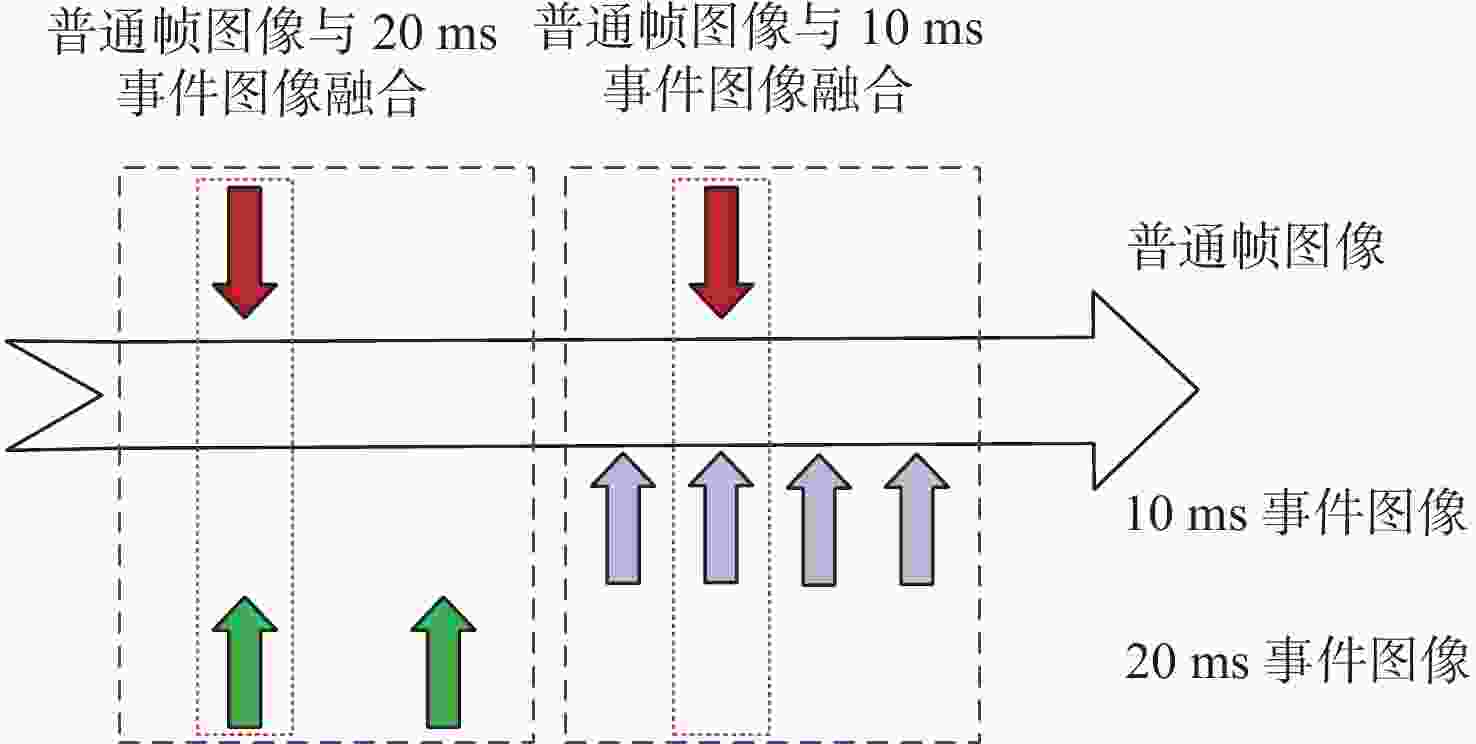

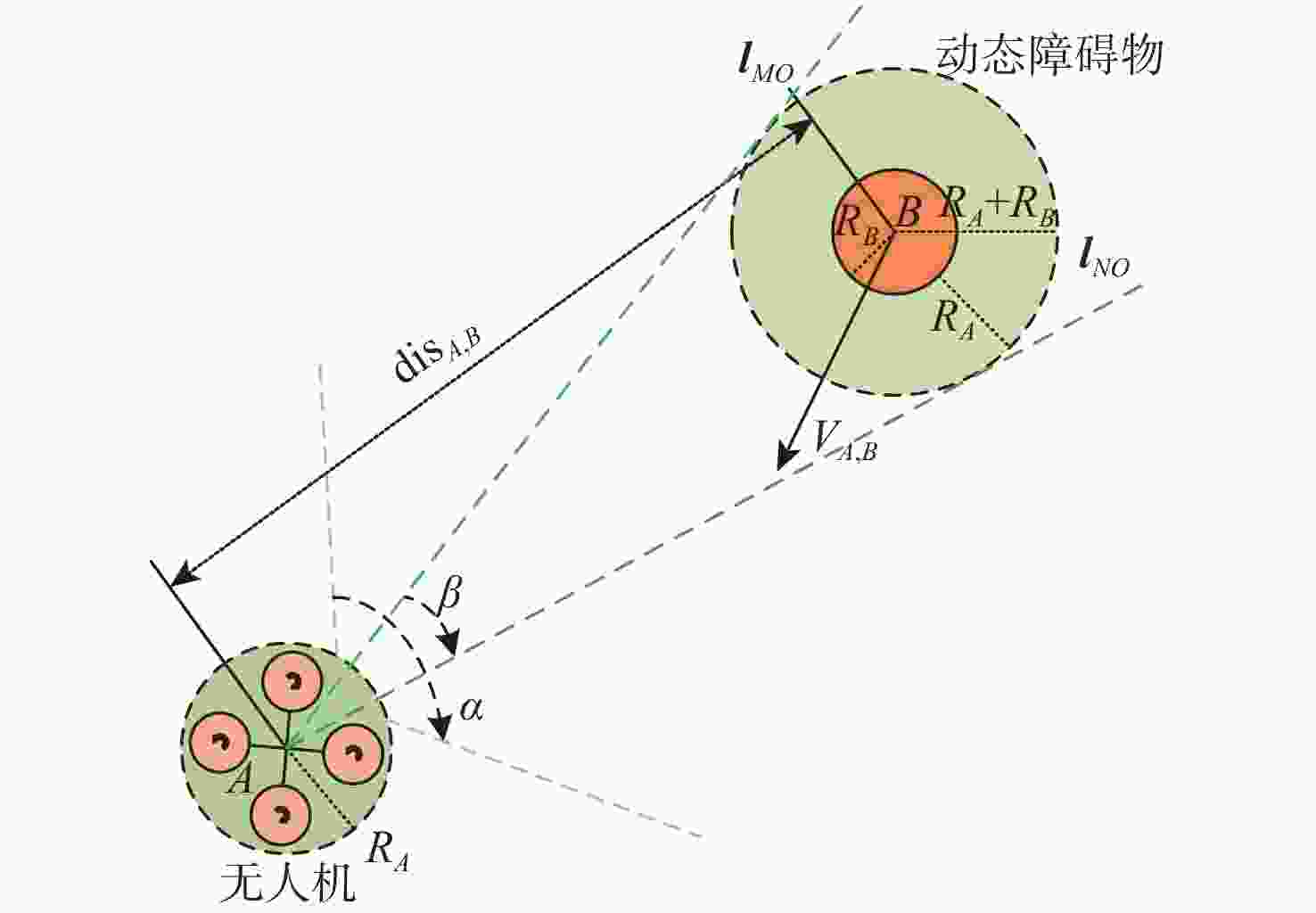

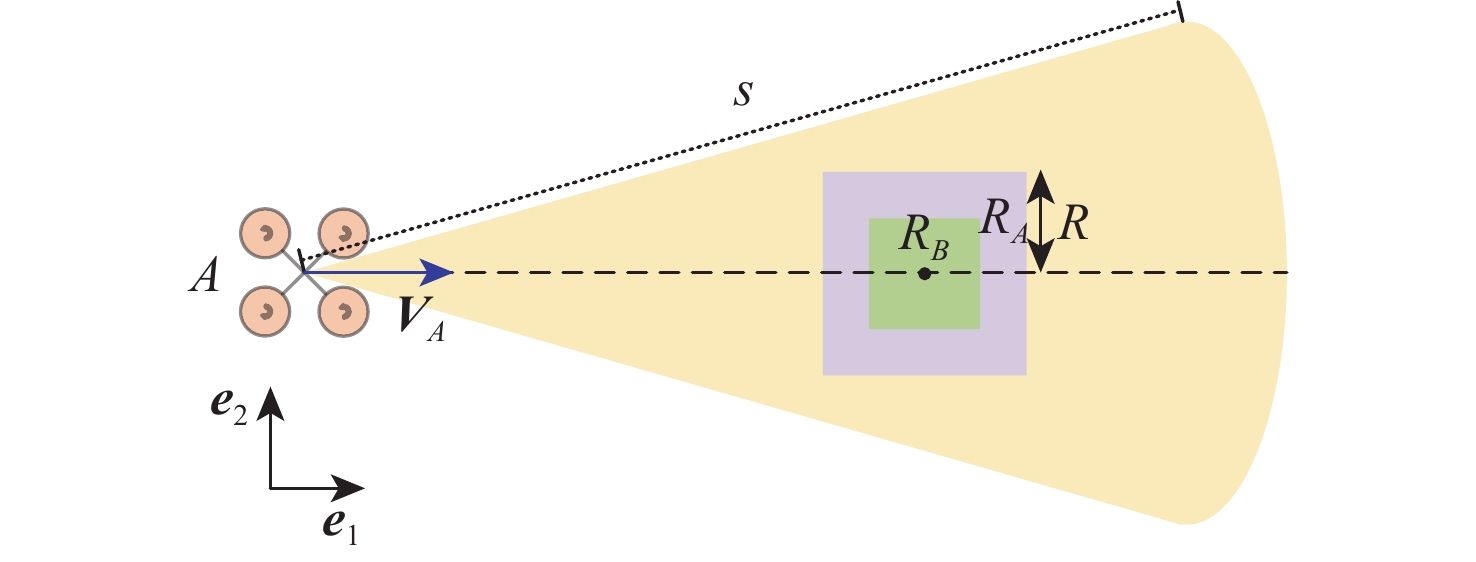

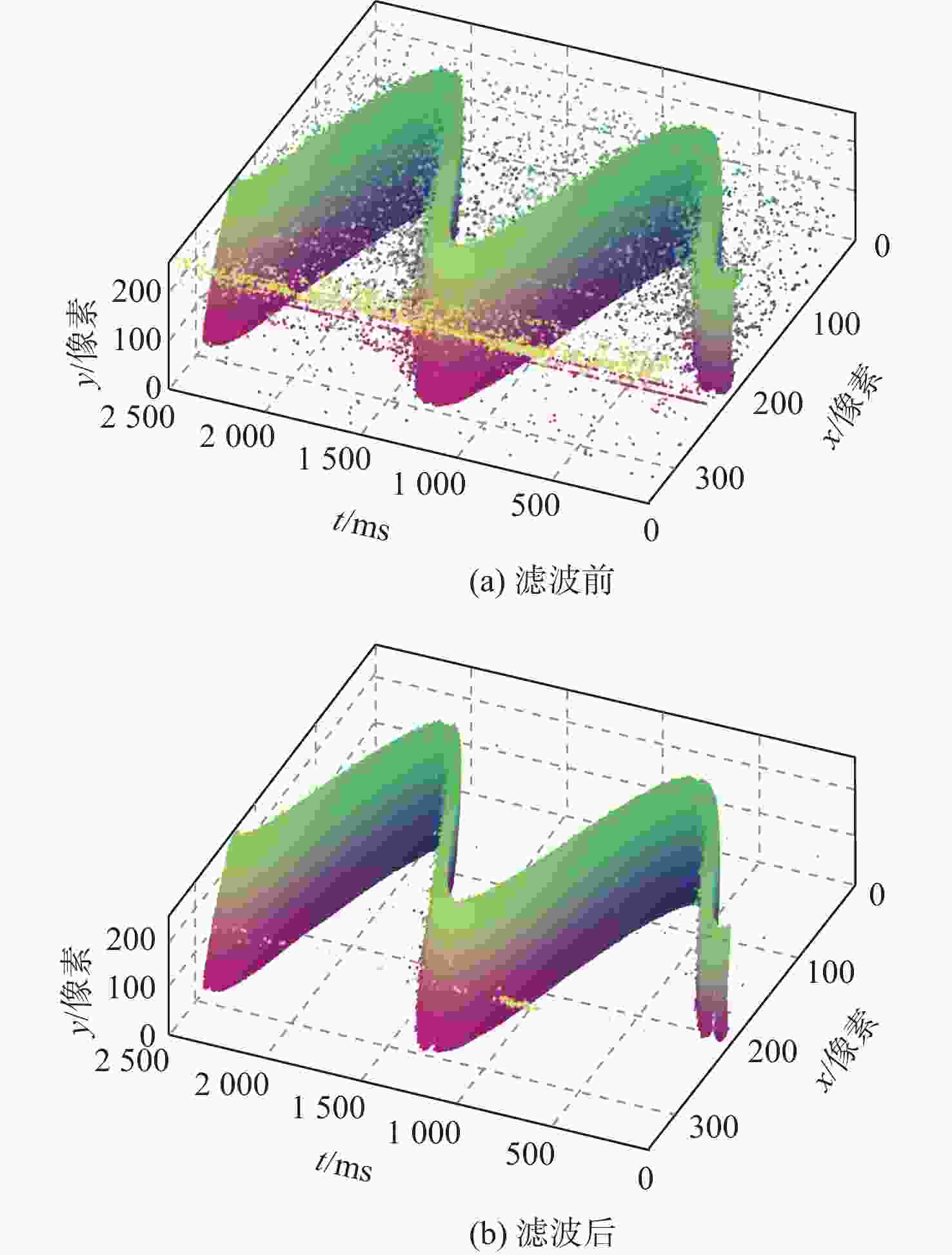

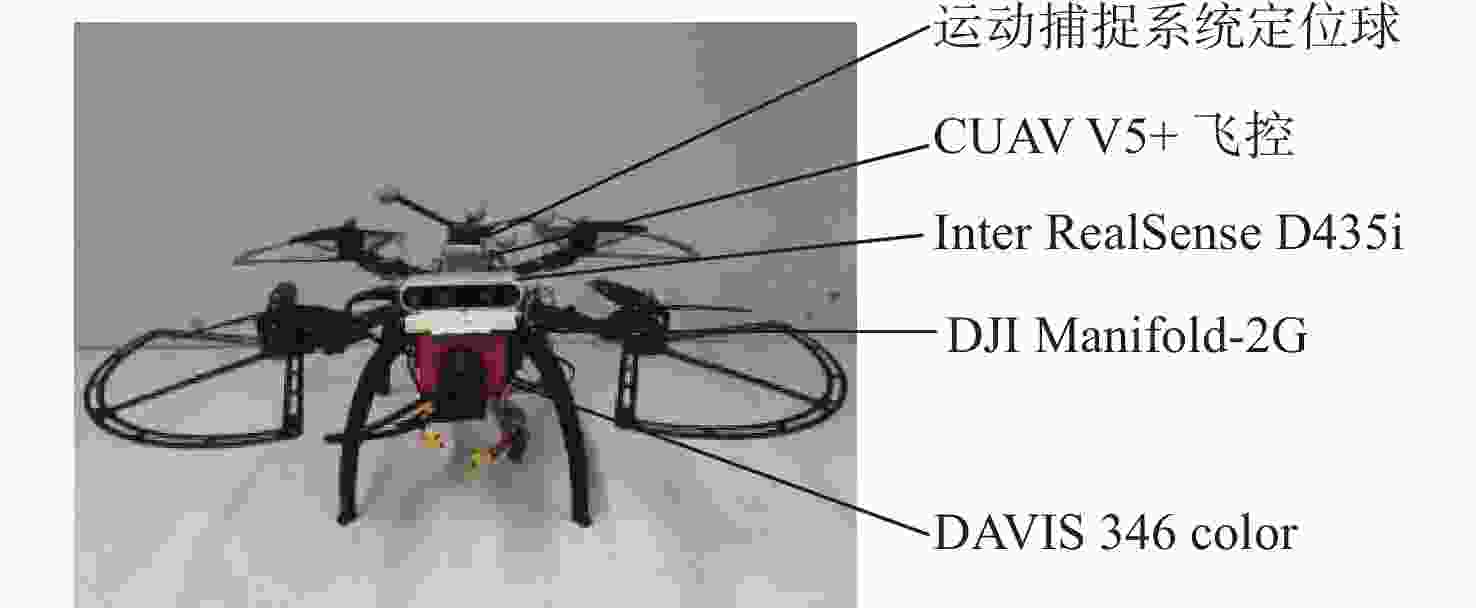

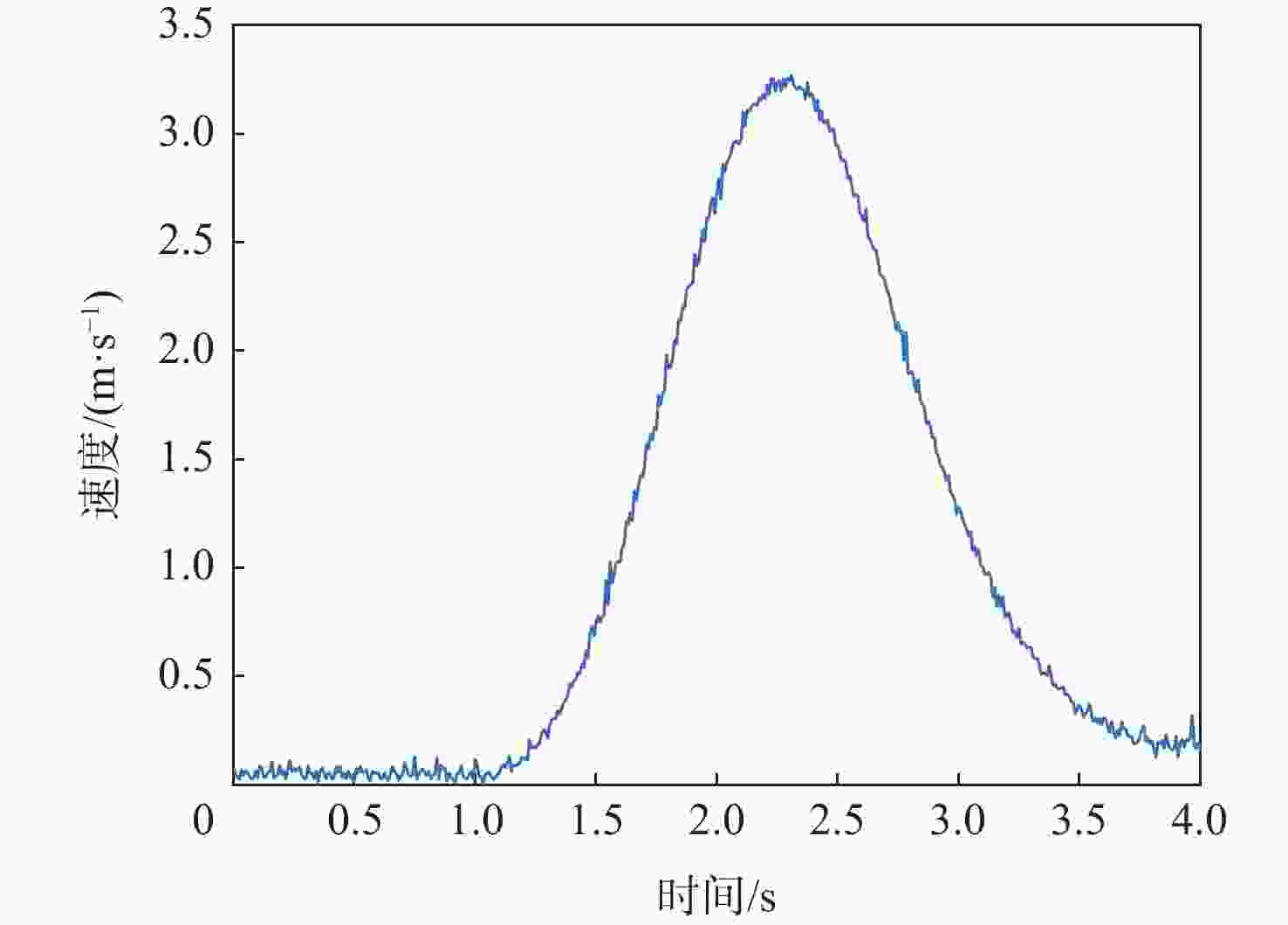

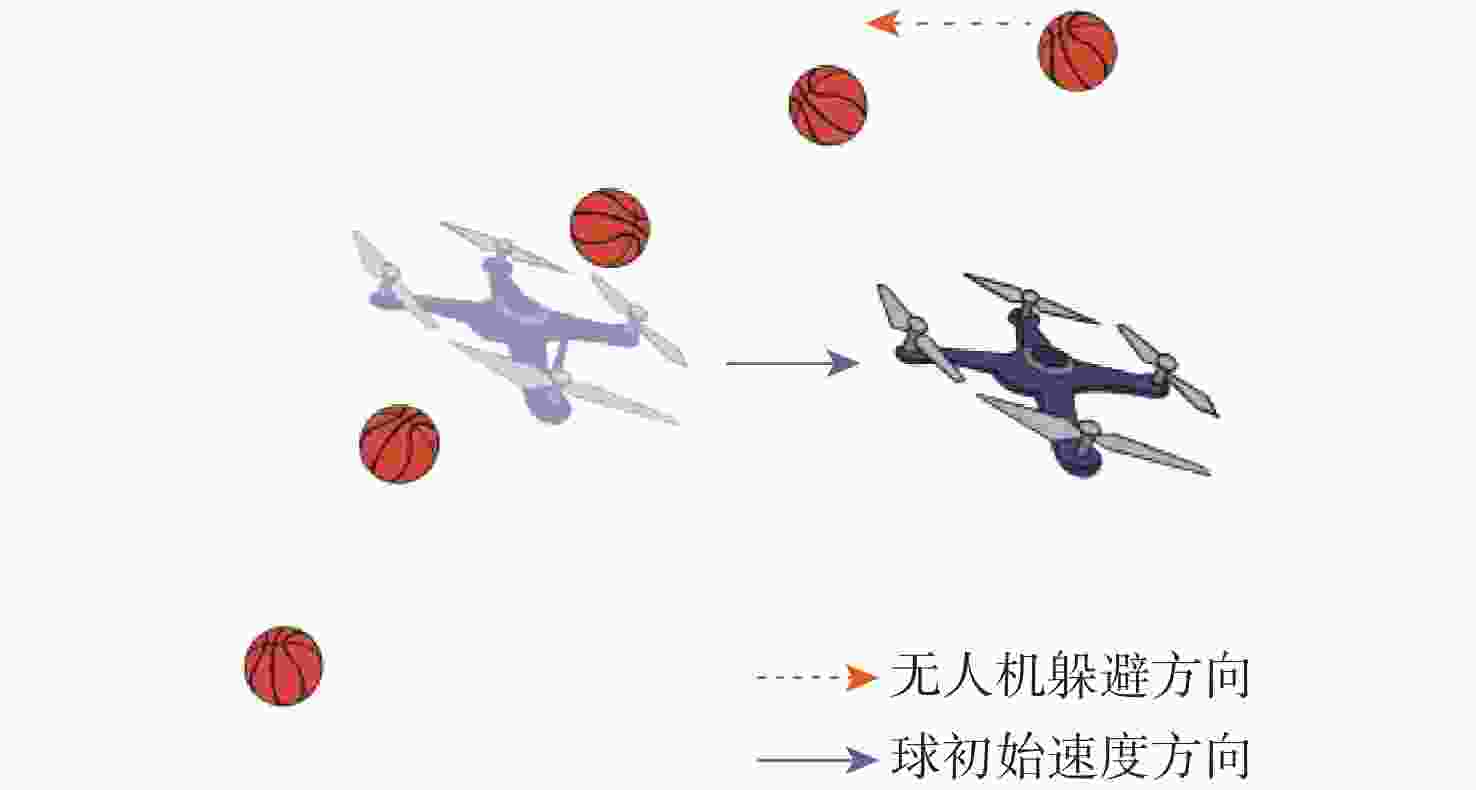

针对无人机在动态环境中感知动态目标与躲避高速动态障碍物,提出了基于动态视觉传感器的目标检测与避障算法。设计了滤波方法和运动补偿算法,滤除事件流中背景噪声、热点噪声及由相机自身运动产生的冗余事件;设计了一种融合事件图像和RGB图像的动态目标融合检测算法,保证检测的可靠性。根据检测结果对目标运动轨迹进行估计,结合障碍物运动特点和无人机动力学约束改进速度障碍法躲避动态障碍物。大量仿真试验、手持试验及飞行试验验证了所提算法的可行性。

Abstract:UAVs face a significant problem while trying to avoid moving objects while in flight. In order to detect and avoid high-speed dynamic obstacles in a dynamic environment, an algorithm for target detection and obstacle avoidance based on a dynamic vision sensor was designed. Firstly, we propose an event filter method and to filter the background noise, hot noise, and the method preserves the asynchrony of events. The motion compensation algorithm is designed to filter redundant events caused by the camera’s own motion in the event stream. For dynamic object detection, a fusion detection algorithm of event image and RGB image is designed, it has higher robustness in a highly dynamic environment. Finally, to avoid dynamic obstacles combined with the features of obstacle movement and UAV dynamic restrictions, finally, we enhanced the velocity obstacle method and estimated the target trajectory in accordance with the detection results. A large number of simulation tests, hand-held tests, and flight tests are carried out to verify the feasibility of the algorithm.
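The abstract names the goals of the filtering step but not the filter itself. Those goals are to remove background noise and hot-pixel noise while keeping events asynchronous. One common way to meet them is a spatiotemporal background-activity filter, shown here for illustration and not as the paper's method. Each event is kept only if one of its eight neighboring pixels fired within a window `dt_us` before it. The decision is made event by event, so no frames are accumulated. Excluding the event's own pixel means an isolated hot pixel can never support itself. The function name `ba_filter`, the `(t_us, x, y, polarity)` tuple layout and the 5 ms default window are assumptions, not values from the paper.

```python
import numpy as np


def ba_filter(events, width, height, dt_us=5000):
    """Background-activity filter (illustrative; not the paper's exact filter).

    events: iterable of (t_us, x, y, polarity) tuples sorted by timestamp.
    Returns the events supported by a neighboring pixel that fired at most
    dt_us microseconds earlier. Each event is judged on arrival, so the output
    stays an asynchronous event stream rather than a frame.
    """
    # Most recent timestamp seen at each pixel; -inf means "never fired".
    last_ts = np.full((height, width), -np.inf)
    kept = []
    for t, x, y, p in events:
        # 3x3 neighborhood, clipped at the sensor border.
        y0, y1 = max(y - 1, 0), min(y + 2, height)
        x0, x1 = max(x - 1, 0), min(x + 2, width)
        patch = last_ts[y0:y1, x0:x1].copy()
        # Ignore the event's own pixel so a hot pixel cannot support itself.
        patch[y - y0, x - x0] = -np.inf
        if t - patch.max() <= dt_us:
            kept.append((t, x, y, p))
        # Record every event (kept or not) as potential support for later ones.
        last_ts[y, x] = t
    return kept


stream = [
    (0, 10, 10, 1),      # first event of an edge: no support yet, dropped
    (1000, 11, 10, 1),   # neighbor fired 1 ms earlier: kept
    (2000, 50, 50, 1),   # hot pixel firing repeatedly in isolation: dropped
    (2100, 50, 50, 1),
    (20000, 12, 10, 0),  # neighbor last fired 19 ms earlier: dropped
]
print(ba_filter(stream, width=100, height=100))
```

The price of this design is that the first event of every genuine edge is discarded, because it has no earlier neighbor to vouch for it.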


Key words:

- event-based camera

- event filter

- motion compensation

- fusion detection

- velocity obstacle method


Table 1. Comparison of detection results

| Data source | Detected frames | Detection rate/% |
|---|---|---|
| RGB image | 293 | 67.9 |
| Event image | 376 | 87.2 |
| Fusion result | 415 | 96.3 |
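The source defines neither the detection rate nor the total number of test frames. If the rate is assumed to be detected frames divided by total frames, all three rows of Table 1 imply the same test sequence of roughly 431 frames, which is a useful consistency check. On that sequence the fusion result detects 122 more frames than the RGB image alone. The helper name below is hypothetical.

```python
# Detected-frame counts and detection rates (%) from Table 1.
TABLE_1 = {
    "RGB image": (293, 67.9),
    "Event image": (376, 87.2),
    "Fusion result": (415, 96.3),
}


def implied_total_frames(table):
    """Back-compute the sequence length implied by each (frames, rate %) pair.

    Assumes rate = detected frames / total frames, which the source does not state.
    """
    return {source: frames / (rate / 100) for source, (frames, rate) in table.items()}


for source, total in implied_total_frames(TABLE_1).items():
    print(f"{source}: about {total:.1f} frames in total")
```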

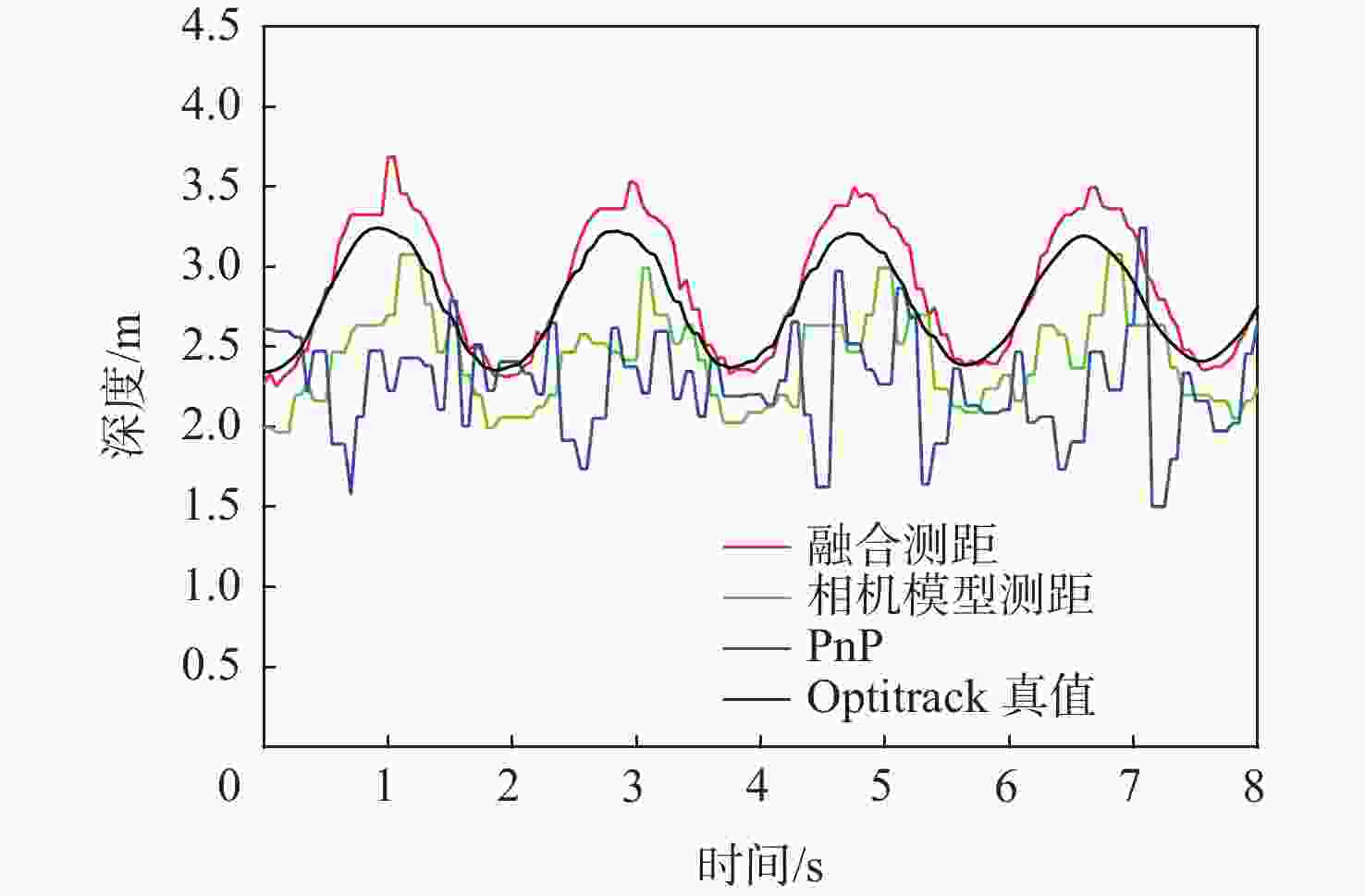

Table 2. Comparison of ranging results

| Method | Maximum error/m | Mean error/m |
|---|---|---|
| PnP | 1.5233 | 0.6193 |
| Camera-model ranging | 0.8261 | 0.3513 |
| Fusion ranging | 0.4770 | 0.1368 |
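Expressed as relative reductions, fusion ranging lowers the mean ranging error in Table 2 by about 78% compared with PnP and by about 61% compared with camera-model ranging. It lowers the maximum error by about 69% and 42% respectively. The short calculation below reproduces these figures from the table values. The helper `relative_reduction` is a hypothetical name used only for this illustration.

```python
# Ranging errors in metres from Table 2: (maximum error, mean error).
TABLE_2 = {
    "PnP": (1.5233, 0.6193),
    "Camera-model ranging": (0.8261, 0.3513),
    "Fusion ranging": (0.4770, 0.1368),
}


def relative_reduction(baseline, improved):
    """Fractional error reduction of `improved` with respect to `baseline`."""
    return 1.0 - improved / baseline


fused_max, fused_mean = TABLE_2["Fusion ranging"]
for method in ("PnP", "Camera-model ranging"):
    base_max, base_mean = TABLE_2[method]
    print(
        f"vs {method}: max error -{relative_reduction(base_max, fused_max):.1%}, "
        f"mean error -{relative_reduction(base_mean, fused_mean):.1%}"
    )
```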

Table 3. Obstacle avoidance simulation parameters

| Parameter | Value |
|---|---|
| UAV mass $m_A$/kg | 1.2 |
| UAV thrust-to-weight ratio $\tau$ | 6 |
| UAV safety radius $R_A$/cm | 30 |
| UAV maximum speed $\lvert\boldsymbol{V}_{A\max}\rvert$/(m·s⁻¹) | 5 |
| UAV field of view $\alpha$/(°) | 120 |
| UAV maximum detection range $s$/m | 4 |
| Obstacle radius $R_B$/cm | 12.3 |
| Obstacle maximum speed $\lvert\boldsymbol{V}_{B\max}\rvert$/(m·s⁻¹) | 12 |
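This section does not spell out the improved velocity obstacle method. As a baseline, the classic velocity obstacle test that it extends can be sketched with the Table 3 radii; the reciprocal velocity obstacles of [17] rest on the same test. The UAV is shrunk to a point and the obstacle is grown to a disc of radius $R_A+R_B=0.423$ m. The question is whether the relative velocity $\boldsymbol{v}=\boldsymbol{V}_A-\boldsymbol{V}_B$ carries the point into that disc. Let $\boldsymbol{p}$ be the obstacle position relative to the UAV. Solving $\lvert\boldsymbol{v}t-\boldsymbol{p}\rvert^2=(R_A+R_B)^2$ for the smallest $t\ge 0$ gives the time to collision. The sketch assumes planar, constant-velocity motion and ignores the thrust-to-weight ratio and field-of-view limits. `time_to_collision` is a hypothetical helper, not the paper's code. The sketch also computes the worst-case warning time $s/(\lvert\boldsymbol{V}_{A\max}\rvert+\lvert\boldsymbol{V}_{B\max}\rvert)=4/17\approx 0.235$ s. That figure assumes a head-on approach at both maximum speeds, with the obstacle first detected at the maximum detection range.

```python
import math

R_A = 0.30      # UAV safety radius, m (Table 3)
R_B = 0.123     # obstacle radius, m (Table 3)
S_MAX = 4.0     # maximum detection range, m (Table 3)
V_A_MAX = 5.0   # UAV maximum speed, m/s (Table 3)
V_B_MAX = 12.0  # obstacle maximum speed, m/s (Table 3)


def time_to_collision(p_a, v_a, p_b, v_b, radius=R_A + R_B):
    """Classic 2D velocity-obstacle test (hypothetical helper).

    Returns the earliest t >= 0 at which the UAV disc and the obstacle disc
    touch if both keep constant velocity, or None if v_a lies outside the
    velocity obstacle (no collision ahead).
    """
    px, py = p_b[0] - p_a[0], p_b[1] - p_a[1]  # obstacle position relative to UAV
    vx, vy = v_a[0] - v_b[0], v_a[1] - v_b[1]  # UAV velocity relative to obstacle
    c = px * px + py * py - radius * radius
    if c <= 0:
        return 0.0  # discs already overlap
    a = vx * vx + vy * vy
    b = px * vx + py * vy  # p . v; the quadratic is a t^2 - 2 b t + c = 0
    if a == 0 or b <= 0:
        return None  # no relative motion, or not closing in
    disc = b * b - a * c
    if disc < 0:
        return None  # relative-velocity ray misses the enlarged disc
    return (b - math.sqrt(disc)) / a  # smaller positive root


worst_case_warning = S_MAX / (V_A_MAX + V_B_MAX)
head_on = time_to_collision((0.0, 0.0), (5.0, 0.0), (4.0, 0.0), (-12.0, 0.0))
sidestep = time_to_collision((0.0, 0.0), (5.0, 3.0), (4.0, 0.0), (-12.0, 0.0))
print(f"worst-case warning time: {worst_case_warning:.3f} s")
print(f"head-on: contact after {head_on:.3f} s")
print("sidestep:", "collision" if sidestep is not None else "clear")
```

In the head-on case the discs touch after about 0.21 s. That is slightly earlier than the centre-to-centre estimate, because contact happens 0.423 m before the centres meet. Adding a 3 m/s lateral velocity component is enough to leave the velocity obstacle.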

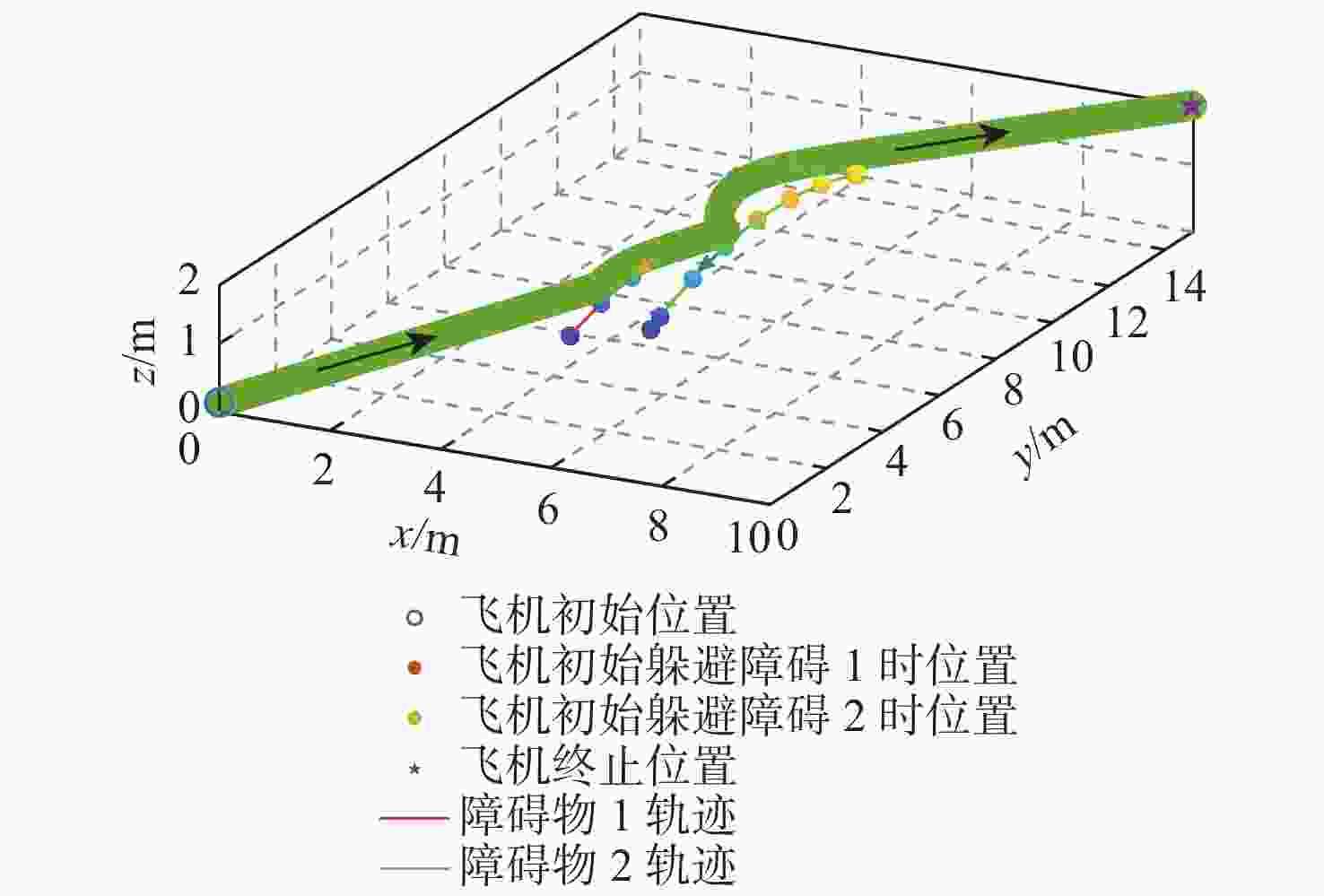

Table 4. Comparison of obstacle avoidance simulation results

| Obstacle speed/(m·s⁻¹) | Flight time/ms, method of Ref. [12] | Flight time/ms, proposed method |
|---|---|---|
| 2.4 | 3274 | 3082 |
| 9.6 | 3956 | 3086 |
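Table 4 supports two readings, both reproduced by the snippet below from the table values alone. First, the proposed method has a shorter flight time than the method of Ref. [12]. The gap is 192 ms (about 5.9%) at an obstacle speed of 2.4 m/s and 870 ms (about 22%) at 9.6 m/s. Second, the proposed method's flight time barely changes with obstacle speed (+4 ms), whereas that of Ref. [12] grows by 682 ms.

```python
# Flight times in ms from Table 4: obstacle speed (m/s) -> (Ref. [12], proposed).
TABLE_4 = {2.4: (3274, 3082), 9.6: (3956, 3086)}

for speed, (ref12_ms, ours_ms) in TABLE_4.items():
    saving = (ref12_ms - ours_ms) / ref12_ms
    print(f"{speed} m/s: {ref12_ms - ours_ms} ms shorter ({saving:.1%})")

# Change in flight time when the obstacle speed rises from 2.4 to 9.6 m/s.
growth_ref12 = TABLE_4[9.6][0] - TABLE_4[2.4][0]
growth_ours = TABLE_4[9.6][1] - TABLE_4[2.4][1]
print(f"growth from 2.4 to 9.6 m/s: Ref. [12] {growth_ref12} ms, proposed {growth_ours} ms")
```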

[1] WANG T T, CAI Z H, WANG Y X. Integrated indoor vision/inertial navigation method of UAVs in indoor environment[J]. Journal of Beijing University of Aeronautics and Astronautics, 2018, 44(1): 176-186 (in Chinese). doi: 10.13700/j.bh.1001-5965.2016.0965

[2] FALANGA D, KIM S, SCARAMUZZA D. How fast is too fast? The role of perception latency in high-speed sense and avoid[J]. IEEE Robotics and Automation Letters, 2019, 4(2): 1884-1891. doi: 10.1109/LRA.2019.2898117

[3] DELBRUCK T. Neuromorphic vision sensing and processing[C]//Proceedings of the 46th European Solid State Device Research Conference. Piscataway: IEEE Press, 2016: 7-14.

[4] BRANDLI C, BERNER R, YANG M H, et al. A 240 × 180 130 dB 3 µs latency global shutter spatiotemporal vision sensor[J]. IEEE Journal of Solid-State Circuits, 2014, 49(10): 2333-2341. doi: 10.1109/JSSC.2014.2342715

[5] GALLEGO G, DELBRÜCK T, ORCHARD G M, et al. Event-based vision: A survey[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 44(1): 154-180.

[6] KHODAMORADI A, KASTNER R. O(N)-space spatiotemporal filter for reducing noise in neuromorphic vision sensors[J]. IEEE Transactions on Emerging Topics in Computing, 2021, 9(1): 15-23.

[7] FENG Y, LV H Y, LIU H L, et al. Event density based denoising method for dynamic vision sensor[J]. Applied Sciences, 2020, 10(6): 2024. doi: 10.3390/app10062024

[8] YAN C D, WANG X, ZUO Y F, et al. Visualization and noise reduction algorithm based on event camera[J]. Journal of Beijing University of Aeronautics and Astronautics, 2021, 47(2): 342-350 (in Chinese). doi: 10.13700/j.bh.1001-5965.2020.0192

[9] MUEGGLER E, FORSTER C, BAUMLI N, et al. Lifetime estimation of events from dynamic vision sensors[C]//Proceedings of the IEEE International Conference on Robotics and Automation. Piscataway: IEEE Press, 2015: 4874-4881.

[10] MITROKHIN A, FERMÜLLER C, PARAMESHWARA C, et al. Event-based moving object detection and tracking[C]//Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Piscataway: IEEE Press, 2019: 1-9.

[11] HE B T, LI H J, WU S Y, et al. FAST-dynamic-vision: Detection and tracking dynamic objects with event and depth sensing[C]//Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Piscataway: IEEE Press, 2021: 3071-3078.

[12] FALANGA D, KLEBER K, SCARAMUZZA D. Dynamic obstacle avoidance for quadrotors with event cameras[J]. Science Robotics, 2020, 5(40): 1-14. doi: 10.1126/scirobotics.aaz9712

[13] SANKET N J, PARAMESHWARA C M, SINGH C D, et al. EVDodgeNet: Deep dynamic obstacle dodging with event cameras[C]//Proceedings of the IEEE International Conference on Robotics and Automation. Piscataway: IEEE Press, 2020: 10651-10657.

[14] REDMON J, DIVVALA S, GIRSHICK R, et al. You only look once: Unified, real-time object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 779-788.

[15] JULIER S J, UHLMANN J K. Unscented filtering and nonlinear estimation[J]. Proceedings of the IEEE, 2004, 92(3): 401-422. doi: 10.1109/JPROC.2003.823141

[16] MOSHTAGH N. Minimum volume enclosing ellipsoids[J]. Convex Optimization, 2005, 111: 1-9.

[17] VAN DEN BERG J, LIN M C, MANOCHA D. Reciprocal velocity obstacles for real-time multi-agent navigation[C]//Proceedings of the IEEE International Conference on Robotics and Automation. Piscataway: IEEE Press, 2008: 1928-1935.
