
摘要:

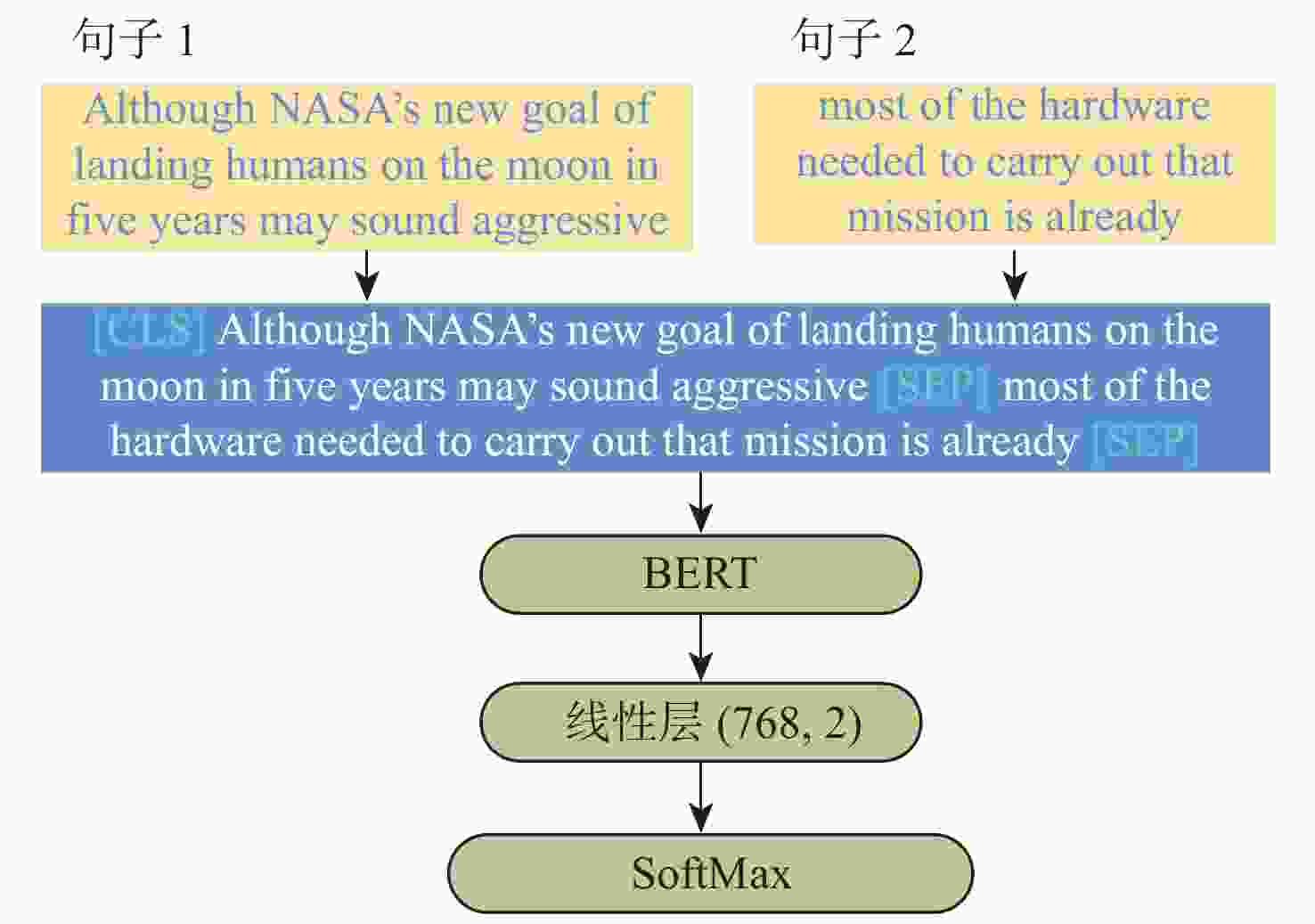

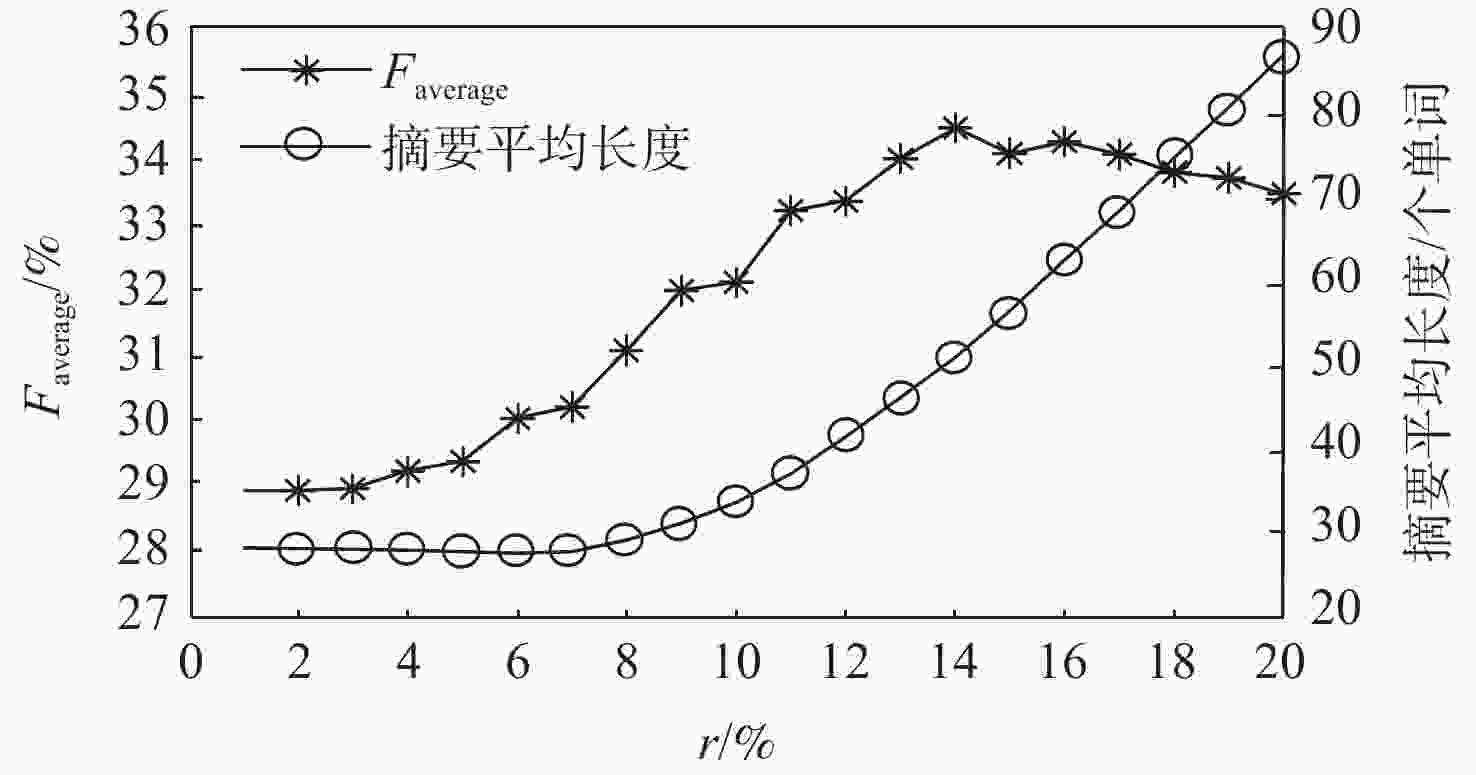

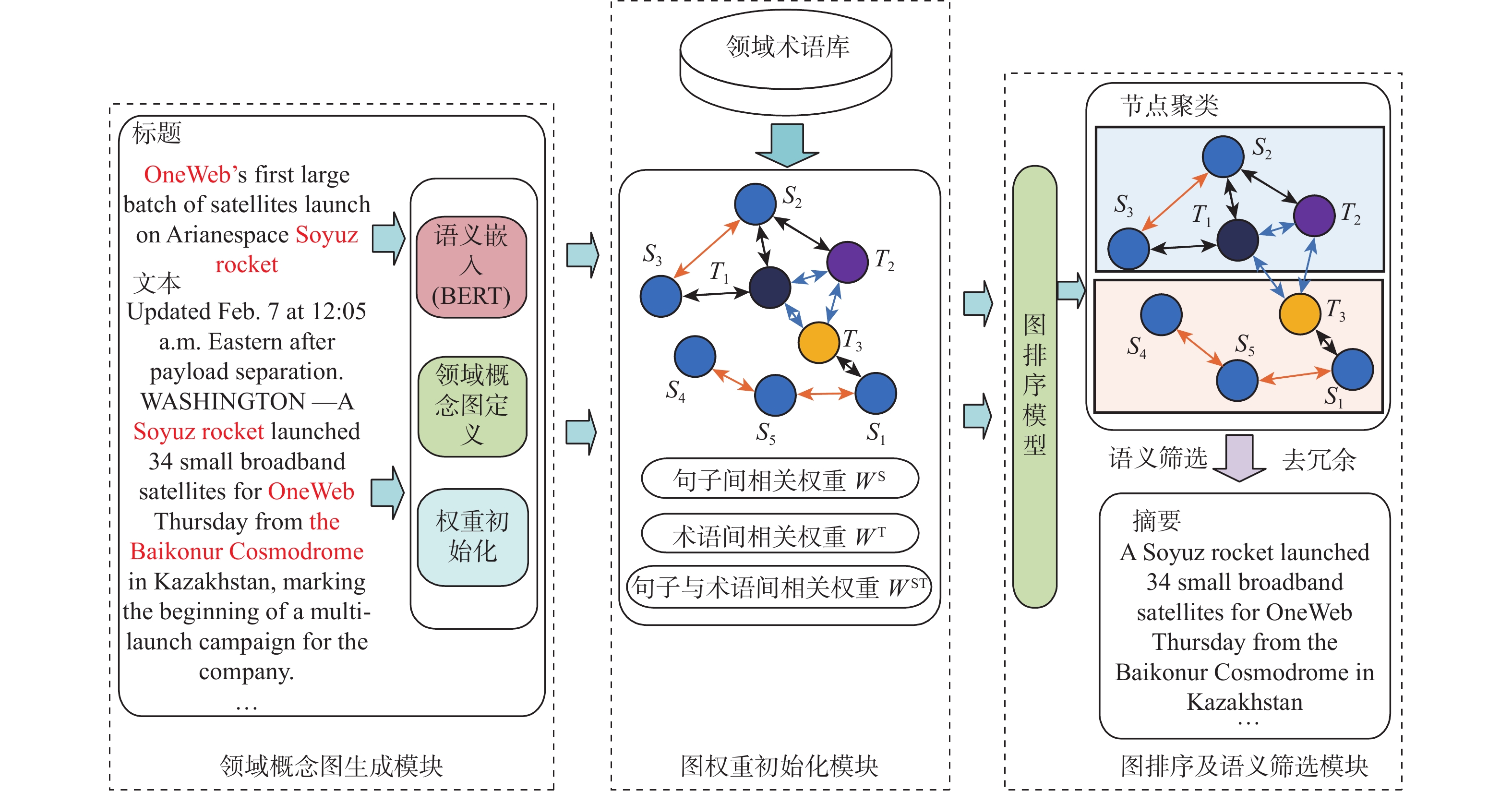

互联网海量的航天新闻中隐含着大量航天情报信息,对其进行理解与压缩是提高后续情报分析效率的基础。然而通用的自动摘要算法往往会忽略很多航天领域关键信息,且有监督自动摘要算法需要对领域文本进行大量的数据标注,费时费力。因此,提出一种基于领域概念图的无监督自动摘要(DCG-TextRank)模型,利用领域术语辅助引导图排序,提高模型对领域文本的理解力。该模型分3个模块:领域概念图生成、图权重初始化、图排序及语义筛选。根据句向量相似度和领域术语库,将文本转换为包含句子节点和领域术语节点的领域概念图;根据航天新闻文本特征初始化领域概念图权值;采用TextRank模型对句子进行排序,并在语义筛选模块通过图节点聚类及设置摘要语义保留度的方法改进TextRank的输出,充分保留文本的多语义信息并降低冗余。所提模型具有领域可移植性,且实验结果表明:在航天新闻数据集中,所提模型相比传统TextRank模型性能提升了14.97%,相比有监督抽取式文本摘要模型BertSum和MatchSum性能提升了4.37%~12.97%。

Abstract: The vast volume of aerospace news on the Internet contains a great deal of aerospace intelligence, and understanding and compressing this news is the basis for making subsequent intelligence analysis more efficient. However, general-purpose automatic summarization algorithms tend to overlook much of the key domain information, while supervised summarization algorithms require large amounts of annotated domain text, which is time-consuming and laborious to produce. We therefore propose DCG-TextRank, an unsupervised automatic summarization model based on a domain concept graph, which uses domain terms to guide graph ranking and thereby improves the model's understanding of domain text. The model consists of three modules: domain concept graph generation, graph weight initialization, and graph ranking with semantic filtering. First, the text is transformed into a domain concept graph containing sentence nodes and domain term nodes, based on sentence vector similarity and a domain term database. Second, the weights of the domain concept graph are initialized according to the features of aerospace news text. Finally, TextRank ranks the sentences, and the semantic filtering module refines the TextRank output by clustering the graph nodes and setting a semantic retention level for the summary, which preserves the multiple semantic aspects of the text while reducing redundancy. The proposed model is portable across domains. Experimental results on an aerospace news dataset show that it outperforms the conventional TextRank model by 14.97% and the supervised extractive summarization models BertSum and MatchSum by 4.37%~12.97%.
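The core idea in the abstract, ranking sentences with TextRank over a graph that mixes sentence nodes and domain-term nodes, can be illustrated with a minimal sketch. It rests on several assumptions that are not from the paper: sentence vectors are supplied directly (toy vectors here instead of BERT embeddings), sentence-sentence edges are weighted by cosine similarity above a hypothetical `sim_threshold`, sentence-term edges get a fixed hypothetical `term_weight`, and neither the news-specific weight initialization nor the clustering-based semantic filtering module is modeled. All function names are illustrative, not the authors' code.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_concept_graph(sentences, sent_vecs, terms, sim_threshold=0.3, term_weight=1.0):
    """Undirected weighted graph with sentence nodes ("s", i) and term nodes ("t", term).

    Assumed edge rules (hypothetical, not taken from the paper):
      * sentence-sentence edge if cosine similarity > sim_threshold, weighted by it;
      * sentence-term edge with weight term_weight if the term occurs in the sentence.
    """
    graph = {("s", i): {} for i in range(len(sentences))}
    graph.update({("t", t): {} for t in terms})
    for i, j in combinations(range(len(sentences)), 2):
        w = cosine(sent_vecs[i], sent_vecs[j])
        if w > sim_threshold:
            graph[("s", i)][("s", j)] = graph[("s", j)][("s", i)] = w
    for i, sentence in enumerate(sentences):
        for t in terms:
            if t.lower() in sentence.lower():
                graph[("s", i)][("t", t)] = graph[("t", t)][("s", i)] = term_weight
    return graph


def weighted_textrank(graph, d=0.85, max_iter=100, tol=1e-8):
    """Weighted TextRank by power iteration, using the PageRank form with a (1 - d) / N term."""
    n = len(graph)
    out_weight = {v: sum(nbrs.values()) for v, nbrs in graph.items()}
    score = {v: 1.0 / n for v in graph}
    for _ in range(max_iter):
        # Each neighbour u passes on score in proportion to the edge weight (u, v).
        new = {
            v: (1 - d) / n
            + d * sum(score[u] * graph[u][v] / out_weight[u] for u in graph[v])
            for v in graph
        }
        converged = sum(abs(new[v] - score[v]) for v in graph) < tol
        score = new
        if converged:
            break
    return score


def rank_sentences(sentences, sent_vecs, terms, **kwargs):
    """Sentence indices ordered from highest to lowest TextRank score."""
    scores = weighted_textrank(build_concept_graph(sentences, sent_vecs, terms, **kwargs))
    return sorted(range(len(sentences)), key=lambda i: scores[("s", i)], reverse=True)


sentences = [
    "SpaceX launched a Starlink satellite.",
    "The satellite reached orbit after launch.",
    "Weather in the region was mild.",
]
vecs = [[1.0, 1.0, 0.0], [1.0, 0.9, 0.1], [0.0, 0.0, 1.0]]  # toy stand-ins for sentence embeddings
terms = ["SpaceX", "satellite"]
print(rank_sentences(sentences, vecs, terms))  # [0, 1, 2]
```

The first sentence ranks highest because it is linked both to the similar second sentence and to two term nodes; the off-topic third sentence has no edges and keeps only the baseline score. The (1 - d) / N variant differs from the original TextRank (1 - d) term only by a constant factor, so the ranking is the same.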



Table 1. Glossary of aerospace terms

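| Term type | Count | Example |
|---|---|---|
| Government space agency | 78 | UK Space Agency |
| Organization | 29 | Defense Department |
| Private space company | 122 | SpaceX |
| Rocket/satellite/satellite network | 5933 | StarLink |
| Space center/launch site | 70 | Andoya Spaceport |
| Other keyword | 450 | satellite |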
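Linking sentences to term nodes requires looking terms up in a glossary like Table 1. The sketch below assumes case-insensitive, whole-word matching and uses only the example entries from Table 1; the matching rule, the data layout and the names `GLOSSARY`, `compile_glossary` and `find_terms` are hypothetical, not the paper's implementation.

```python
import re

# Example entries copied from Table 1; the real glossary holds several thousand terms.
GLOSSARY = {
    "Government space agency": ["UK Space Agency"],
    "Organization": ["Defense Department"],
    "Private space company": ["SpaceX"],
    "Rocket/satellite/satellite network": ["StarLink"],
    "Space center/launch site": ["Andoya Spaceport"],
    "Other keyword": ["satellite"],
}


def compile_glossary(glossary):
    """Pre-compile one case-insensitive, word-bounded pattern per term."""
    return [
        (term, term_type, re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE))
        for term_type, terms in glossary.items()
        for term in terms
    ]


def find_terms(sentence, compiled):
    """Return (term, term_type) pairs for every glossary term found in the sentence."""
    return [(term, term_type) for term, term_type, pat in compiled if pat.search(sentence)]


compiled = compile_glossary(GLOSSARY)
print(find_terms("The UK Space Agency signed a deal with SpaceX for a Starlink satellite.", compiled))
# [('UK Space Agency', 'Government space agency'), ('SpaceX', 'Private space company'),
#  ('StarLink', 'Rocket/satellite/satellite network'), ('satellite', 'Other keyword')]
```

Whole-word matching keeps "SpaceX" from matching inside longer tokens, but it also misses inflected forms such as "satellites"; a production system would likely add lemmatization or variant lists.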

Table 2. Comparison of experimental results between the proposed model and traditional unsupervised models

All values are in %.

| Model | F1 | F2 | FL | Faverage |
|---|---|---|---|---|
| TextRank | 26.98 | 13.44 | 24.06 | 21.50 |
| TFIDF+TextRank | 31.32 | 19.29 | 29.86 | 26.82 |
| doc2vec+TextRank | 30.05 | 16.95 | 27.87 | 24.96 |
| BERT (not fine-tuned)+TextRank | 30.71 | 17.43 | 28.54 | 25.56 |
| BERT+TextRank | 31.08 | 17.96 | 28.84 | 25.96 |
| DCG-TextRank-S1 | 33.12 | 20.90 | 31.25 | 28.42 |
| DCG-TextRank-S2 | 34.95 | 23.10 | 33.12 | 30.39 |
| DCG-TextRank | 37.97 | 26.43 | 36.03 | 33.48 |
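The Faverage column appears to be the unweighted arithmetic mean of F1, F2 and FL. This is an inference from the numbers rather than a definition given in this section; the check below recomputes the mean for every row of Table 2 and finds agreement within 0.01, i.e. within the table's rounding.

```python
# Table 2 rows: (F1, F2, FL, Faverage), all in %.
TABLE2 = {
    "TextRank": (26.98, 13.44, 24.06, 21.50),
    "TFIDF+TextRank": (31.32, 19.29, 29.86, 26.82),
    "doc2vec+TextRank": (30.05, 16.95, 27.87, 24.96),
    "BERT (not fine-tuned)+TextRank": (30.71, 17.43, 28.54, 25.56),
    "BERT+TextRank": (31.08, 17.96, 28.84, 25.96),
    "DCG-TextRank-S1": (33.12, 20.90, 31.25, 28.42),
    "DCG-TextRank-S2": (34.95, 23.10, 33.12, 30.39),
    "DCG-TextRank": (37.97, 26.43, 36.03, 33.48),
}


def f_average(f1, f2, fl):
    """Assumed definition: the plain mean of the three F scores."""
    return (f1 + f2 + fl) / 3


for model, (f1, f2, fl, reported) in TABLE2.items():
    computed = f_average(f1, f2, fl)
    # Allow 0.01 for rounding in the published table.
    ok = abs(computed - reported) <= 0.01
    print(f"{model:<32} computed={computed:.2f} reported={reported:.2f} ok={ok}")
```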

Table 3. Comparison of experimental results between the proposed model and mainstream supervised models

All values are in %.

| Model | F1 | F2 | FL | Faverage |
|---|---|---|---|---|
| BertSum+Classifier | 31.71 | 14.60 | 24.18 | 23.50 |
| BertSum+RNN | 36.25 | 25.20 | 33.74 | 31.73 |
| BertSum+Transformer | 38.09 | 24.78 | 33.42 | 32.10 |
| MatchSum | 37.73 | 25.05 | 32.54 | 31.77 |
| Seq2Seq+Attention | 13.69 | 5.35 | 11.94 | 10.32 |
| Pointer-generator | 38.11 | 9.76 | 36.78 | 28.22 |
| Pointer-generator+Coverage | 45.67 | 21.61 | 44.17 | 37.15 |
| DCG-TextRank | 41.17 | 30.06 | 38.18 | 36.47 |
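Comparing the abstract's improvement figures with the tables suggests that they are absolute differences in Faverage (percentage points), taking DCG-TextRank's 36.47 from Table 3 and TextRank's 21.50 from Table 2. This reading is our reconstruction from the published numbers, not something stated in this section; the snippet simply performs the subtractions.

```python
DCG_FAVG = 36.47       # DCG-TextRank, Table 3
TEXTRANK_FAVG = 21.50  # TextRank, Table 2

# Supervised extractive baselines from Table 3 (Faverage, %).
SUPERVISED_FAVG = {
    "BertSum+Classifier": 23.50,
    "BertSum+RNN": 31.73,
    "BertSum+Transformer": 32.10,
    "MatchSum": 31.77,
}


def gap(a, b):
    """Absolute difference in percentage points, rounded like the tables."""
    return round(a - b, 2)


vs_textrank = gap(DCG_FAVG, TEXTRANK_FAVG)
vs_supervised = {name: gap(DCG_FAVG, f) for name, f in SUPERVISED_FAVG.items()}

print(vs_textrank)                                            # 14.97
print(min(vs_supervised.values()), max(vs_supervised.values()))  # 4.37 12.97
```

Under this reading the 4.37 lower bound comes from BertSum+Transformer and the 12.97 upper bound from BertSum+Classifier, with MatchSum in between.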

Table 4. Example of summary comparison

| Model | Summary |
|---|---|
| TFIDF+TextRank | The spacecraft will deposit the samples collected by TAGSAM into a sample return canister based on the same design used for the Stardust comet dust sample return mission. Collection of the sample will mark the end of the science phase of the mission as the spacecraft moves to a safe distance from the asteroid. |
| BERT+TextRank | OSIRIS-REx is the third mission in NASA’s New Frontiers program of medium-sized planetary missions, with an estimated cost of $800 million plus launch and operations. A few days before Juno’s arrival, NASA formally extended the mission for New Horizons, the first New Frontiers mission, which flew past the dwarf planet Pluto in July 2015. |
| BertSum+Transformer | An Atlas 5 successfully launched a NASA mission to visit a near Earth asteroid and return samples of it to Earth Sept. 8. The United Launch Alliance Atlas 5 411 lifted off at 7:05 p.m. Eastern from Space Launch Complex 41 at Cape Canaveral, Florida. |
| MatchSum | An Atlas 5 successfully launched a NASA mission to visit a near Earth asteroid and return samples of it to Earth Sept. 8. TAGSAM is designed to collect at least 60 grams of material, but project officials are confident it can collect much more, perhaps up to 2 kilograms. The spacecraft will deposit the samples collected by TAGSAM into a sample return canister based on the same design used for the Stardust comet dust sample return mission. |
| Pointer-generator | Atlas atlas successfully launched a nasa mission mission visit a earth earth earth earth of of to to earth … (a long run of repeated words follows; omitted) |
| Pointer-generator+Coverage | Atlas 5 launched launched a nasa nasa mission to a a earth earth and and of of to to earth earth sept sept sept sept … (a long run of repeated words follows; omitted) |
| DCG-TextRank | An Atlas 5 successfully launched a NASA mission to visit a near Earth asteroid and return samples of it to Earth Sept.8. OSIRIS-REx is the third mission in NASA’s New Frontiers program of medium-sized planetary missions, with an estimated cost of $800 million plus launch and operations. |

Table 5. Reference summary and key information

Reference summary: An Atlas 5 successfully launched a NASA mission to visit a near Earth asteroid and return samples of it to Earth Sept.8. OSIRIS-REx is the third mission in NASA’s New Frontiers program of medium-sized planetary missions, with an estimated cost of $800 million plus launch and operations. NASA is also preparing for the competition for the fourth New Frontiers mission.

| Key information type | Content |
|---|---|
| Launch vehicle | Atlas 5 |
| Agency | NASA |
| Mission of this launch | visit a near Earth asteroid and return samples of it to Earth |
| Mission series | medium-sized planetary missions |
| Third mission | OSIRIS-REx |
| Plan | the fourth New Frontiers mission |
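Table 5 lists the key facts that a good summary of this article should retain. One rough, hypothetical way to relate it to the candidate summaries in Table 4 is to check which items appear verbatim in each summary. The case-insensitive substring test below is our simplification, not the paper's evaluation metric, and it will miss paraphrases; `KEY_INFO` and `key_info_coverage` are illustrative names.

```python
# Key information items from Table 5.
KEY_INFO = {
    "Launch vehicle": "Atlas 5",
    "Agency": "NASA",
    "Mission of this launch": "visit a near Earth asteroid and return samples of it to Earth",
    "Mission series": "medium-sized planetary missions",
    "Third mission": "OSIRIS-REx",
    "Plan": "the fourth New Frontiers mission",
}


def key_info_coverage(summary, key_info=KEY_INFO):
    """Return (names of items found verbatim, fraction of items found); case-insensitive."""
    text = summary.lower()
    covered = [name for name, value in key_info.items() if value.lower() in text]
    return covered, len(covered) / len(key_info)


dcg = ("An Atlas 5 successfully launched a NASA mission to visit a near Earth asteroid and "
       "return samples of it to Earth Sept.8. OSIRIS-REx is the third mission in NASA's New "
       "Frontiers program of medium-sized planetary missions, with an estimated cost of "
       "$800 million plus launch and operations.")
tfidf = ("The spacecraft will deposit the samples collected by TAGSAM into a sample return "
         "canister based on the same design used for the Stardust comet dust sample return "
         "mission. Collection of the sample will mark the end of the science phase of the "
         "mission as the spacecraft moves to a safe distance from the asteroid.")

print(key_info_coverage(dcg))    # five of six items; only "Plan" is missing
print(key_info_coverage(tfidf))  # ([], 0.0)
```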

