
摘要:

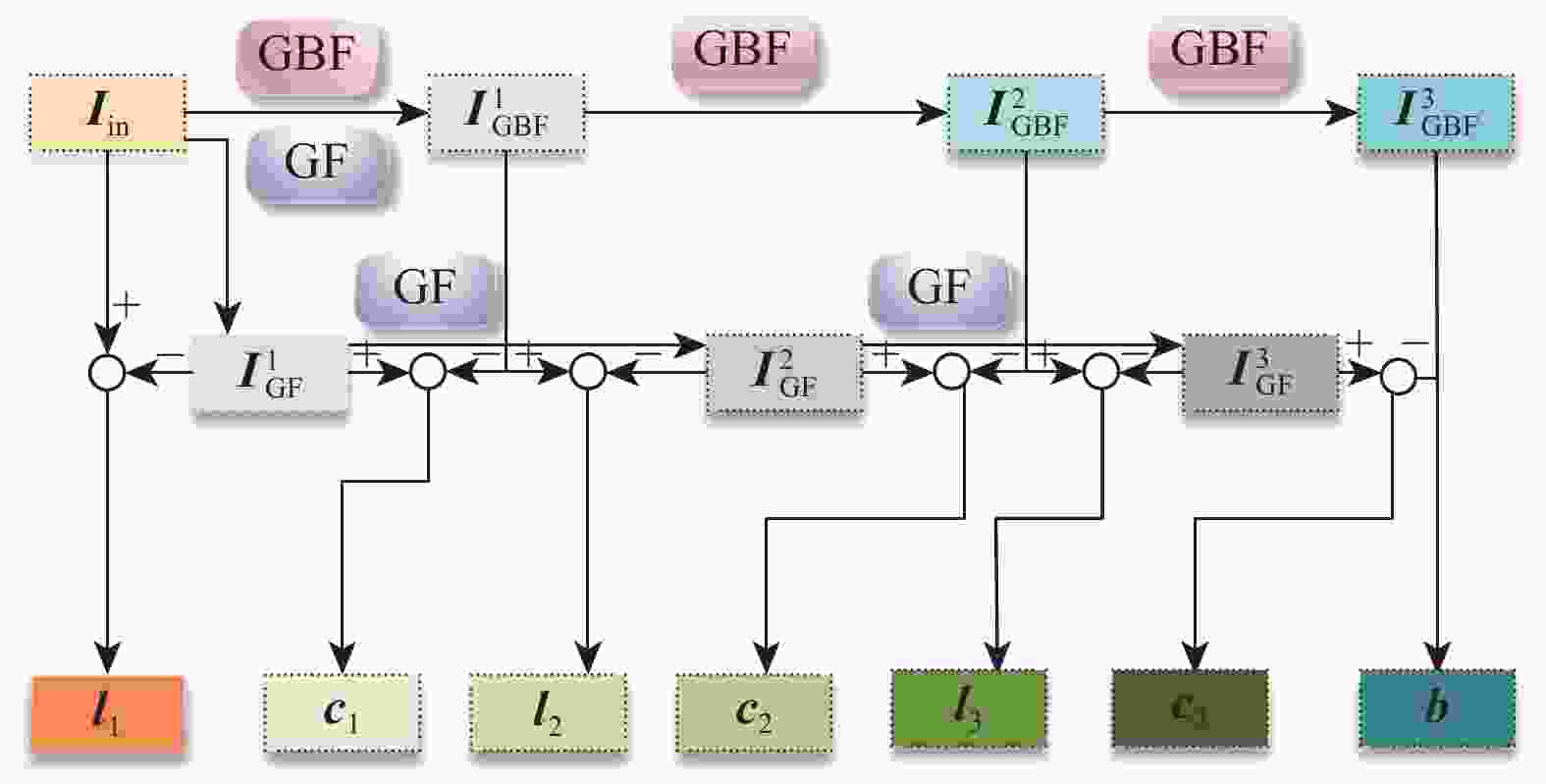

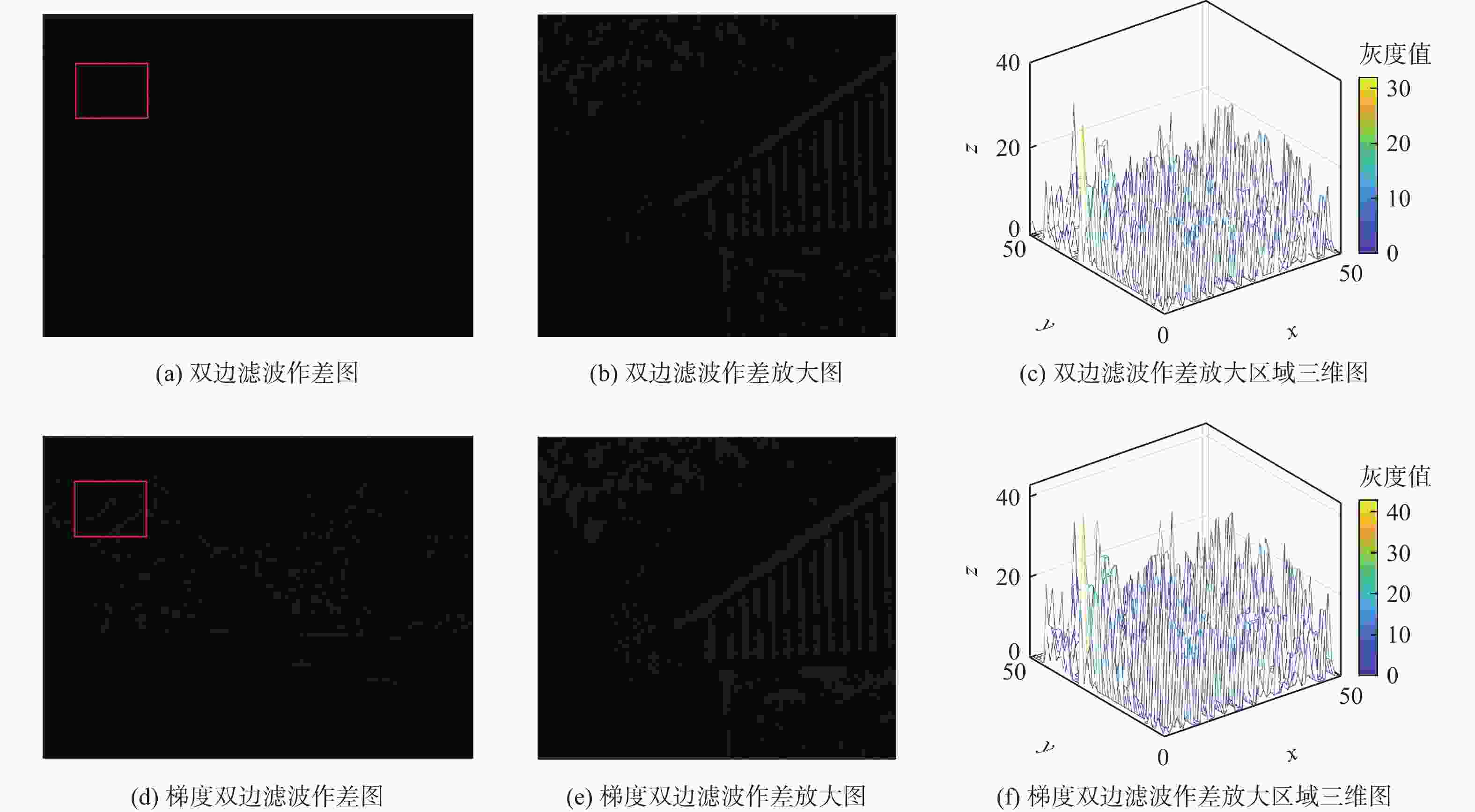

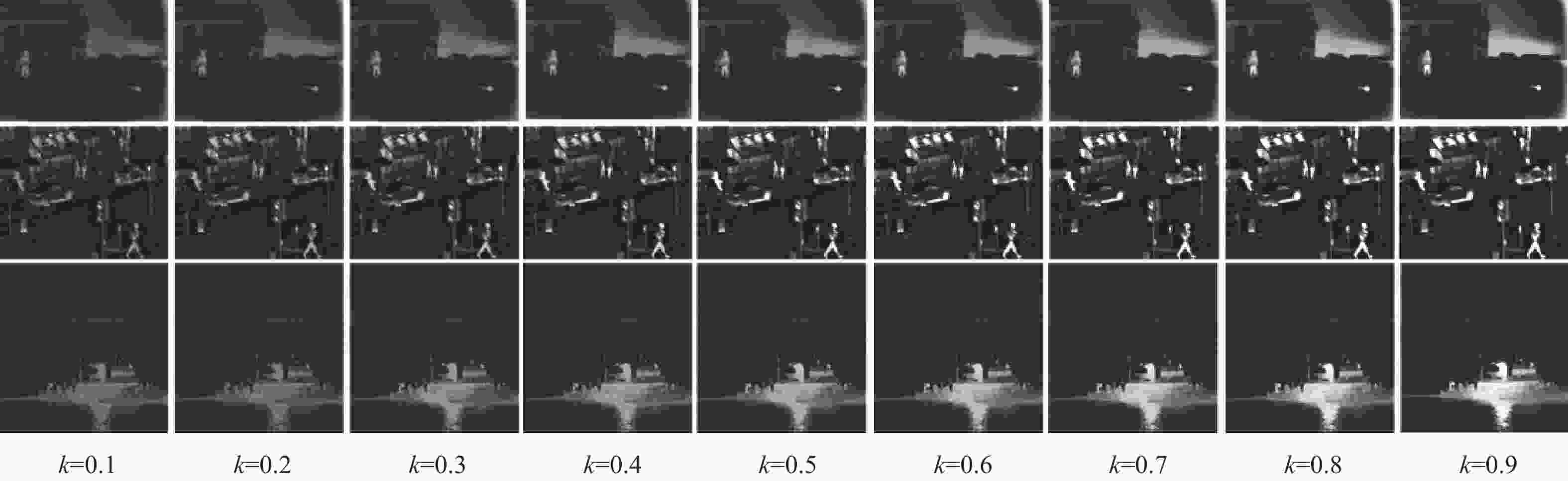

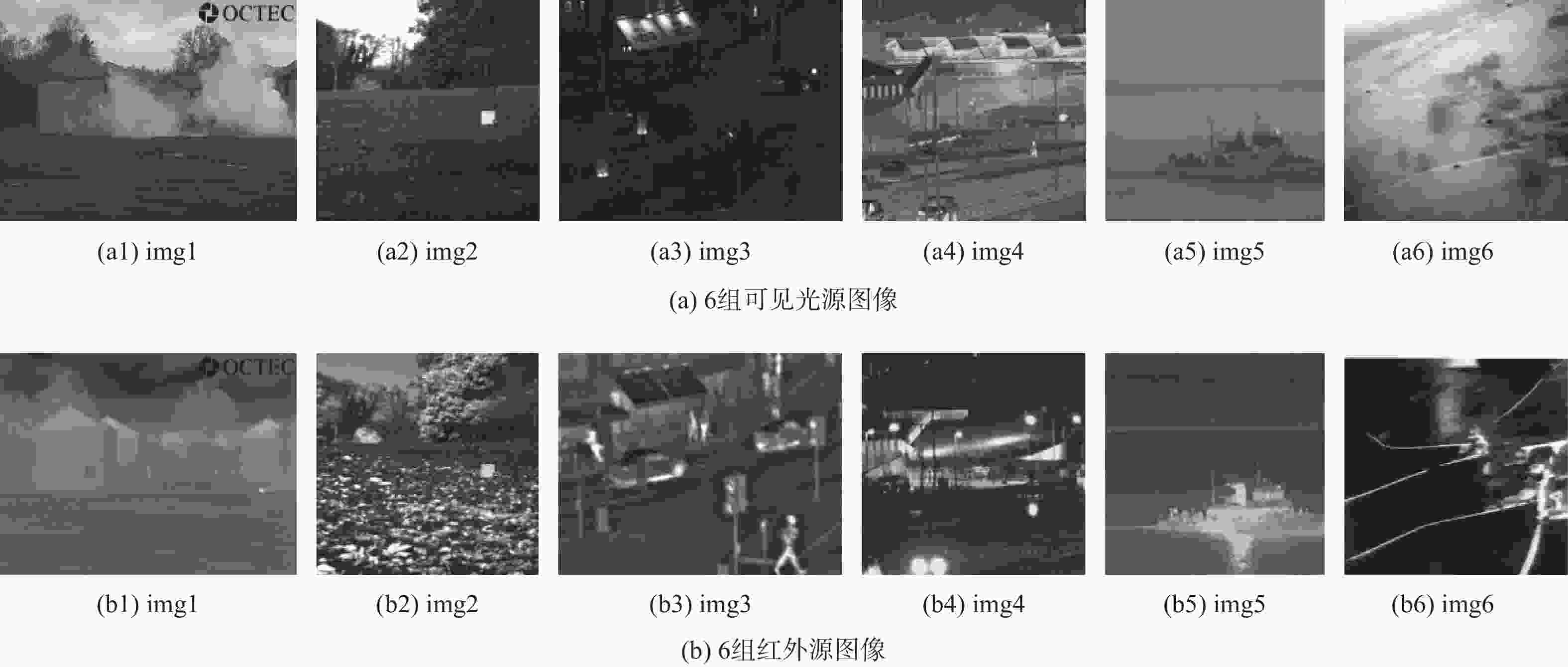

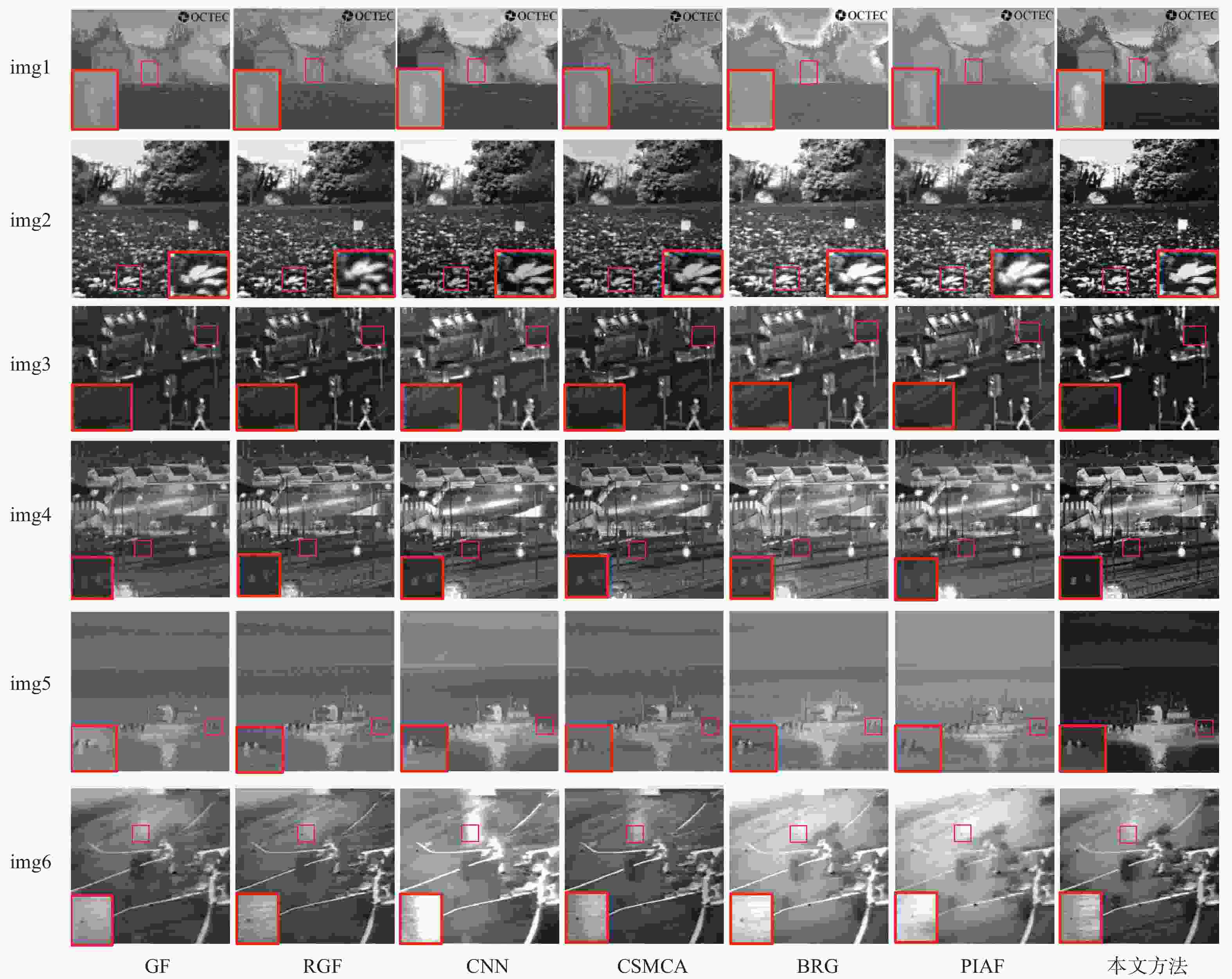

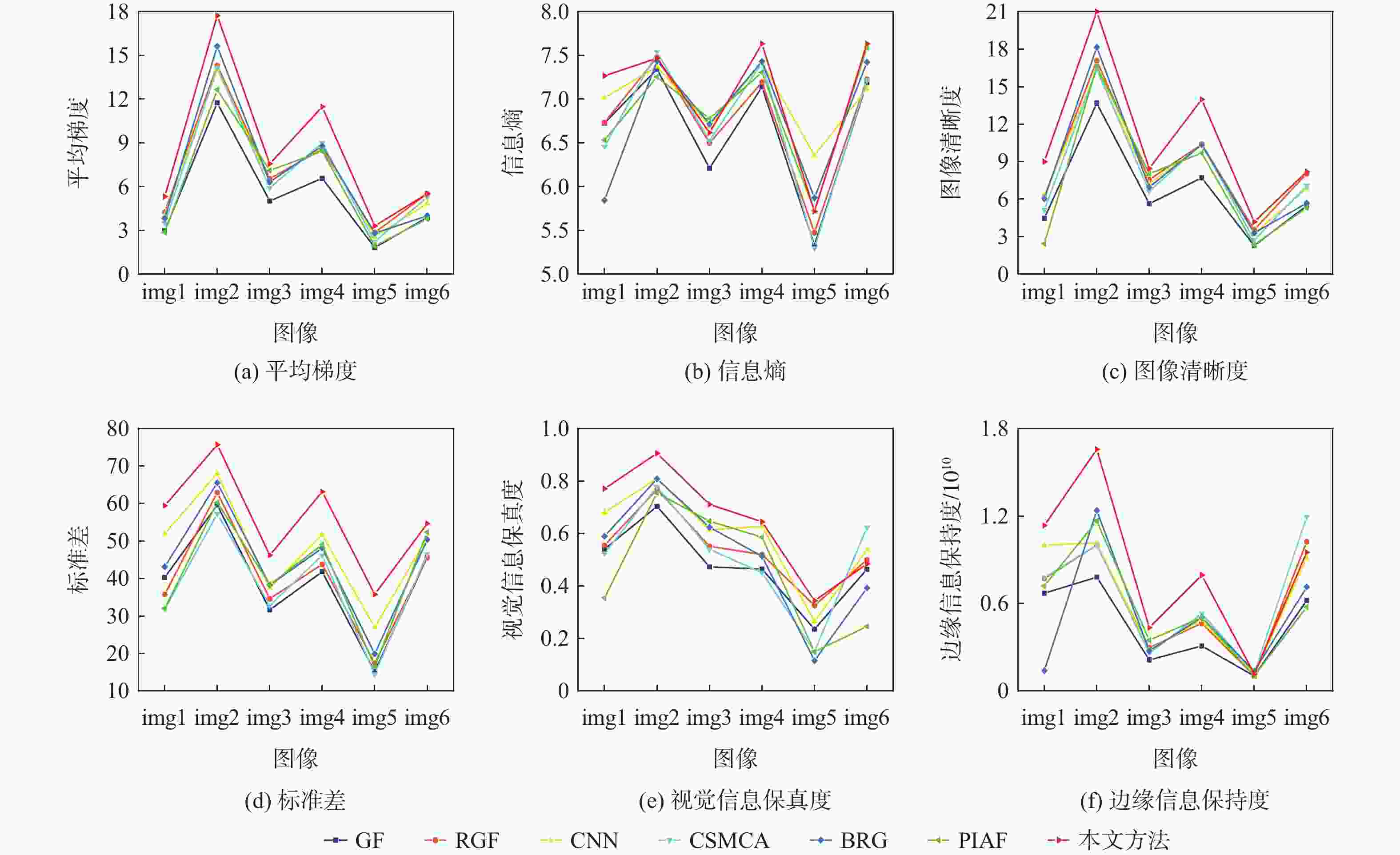

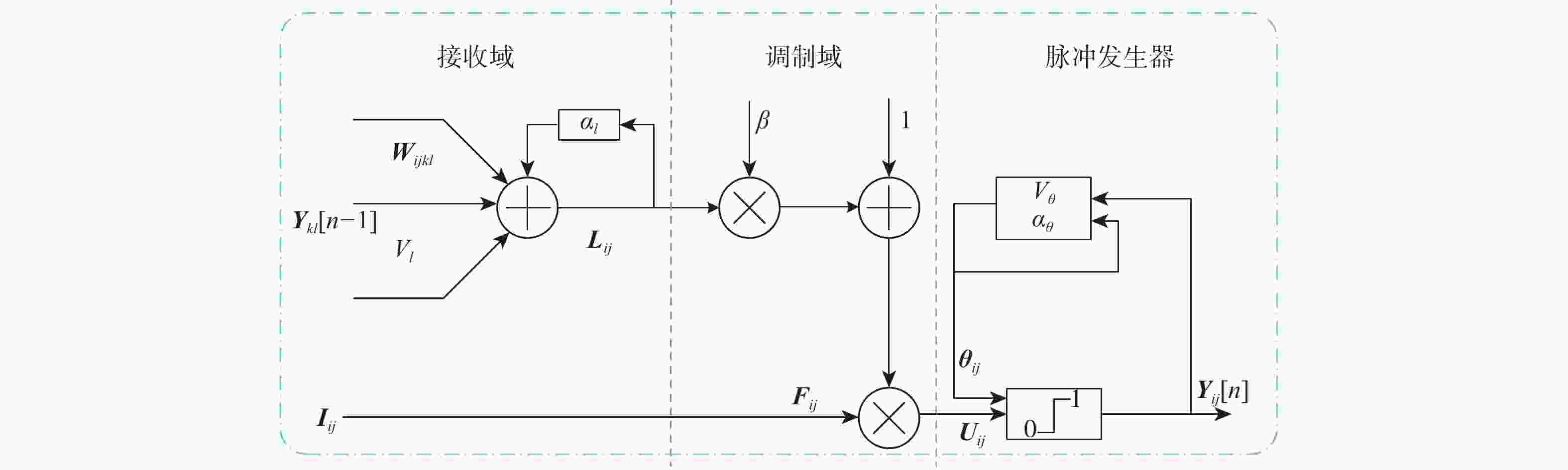

针对传统图像融合方法因分解工具的局限性使融合图像边缘出现伪影与亮度、对比度下降的问题,提出一种基于梯度保边多层级分解(GEMD)与改进脉冲耦合神经网络(PCNN)的红外与可见光图像融合方法。利用梯度双边滤波器(GBF)与梯度滤波器(GF)构造一种多层级分解模型,将源图像分解为3层特征图与1个基础层,且每层特征图有细、粗2个结构;根据各特征图所包含信息特点,分别采用在输入刺激中引入改进拉普拉斯算子来增强对图像中弱细节信息捕捉的PCNN、区域能量和对比度显著的融合规则进行子图融合,得到各子特征融合图像与基础层融合图像;将各子融合图像进行叠加,获得最终融合图像。实验结果表明:所提方法在视觉效果方面与定量评价方面均有所提高,提高了红外与可见光融合图像的亮度与对比度信息。

Abstract:Because of the limitations of decomposition tools in traditional image fusion methods, artifacts, decreased brightness, and contrast appear on fused image edges. A gradient edge-preserving multi-level decomposition(GEMD)-based approach for infrared and visible image fusion, as well as an enhanced pulse-coupled neural network(PCNN), are described. The gradient bilateral filter(GBF) is proposed based on the bilateral filter and the gradient filter(GF), which can preserve the edge structure, brightness, and contrast information while smoothing the detail information. Firstly, the source images are divided into three layers of feature maps and a base layer, and then a multi-level decomposition model is built using a gradient bilateral filter and gradient filter. Each layer of feature maps has two different structures, thin and thick. Then, according to the characteristics of the information contained in each feature map, the PCNN, which introduces an improved Laplacian operator in the input stimulus to enhance weak details captured in the image, regional energy, and contrast saliency are respectively adopted to obtain the fusion images of each sub-feature and the fusion image of the base layer as the fusion rules. Finally, the sub-fusion images are superimposed to obtain the final fusion image. Through experimental verification, the proposed method has improved both visual effect and quantitative evaluation and improved the brightness and contrast information of infrared and visible fusion images.
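The decompose, fuse, and sum pipeline described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: a box blur stands in for the GBF and GF, each level yields a single feature map rather than a fine and a coarse structure, a choose-max regional-energy rule is applied to every feature map, and the base layers are simply averaged in place of the PCNN and contrast-saliency rules. All function names (`box_blur`, `decompose`, `fuse_by_regional_energy`, `fuse`) are hypothetical.

```python
def box_blur(img, radius=1):
    """Mean filter with edge replication (stand-in smoother, NOT the GBF/GF)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def combine(a, b, op):
    """Apply a binary operation pixel by pixel to two equally sized images."""
    return [[op(p, q) for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def decompose(img, levels=3):
    """Split img into `levels` feature maps plus a base layer.

    Each feature map is the detail removed by one smoothing pass, so
    img == sum(feature maps) + base, up to floating-point rounding.
    """
    features, current = [], img
    for level in range(levels):
        smoothed = box_blur(current, radius=level + 1)
        features.append(combine(current, smoothed, lambda p, q: p - q))
        current = smoothed
    return features, current


def fuse_by_regional_energy(a, b, radius=1):
    """Per pixel, keep the source whose local mean of squares is larger.

    This is a common choose-max form of a regional-energy rule and is an
    assumption here; the paper's exact rule may differ.
    """
    energy_a = box_blur(combine(a, a, lambda p, q: p * q), radius)
    energy_b = box_blur(combine(b, b, lambda p, q: p * q), radius)
    return [
        [pa if ea >= eb else pb for pa, pb, ea, eb in zip(ra, rb, rea, reb)]
        for ra, rb, rea, reb in zip(a, b, energy_a, energy_b)
    ]


def fuse(infrared, visible, levels=3):
    """Decompose both sources, fuse layer by layer, then sum the results."""
    feats_ir, base_ir = decompose(infrared, levels)
    feats_vis, base_vis = decompose(visible, levels)
    # Averaging the base layers is a placeholder for the paper's base rule.
    fused = combine(base_ir, base_vis, lambda p, q: (p + q) / 2.0)
    for f_ir, f_vis in zip(feats_ir, feats_vis):
        layer = fuse_by_regional_energy(f_ir, f_vis)
        fused = combine(fused, layer, lambda p, q: p + q)
    return fused
```

Because every feature map is a difference between successive smoothing results, summing the fused layers reconstructs an image on the original intensity scale; fusing an image with itself returns that image, which is a convenient sanity check. For real images an array library would be the natural choice; nested lists are used here only to keep the sketch dependency-free.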


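Table 1. Evaluation index values of the experimental images

| Image | Method | AG | IE | ID | SD | VIFF | EIR/10^9 |
|---|---|---|---|---|---|---|---|
| img1 | GF | 2.9693 | 6.7261 | 4.4568 | 40.3069 | 0.5405 | 6.7065 |
| img1 | RGF | 4.2436 | 6.7333 | 6.2152 | 35.7304 | 0.5547 | 7.7050 |
| img1 | CNN | 4.0077 | 7.0141 | 6.2199 | 52.0127 | 0.6810 | 10.0100 |
| img1 | CSMCA | 3.4873 | 6.4559 | 5.1348 | 31.7815 | 0.5246 | 7.7815 |
| img1 | BRG | 3.8183 | 5.8444 | 6.0292 | 43.1289 | 0.5897 | 1.3966 |
| img1 | PIAF | 2.8606 | 6.5322 | 2.4365 | 31.9968 | 0.3546 | 7.2065 |
| img1 | Proposed | **5.3117** | **7.2662** | **8.9986** | **59.4290** | **0.7711** | **11.3470** |
| img2 | GF | 11.7449 | 7.3420 | 13.6928 | 59.6281 | 0.7038 | 7.8094 |
| img2 | RGF | 14.3084 | 7.4782 | 17.0913 | 62.9388 | 0.7687 | 10.0070 |
| img2 | CNN | 14.0207 | 7.3708 | 16.3577 | 68.0267 | 0.8093 | 10.1630 |
| img2 | CSMCA | 14.1432 | **7.5362** | 16.3964 | 57.2800 | 0.7777 | 10.0060 |
| img2 | BRG | 15.6231 | 7.4355 | 18.1672 | 65.5647 | 0.8082 | 12.3750 |
| img2 | PIAF | 12.6485 | 7.2546 | 16.6218 | 60.2984 | 0.7548 | 11.6570 |
| img2 | Proposed | **17.7200** | 7.4675 | **21.0224** | **75.6961** | **0.9057** | **16.5710** |
| img3 | GF | 5.0065 | 6.2106 | 5.6306 | 31.6452 | 0.4731 | 2.1302 |
| img3 | RGF | 6.5371 | 6.4985 | 7.5590 | 34.5977 | 0.5516 | 2.9668 |
| img3 | CNN | 6.3240 | 6.6925 | 7.2309 | 37.3868 | 0.6143 | 2.8205 |
| img3 | CSMCA | 5.9061 | 6.5271 | 6.6218 | 32.8467 | 0.5399 | 2.5836 |
| img3 | BRG | 6.3112 | 6.7150 | 6.9639 | 38.2892 | 0.6241 | 2.7484 |
| img3 | PIAF | 7.1007 | **6.7781** | 8.0320 | 38.3769 | 0.6471 | 3.4837 |
| img3 | Proposed | **7.5605** | 6.6161 | **8.4529** | **46.2119** | **0.7112** | **4.3446** |
| img4 | GF | 6.5674 | 7.1388 | 7.7132 | 41.8089 | 0.4647 | 3.0893 |
| img4 | RGF | 8.5620 | 7.1932 | 10.4039 | 43.8182 | 0.5198 | 4.6488 |
| img4 | CNN | 8.7445 | 7.4203 | 10.2977 | 51.7043 | 0.6275 | 4.8712 |
| img4 | CSMCA | 8.9949 | 7.4118 | 10.4165 | 46.1752 | 0.4512 | 5.3171 |
| img4 | BRG | 8.7851 | 7.4321 | 10.3676 | 48.1222 | 0.5135 | 5.0349 |
| img4 | PIAF | 8.4339 | 7.3056 | 9.7002 | 49.0388 | 0.5860 | 5.0457 |
| img4 | Proposed | **11.4676** | **7.6322** | **13.9933** | **63.1705** | **0.6450** | **7.9446** |
| img5 | GF | 1.8168 | 5.3317 | 2.2802 | 15.1546 | 0.2358 | 1.0312 |
| img5 | RGF | 2.8488 | 5.4740 | 3.5476 | 17.4340 | 0.3261 | 1.0513 |
| img5 | CNN | 2.5592 | **6.3555** | 3.2363 | 26.9346 | 0.2652 | 1.1193 |
| img5 | CSMCA | 2.2246 | 5.2983 | 2.7360 | 14.5069 | 0.1507 | 1.2474 |
| img5 | BRG | 2.8004 | 5.8712 | 3.3013 | 19.9049 | 0.1157 | **1.3562** |
| img5 | PIAF | 1.9253 | 5.7237 | 2.3440 | 16.5379 | 0.1497 | 1.0371 |
| img5 | Proposed | **3.3037** | 5.7163 | **4.1779** | **35.7829** | **0.3438** | 1.1655 |
| img6 | GF | 3.8828 | 7.1879 | 5.4636 | 45.7428 | 0.4640 | 6.2097 |
| img6 | RGF | 5.5071 | 7.2264 | 8.0458 | 45.5467 | 0.5000 | 10.2460 |
| img6 | CNN | 4.8318 | 7.1152 | 6.8625 | 51.0002 | 0.5378 | 9.1301 |
| img6 | CSMCA | 5.2578 | 7.2167 | 7.0550 | 46.5250 | **0.6233** | **11.9430** |
| img6 | BRG | 3.9936 | 7.4221 | 5.6747 | 50.3374 | 0.3925 | 7.1322 |
| img6 | PIAF | 3.7770 | 7.5917 | 5.3198 | 52.3620 | 0.2461 | 5.7678 |
| img6 | Proposed | **5.5097** | **7.6339** | **8.2027** | **54.6256** | 0.4846 | 9.5102 |

Note: bold values indicate the best result for each image and metric.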
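Three of the metrics in Table 1 can be sketched in a few lines, assuming AG denotes average gradient, IE denotes information entropy, and SD denotes standard deviation, each in its commonly used form. The source does not give its formulas, so these definitions are assumptions, the function names are hypothetical, and the values they produce are not guaranteed to match the table.

```python
import math
from collections import Counter


def average_gradient(img):
    """AG: mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = img[y][x + 1] - img[y][x]
            dy = img[y + 1][x] - img[y][x]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((h - 1) * (w - 1))


def information_entropy(img):
    """IE: Shannon entropy, in bits, of the integer grey-level histogram."""
    pixels = [int(p) for row in img for p in row]
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def standard_deviation(img):
    """SD: population standard deviation of the pixel intensities."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))
```

Under these definitions a larger value means sharper edges (AG), a richer grey-level distribution (IE), or higher global contrast (SD), which is consistent with the table marking the largest value in each column as best.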

