-

摘要:

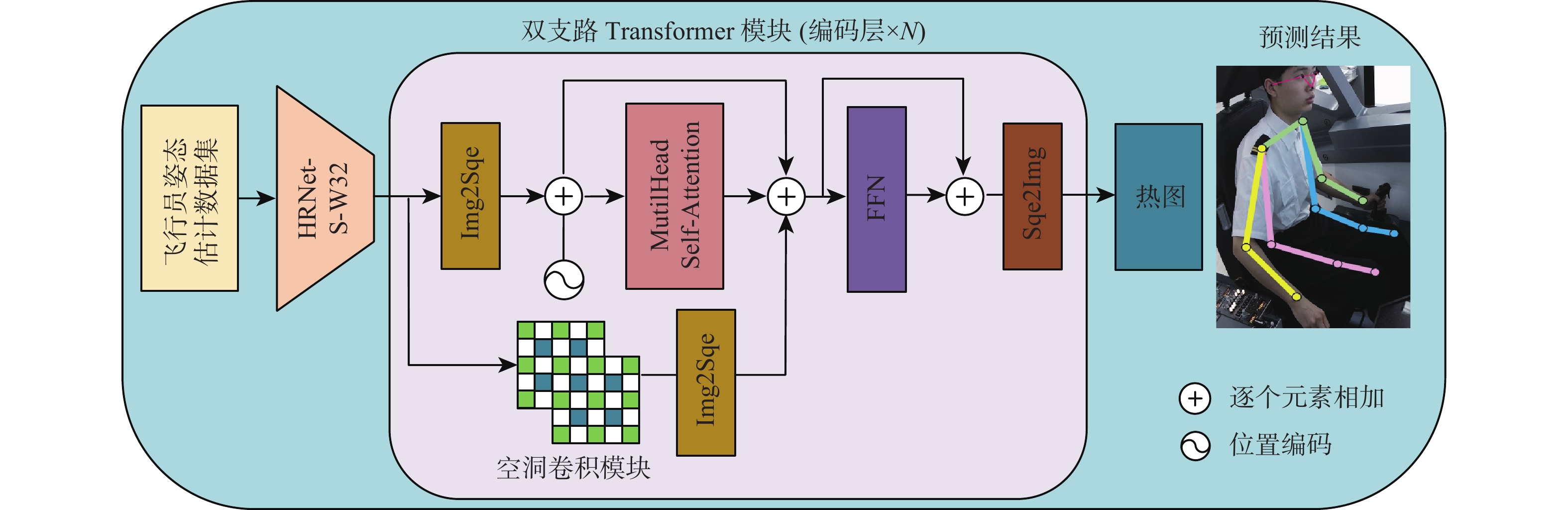

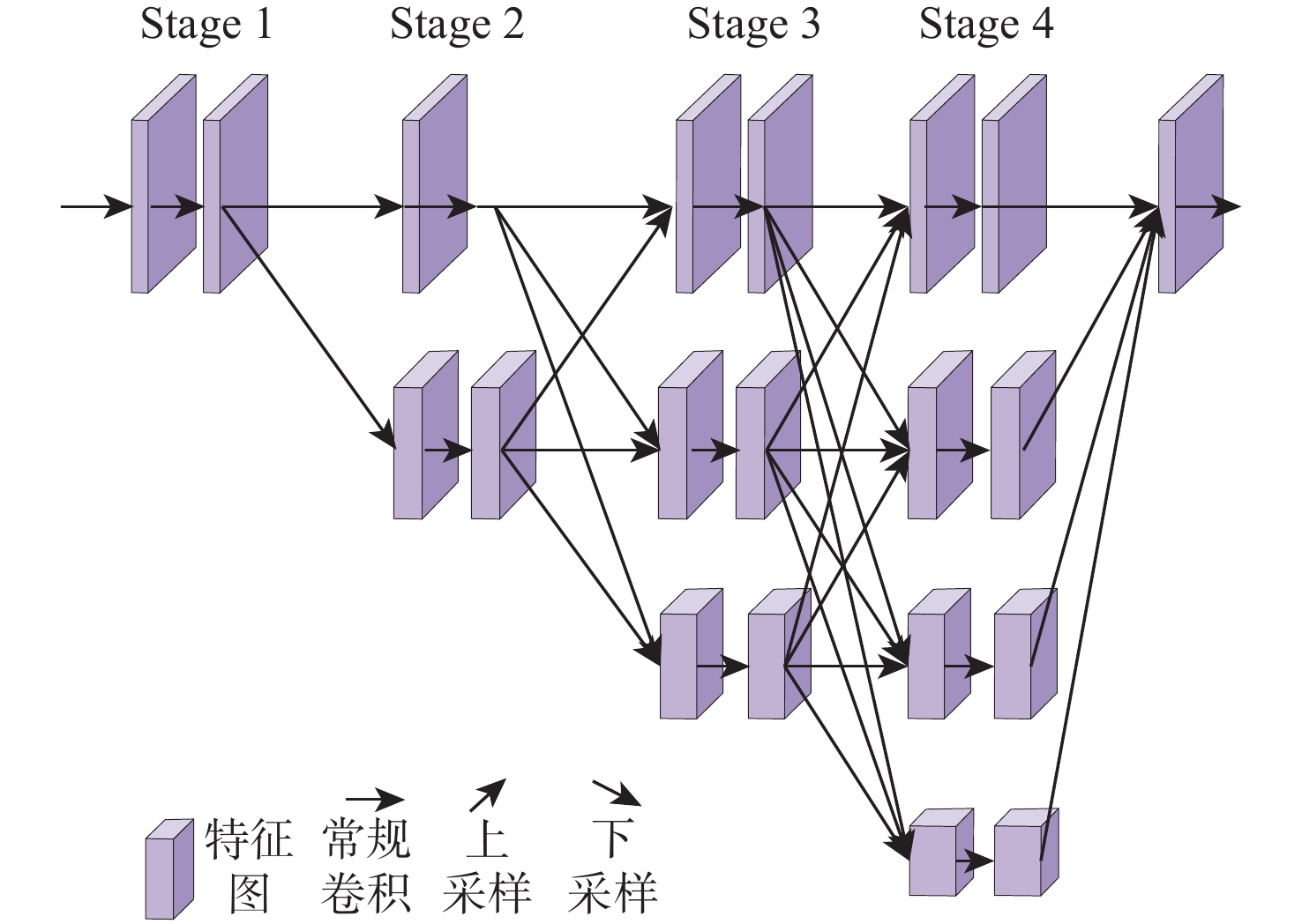

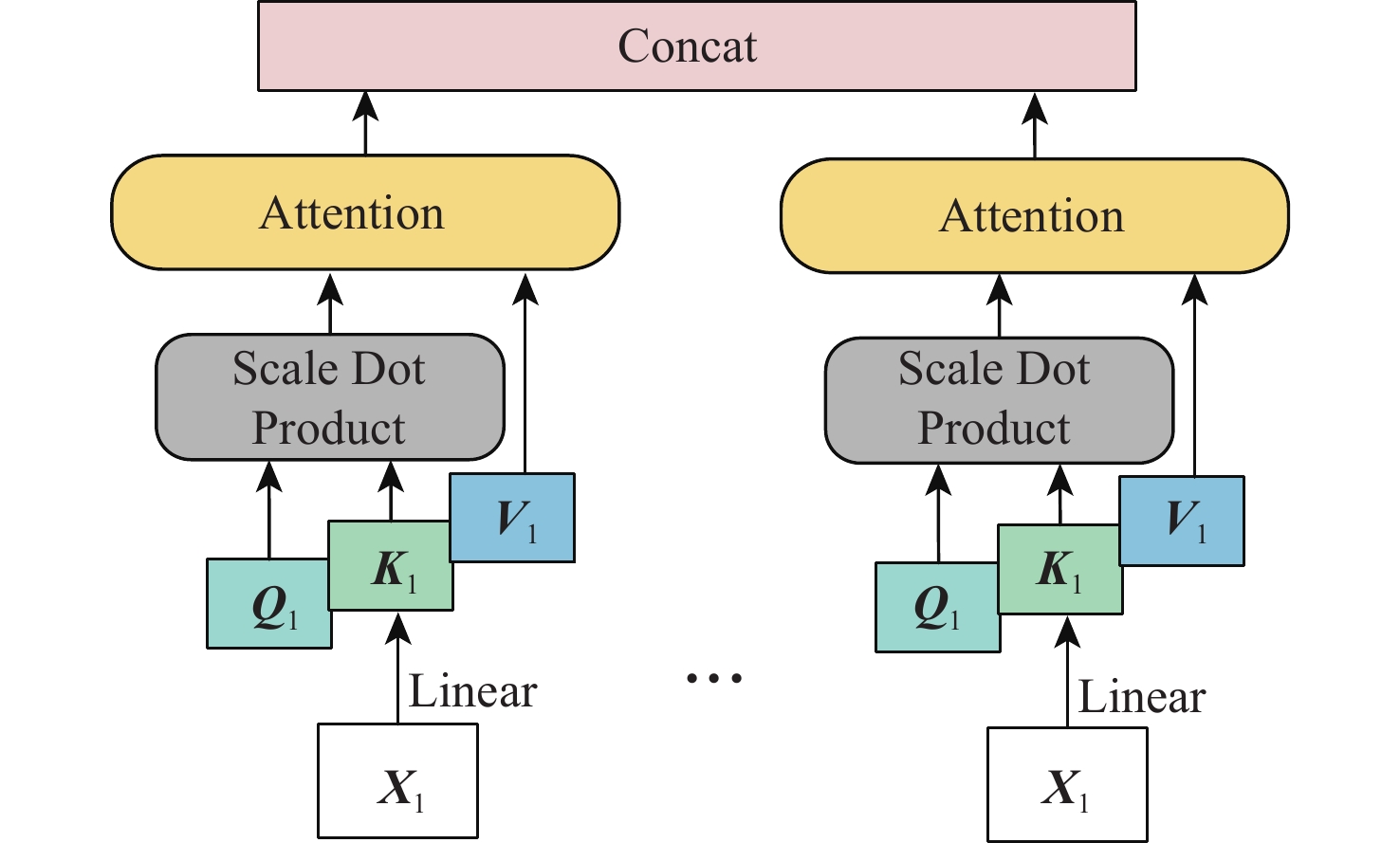

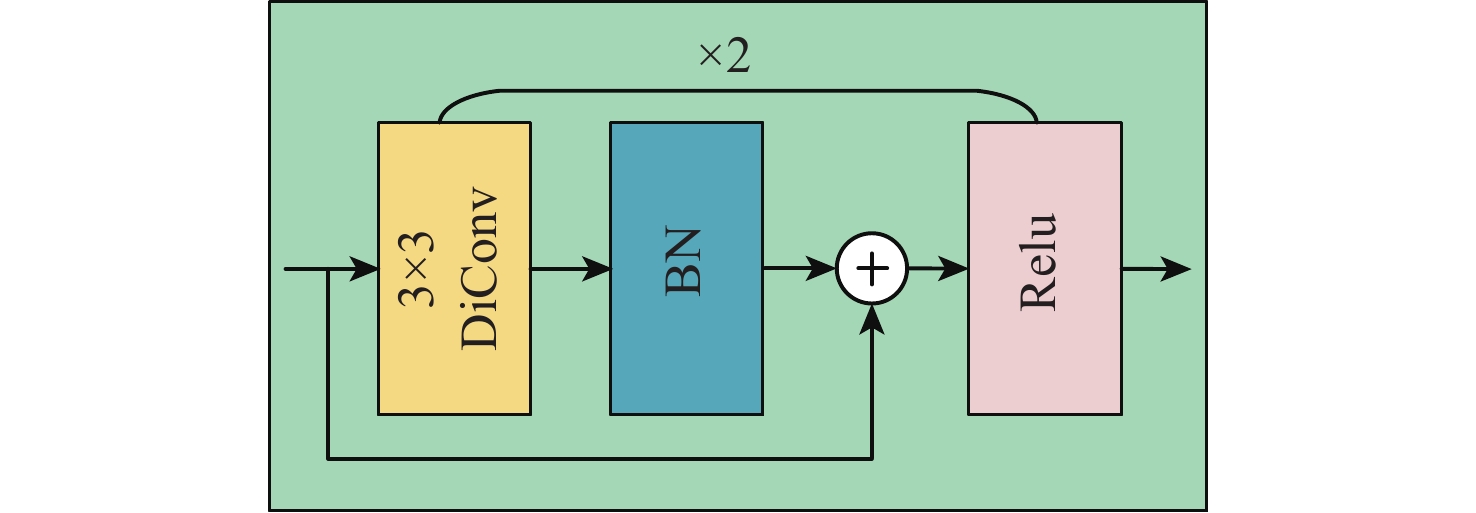

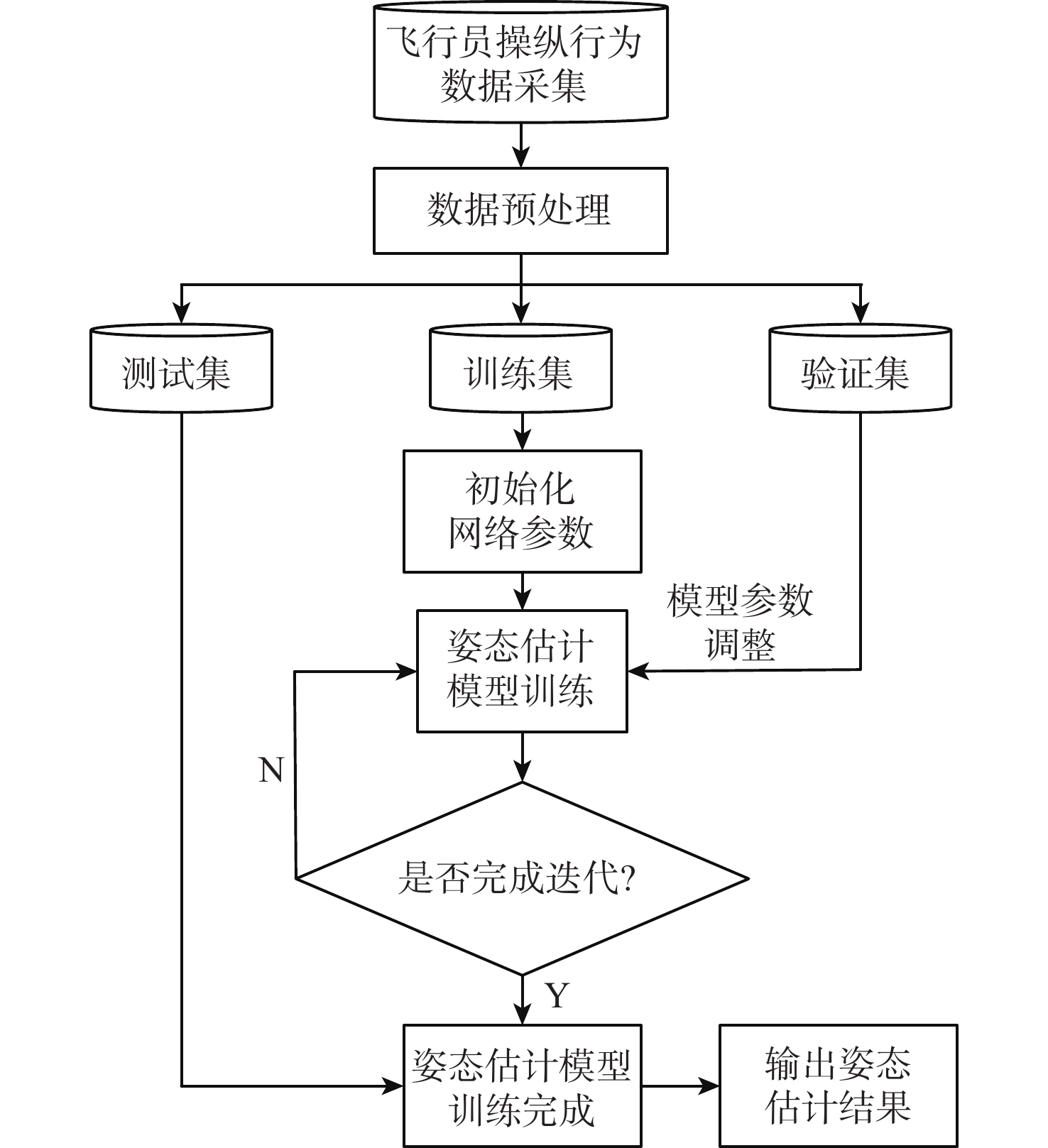

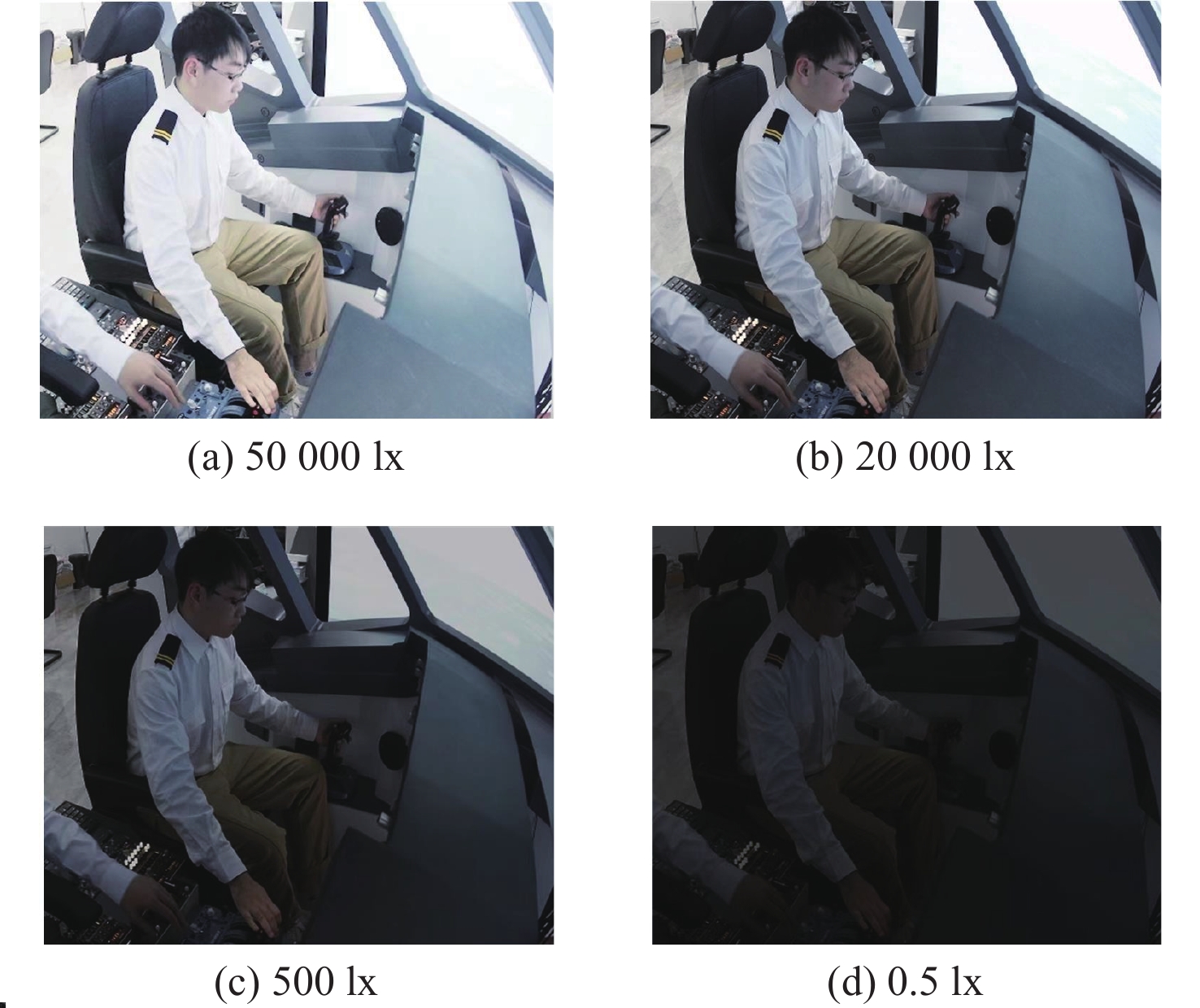

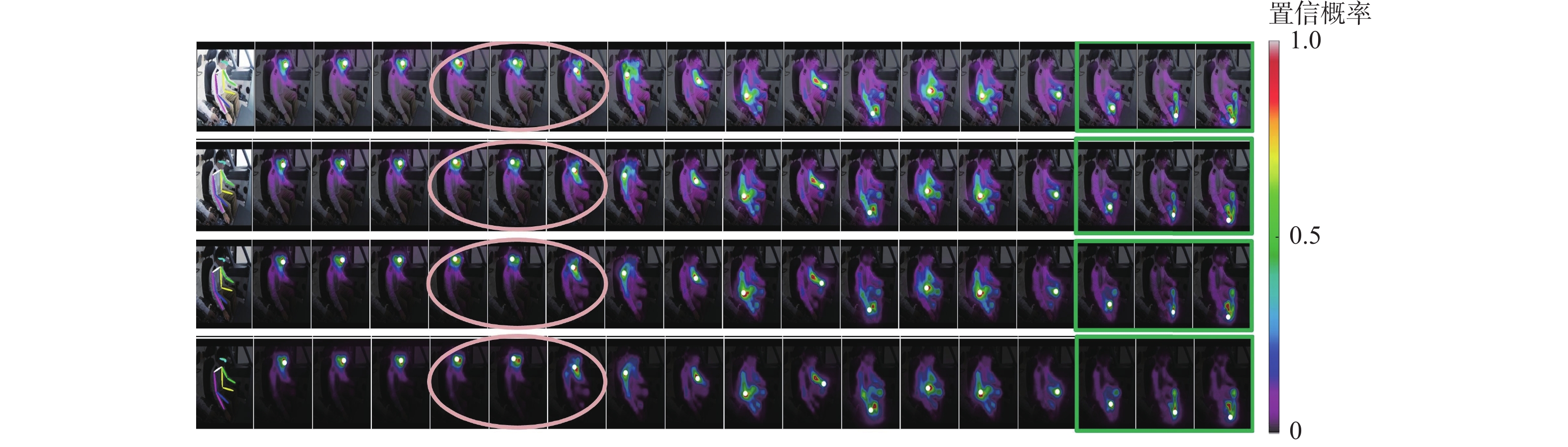

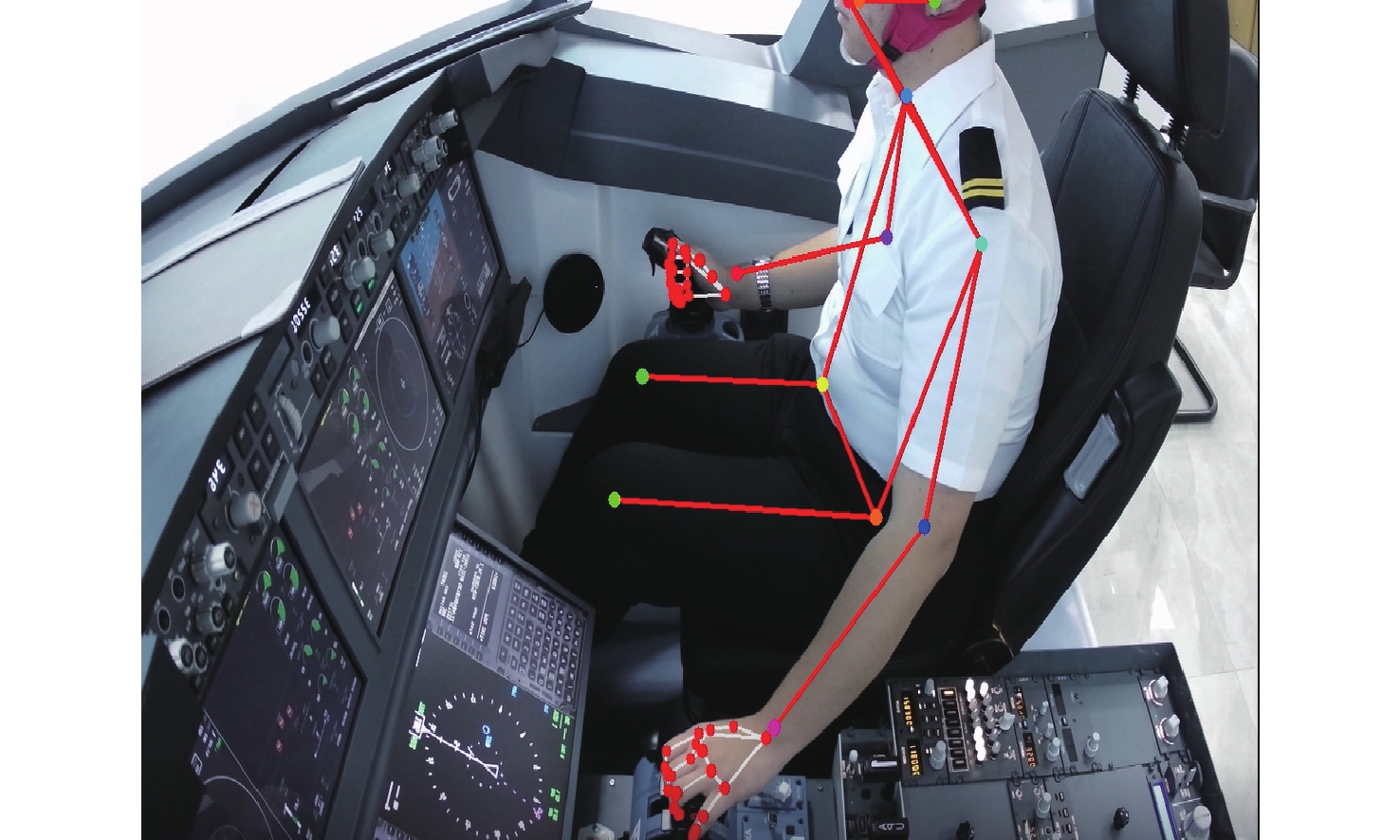

人体姿态估计是行为感知领域中的一个重要环节,也是民用飞机驾驶舱智能交互方式的一项关键技术。为建立民用飞机驾驶舱复杂光照环境与飞行员姿态估计模型性能的可解释联系,提出基于视觉Transformer飞行员姿态(ViTPPose)估计模型,该模型在卷积神经网络(CNN)主干网络末端使用包含多层编码层的双支路 Transformer 模块,编码层联合 Transformer 和空洞卷积,在增大感受野的同时捕捉后期高阶特征的全局相关性。基于飞行机组标准操作程序,建立飞行模拟场景下的飞行员操纵行为关键点检测数据集,ViTPPose估计模型在此数据集上完成飞行员坐姿估计,并通过与基准模型对比,验证了其有效性。在驾驶舱复杂光照的背景下,构建坐姿估计热图,分析ViTPPose估计模型对光照强度的偏好,测试其在不同光照等级下的性能,揭示其对不同光照强度的依赖关系。

Abstract:Human pose estimation is an important aspect in the field of behavioral perception and a key technology in the way of intelligent interaction in the cockpit of civil aircraft. To establish an explainable link between the complex lighting environment in the cockpit of civil aircraft and the performance of the pilot pose estimation model, the visual Transformer-based pilot pose (ViTPPose) estimation model is proposed. In order to capture the global correlation of subsequent higher-order features while expanding the perceptual field, this model employs a two-branch Transformer module with several coding layers at the end of the convolutional neural networks (CNN)backbone network. The coding layers combine the Transformer and the dilated convolution. Based on the flight crew’s standard operating procedures, a pilot maneuvering behavior keypoint detection dataset is established for flight simulation scenarios. ViTPPose estimation model completes the pilot seating estimation on this dataset and verifies its validity by comparing it with the benchmark model. The seating estimation heatmap is created in the context of the cockpit’s complicated lighting to examine the model’s preferred lighting intensity, evaluate the ViTPPose estimation model’s performance under various lighting conditions, and highlight the model’s reliance on various lighting intensities.

-

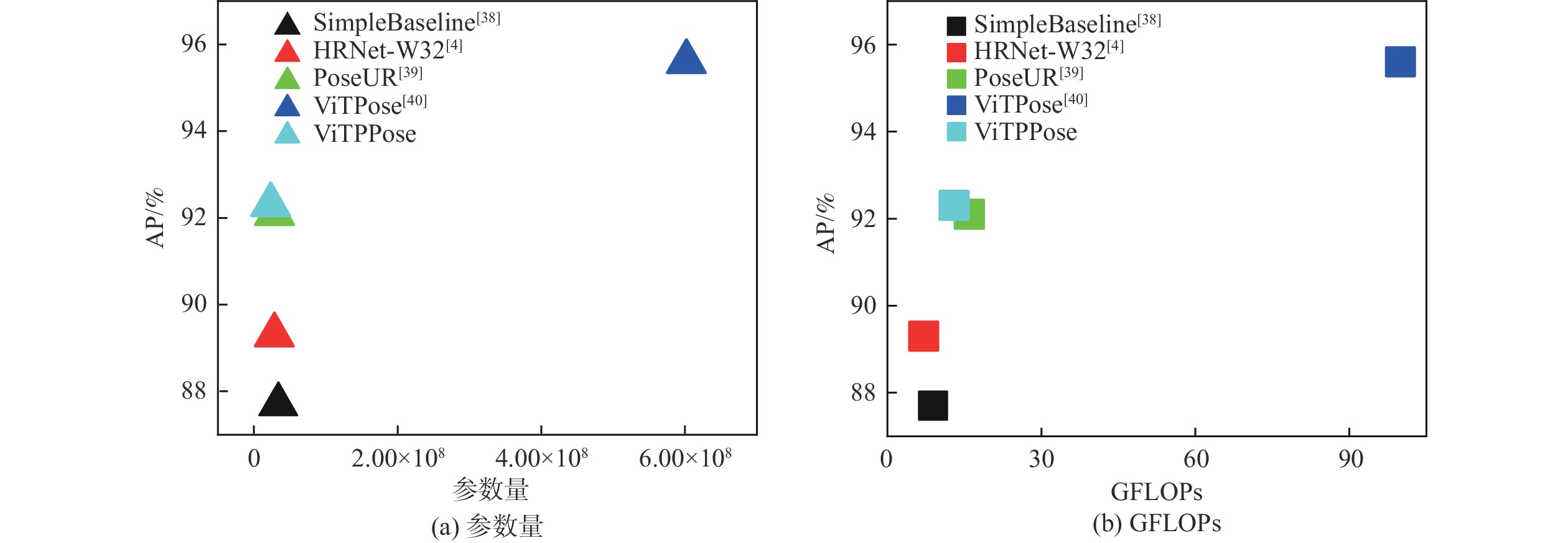

表 1 模型在飞行员关键点检测测试数据集上的性能比较

Table 1. Performance comparison of models on pilot keypoint detection test dataset

模型 主干网络 输入尺寸/像素 参数量 GFLOPs AP/% AP50/% AP75/% APM/% AR/% SimpleBaseline[38] ResNet-50 256×192 3.40×107 8.90 87.7 89.9 89.1 85.3 87.8 SimpleBaseline[38] ResNet-152 384×288 6.86×107 35.6 89.4 91.0 91.1 87.8 88.2 HRNet-W32[4] HRNet-W32 256×192 2.85×107 7.10 89.3 91.4 91.3 87.3 86.9 HRNet-W32[4] HRNet-W32 384×288 2.85×107 16.0 90.6 92.5 93.6 90.9 92.5 PoseUR[39] HRFormer-B 256×192 2.88×107 12.6 92.1 93.6 91.8 93.0 93.8 ViTPose[40] ViTAE-H 256×192 6.32×108 95.6 95.2 94.3 95.6 96..4 ViTPPose HRNet-S-W32 256×192 2.35×107 13.0 92.3 93.5 92.5 92.6 93.5 表 2 模型使用不同编码层数目在飞行员关键点检测测试数据集上的性能比较

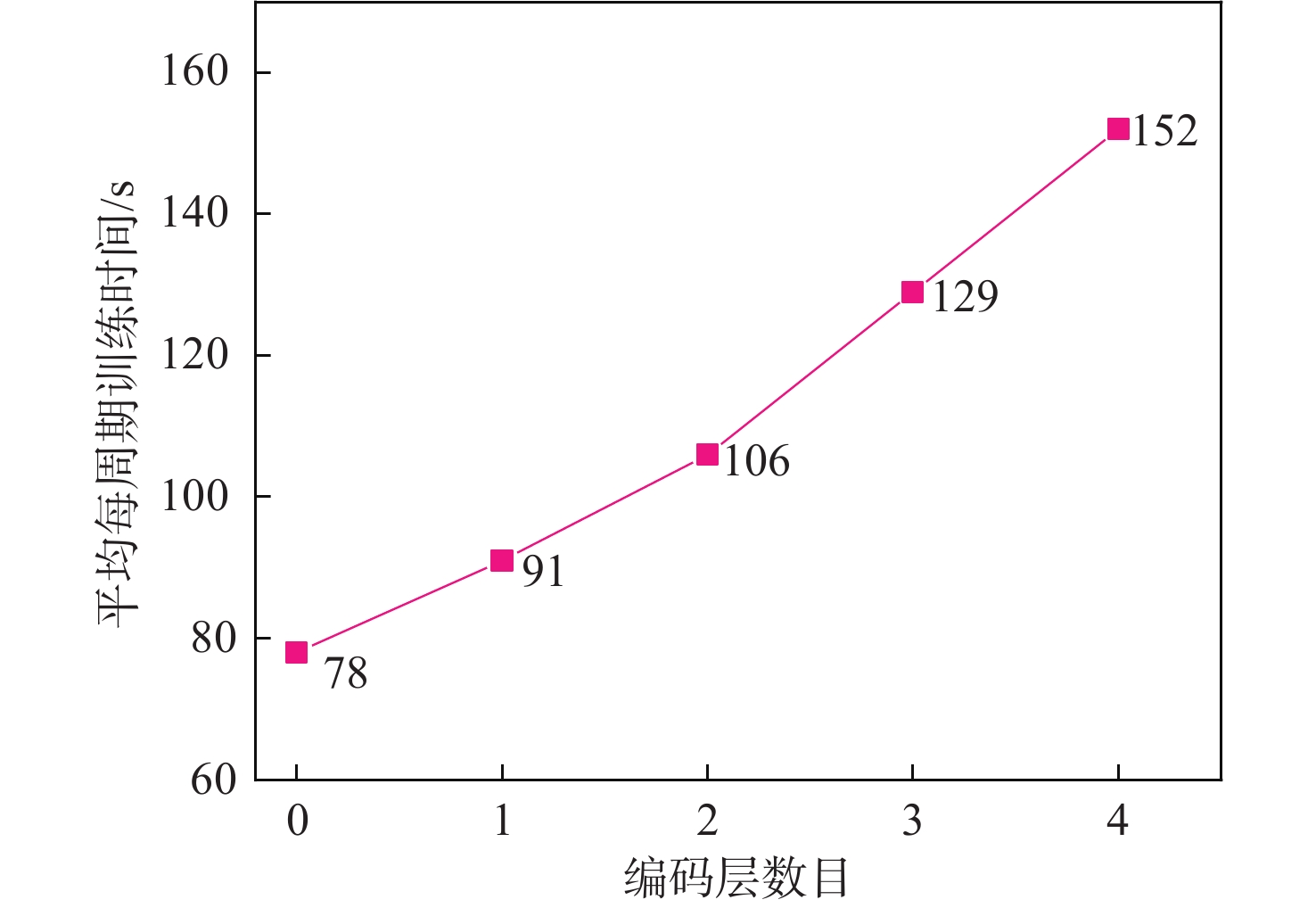

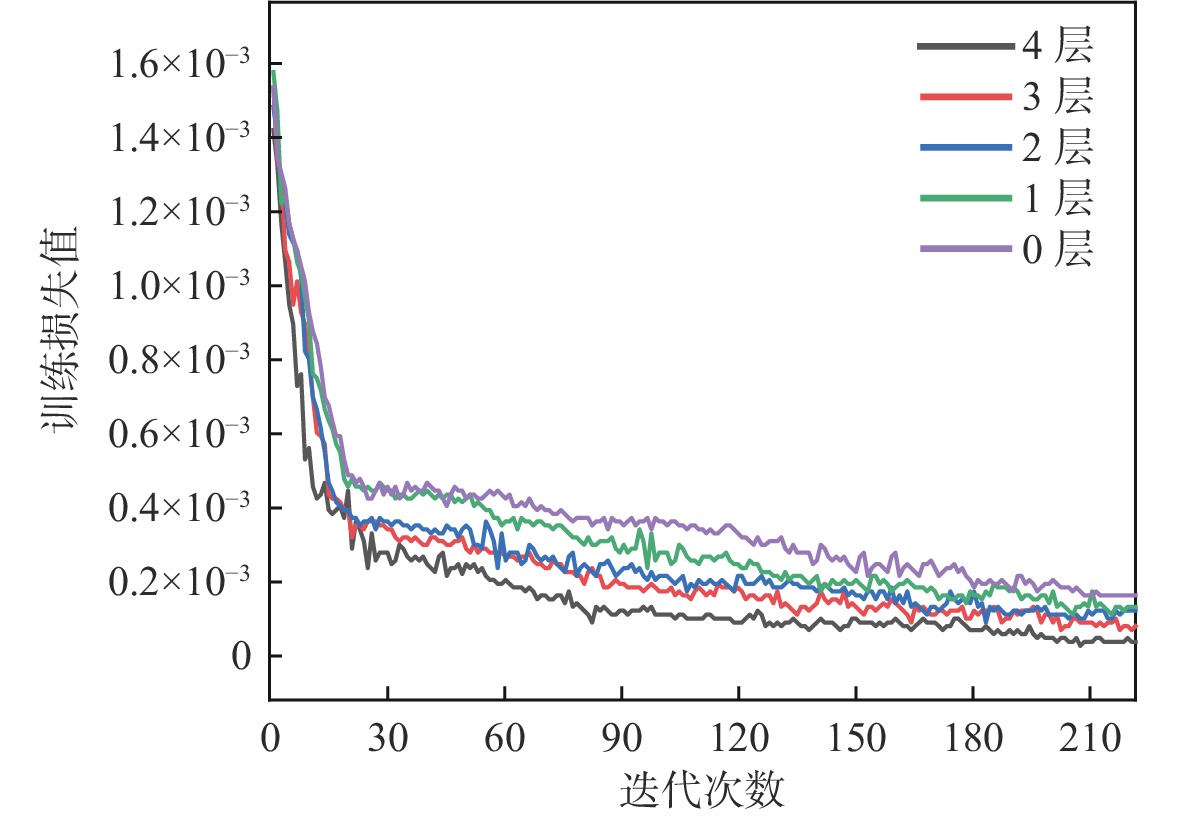

Table 2. Performance comparison of using different numbers of encoder layers on pilot keypoint detection test dataset

编码层数目 输入尺寸/像素 参数量 GFLOPs AP/% 0 256×192 2.15×107 6.2 90.3 1 256×192 2.20×107 7.9 90.7 2 256×192 2.24×107 9.6 91.3 3 256×192 2.29×107 11.3 92.0 4 256×192 2.35×107 13.0 92.3 表 3 模型使用空洞卷积模块在飞行员关键点测试数据集上的性能比较

Table 3. Performance comparison of models using dilated convolution module on pilot keypoint test dataset

模型 输入尺寸/像素 参数量 GFLOPs AP/% ViTPPose* 256×192 2.28×107 12.6 91.8 ViTPPose& 256×192 2.35×107 13.0 92.0 ViTPPose$ 256×192 2.36×107 13.0 91.5 ViTPPose 256×192 2.35×107 13.0 92.3 表 4 本文模型在4种级别光照强度等级下飞行员关键点测试数据集上的性能比较

Table 4. Performance of the proposed model is compared on pilot keypoint test dataset under four levels of light intensity levels

光照强度/lx 输入尺寸/像素 AP/% 50000 256×192 86.2 20000 256×192 93.8 500 256×192 90.7 0.5 256×192 85.1 -

[1] 中华人民共和国国务院. “十三五”国家战略性新兴产业发展规划67号[R]. 北京: 中华人民共和国国务院, 2016.The State Council of the People’s Republic of China. “13th Five-Year”national strategic emerging industry development plan No 67[R]. Bejing: State Council of the People’s Republic of China, 2016(in Chinese). [2] 中华人民共和国国务院. 中华人民共和国国民经济和社会发展第十四个五年规划和2035年远景目标纲要[R]. 北京: 中华人民共和国国务院, 2021.The State Council of the People’s Republic of China. Outline of the People’s Republic of China 14th five-year plan for national economic and social development and long-range objectives for 2035[R]. Bejing: State Council of the People’s Republic of China, 2021(in Chinese). [3] 杨志刚, 张炯, 李博, 等, 民用飞机智能飞行技术综述[J]. 航空学报, 2021, 42 (4): 525198.YANG Z G, ZHANG J, LI B, et al. Reviews on intelligent flight technology of civil aircraft[J]. Acta Aeronautica et Astronautica Sinica, 2021, 42(4): 525198(in Chinese). [4] TOSHEV A, SZEGEDY C. DeepPose: Human pose estimation via deep neural networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2014: 1653-1660. [5] CHEN Y P, DAI X Y, LIU M C, et al. Dynamic ReLU[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2020: 351-367. [6] HE K M, ZHANG X Y, REN S Q, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 770-778. [7] HE K M, GKIOXARI G, DOLLÁR P, et al. Mask R-CNN[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(2): 386-397. doi: 10.1109/TPAMI.2018.2844175 [8] SELVARAJU R R, COGSWELL M, DAS A, et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 618-626. [9] ZHANG Q L, YANG Y B. Group-CAM: Group score-weighted visual explanations for deep convolutional networks[EB/OL]. (2021-03-25)[2022-08-19]. [10] LIU Z, MAO H Z, WU C Y, et al. A ConvNet for the 2020s[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 11966-11976. [11] SUN K, XIAO B, LIU D, et al. Deep high-resolution representation learning for human pose estimation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 5686-5696. [12] CHENG B W, XIAO B, WANG J D, et al. HigherHRNet: Scale-aware representation learning for bottom-up human pose estimation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 5385-5394. [13] ANDRILUKA M, PISHCHULIN L, GEHLER P, et al. 2D human pose estimation: New benchmark and state of the art analysis[C]// Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2014: 3686-3693. [14] JOHNSON S, EVERINGHAM M. Clustered pose and nonlinear appearance models for human pose estimation[C]//Proceedings of the British Machine Vision Conference 2010. London: British Machine Vision Association, 2010: 1-11. [15] LIN T Y, MAIRE M, BELONGIE S, et al. Microsoft COCO: Common objects in context[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2014: 740-755. [16] DOSOVITSKIY A, BEYER L, KOLESNIKOV A, et al. An image is worth 16x16 words: Transformers for image recognition at scale[EB/OL]. (2020-10-22)[2022-08-20]. [17] DENG J, DONG W, SOCHER R, et al. ImageNet: A large-scale hierarchical image database[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2009: 248-255. [18] TOUVRON H, CORD M, DOUZE M, et al. Training data-efficient image transformers & distillation through attention[EB/OL]. (2020-12-23)[2022-08-20]. [19] TOUVRON H, CORD M, SABLAYROLLES A, et al. Going deeper with Image Transformers[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2021: 32-42. [20] VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need[C]//Proceedings of the 31st International Conference on Neural Information Processing Systems. New York: ACM, 2017: 6000-6010. [21] XIAO T T, SINGH M, MINTUN E, et al. Early convolutions help transformers see better[EB/OL]. (2021-06-28)[2022-08-21]. [22] NEWELL A, YANG K Y, DENG J. Stacked hourglass networks for human pose estimation[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2016: 483-499. [23] CHEN Y L, WANG Z C, PENG Y X, et al. Cascaded pyramid network for multi-person pose estimation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7103-7112. [24] NEWELL A, HUANG Z A, DENG J. Associative embedding: end-to-end learning for joint detection and grouping[C]//Proceedings of the 31st International Conference on Neural Information Processing Systems. New York: ACM, 2017: 2274–2284. [25] CAO Z, SIMON T, WEI S H, et al. Realtime multi-person 2D pose estimation using part affinity fields[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 1302-1310. [26] WEI S H, RAMAKRISHNA V, KANADE T, et al. Convolutional pose machines[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 4724-4732. [27] LIU Z, LIN Y T, CAO Y, et al. Swin Transformer: Hierarchical vision transformer using shifted windows[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2021: 9992-10002. [28] 王静, 李沛橦, 赵容锋, 等. 融合卷积注意力和Transformer架构的行人重识别方法[J]. 北京航空航天大学学报, 2024, 50(2): 466-476.WANG J, LI P T, ZHAO R F, et al. A person re-identification method for fusing convolutional attention and Transformer architecture[J]. Journal of Beijing University of Aeronautics and Astronautics, 2024, 50(2): 466-476 (in Chinese). [29] YUAN Y H, FU R, HUANG L, et al. HRFormer: High-resolution transformer for dense prediction[EB/OL]. (2021-10-18)[2022-08-21]. [30] XIONG Z N, WANG C X, LI Y, et al. Swin-Pose: Swin transformer based human pose estimation[C]//Proceedings of the IEEE 5th International Conference on Multimedia Information Processing and Retrieval. Piscataway: IEEE Press, 2022: 228-233. [31] YANG S, QUAN Z B, NIE M, et al. TransPose: Keypoint localization via transformer[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2021: 11782-11792. [32] SAMEK W, MONTAVON G, LAPUSCHKIN S, et al. Explaining deep neural networks and beyond: A review of methods and applications[J]. Proceedings of the IEEE, 2021, 109(3): 247-278. doi: 10.1109/JPROC.2021.3060483 [33] KOH P W, LIANG P. Understanding black-box predictions via influence functions[EB/OL]. (2020-12-29)[2022-08-22]. [34] ZEILER M D, FERGUS R. Visualizing and understanding convolutional networks[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2014: 818-833. [35] ZHANG S S, YANG J, SCHIELE B. Occluded pedestrian detection through guided attention in CNNs[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 6995-7003. [36] ZHOU B L, KHOSLA A, LAPEDRIZA A, et al. Learning deep features for discriminative localization[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 2921-2929. [37] WANG H F, WANG Z F, DU M N, et al. Score-CAM: Score-weighted visual explanations for convolutional neural networks[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Piscataway: IEEE Press, 2020: 111-119. [38] XIAO B, WU H P, WEI Y C. Simple baselines for human pose estimation and tracking[EB/OL]. (2018-08-21)[2022-08-22]. [39] MAO W A, GE Y T, SHEN C H, et al. PoseUR: Direct human pose regression with transformers[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2022: 72-88. [40] XU Y F, ZHANG J, ZHANG Q M, et al. ViTPose: Simple vision transformer baselines for human pose estimation[EB/OL]. (2022-04-26)[2022-08-22]. -

下载:

下载: