
摘要:

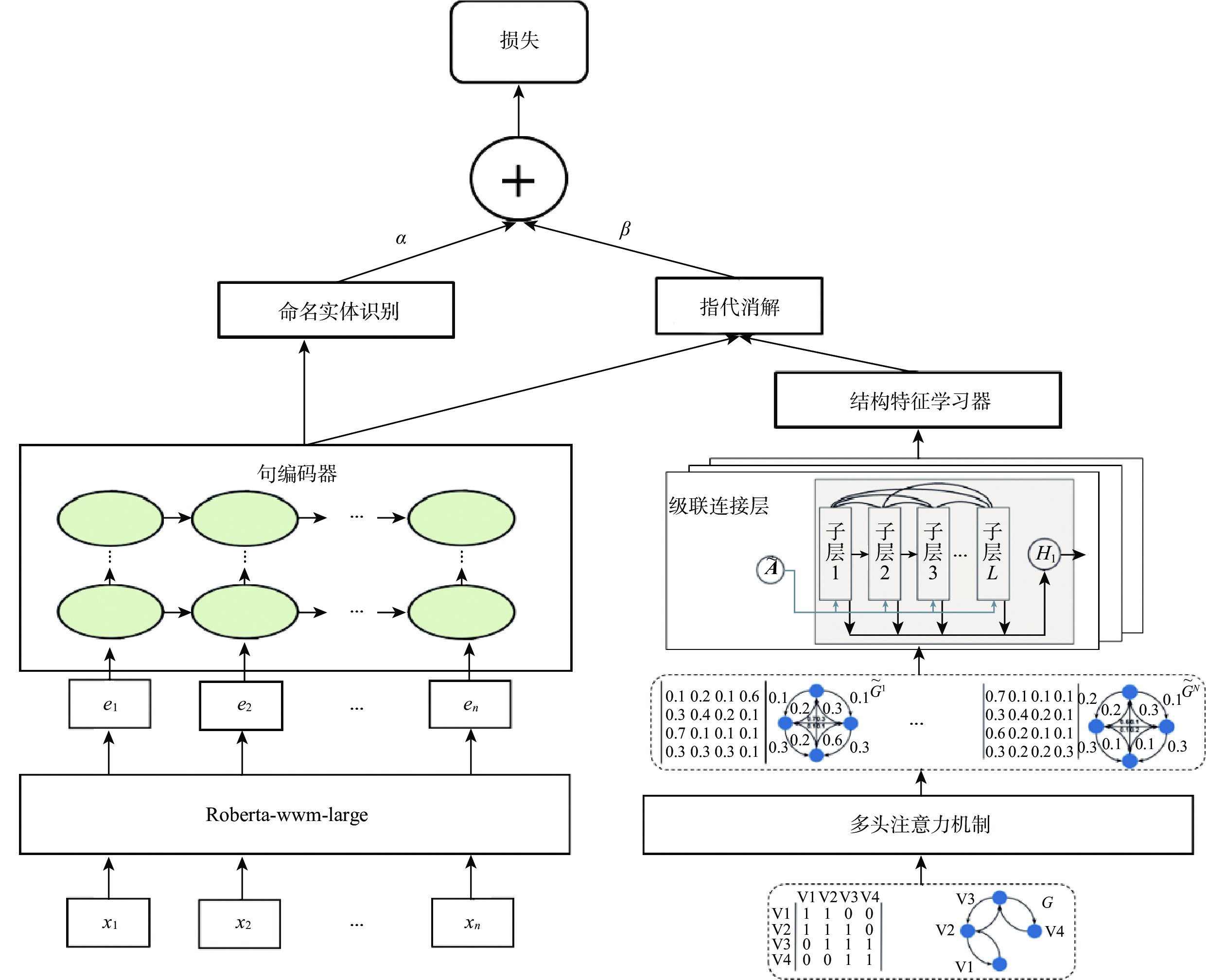

指代提取是自然语言处理应用中的一项重要任务。学习有效的指称特征表示是指代消解的核心问题。现有的研究大多把指称文本片段识别和共指关系预测作为两阶段来学习,不能有效反映文本片段中的命名实体等信息和共指对之间的内在联系。因此,提出一种新的基于图结构和多任务学习的指代消解模型。该模型将序列语义和结构信息结合起来指称特征向量学习,利用多任务学习框架结合指代消解和命名实体识别2个任务,通过参数共享的底层网络结构实现指代消解和命名实体识别2个任务的互相学习和互相提高。在公共数据集和手动构建的数据集上进行了大量实验,验证了所提模型的优越性。

Abstract:Coreference resolution is an important task in the domain of natural language processing. Learning effective referential feature representation is a core problem of coreference resolution. It is ineffective to reflect the internal relationships between the information, such as named entities in text fragments and coreference pairs, because the majority of current research views the identification of reference text fragments and the prediction of coreference relationships as two stages of learning. This research proposes a new model of coreference resolution based on graph structure and multitask learning. It combines sequence semantics and structure information to learn referential feature vectors. A multitask learning framework is used to combine the two tasks of coreference resolution and named entity recognition. The two tasks, named entity recognition and coreference resolution, can learn from each other and get better at each other by sharing parameters in the underlying network. Extensive experiments are conducted to verify the superior performance of the proposed model.
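The parameter-sharing idea in the abstract can be illustrated with a deliberately tiny sketch. It is not the proposed model: the class `ToyMultiTaskModel`, the linear shared layer, the squared-error losses and the `ner_weight` factor are all assumptions made for illustration. It only shows that, under hard parameter sharing, the shared weights receive gradient from both the coreference loss and the NER loss.

```python
from dataclasses import dataclass


@dataclass
class ToyMultiTaskModel:
    """Hypothetical toy model: one shared linear layer feeding two scalar task heads."""
    shared: list              # weights shared by both tasks
    coref_head: float = 1.0   # task-specific weight for the coreference output
    ner_head: float = 1.0     # task-specific weight for the NER output

    def forward(self, x):
        # Shared representation h, then one prediction per task.
        h = sum(w * xi for w, xi in zip(self.shared, x))
        return h, self.coref_head * h, self.ner_head * h

    def joint_step(self, x, y_coref, y_ner, lr=0.1, ner_weight=1.0):
        """One gradient-descent step on 0.5*err_coref^2 + 0.5*ner_weight*err_ner^2."""
        h, p_coref, p_ner = self.forward(x)
        err_coref = p_coref - y_coref
        err_ner = p_ner - y_ner
        loss = 0.5 * err_coref ** 2 + 0.5 * ner_weight * err_ner ** 2

        # The gradient w.r.t. the shared representation sums both task signals.
        grad_h = err_coref * self.coref_head + ner_weight * err_ner * self.ner_head

        # Each head is updated only by its own task's error.
        self.coref_head -= lr * err_coref * h
        self.ner_head -= lr * ner_weight * err_ner * h
        # The shared weights are updated by the combined gradient.
        self.shared = [w - lr * grad_h * xi for w, xi in zip(self.shared, x)]
        return loss


if __name__ == "__main__":
    joint = ToyMultiTaskModel(shared=[0.5, 0.5])
    coref_only = ToyMultiTaskModel(shared=[0.5, 0.5])
    x = [1.0, 1.0]
    joint.joint_step(x, y_coref=2.0, y_ner=3.0)
    coref_only.joint_step(x, y_coref=2.0, y_ner=3.0, ner_weight=0.0)
    print("shared weights, joint training:", joint.shared)
    print("shared weights, coreference only:", coref_only.shared)
```

Setting `ner_weight=0.0` removes the NER signal from the shared update, which is the simplest way to see how the second task changes what the shared layer learns.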


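| Annotation category | Count |
| --- | --- |
| Task | 1284 |
| Method | 2091 |
| Metric | 340 |
| Material | 771 |
| Generic | 1338 |
| Other entities | 2270 |
| Coreference links | 2754 |
| Coreference clusters | 1015 |

Table 2. Statistics of the CSGC-3 dataset

| Annotation category | Count |
| --- | --- |
| Power generation equipment | 2204 |
| Electric equipment | 2992 |
| Environmental information | 3321 |
| Power cables | 1046 |
| Display equipment | 1543 |
| Control devices | 865 |
| Other entities | 4134 |
| Coreference links | 4589 |
| Coreference clusters | 1657 |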

Table 4. Coreference resolution results on the CSGC-3 dataset (%)

Table 6. Named entity recognition results on the CSGC-3 dataset

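| Model | MUC F1 (%) | B³ F1 (%) | CEAFϕ4 F1 (%) | NER F1 (%) |
| --- | --- | --- | --- | --- |
| GMCor-BERT | 71.23 | 61.55 | 59.48 | |
| GMCor-Graph | 70.67 | 59.87 | 58.83 | |
| GMCor-Entity | 68.97 | 58.34 | 57.45 | |
| GMCor-Core | | | | 78.34 |
| GMCor | 73.82 | 63.23 | 61.69 | 81.13 |

Table 8. Ablation results on the CSGC-3 dataset

| Model | MUC F1 (%) | B³ F1 (%) | CEAFϕ4 F1 (%) | NER F1 (%) |
| --- | --- | --- | --- | --- |
| GMCor-BERT | 67.33 | 58.22 | 56.24 | |
| GMCor-Graph | 66.12 | 56.34 | 55.52 | |
| GMCor-Entity | 64.45 | 55.21 | 54.73 | |
| GMCor-Core | | | | 85.79 |
| GMCor | 70.32 | 60.51 | 58.68 | 90.40 |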
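The coreference columns in the ablation tables report F1 under MUC, B³ and CEAFϕ4. As a reading aid, here is a minimal sketch of B³ in its commonly used cluster-overlap formulation. The function name `b_cubed` and the toy clusters are assumptions for illustration; the scorer actually used for the reported numbers is not stated in this section.

```python
def b_cubed(key_clusters, response_clusters):
    """Return (precision, recall, F1) of B-cubed for two clusterings of mentions.

    Each argument is an iterable of clusters; each cluster is an iterable of
    hashable mention identifiers.
    """
    key = [set(c) for c in key_clusters]
    response = [set(c) for c in response_clusters]

    def directed_score(source, target):
        # For each source cluster s, credit |s ∩ t|^2 / |s| for every target
        # cluster t, then normalise by the number of mentions in source.
        total_mentions = sum(len(s) for s in source)
        if total_mentions == 0:
            return 0.0
        credit = 0.0
        for s in source:
            for t in target:
                overlap = len(s & t)
                credit += overlap * overlap / len(s)
        return credit / total_mentions

    recall = directed_score(key, response)       # measured against gold clusters
    precision = directed_score(response, key)    # measured against predicted clusters
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    gold = [{"a", "b", "c"}, {"d", "e"}]
    predicted = [{"a", "b"}, {"c", "d", "e"}]
    p, r, f1 = b_cubed(gold, predicted)
    print(f"P={p:.4f} R={r:.4f} F1={f1:.4f}")
```

In the toy example one mention, `c`, is attached to the wrong cluster, so precision and recall both come out at 11/15; identical clusterings score 1.0 on all three values.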

