摘要:

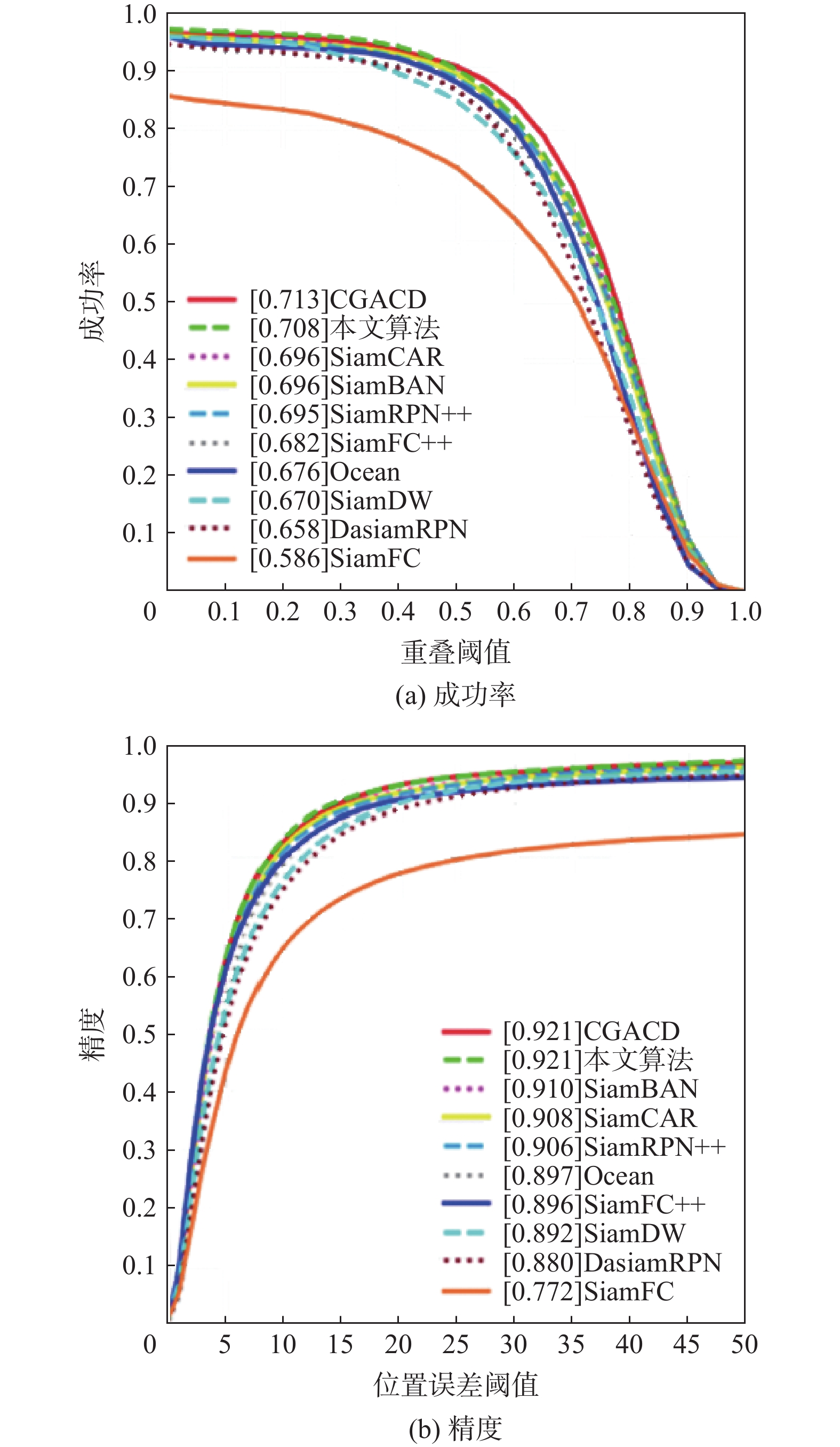

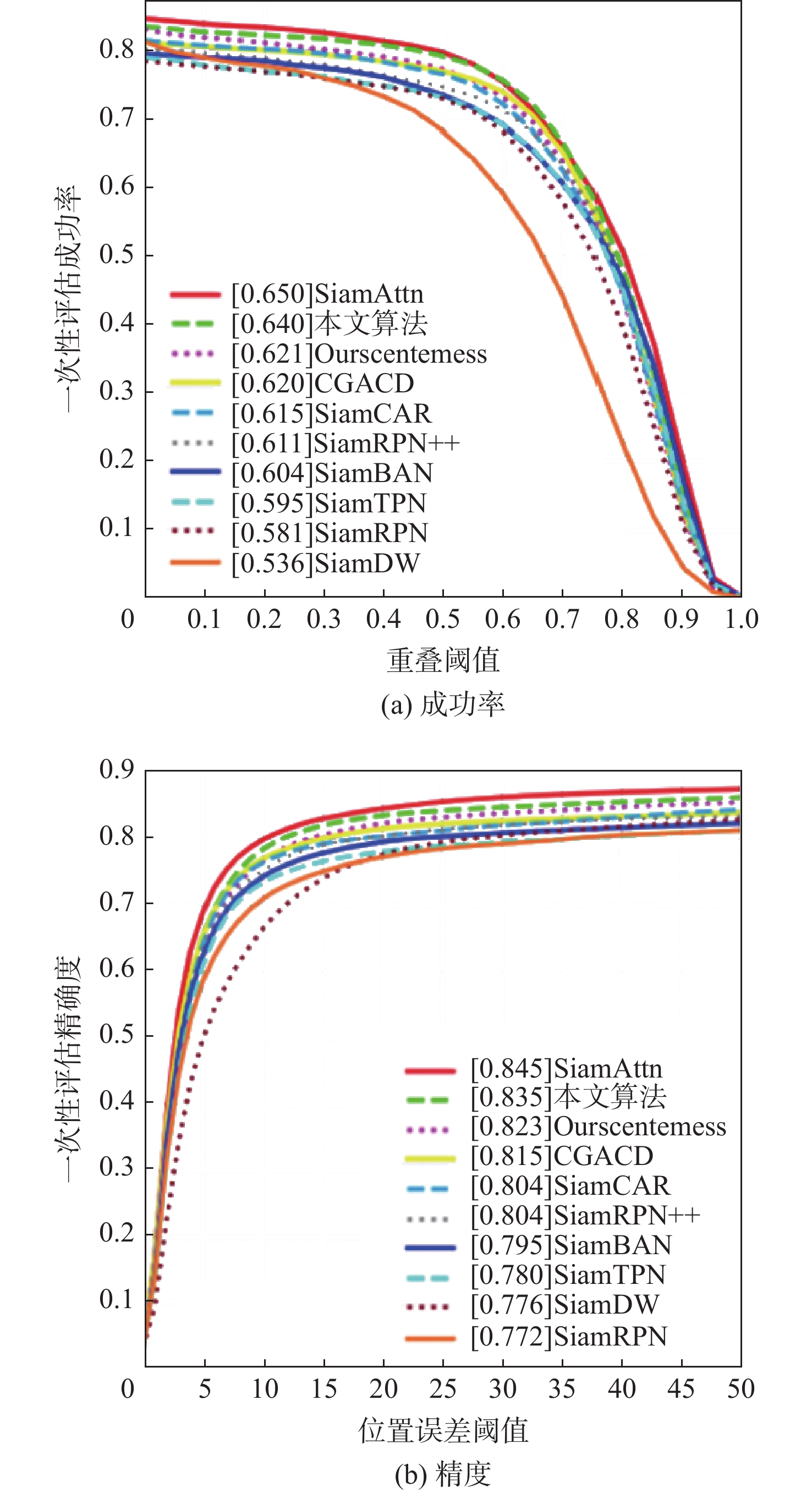

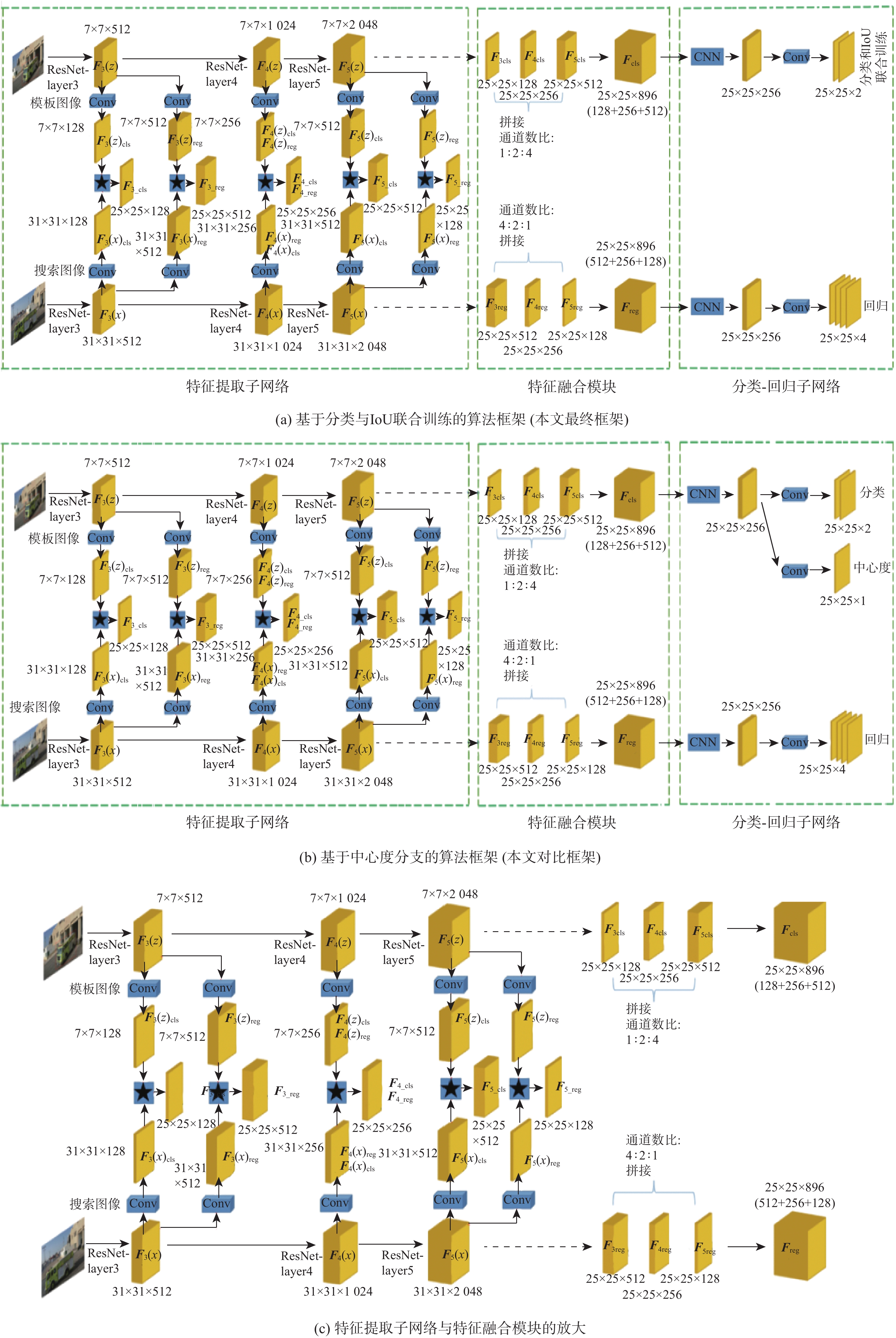

深度学习相关的目标跟踪算法在利用深浅层特征融合时,未考虑分类分支与回归分支的差异性,两分支均使用同一融合特征,不能同时满足各自分支的不同任务要求。依据分类分支与回归分支的不同任务要求与深浅层特征之间的关系,提出了一种新的特征融合方式用于视觉目标跟踪。将骨干网络中不同特征层的通道数按比例进行微调,分别形成适合分类分支与回归分支的融合特征。为验证所提特征融合方式的有效性,在基于SiamCAR算法的基础上进行优化,改变特征提取与融合方式,在UAV123、GOT-10K、LaSOT数据集上提高了2%~3%的精度。实验结果证明:所提特征融合方式是有效的,同时框架整体以75 帧/s的实时运行速率实现了良好的跟踪性能。

Abstract:Until recently, object tracking algorithms connected to deep learning have not considered the distinctions between regression and classification branches while employing shallow and deep feature fusion. Both branches used the same fusion feature, which could not meet the different task requirements of each branch well at the same time. According to the relationship between different task requirements of branches and features, a new feature fusion method was proposed for the object tracking algorithm. The channels of different feature layers in the backbone network were adjusted proportionally to form the fusion features suitable for the classification branch and regression branch. To compare and prove the effectiveness of the new feature fusion method, optimization was carried out on the basis of the SiamCAR algorithm. By changing the method of feature extraction and fusion, the accuracy of 2%−3% was improved on the three datasets of UAV123, GOT-10k and LaSOT. The experimental findings demonstrate the efficacy of the novel feature fusion technique, and the framework as a whole achieves good tracking performance at a real-time running speed of 75 frames per second.
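The proportional channel adjustment described in the abstract can be illustrated with a small sketch. It is not the authors' implementation: the 256-channel budget, the function names, and the (shallow, middle, deep) ordering of the ratio terms are assumptions made for illustration. Only the ratios 1:2:4 (classification) and 4:2:1 (regression) come from Table 3.

```python
from typing import Dict, Sequence, Tuple


def split_channels(total: int, ratio: Sequence[int]) -> Tuple[int, ...]:
    """Split `total` channels across layers in proportion to `ratio`.

    Uses the largest-remainder method so the parts always sum to `total`.
    """
    if total <= 0 or not ratio or any(r <= 0 for r in ratio):
        raise ValueError("total and every ratio term must be positive")
    weight = sum(ratio)
    # Integer share of each layer, rounded down.
    parts = [total * r // weight for r in ratio]
    # Hand the leftover channels to the layers with the largest remainders.
    leftover = total - sum(parts)
    order = sorted(
        range(len(ratio)),
        key=lambda i: (total * ratio[i]) % weight,
        reverse=True,
    )
    for i in order[:leftover]:
        parts[i] += 1
    return tuple(parts)


def branch_channel_plan(total: int = 256) -> Dict[str, Tuple[int, ...]]:
    """Channel counts per branch for (shallow, middle, deep) layers.

    The layer ordering and the default budget are assumptions.
    """
    return {
        "classification": split_channels(total, (1, 2, 4)),
        "regression": split_channels(total, (4, 2, 1)),
    }


if __name__ == "__main__":
    for branch, channels in branch_channel_plan().items():
        print(branch, channels)
```

Under these assumptions, the classification branch takes most of its channels from the deep layer and the regression branch takes most from the shallow layer, while both fused features keep the same total width.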

Table 1. Experimental results of different trackers on the GOT-10k dataset

| Tracker | Average overlap (AO) | SR0.5 |
| --- | --- | --- |
| ECO | 0.299 | 0.303 |
| SiamFC | 0.325 | 0.328 |
| DSiam | 0.417 | 0.461 |
| SPM | 0.513 | 0.593 |
| SiamRPN++ | 0.517 | 0.616 |
| ATOM | 0.556 | 0.634 |
| SiamCAR | 0.569 | 0.670 |
| Ocean | 0.592 | 0.695 |
| DiMP | 0.611 | 0.712 |
| Ours (centerness) | 0.584 | 0.691 |
| Ours | 0.593 | 0.698 |

Table 2. Experimental results of different trackers on the LaSOT dataset

| Tracker | OPE success rate | Precision |
| --- | --- | --- |
| ECO | 0.324 | 0.301 |
| SiamFC | 0.336 | 0.339 |
| SiamRPN++ | 0.496 | 0.491 |
| SiamCAR | 0.507 | 0.510 |
| ATOM | 0.514 | 0.505 |
| DiMP-18 | 0.537 | 0.541 |
| DiMP-50 | 0.558 | 0.564 |
| Ocean | 0.555 | 0.566 |
| Ours (centerness) | 0.532 | 0.542 |
| Ours | 0.544 | 0.553 |

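Table 3. Experimental results of different channel ratios

| Channel ratio | OPE success rate | OPE precision |
| --- | --- | --- |
| 1:2:4 (classification), 4:2:1 (regression) | 0.640 | 0.835 |
| 1:3:9 (classification), 9:3:1 (regression) | 0.617 | 0.819 |
| 1:1:2 (classification), 2:1:1 (regression) | 0.629 | 0.824 |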

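Table 4. Experimental results of different branch structures

| Branch structure | OPE success rate | OPE precision |
| --- | --- | --- |
| Classification branch, regression branch, centerness branch | 0.621 | 0.823 |
| Classification branch, regression branch, IoU branch | 0.633 | 0.830 |
| Classification branch, regression branch, centerness weight | 0.612 | 0.813 |
| Classification branch, regression branch, IoU weight | 0.616 | 0.819 |
| Joint classification-IoU training branch, regression branch | 0.640 | 0.835 |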

