Infrared image super-resolution reconstruction based on visible image guidance and recursive fusion


摘要:

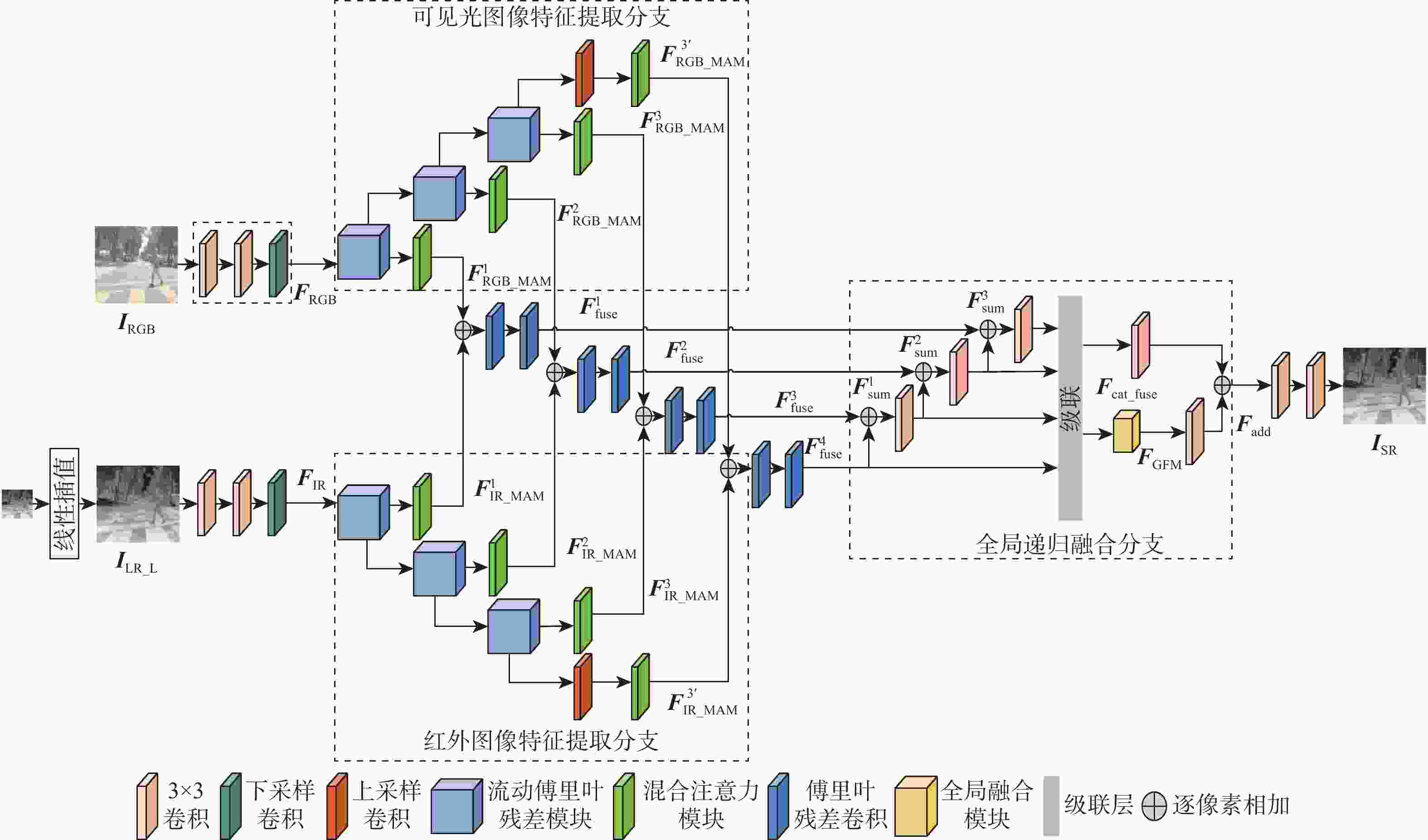

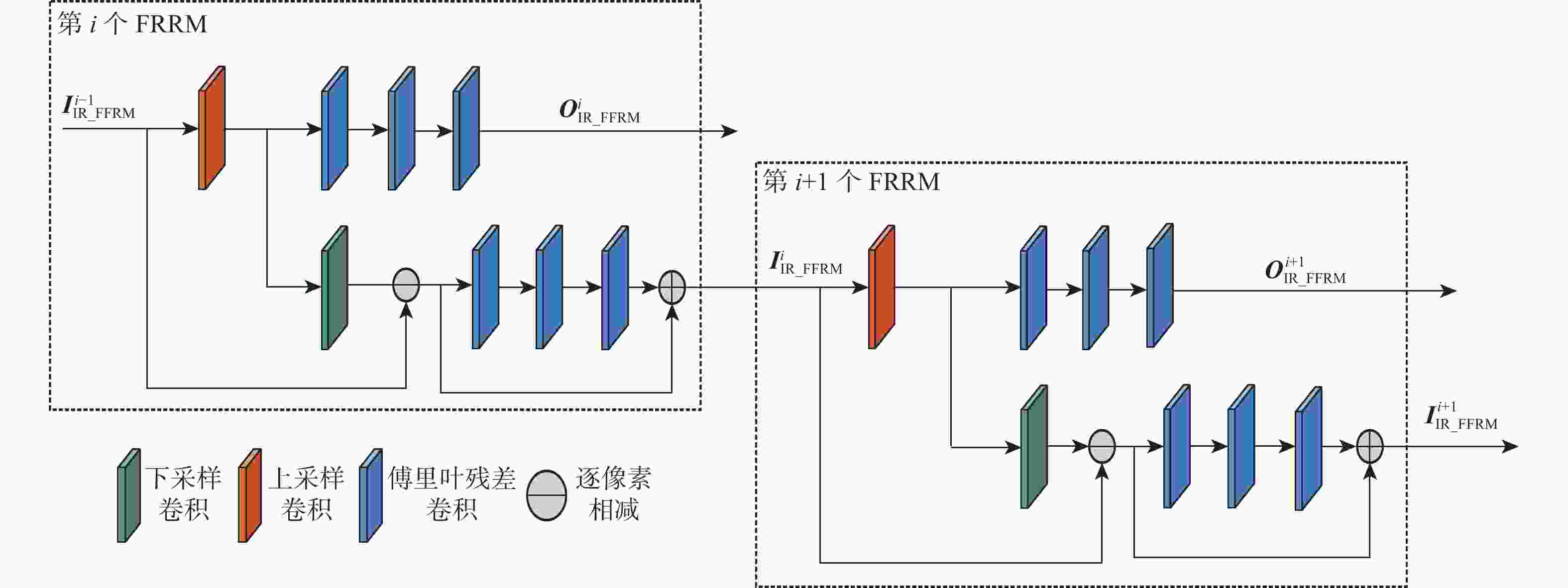

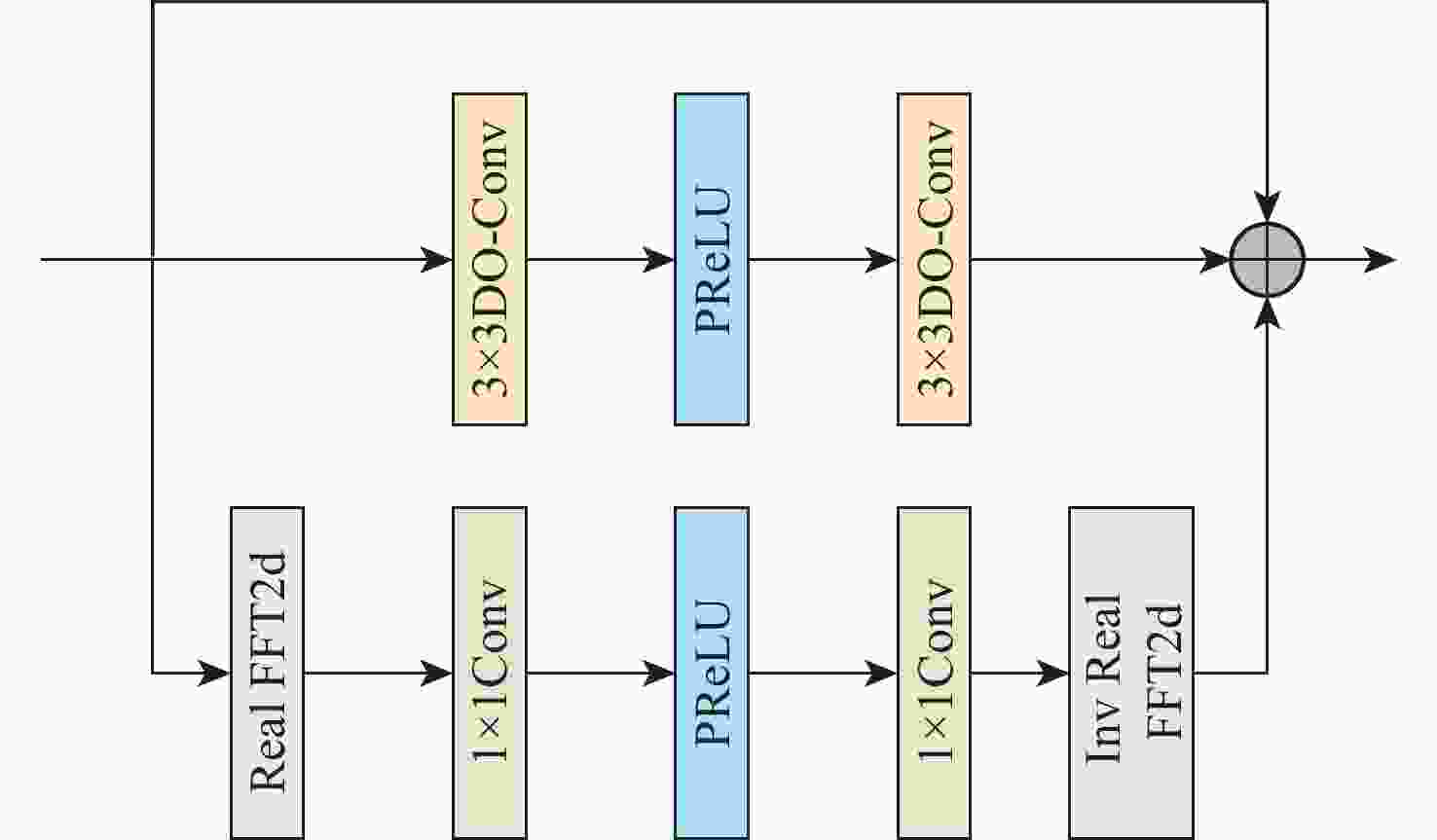

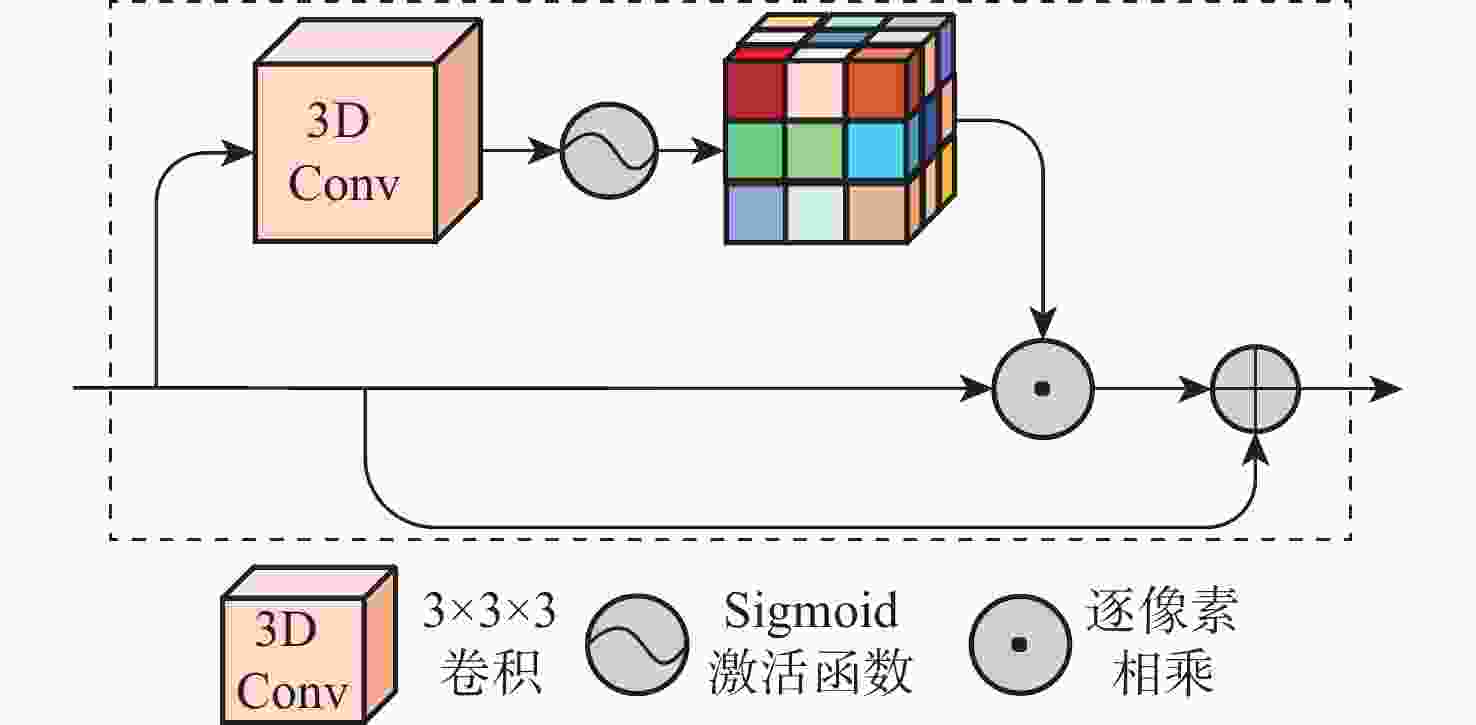

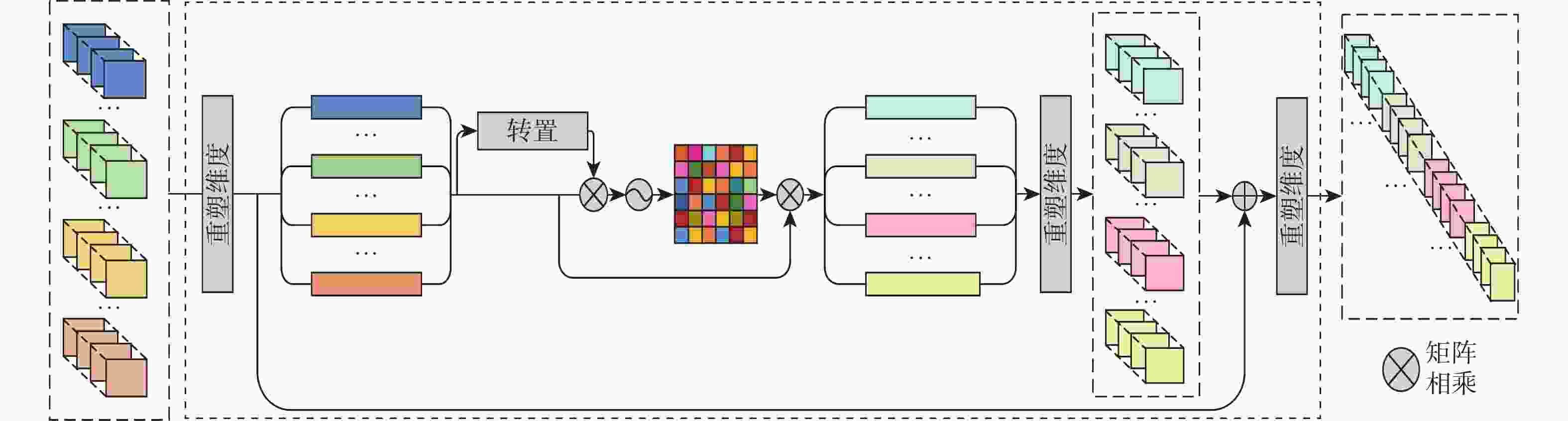

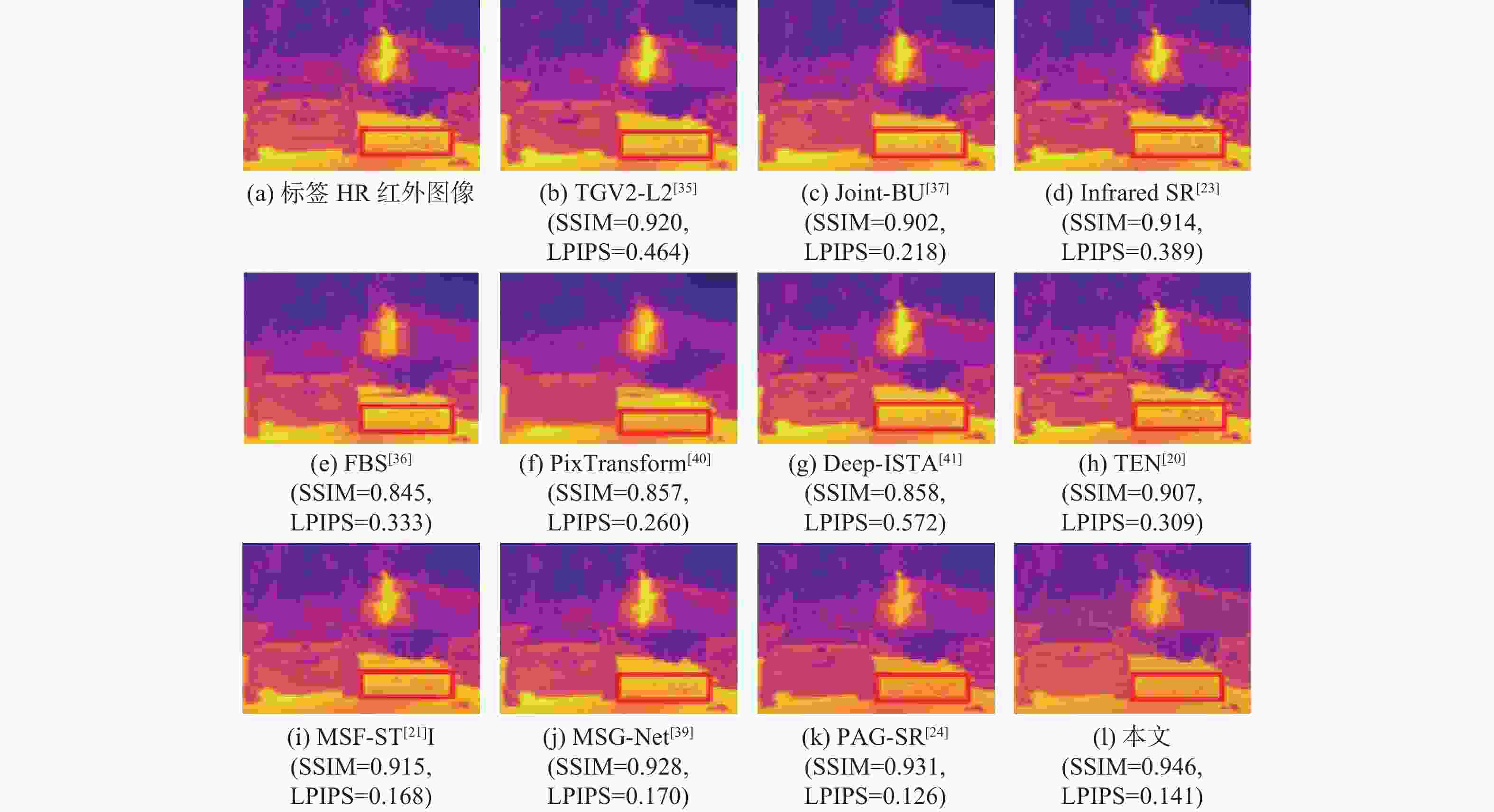

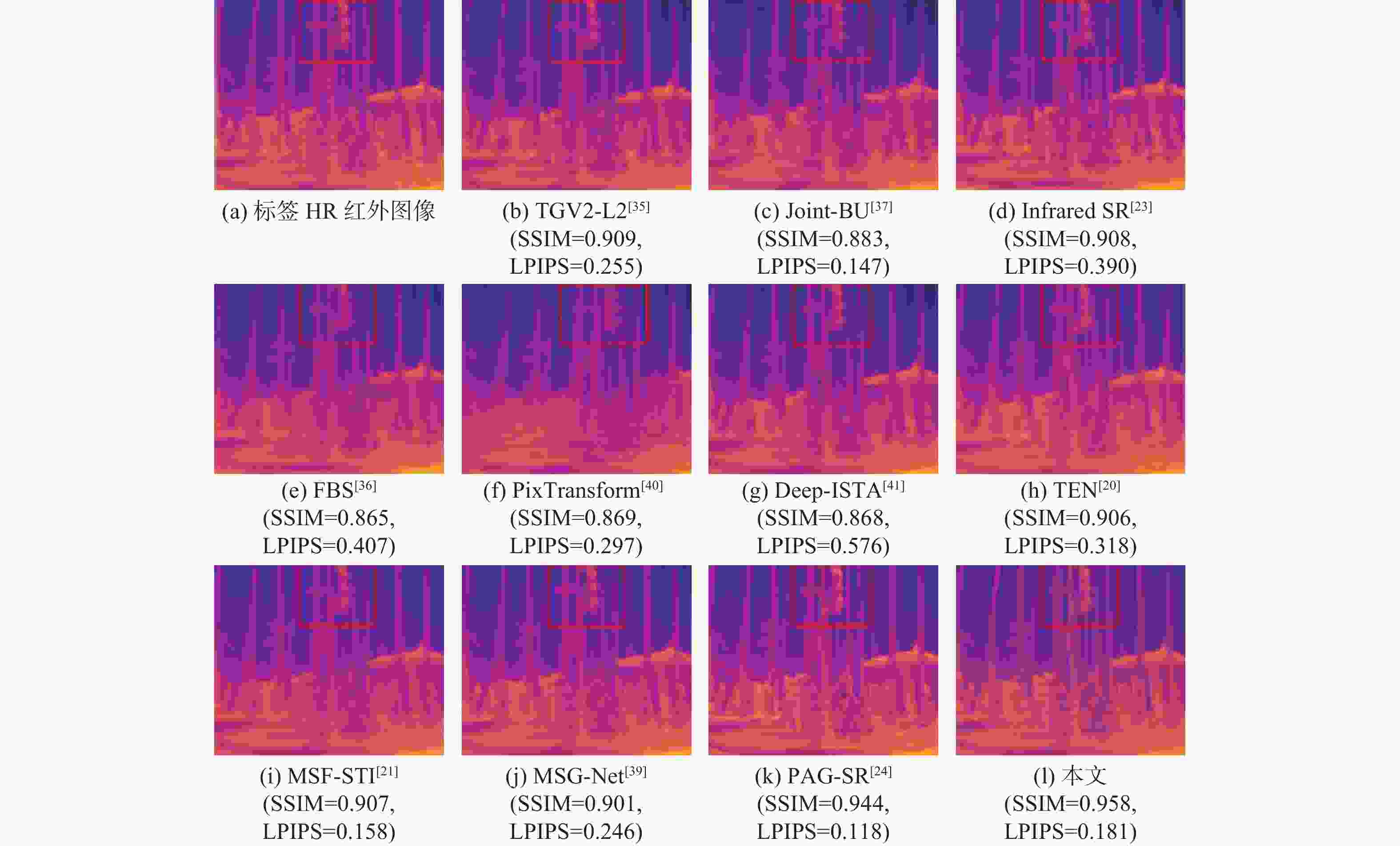

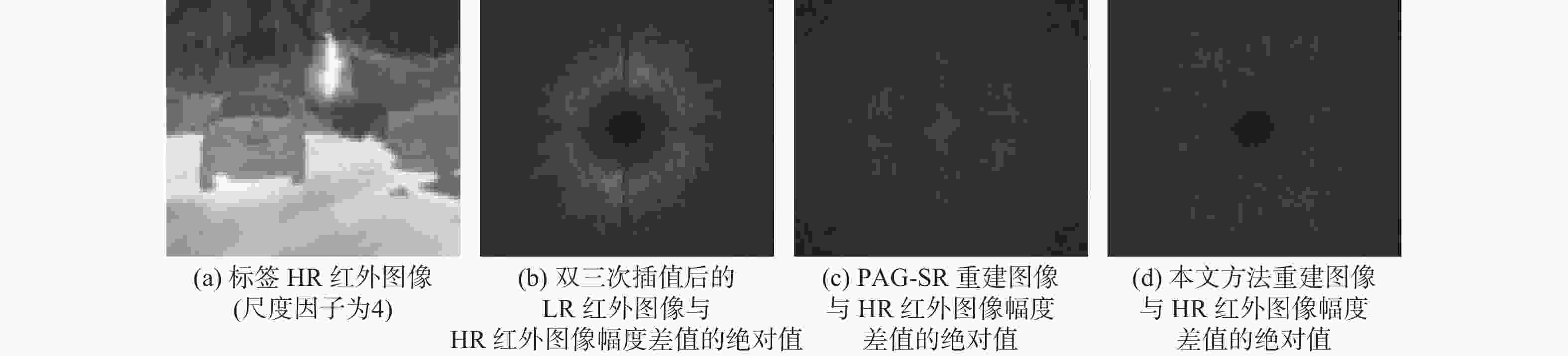

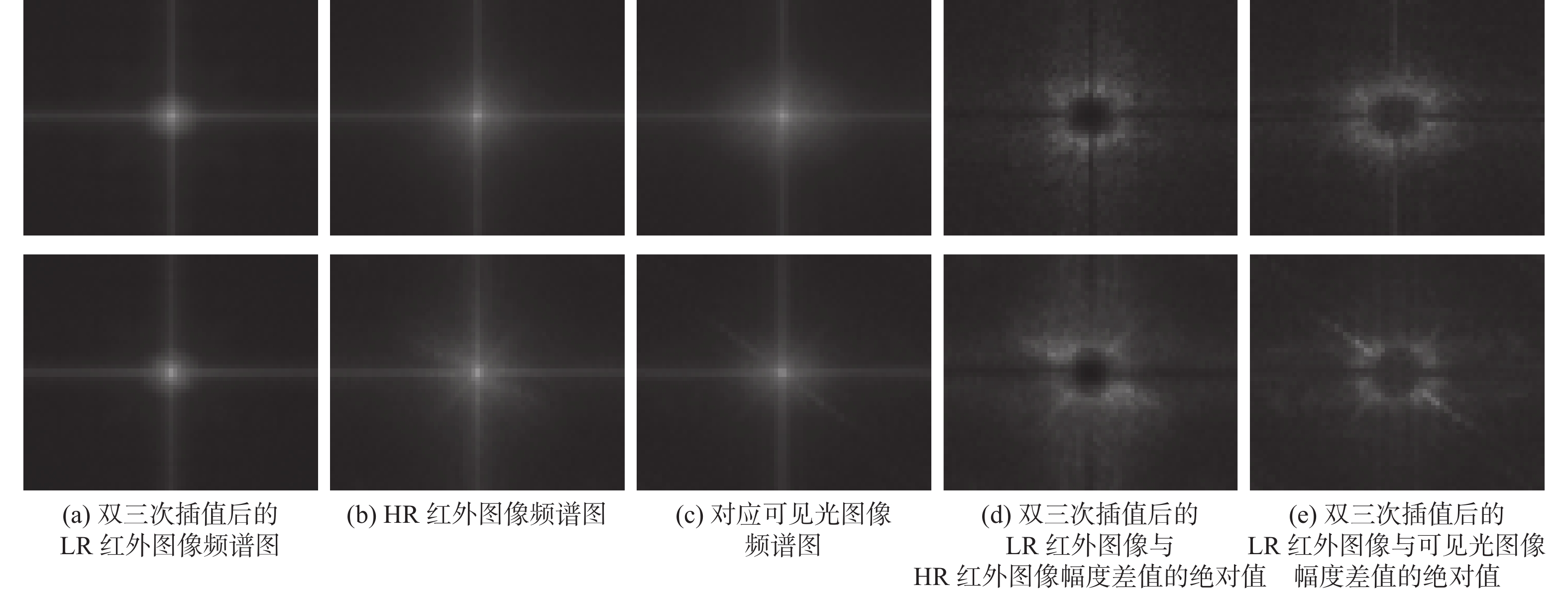

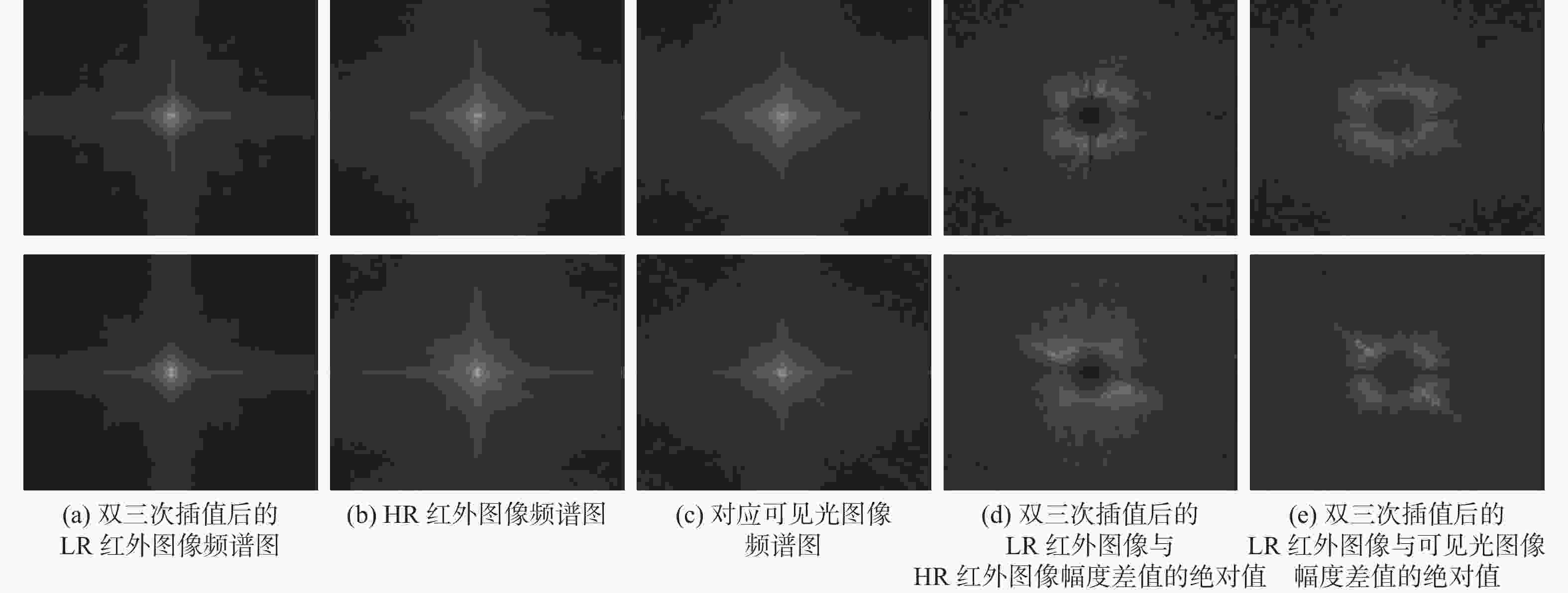

由于硬件设备的局限,红外图像在获取时普遍存在分辨率低、细节模糊等问题。尽管可见光图像能为红外图像的超分辨率重建提供有效指导,但因两者成像原理不同,图像细节信息的差异易导致重建出现模糊和鬼影等问题。基于此,提出一种基于可见光图像引导和递归融合的红外图像超分辨率重建网络。在该网络中,设计流动傅里叶残差模块,利用不同深度的模块提取可见光图像和红外图像中不同频率的信息,使每个模块关注适当的频率信息。同时,利用混合注意力模块,从通道和空间角度获取多模态图像中的细节信息,并以互补的方式进行融合,有助于消除伪影的生成。在此基础上,设计全局递归融合分支,考虑多层特征之间的相关性,自适应地融合多层特征,生成更加清晰的高分辨率红外图像。实验结果表明:所提方法与Deep-ISTA、PAG-SR等其他方法相比,在客观评价指标上表现出了较好的水平,在主观视觉比较方面,重建图像具有更清晰的纹理和更少的伪影,复杂环境中具有更佳的物体区分度。

Abstract:Due to hardware limitations, infrared images typically suffer from low resolution and blurred details when captured. Although visible light images can effectively guide the super-resolution reconstruction of infrared images, differences in image detail caused by their distinct imaging principles often result in issues such as blurring and ghosting during reconstruction. This paper proposes a super-resolution reconstruction network for infrared images based on visible image guidance and recursive fusion. In this network, a flowing Fourier residual module is designed to extract different frequency information from infrared and visible images using modules at different depths, enabling each module to focus on the appropriate frequency information. Simultaneously, a hybrid attention module is employed to capture detailed information in multimodal images from both channel and spatial perspectives, and to fuse it in a complementary manner. Based on this, a global recursive fusion branch is designed to model the correlations across multiple feature layers and adaptively fuse them, thus generating clearer high-resolution infrared images. Experimental results show that compared with comparison methods like Deep-ISTA and PAG-SR, the proposed method demonstrates better level in objective evaluation indicators. In terms of subjective visual comparison, the images reconstructed by this method exhibit clearer textures, fewer artifacts, and better object discrimination in complex environments.
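The idea of separating "different frequency information" can be illustrated with a toy example. The following is a minimal sketch only, not the authors' flowing Fourier residual module: it uses a naive 1-D discrete Fourier transform to split a signal into a low-frequency part and a high-frequency part. The function names `dft`, `idft`, and `split_bands`, as well as the `cutoff` parameter, are hypothetical and chosen purely for illustration.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def split_bands(x, cutoff):
    """Split x into (low, high) parts using a hard frequency mask.

    Bins whose frequency index (counted symmetrically from DC) is at most
    `cutoff` go to the low band; everything else goes to the high band.
    The mask is symmetric, so both parts stay real for a real input.
    """
    n = len(x)
    spectrum = dft(x)
    low_spec = [spectrum[k] if min(k, n - k) <= cutoff else 0j for k in range(n)]
    high_spec = [spectrum[k] - low_spec[k] for k in range(n)]
    low = [v.real for v in idft(low_spec)]
    high = [v.real for v in idft(high_spec)]
    return low, high


signal = [1.0, 2.0, 3.0, 4.0, 3.0, 2.0, 1.0, 0.0]
low, high = split_bands(signal, cutoff=1)
```

By construction the two parts sum back to the original signal. In a learned network the hard mask would be replaced by trainable operations, so this sketch only conveys the intuition of frequency-selective processing.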

-

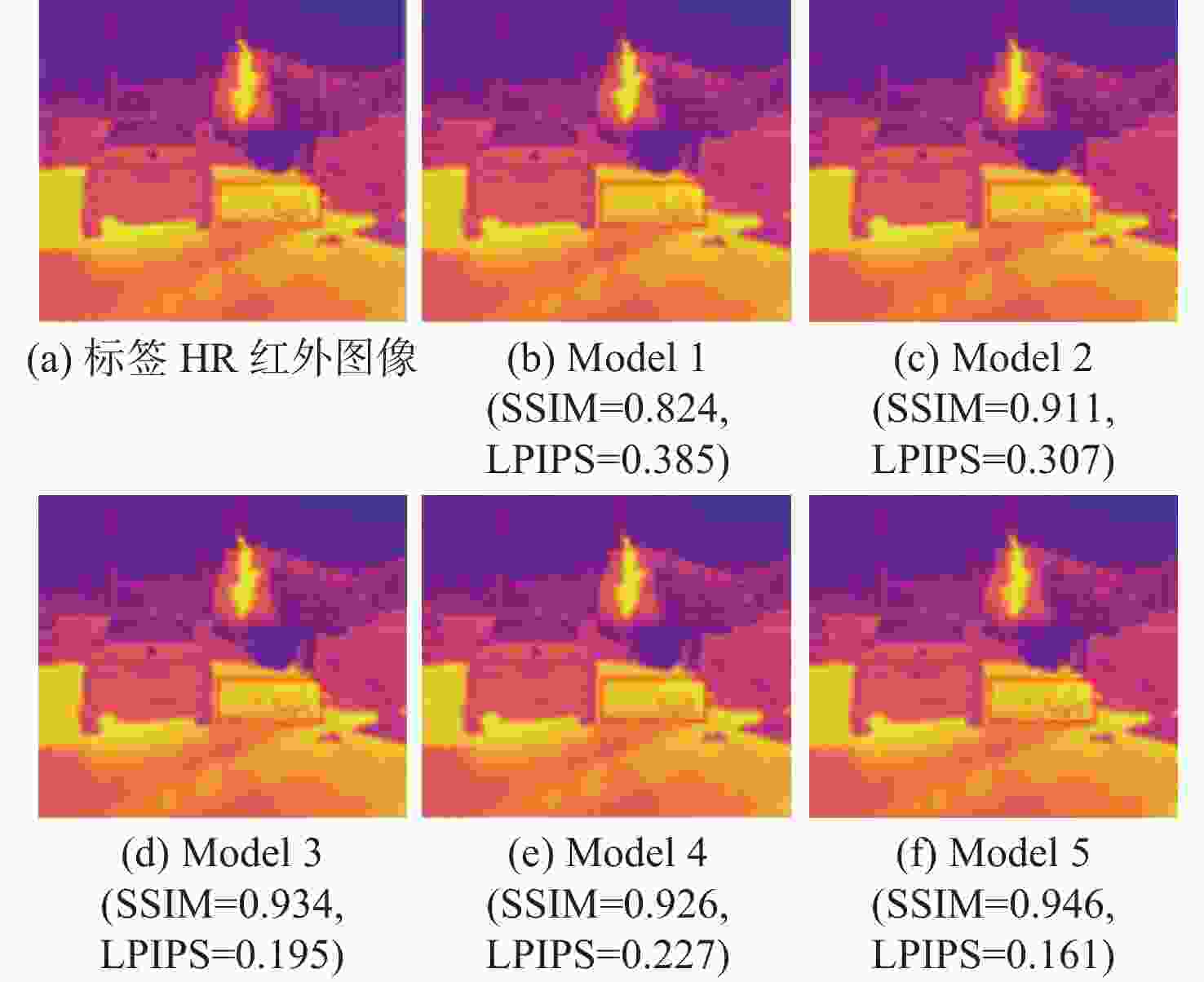

Table 1. Ablation experiment results

| Method | FFRM | MAM | GFM | PSNR/dB | SSIM | LPIPS |
| --- | --- | --- | --- | --- | --- | --- |
| Model 1 | × | × | × | 30.916 | 0.918 | 0.189 |
| Model 2 | √ | × | × | 31.032 | 0.921 | 0.189 |
| Model 3 | √ | × | √ | 31.039 | 0.920 | 0.189 |
| Model 4 | √ | √ | × | 31.038 | 0.920 | 0.194 |
| Model 5 | √ | √ | √ | 31.048 | 0.921 | 0.189 |

Table 2. Quantitative comparison of the proposed method under different loss functions

| Loss function | PSNR/dB | SSIM | LPIPS |
| --- | --- | --- | --- |
| $L_{\rm total} = L_1$ | 30.58 | 0.919 | 0.268 |
| $L_{\rm total} = L_1 + L_{\rm FR}$ | 30.66 | 0.920 | 0.283 |

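Table 3. Comparison of objective evaluation metrics across different methods

| Method | Guided | PSNR/dB (×4) | PSNR/dB (×8) | SSIM (×4) | SSIM (×8) | LPIPS (×4) | LPIPS (×8) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| RDN[14] | Unguided | 29.28 | 26.80 | 0.906 | 0.833 | 0.282 | 0.389 |
| RCAN[33] | Unguided | 29.18 | 22.35 | 0.908 | 0.758 | 0.228 | 0.414 |
| SAN[34] | Unguided | 26.47 | 25.38 | 0.859 | 0.811 | 0.229 | 0.536 |
| TGV2-L2[35] | Guided | 28.77 | 26.42 | 0.892 | 0.821 | 0.422 | 0.399 |
| FBS[36] | Guided | 25.48 | 25.03 | 0.787 | 0.770 | 0.387 | 0.476 |
| Joint-BU[37] | Guided | 27.77 | 25.61 | 0.874 | 0.803 | 0.284 | 0.406 |
| Infrared SR[23] | Guided | 28.21 | 26.03 | 0.889 | 0.817 | 0.405 | 0.521 |
| SDF[38] | Guided | 28.70 | 26.72 | 0.875 | 0.819 | 0.321 | 0.363 |
| MSF-SR[13] | Guided | 29.21 | 27.92 | 0.901 | 0.835 | 0.200 | 0.249 |
| MSG-Net[39] | Guided | 29.46 | 27.29 | 0.897 | 0.827 | 0.184 | 0.296 |
| PixTransform[40] | Guided | 24.84 | 23.31 | 0.787 | 0.836 | 0.329 | 0.371 |
| Deep-ISTA[41] | Guided | 25.86 | 25.56 | 0.828 | 0.778 | 0.529 | 0.598 |
| PAG-SR[24] | Guided | 29.56 | 28.77 | 0.912 | 0.919 | 0.147 | 0.214 |
| Ours | Guided | 31.05 | 30.66 | 0.921 | 0.920 | 0.189 | 0.283 |

The ×4 and ×8 columns correspond to scale factors of 4 and 8, respectively.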
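The tables report PSNR in decibels. As a reminder of how that metric is conventionally defined, here is a minimal sketch, assuming images are flattened to equal-length lists of pixel values with a peak value of 255 (8-bit). The function name `psnr` and the `max_value` parameter are illustrative; the evaluation code actually used for these tables is not given in this text.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flattened images.

    PSNR = 10 * log10(max_value^2 / MSE), where MSE is the mean squared
    error between corresponding pixels. Identical inputs give infinity.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Every pixel off by exactly one grey level: MSE = 1.
value = psnr([0, 0, 0, 0], [1, 1, 1, 1])
```

Because PSNR is logarithmic in the MSE, a gap of d dB between two reconstructions of the same image corresponds to an MSE ratio of 10^(d/10). For instance, a 1.49 dB gap (the ×4 difference between the last two rows of the comparison table) would correspond to an MSE ratio of roughly 1.41 for a single image pair under the same peak value.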

[1] GOLDBERG A C, FISCHER T, DERZKO Z I. Application of dual-band infrared focal plane arrays to tactical and strategic military problems[C]//Proceedings of the Infrared Technology and Applications XXVIII. Bellingham: SPIE, 2003.

[2] XIONG G M, LUO Z, SUN D, et al. Detection and tracking technology of smoke occlusion target based on infrared camera and millimeter wave radar fusion[J]. Acta Armamentarii, 2024, 45(3): 893-906 (in Chinese).

[3] QI H, DIAKIDES N A. Thermal infrared imaging in early breast cancer detection-a survey of recent research[C]//Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Piscataway: IEEE Press, 2004: 1109-1112.

[4] ZHANG Q, YANG Y, LIU G, et al. Detection of dairy cow mastitis from thermal images by data enhancement and improved ResNet34[J]. Spectroscopy and Spectral Analysis, 2023, 43(1): 280-288 (in Chinese). doi: 10.3964/j.issn.1000-0593(2023)01-0280-09

[5] HOU Y F, DING C, LIU H, et al. Enhancement and recognition of infrared target with low quality under backlight maritime condition[J]. Acta Optica Sinica, 2023, 43(6): 0612003 (in Chinese). doi: 10.3788/AOS221387

[6] WENG J, YUAN P, WANG M H, et al. Thermal imaging detection method of leak gas clouds based on support vector machine[J]. Acta Optica Sinica, 2022, 42(9): 0911002 (in Chinese). doi: 10.3788/AOS202242.0911002

[7] XIONG K N, JIANG J B, PAN Y Y, et al. Deep learning approach for detection of underground natural gas micro-leakage using infrared thermal images[J]. Sensors, 2022, 22(14): 5322. doi: 10.3390/s22145322

[8] ZHANG L, WU X L. An edge-guided image interpolation algorithm via directional filtering and data fusion[J]. IEEE Transactions on Image Processing, 2006, 15(8): 2226-2238. doi: 10.1109/TIP.2006.877407

[9] PAPYAN V, ELAD M. Multi-scale patch-based image restoration[J]. IEEE Transactions on Image Processing, 2016, 25(1): 249-261. doi: 10.1109/TIP.2015.2499698

[10] ZHANG K, VAN GOOL L, TIMOFTE R. Deep unfolding network for image super-resolution[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 3214-3223.

[11] SUN Y M, ZHANG Y, LIU S D, et al. Image super-resolution using supervised multi-scale feature extraction network[J]. Multimedia Tools and Applications, 2021, 80(2): 1995-2008. doi: 10.1007/s11042-020-09488-z

[12] ZHANG X D, ZENG H, ZHANG L. Edge-oriented convolution block for real-time super resolution on mobile devices[C]//Proceedings of the 29th ACM International Conference on Multimedia. New York: ACM, 2021: 4034-4043.

[13] ALMASRI F, DEBEIR O. Multimodal sensor fusion in single thermal image super-resolution[C]//Proceedings of the 14th Asian Conference on Computer Vision. Berlin: Springer, 2019: 418-433.

[14] ZHANG Y L, TIAN Y P, KONG Y, et al. Residual dense network for image super-resolution[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 2472-2481.

[15] ZHANG Y, LIU Z Y, LIU S D, et al. Frequency aggregation network for blind super-resolution based on degradation representation[J]. Digital Signal Processing, 2023, 133: 103837. doi: 10.1016/j.dsp.2022.103837

[16] KIM J, LEE J K, LEE K M. Deeply-recursive convolutional network for image super-resolution[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 1637-1645.

[17] LIM B, SON S, KIM H, et al. Enhanced deep residual networks for single image super-resolution[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Piscataway: IEEE Press, 2017: 1132-1140.

[18] ZHANG Y, XU F, SUN Y, et al. Spatial and frequency information fusion transformer for image super-resolution[J]. Neural Networks, 2025, 187: 107351.

[19] QIU Y J, WANG R X, TAO D P, et al. Embedded block residual network: a recursive restoration model for single-image super-resolution[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 4179-4188.

[20] CHOI Y, KIM N, HWANG S, et al. Thermal image enhancement using convolutional neural network[C]//Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems. Piscataway: IEEE Press, 2016: 223-230.

[21] ALMASRI F, DEBEIR O. Multimodal sensor fusion in single thermal image super-resolution[C]//Proceedings of the Asian Conference on Computer Vision. Berlin: Springer, 2018: 418-433.

[22] LEE K, LEE J, LEE J, et al. Brightness-based convolutional neural network for thermal image enhancement[J]. IEEE Access, 2017, 5: 26867-26879. doi: 10.1109/ACCESS.2017.2769687

[23] HAN T Y, KIM Y J, SONG B C. Convolutional neural network-based infrared image super resolution under low light environment[C]//Proceedings of the 25th European Signal Processing Conference. Piscataway: IEEE Press, 2017: 803-807.

[24] GUPTA H, MITRA K. Pyramidal edge-maps and attention based guided thermal super-resolution[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2020: 698-715.

[25] MAO X, LIU Y, SHEN W, et al. Deep residual Fourier transformation for single image deblurring[EB/OL]. (2022-11-29)[2023-09-01]. https://arxiv.org/abs/2111.11745.

[26] BRIGHAM E O, MORROW R E. The fast Fourier transform[J]. IEEE Spectrum, 1967, 4(12): 63-70. doi: 10.1109/MSPEC.1967.5217220

[27] CAO J M, LI Y Y, SUN M C, et al. DO-Conv: depthwise over-parameterized convolutional layer[J]. IEEE Transactions on Image Processing, 2022, 31: 3726-3736. doi: 10.1109/TIP.2022.3175432

[28] JI S W, XU W, YANG M, et al. 3D convolutional neural networks for human action recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(1): 221-231. doi: 10.1109/TPAMI.2012.59

[29] NIU B, WEN W, REN W, et al. Single image super-resolution via a holistic attention network[C]//Proceedings of the 16th European Conference on Computer Vision. Berlin: Springer, 2020: 191-207.

[30] ZHANG R, ISOLA P, EFROS A A, et al. The unreasonable effectiveness of deep features as a perceptual metric[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 586-595.

[31] LEWIS J. FLIR releases machine learning thermal dataset for advanced driver assistance systems[J]. Vision Systems Design, 2018, 23(9): 1-10.

[32] ZHANG K, ZUO W M, ZHANG L. Learning a single convolutional super-resolution network for multiple degradations[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 3262-3271.

[33] ZHANG Y L, LI K P, LI K, et al. Image super-resolution using very deep residual channel attention networks[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018: 294-310.

[34] DAI T, CAI J, ZHANG Y, et al. Second-order attention network for single image super-resolution[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 11065-11074.

[35] FERSTL D, REINBACHER C, RANFTL R, et al. Image guided depth upsampling using anisotropic total generalized variation[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2013: 993-1000.

[36] BARRON J T, POOLE B. The fast bilateral solver[C]//Proceedings of the 14th European Conference on Computer Vision. Berlin: Springer, 2016: 617-632.

[37] KOPF J, COHEN M F, LISCHINSKI D, et al. Joint bilateral upsampling[J]. ACM Transactions on Graphics, 2007, 26(99): 96. doi: 10.1145/1276377.1276497

[38] HAM B, CHO M, PONCE J. Robust image filtering using joint static and dynamic guidance[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2015: 4823-4831.

[39] HUI T W, LOY C C, TANG X O. Depth map super-resolution by deep multi-scale guidance[C]//Proceedings of the 14th European Conference on Computer Vision. Berlin: Springer, 2016: 353-369.

[40] DE LUTIO R, D’ARONCO S, WEGNER J D, et al. Guided super-resolution as pixel-to-pixel transformation[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 8828-8836.

[41] DENG X, DRAGOTTI P L. Deep coupled ISTA network for multi-modal image super-resolution[J]. IEEE Transactions on Image Processing, 2019, 29: 1683-1698.
