摘要:

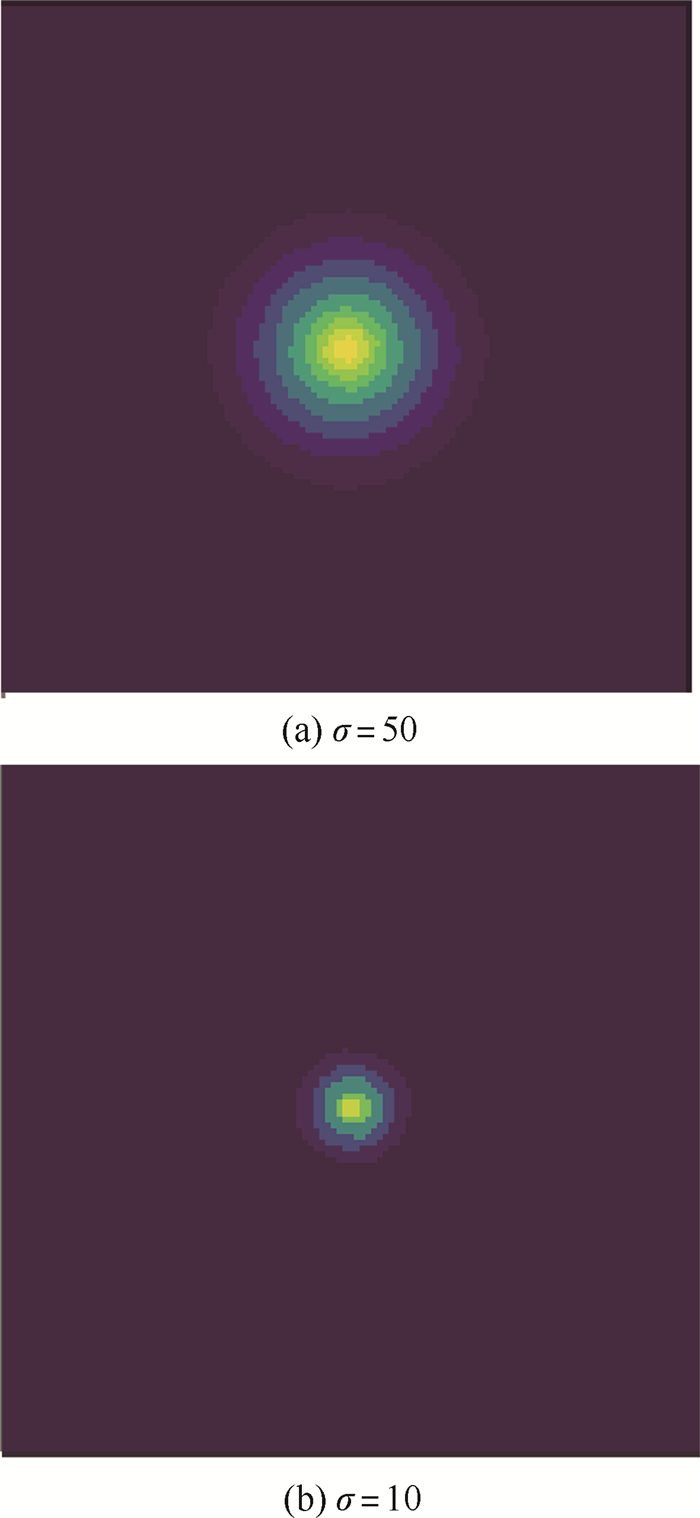

针对传统人群密度估计方法在鱼眼图像畸变下不适用的问题,提出了一个面向鱼眼图像的人群密度估计方法,实现了在鱼眼镜头场景下对人流量的监控。在模型结构方面,引入了可变形卷积,提高了模型对鱼眼畸变的适应能力。在生成目标数据方面,利用鱼眼图像的畸变特点,基于高斯变换,对人群标注转换的密度图进行符合鱼眼畸变的分布匹配。在训练方面,对损失函数的计算进行了优化,避免了模型在训练中陷入局部最优解的问题。由于鱼眼人群计数的数据集比较匮乏,采集并标注了相应的数据集。通过主客观实验与经典方法进行了对比,所提方法在测试集中的平均绝对误差达3.78,低于对比方法,证明了面向鱼眼图像的人群密度估计方法的优越性。

Abstract:Aiming at the problem that the traditional crowd density estimation methods are not applicable under the distortion of fisheye images, this paper presents a crowd density estimation method for fisheye images, which realizes the monitoring of human traffic in scene of using fisheye lens. For model structure, we introduced deformable convolution to improve the adaptability of the model to fisheye distortion. For generating the training targets, we used Gaussian transform to perform a distribution match on the density maps of annotations, which depends on the features of fisheye distortion. For training, we optimized the loss function to avoid the model from falling into local optimal solutions. In addition, we collected and labeled the corresponding dataset due to the lack of dataset for fisheye crowd estimation. At last, by comparing the subjective and objective experiments with classical algorithms, we proved the superiority of the crowd estimation method for fisheye images in this paper with the mean absolute error of 3.78 in the test dataset, which is lower than others.

Key words:

- fisheye image

- crowd estimation

- distortion processing

- distribution matching

- fisheye image dataset
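
As a rough illustration of the density-map targets mentioned in the abstract, the sketch below turns point annotations into a density map by placing one normalized Gaussian per annotated person, so the map sums to the crowd count. The abstract does not give the actual transform, so the radial rule used here (kernel width shrinking toward the image rim) is purely an assumption, as are the names `gaussian_density_map`, `base_sigma`, and `radial_gain`.

```python
import math


def gaussian_density_map(points, height, width, base_sigma=2.0, radial_gain=1.0):
    """Build a density map (list of rows) from (x, y) point annotations.

    Each annotation contributes a 2-D Gaussian normalized to sum to 1 over
    the image, so the whole map sums to the number of annotated people.
    Annotations are assumed to lie inside the image.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    max_r = math.hypot(cx, cy) or 1.0
    density = [[0.0] * width for _ in range(height)]

    for px, py in points:
        # Normalized distance from the image center: 0 at center, 1 at a corner.
        r = math.hypot(px - cx, py - cy) / max_r
        # ASSUMPTION (not from the paper): objects near the rim of a fisheye
        # image appear smaller, so the kernel is narrowed as r grows.
        sigma = base_sigma / (1.0 + radial_gain * r)

        weights = [
            [math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2.0 * sigma ** 2))
             for x in range(width)]
            for y in range(height)
        ]
        total = sum(sum(row) for row in weights)
        for y in range(height):
            for x in range(width):
                density[y][x] += weights[y][x] / total

    return density


def count_from_density(density):
    """Estimated crowd count is the integral (sum) of the density map."""
    return sum(sum(row) for row in density)
```

For example, `count_from_density(gaussian_density_map([(10, 10), (3, 25)], 32, 32))` is approximately 2, because every kernel is normalized before it is added to the map.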

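Table 1. Best results of different methods

| Method | MAE | Bias |
| --- | --- | --- |
| D2CNet | 6.18 | 0.44 |
| MCNN | 12.21 | 0.88 |
| CSRNet | 5.66 | 0.41 |
| Bayesian Loss | 3.96 | 0.29 |
| Proposed method | 3.78 | 0.27 |

Table 2. Ablation results

| Compared scheme | MAE | Bias |
| --- | --- | --- |
| Scheme 1 | 5.59 | 0.40 |
| Scheme 2 | 4.22 | 0.30 |
| Scheme 3 | 3.89 | 0.28 |
| Control group | 3.78 | 0.27 |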
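
The MAE column in the tables is the mean absolute error between predicted and ground-truth crowd counts. A minimal sketch of that metric follows; the function name and the sample counts are illustrative, not data from the paper. The Bias column is not defined in this excerpt, so it is not sketched here.

```python
def mean_absolute_error(predicted_counts, true_counts):
    """Average of |predicted - true| over all test images."""
    if len(predicted_counts) != len(true_counts):
        raise ValueError("count lists must have the same length")
    if not true_counts:
        raise ValueError("at least one image is required")
    total = sum(abs(p - t) for p, t in zip(predicted_counts, true_counts))
    return total / len(true_counts)


# Illustrative values only: per-image predicted counts vs. annotated counts.
example_mae = mean_absolute_error([10.5, 20.0, 7.0], [12, 18, 7])
```

In density-based counting, each predicted count would typically be the sum of the model's output density map for one image.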

[1] DAI J F, QI H Z, XIONG Y W, et al. Deformable convolutional networks[C]//2017 IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 764-773.

[2] XUE Z C, XUE N, XIA G S, et al. Learning to calibrate straight lines for fisheye image rectification[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Piscataway: IEEE Press, 2019: 1643-1651.

[3] LIAO K, LIN C, ZHAO Y. A deep ordinal distortion estimation approach for distortion rectification[J]. IEEE Transactions on Image Processing, 2021, 30: 3362-3375. doi: 10.1109/TIP.2021.3061283

[4] XU J, YANG H B, SONG Y, et al. A face recognition technology based on fisheye camera[J]. Information & Communications, 2018, 31(1): 131-132 (in Chinese). https://www.cnki.com.cn/Article/CJFDTOTAL-HBYD201801057.htm

[5] ZHANG Y Y, ZHOU D S, CHEN S Q, et al. Single-image crowd counting via multi-column convolutional neural network[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 589-597.

[6] LI Y H, ZHANG X F, CHEN D M. CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Piscataway: IEEE Press, 2018: 1091-1100.

[7] SIMONYAN K, ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[EB/OL]. (2015-04-10)[2021-09-01]. https://arxiv.org/abs/1409.1556.

[8] GUO D, LI K, ZHA Z J, et al. DADNet: Dilated-attention-deformable ConvNet for crowd counting[C]//Proceedings of the 27th ACM International Conference on Multimedia. New York: ACM, 2019: 1823-1832.

[9] ZHANG A R, SHEN J Y, XIAO Z H, et al. Relational attention network for crowd counting[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). Piscataway: IEEE Press, 2019: 6787-6796.

[10] WANG Q, BRECKON T P. Crowd counting via segmentation guided attention networks and curriculum loss[EB/OL]. (2020-08-03)[2021-09-01]. https://arxiv.org/abs/1911.07990.

[11] DAS S S S, RASHID S M M, ALI M E. CCCNet: An attention based deep learning framework for categorized crowd counting[EB/OL]. (2019-11-12)[2021-09-01]. https://avxiv.org/abs/1912.05765.

[12] ZHANG A R, YUE L, SHEN J Y, et al. Attentional neural fields for crowd counting[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). Piscataway: IEEE Press, 2019: 5713-5722.

[13] GAO J Y, HAN T, WANG Q, et al. Domain-adaptive crowd counting via inter-domain features segregation and Gaussian-prior reconstruction[EB/OL]. (2019-11-08)[2021-09-01]. https://arxiv.org/abs/1912.03677.

[14] WAN J, CHAN A. Adaptive density map generation for crowd counting[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). Piscataway: IEEE Press, 2019: 1130-1139.

[15] MA Z H, WEI X, HONG X P, et al. Bayesian loss for crowd count estimation with point supervision[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). Piscataway: IEEE Press, 2019: 6141-6150.

[16] CHENG J, XIONG H P, CAO Z G, et al. Decoupled two-stage crowd counting and beyond[J]. IEEE Transactions on Image Processing, 2021, 30: 2862-2875. doi: 10.1109/TIP.2021.3055631
