摘要:

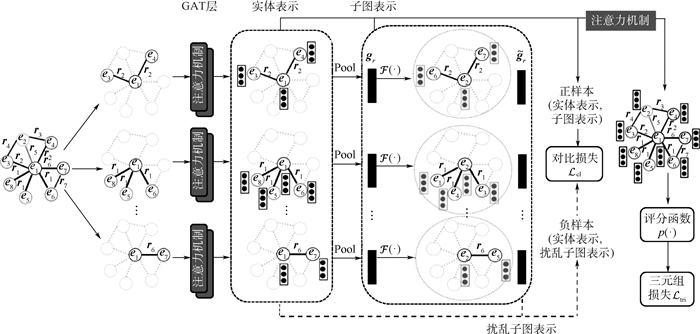

知识图谱(KG)补全旨在通过知识库中已知三元组来预测缺失的链接。由于大多数方法都是独立地处理三元组,而忽略了知识图谱所具有的异质结构和相邻节点中固有的丰富的信息,导致不能充分挖掘三元组的特征。考虑基于端到端的知识图谱补全任务,提出了一种图对比注意力网络(GCAT),通过注意力机制同时捕获局部邻域内实体和关系的特征,并封装实体邻域上下文信息。为了有效封装三元组特征,引入一个子图级别的对比训练对象用于增强生成的实体嵌入的质量。为了验证GCAT的有效性,在链接预测任务上评估了所提方法,实验结果表明,在数据集FB15k-237中,MRR比InteractE提高0.005,比A2N模型提高0.042;在数据集WN18RR中,MRR比InteractE提高0.019,比A2N模型提高0.032。实验证明提出的GCAT模型能够有效预测知识图谱中缺失的链接。

Abstract:Knowledge graph (KG) completion aims to predict missing links based on the known triples in a knowledge base. Since most KG completion methods dealt with triples independently without capture the heterogeneous structure of KG and the rich information that was inherent the in neighbor nodes, which resulted in incomplete mining of triple features. This study revisits the end-to-end KG completion task, and proposes a novel graph contrastive attention network (GCAT), which can capture latent representations of entities and relations simultaneously through attention mechanism, and encapsulate more neighborhood context information from the entity. Specifically, to effectively encapsulate the features of triples, a subgraph-level contrastive training object is introduced, enhancing the quality of generated entity representation. To justify the effectiveness of GCAT, the proposed model is evaluated on link prediction tasks. Experimental results show that on the dataset FB15k-237, MRR of the model is 0.005 and 0.042 higher than that of InteractE and A2N, respectively, and that on the dataset WN18RR, MRR is 0.019 and 0.032 higher than that of InteractE and A2N, respectively. Experiments prove that the proposed model can effectively predict the missing links in KGs.
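The abstract does not spell out the form of the subgraph-level contrastive objective. As a rough illustration only, the sketch below uses an InfoNCE-style loss, one common choice in graph contrastive learning, and it should not be read as the authors' formulation. The function name `info_nce_loss`, the `temperature` value, and the random toy embeddings are all assumptions made for this example: each entity embedding is pulled toward the summary of its own subgraph, while the other subgraph summaries in the batch act as negatives.

```python
import numpy as np


def info_nce_loss(entity_emb: np.ndarray, subgraph_emb: np.ndarray,
                  temperature: float = 0.5) -> float:
    """InfoNCE loss: row i of entity_emb should match row i of subgraph_emb.

    Illustrative assumption, not the loss defined in the paper.
    """
    # L2-normalise so that dot products are cosine similarities.
    e = entity_emb / np.linalg.norm(entity_emb, axis=1, keepdims=True)
    s = subgraph_emb / np.linalg.norm(subgraph_emb, axis=1, keepdims=True)
    logits = e @ s.T / temperature                 # (n, n) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal; all other subgraphs act as negatives.
    return float(-np.mean(np.diag(log_prob)))


# Toy data (hypothetical): 4 entities with 8-dimensional embeddings.
rng = np.random.default_rng(0)
entities = rng.normal(size=(4, 8))
aligned = entities + 0.01 * rng.normal(size=(4, 8))  # matching subgraph summaries
shuffled = aligned[[1, 2, 3, 0]]                     # deliberately mismatched

loss_aligned = info_nce_loss(entities, aligned)
loss_shuffled = info_nce_loss(entities, shuffled)
print(f"aligned: {loss_aligned:.3f}  shuffled: {loss_shuffled:.3f}")
```

The loss is lower when each entity is paired with its own subgraph summary than when the pairing is shuffled, which is the behaviour a contrastive objective of this kind is meant to reward.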

Table 1. Statistics of datasets

| Dataset | Entities (Ne) | Relations (Nr) | Train | Valid | Test |
|---|---|---|---|---|---|
| FB15k-237 | 14 541 | 237 | 272 115 | 17 535 | 20 466 |
| WN18RR | 40 943 | 11 | 86 835 | 3 034 | 3 134 |

Table 2. Experimental results on FB15k-237 dataset

| Model | MRR | Hit@1 | Hit@3 | Hit@10 |
|---|---|---|---|---|
| TransE[3] | 0.294 | - | - | 0.465 |
| DistMult[4] | 0.241 | 0.155 | 0.263 | 0.419 |
| ComplEx[5] | 0.247 | 0.158 | 0.276 | 0.428 |
| ConvE[6] | 0.325 | 0.237 | 0.356 | 0.501 |
| RotatE[21] | 0.338 | 0.241 | 0.375 | 0.533 |
| R-GCN[10] | 0.248 | - | - | 0.417 |
| HypER[22] | 0.341 | 0.252 | 0.376 | 0.520 |
| TuckER[23] | 0.358 | 0.266 | 0.394 | 0.544 |
| A2N[11] | 0.317 | 0.232 | 0.348 | 0.486 |
| CompGCN[12] | 0.355 | 0.264 | 0.390 | 0.535 |
| InteractE[7] | 0.354 | 0.263 | - | 0.535 |
| GCAT | 0.359 | 0.269 | 0.395 | 0.540 |

Table 3. Experimental results on WN18RR dataset

| Model | MRR | Hit@1 | Hit@3 | Hit@10 |
|---|---|---|---|---|
| TransE[3] | 0.226 | - | - | 0.501 |
| DistMult[4] | 0.430 | 0.390 | 0.440 | 0.490 |
| ComplEx[5] | 0.440 | 0.410 | 0.460 | 0.510 |
| ConvE[6] | 0.430 | 0.400 | 0.440 | 0.520 |
| RotatE[21] | 0.476 | 0.428 | 0.492 | 0.571 |
| R-GCN[10] | - | - | 0.137 | - |
| HypER[22] | 0.465 | 0.436 | 0.477 | 0.522 |
| TuckER[23] | 0.470 | 0.443 | 0.482 | 0.526 |
| A2N[11] | 0.450 | 0.420 | 0.460 | 0.510 |
| CompGCN[12] | 0.479 | 0.443 | 0.494 | 0.546 |
| InteractE[7] | 0.463 | 0.430 | - | 0.528 |
| GCAT | 0.482 | 0.447 | 0.495 | 0.546 |

Table 4. Experimental results of GCAT and its variant on FB15k-237 dataset

| Method | MRR | Hit@1 | Hit@3 | Hit@10 |
|---|---|---|---|---|
| GCAT-wo | 0.357 | 0.266 | 0.392 | 0.540 |
| GCAT | 0.359 | 0.269 | 0.395 | 0.540 |

Table 5. Experimental results of GCAT and its variant on WN18RR dataset

| Method | MRR | Hit@1 | Hit@3 | Hit@10 |
|---|---|---|---|---|
| GCAT-wo | 0.475 | 0.441 | 0.489 | 0.541 |
| GCAT | 0.482 | 0.447 | 0.495 | 0.546 |
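The MRR and Hit@k columns in Tables 2 to 5 are standard ranking metrics for link prediction. As a minimal sketch of how they are computed from the rank of the correct entity for each test triple, consider the following; the function names and the five example ranks are hypothetical and are not taken from the paper's evaluation code.

```python
from typing import Sequence


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1/rank over all test queries (ranks are 1-based)."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Fraction of queries whose correct entity is ranked in the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Hypothetical ranks of the correct entity for five test triples.
example_ranks = [1, 2, 4, 10, 50]

mrr = mean_reciprocal_rank(example_ranks)
hit1 = hits_at_k(example_ranks, 1)
hit3 = hits_at_k(example_ranks, 3)
hit10 = hits_at_k(example_ranks, 10)
print(f"MRR={mrr:.3f} Hit@1={hit1:.1f} Hit@3={hit3:.1f} Hit@10={hit10:.1f}")
# prints: MRR=0.374 Hit@1=0.2 Hit@3=0.4 Hit@10=0.8
```

Both metrics lie in (0, 1], and higher is better. MRR rewards placing the correct entity near the top of the ranking, while Hit@k only checks whether it appears among the first k candidates.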

[1] BOLLACKER K, EVANS C, PARITOSH P, et al. Freebase: A collaboratively created graph database for structuring human knowledge[C]//Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data. New York: ACM, 2008: 1247-1250.

[2] LEHMANN J, ISELE R, JAKOB M, et al. DBpedia-A large-scale, multilingual knowledge base extracted from Wikipedia[C]//The Semantic Web, 2015: 167-195.

[3] BORDES A, USUNIER N, GARCIA-DURÁN A, et al. Translating embeddings for modeling multi-relational data[C]//Proceedings of the 26th International Conference on Neural Information Processing Systems. New York: ACM, 2013: 2787-2795.

[4] YANG B, YIH W, HE X, et al. Embedding entities and relations for learning and inference in knowledge bases[C]//Proceedings of the International Conference on Learning Representations (ICLR), 2015: 1-12.

[5] TROUILLON T, WELBL J, RIEDEL S, et al. Complex embeddings for simple link prediction[C]//Proceedings of the 33rd International Conference on Machine Learning (ICML), 2016: 2071-2080.

[6] DETTMERS T, MINERVINI P, STENETORP P, et al. Convolutional 2D knowledge graph embeddings[C]//Proceedings of the AAAI Conference on Artificial Intelligence, 2018, 32: 1811-1818.

[7] VASHISHTH S, SANYAL S, NITIN V, et al. InteractE: Improving convolution-based knowledge graph embeddings by increasing feature interactions[C]//Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(3): 3009-3016.

[8] JIANG X T, WANG Q, WANG B. Adaptive convolution for multi-relational learning[C]//Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2019: 978-987.

[9] NGUYEN D Q, NGUYEN T D, NGUYEN D Q, et al. A novel embedding model for knowledge base completion based on convolutional neural network[C]//Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2018: 327-333.

[10] SCHLICHTKRULL M, KIPF T N, BLOEM P, et al. Modeling relational data with graph convolutional networks[C]//The Semantic Web, 2018: 593-607.

[11] BANSAL T, JUAN D C, RAVI S, et al. A2N: Attending to neighbors for knowledge graph inference[C]//Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, 2019: 4387-4392.

[12] VASHISHTH S, SANYAL S, NITIN V, et al. Composition-based multi-relational graph convolutional networks[C]//Proceedings of the International Conference on Learning Representations (ICLR), 2020: 1-15.

[13] VELICKOVIC P, CUCURULL G, CASANOVA A, et al. Graph attention networks[C]//Proceedings of the International Conference on Learning Representations (ICLR), 2018: 1-12.

[14] JAISWAL A, BABU A R, ZADEH M Z, et al. A survey on contrastive self-supervised learning[J]. Technologies, 2020, 9(1): 2.

[15] JIAO Y Z, XIONG Y, ZHANG J W, et al. Sub-graph contrast for scalable self-supervised graph representation learning[C]//IEEE International Conference on Data Mining. Piscataway: IEEE Press, 2020: 222-231.

[16] SUN F Y, HOFFMAN J, VERMA V, et al. InfoGraph: Unsupervised and semi-supervised graph-level representation learning via mutual information maximization[C]//Proceedings of the International Conference on Learning Representations (ICLR), 2020: 1-16.

[17] YOU Y N, CHEN T L, SUI Y D, et al. Graph contrastive learning with augmentations[C]//Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS), 2020.

[18] VELICKOVIC P, FEDUS W, HAMILTON W L, et al. Deep graph infomax[C]//Proceedings of the International Conference on Learning Representations (ICLR), 2019: 1-17.

[19] PENG Z, HUANG W B, LUO M N, et al. Graph representation learning via graphical mutual information maximization[C]//Proceedings of the Web Conference 2020. New York: ACM, 2020: 259-270.

[20] TOUTANOVA K, CHEN D Q, PANTEL P, et al. Representing text for joint embedding of text and knowledge bases[C]//Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, 2015: 1499-1509.

[21] ZHOU X H, YI Y H, JIA G. Path-RotatE: Knowledge graph embedding by relational rotation of path in complex space[C]//2021 IEEE/CIC International Conference on Communications in China (ICCC). Piscataway: IEEE Press, 2021: 905-910.

[22] BALAZEVIC I, ALLEN C, HOSPEDALES T. Hypernetwork knowledge graph embeddings[C]//Proceedings of the 28th International Conference on Artificial Neural Networks (ICANN), 2019: 553-565.

[23] BALAZEVIC I, ALLEN C, HOSPEDALES T. TuckER: Tensor factorization for knowledge graph completion[C]//Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 2019: 5185-5194.

[24] SUN Z Q, VASHISHTH S, SANYAL S, et al. A re-evaluation of knowledge graph completion methods[C]//Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 2020: 5516-5522.
