-

摘要:

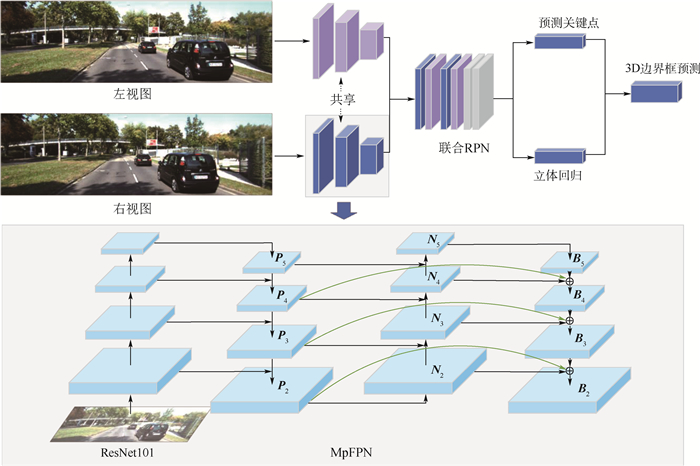

3D目标检测是计算机视觉和自动驾驶中一项重要的场景理解任务。当前基于立体图像的3D目标检测方法大多没有充分考虑多个目标之间的尺度存在较大差异,从而尺度小的物体容易被忽略,导致检测精度低。针对这一问题,提出了一种基于立体图像的多路径特征金字塔网络(MpFPN)3D目标检测方法。MpFPN对特征金字塔网络进行了扩展,增加了自底向上的路径、由上至下的路径及输入特征图到输出特征图之间的连接,为联合区域提议网络提供了更高语义信息和更细粒度空间信息的多尺度特征信息。实验结果表明:在3D目标检测KITTI数据集上,无论在场景简单、中等、复杂情况下,所提方法获得的结果都优于比较方法的结果。

-

关键词:

- 3D目标检测 /

- 特征金字塔网络(FPN) /

- 立体图像 /

- 多尺度 /

- 深度学习

Abstract:3D object detection is an important scene understanding task in computer vision and autonomous driving. However, most of these methods do not fully consider the large differences in scales between multiple objects. Thus, objects with a small scale are easily ignored, resulting in low detection accuracy. To address this problem, this paper proposes a 3D object detection method based on multi-path feature pyramid network (MpFPN) for stereo images. MpFPN extends feature pyramid network, adding a bottom-up path, top-down path, and connections between input and output features. It provides multi-scale feature information with higher semantic information and finer-grained spatial information for union region proposal network. Experimental results show that the proposed method achieves better results than comparative methods in easy, moderate and hard scenarios on the 3D object detection dataset KITTI.

Key words:

- 3D object detection
- feature pyramid network (FPN)
- stereo image
- multi-scale
- deep learning
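The multi-path fusion described in the abstract can be sketched roughly as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the function names (`upsample2x`, `downsample2x`, `multi_path_fusion`), the order of the two paths, the use of element-wise addition, and the omission of all convolutions are assumptions made only to show how a top-down path, a bottom-up path, and input-to-output connections can be combined.

```python
import numpy as np

def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def downsample2x(x):
    """2x2 average pooling of a (C, H, W) feature map with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def multi_path_fusion(inputs):
    """Fuse pyramid levels ordered fine -> coarse (each level halves H and W).

    Assumption: all levels already share the same channel count, so the
    lateral and smoothing convolutions of a real FPN are left out.
    """
    # Top-down path: carry semantic information from coarse to fine levels.
    top_down = [None] * len(inputs)
    top_down[-1] = inputs[-1]
    for i in range(len(inputs) - 2, -1, -1):
        top_down[i] = inputs[i] + upsample2x(top_down[i + 1])

    # Bottom-up path: carry fine spatial detail back to the coarse levels.
    bottom_up = [top_down[0]]
    for i in range(1, len(inputs)):
        bottom_up.append(top_down[i] + downsample2x(bottom_up[i - 1]))

    # Input-to-output connections: add each input map to its output map.
    return [out + inp for out, inp in zip(bottom_up, inputs)]

# Hypothetical three-level pyramid with 4 channels per level.
pyramid = [np.ones((4, 16, 16)), np.ones((4, 8, 8)), np.ones((4, 4, 4))]
outputs = multi_path_fusion(pyramid)
print([o.shape for o in outputs])
```

Each output level keeps the resolution of its input level, so the fused maps could be passed to a region proposal stage in place of plain FPN outputs.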


Table 1. APbev/AP3D (%) of the car category on the KITTI validation set

| Method | Input | Easy (IoU=0.5) | Moderate (IoU=0.5) | Hard (IoU=0.5) | Easy (IoU=0.7) | Moderate (IoU=0.7) | Hard (IoU=0.7) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| MonoGRNet[24] | M | 54.21/50.51 | 39.69/36.97 | 33.06/30.82 | 24.97/13.88 | 19.44/10.19 | 16.30/7.62 |
| M3D-RPN[5] | M | 55.37/48.96 | 42.49/39.57 | 35.29/33.01 | 25.94/20.27 | 21.18/17.06 | 17.90/15.21 |
| AM3D[25] | M | 72.64/68.86 | 51.82/49.19 | 44.21/42.24 | 43.75/32.23 | 28.39/21.09 | 23.87/17.26 |
| 3DOP[9] | S | 55.04/46.04 | 41.25/34.63 | 34.55/30.09 | 12.63/6.55 | 9.49/5.07 | 7.59/4.10 |
| TLNet[26] | S | 62.46/59.51 | 45.99/43.71 | 41.92/37.99 | 29.22/18.15 | 21.88/14.26 | 18.83/13.72 |
| Stereo R-CNN[10] | S | 87.13/85.84 | 74.11/66.28 | 58.93/57.24 | 68.50/54.11 | 48.30/36.69 | 41.47/31.07 |
| Proposed method | S | 87.62/86.49 | 75.04/72.62 | 59.31/58.04 | 69.44/55.26 | 49.36/37.94 | 42.11/32.38 |

Note: S denotes a stereo image pair as input; M denotes a monocular image as input. The value before "/" is APbev and the value after "/" is AP3D.

Table 2. APbev and AP3D (%) of the car category on the KITTI validation set for the proposed method and the Pseudo-LiDAR[11] method (IoU=0.7)

| Method | APbev Easy | APbev Moderate | APbev Hard | AP3D Easy | AP3D Moderate | AP3D Hard |
| --- | --- | --- | --- | --- | --- | --- |
| Proposed method | 69.44 | 49.36 | 42.11 | 55.26 | 37.94 | 32.38 |
| PL+FP[11] | 69.7 | 48.1 | 41.8 | 54.9 | 36.4 | 31.1 |

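Table 3. Ablation experiment of the MpFPN approach on the KITTI dataset (%, IoU=0.7)

| Path | Conn | APbev Easy | APbev Moderate | APbev Hard | AP3D Easy | AP3D Moderate | AP3D Hard |
| --- | --- | --- | --- | --- | --- | --- | --- |
| × | × | 65.92 | 46.11 | 40.00 | 52.25 | 34.69 | 30.27 |
| √ | × | 68.01 | 48.15 | 41.21 | 54.78 | 36.88 | 31.42 |
| √ | √ | 69.44 | 49.36 | 42.11 | 55.26 | 37.94 | 32.38 |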
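The contribution of each component in Table 3 can be read off by subtracting rows. The short sketch below does that arithmetic with the values copied from the table; the dictionary keys and the helper name `gains` are illustrative labels, and reading "Path" as the added paths and "Conn" as the input-to-output connections is an interpretation of the column headers rather than something the table states.

```python
# AP values (%) copied from Table 3 at IoU=0.7, ordered as
# [APbev easy, APbev moderate, APbev hard, AP3D easy, AP3D moderate, AP3D hard].
ABLATION = {
    "baseline": [65.92, 46.11, 40.00, 52.25, 34.69, 30.27],   # Path x, Conn x
    "path": [68.01, 48.15, 41.21, 54.78, 36.88, 31.42],       # Path on, Conn x
    "path+conn": [69.44, 49.36, 42.11, 55.26, 37.94, 32.38],  # Path on, Conn on
}

def gains(after, before):
    """Per-column AP difference in percentage points, rounded to 2 decimals."""
    return [round(a - b, 2) for a, b in zip(after, before)]

path_gain = gains(ABLATION["path"], ABLATION["baseline"])
conn_gain = gains(ABLATION["path+conn"], ABLATION["path"])
total_gain = gains(ABLATION["path+conn"], ABLATION["baseline"])

print("Path:", path_gain)
print("Conn:", conn_gain)
print("Total:", total_gain)
```

In these numbers, enabling Path gives the larger share of the improvement in every column, and enabling Conn on top of it adds a further 0.48 to 1.43 points.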

[1] WANG Z, JIA K. Frustum ConvNet: Sliding frustums to aggregate local point-wise features for amodal 3D object detection[C]//Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Piscataway: IEEE Press, 2019: 1742-1749.

[2] SHI S, WANG X, LI H. PointRCNN: 3D object proposal generation and detection from point cloud[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 770-779.

[3] QI C R, LITANY O, HE K, et al. Deep Hough voting for 3D object detection in point clouds[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 9277-9286.

[4] SHI S, GUO C, JIANG L, et al. PV-RCNN: Point-voxel feature set abstraction for 3D object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 10529-10538.

[5] BRAZIL G, LIU X. M3D-RPN: Monocular 3D region proposal network for object detection[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 9287-9296.

[6] KU J, PON A D, WASLANDER S L. Monocular 3D object detection leveraging accurate proposals and shape reconstruction[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 11867-11876.

[7] CHEN Y, TAI L, SUN K, et al. MonoPair: Monocular 3D object detection using pairwise spatial relationships[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 12093-12102.

[8] LIU L, WU C, LU J, et al. Reinforced axial refinement network for monocular 3D object detection[C]//European Conference on Computer Vision. Berlin: Springer, 2020: 540-556.

[9] CHEN X, KUNDU K, ZHU Y, et al. 3D object proposals using stereo imagery for accurate object class detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 40(5): 1259-1272.

[10] LI P, CHEN X, SHEN S. Stereo R-CNN based 3D object detection for autonomous driving[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 7644-7652.

[11] WANG Y, CHAO W L, GARG D, et al. Pseudo-LiDAR from visual depth estimation: Bridging the gap in 3D object detection for autonomous driving[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 8445-8453.

[12] SUN J, CHEN L, XIE Y, et al. Disp R-CNN: Stereo 3D object detection via shape prior guided instance disparity estimation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 10548-10557.

[13] GHIASI G, LIN T Y, LE Q V. NAS-FPN: Learning scalable feature pyramid architecture for object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 7036-7045.

[14] ZHAO Q, SHENG T, WANG Y, et al. M2Det: A single-shot object detector based on multi-level feature pyramid network[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI, 2019: 9259-9266.

[15] TAN M, PANG R, LE Q V. EfficientDet: Scalable and efficient object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 10781-10790.

[16] CAO S, ZHANG X W, MA J W. Transscale feature aggregation network for multiscale pedestrian detection[J]. Journal of Beijing University of Aeronautics and Astronautics, 2020, 46(9): 1786-1796 (in Chinese). doi: 10.13700/j.bh.1001-5965.2020.0069

[17] LI X G, FU C P, LI X L, et al. Improved Faster R-CNN for multi-scale object detection[J]. Journal of Computer-Aided Design & Computer Graphics, 2019, 31(7): 1095-1101 (in Chinese). https://www.cnki.com.cn/Article/CJFDTOTAL-JSJF201907005.htm

[18] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 2117-2125.

[19] LIU S, QI L, QIN H, et al. Path aggregation network for instance segmentation[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 8759-8768.

[20] HE K, ZHANG X, REN S, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 770-778.

[21] HE K, GKIOXARI G, DOLLÁR P, et al. Mask R-CNN[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 2961-2969.

[22] DENG J, DONG W, SOCHER R, et al. ImageNet: A large-scale hierarchical image database[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2009: 248-255.

[23] GEIGER A, LENZ P, URTASUN R. Are we ready for autonomous driving? The KITTI vision benchmark suite[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2012: 3354-3361.

[24] QIN Z, WANG J, LU Y. MonoGRNet: A geometric reasoning network for monocular 3D object localization[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI, 2019: 8851-8858.

[25] MA X, WANG Z, LI H, et al. Accurate monocular 3D object detection via color-embedded 3D reconstruction for autonomous driving[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 6851-6860.

[26] QIN Z, WANG J, LU Y. Triangulation learning network: From monocular to stereo 3D object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 7615-7623.

[27] CHANG J R, CHEN Y S. Pyramid stereo matching network[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 5410-5418.

[28] KU J, MOZIFIAN M, LEE J, et al. Joint 3D proposal generation and object detection from view aggregation[C]//Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Piscataway: IEEE Press, 2018: 1-8.
